4878:

112:

6278:. Similar to reinforcement learning, a learning automata algorithm also has the advantage of solving the problem when probability or rewards are unknown. The difference between learning automata and Q-learning is that the former technique omits the memory of Q-values, but updates the action probability directly to find the learning result. Learning automata is a learning scheme with a rigorous proof of convergence.

1988:, explicitly. In such cases, a simulator can be used to model the MDP implicitly by providing samples from the transition distributions. One common form of implicit MDP model is an episodic environment simulator that can be started from an initial state and yields a subsequent state and reward every time it receives an action input. In this manner, trajectories of states, actions, and rewards, often called

4511:

2201:

4032:. Under this assumption, although the decision maker can make a decision at any time in the current state, there is no benefit in taking multiple actions. It is better to take an action only at the time when system is transitioning from the current state to another state. Under some conditions, if our optimal value function

4873:{\displaystyle {\begin{aligned}{\text{Maximize}}&\sum _{i\in S}\sum _{a\in A(i)}R(i,a)y(i,a)\\{\text{s.t.}}&\sum _{i\in S}\sum _{a\in A(i)}q(j\mid i,a)y(i,a)=0\quad \forall j\in S,\\&\sum _{i\in S}\sum _{a\in A(i)}y(i,a)=1,\\&y(i,a)\geq 0\qquad \forall a\in A(i){\text{ and }}\forall i\in S\end{aligned}}}

4498:

5551:

5888:

Reinforcement learning can solve Markov-Decision processes without explicit specification of the transition probabilities which are instead needed to perform policy iteration. In this setting, transition probabilities and rewards must be learned from experience, i.e. by letting an agent interact with

2175:

These model classes form a hierarchy of information content: an explicit model trivially yields a generative model through sampling from the distributions, and repeated application of a generative model yields an episodic simulator. In the opposite direction, it is only possible to learn approximate

76:. Reinforcement learning utilizes the MDP framework to model the interaction between a learning agent and its environment. In this framework, the interaction is characterized by states, actions, and rewards. The MDP framework is designed to provide a simplified representation of key elements of

2984:

Their order depends on the variant of the algorithm; one can also do them for all states at once or state by state, and more often to some states than others. As long as no state is permanently excluded from either of the steps, the algorithm will eventually arrive at the correct solution.

2979:

2653:

The algorithm has two steps, (1) a value update and (2) a policy update, which are repeated in some order for all the states until no further changes take place. Both recursively update a new estimation of the optimal policy and state value using an older estimation of those values.

5184:

3334:

2813:

4023:

If the state space and action space are finite, we could use linear programming to find the optimal policy, which was one of the earliest approaches applied. Here we only consider the ergodic model, which means our continuous-time MDP becomes an

4212:

4340:

603:

3684:

can find useful solutions in larger problems, and, in theory, it is possible to construct online planning algorithms that can find an arbitrarily near-optimal policy with no computational complexity dependence on the size of the state space.

2528:. The algorithms in this section apply to MDPs with finite state and action spaces and explicitly given transition probabilities and reward functions, but the basic concepts may be extended to handle other problem classes, for example using

6092:

3680:, the size of the problem representation is often exponential in the number of state and action variables, limiting exact solution techniques to problems that have a compact representation. In practice, online planning techniques such as

5284:

6658:

The terminology and notation for MDPs are not entirely settled. There are two main streams — one focuses on maximization problems from contexts like economics, using the terms action, reward, value, and calling the discount factor

3814:, decisions can be made at any time the decision maker chooses. In comparison to discrete-time Markov decision processes, continuous-time Markov decision processes can better model the decision-making process for a system that has

5797:

5889:

the MDP for a given number of steps. Both on a theoretical and on a practical level, effort is put in maximizing the sample efficiency, i.e. minimimizing the number of samples needed to learn a policy whose performance is

2313:

3521:, step one is performed once, and then step two is performed once, then both are repeated until policy converges. Then step one is again performed once and so on. (Policy iteration was invented by Howard to optimize

758:

103:. The process is called a "decision process" because it involves making decisions that influence these state transitions, extending the concept of a Markov chain into the realm of decision-making under uncertainty.

6620:

5000:

3837:

which could maximize the expected cumulated reward. The only difference with the standard case stays in the fact that, due to the continuous nature of the time variable, the sum is replaced by an integral:

2821:

1862:

1383:

5289:

5005:

4516:

4345:

3979:

6546:

1933:. A particular MDP may have multiple distinct optimal policies. Because of the Markov property, it can be shown that the optimal policy is a function of the current state, as assumed above.

793:

3156:

2172:

are the new state and reward. Compared to an episodic simulator, a generative model has the advantage that it can yield data from any state, not only those encountered in a trajectory.

1453:

2085:

6972:

4014:

634:

1774:

1295:

178:

7019:

6926:

6827:

2468:

2419:

1986:

846:

339:

6873:

1519:

6648:

6485:

4493:{\displaystyle {\begin{aligned}{\text{Minimize}}\quad &g\\{\text{s.t}}\quad &g-\sum _{j\in S}q(j\mid i,a)h(j)\geq R(i,a)\,\,\forall i\in S,\,a\in A(i)\end{aligned}}}

4327:

4271:

5910:

4085:

1591:

1412:

5264:

4992:

5669:

5582:

3557:

2370:

1931:

6457:

6217:

6152:

3758:

3447:

3119:

3061:

2513:

2150:

1105:

1032:

891:

424:

5222:

4950:

4915:

1725:

1246:

6127:

5859:

5546:{\displaystyle {\begin{aligned}V(s(0),0)={}&\max _{a(t)=\pi (s(t))}\int _{0}^{T}r(s(t),a(t))\,dt+D\\{\text{s.t.}}\quad &{\frac {dx(t)}{dt}}=f\end{aligned}}}

4057:

3410:

1891:

270:

5829:

5640:

5611:

3651:

3584:

3148:

3090:

3032:

2628:

2599:

2579:

1128:

1003:

935:

3383:

2660:

2003:, a single step simulator that can generate samples of the next state and reward given any state and action. (Note that this is a different meaning from the term

450:

3653:

around those states recently) or based on use (those states are near the starting state, or otherwise of interest to the person or program using the algorithm).

1565:

6237:

6192:

6172:

5938:

5912:

close to the optimal one (due to the stochastic nature of the process, learning the optimal policy with a finite number of samples is, in general, impossible).

4291:

4235:

4077:

3768:

Constrained Markov decision processes (CMDPS) are extensions to Markov decision process (MDPs). There are three fundamental differences between MDPs and CMDPs.

3729:

3631:

3487:

3467:

3357:

2648:

2556:

2488:

2335:

2236:

2170:

2125:

2105:

2033:

1611:

1539:

1473:

1076:

1052:

975:

955:

911:

866:

399:

379:

359:

290:

237:

201:

6667:, while the other focuses on minimization problems from engineering and navigation, using the terms control, cost, cost-to-go, and calling the discount factor

3613:

In this variant, the steps are preferentially applied to states which are in some way important – whether based on the algorithm (there were large changes in

7048:

3706:

3528:

Instead of repeating step two to convergence, it may be formulated and solved as a set of linear equations. These equations are merely obtained by making

1054:. Once a Markov decision process is combined with a policy in this way, this fixes the action for each state and the resulting combination behaves like a

5677:

7885:

7064:

6559:

In this way, Markov decision processes could be generalized from monoids (categories with one object) to arbitrary categories. One can call the result

5275:

2180:. The type of model available for a particular MDP plays a significant role in determining which solution algorithms are appropriate. For example, the

455:

2241:

2535:

The standard family of algorithms to calculate optimal policies for finite state and action MDPs requires storage for two arrays indexed by state:

636:

measurable. In case the state space is discrete, the integral is intended with respect to the counting measure, so that the latter simplifies as

5946:

1130:

that will maximize some cumulative function of the random rewards, typically the expected discounted sum over a potentially infinite horizon:

7850:

7769:

7376:

7228:

7161:

7124:

6413:+ 1) and outputs the corresponding action. The automaton's environment, in turn, reads the action and sends the next input to the automaton.

5179:{\displaystyle {\begin{aligned}\sum _{i\in S}\sum _{a\in A(i)}R(i,a)y^{*}(i,a)\geq \sum _{i\in S}\sum _{a\in A(i)}R(i,a)y(i,a)\end{aligned}}}

3516:

3005:

6562:

7840:

6266:

theory is called learning automata. This is also one type of reinforcement learning if the environment is stochastic. The first detail

3760:

cannot be calculated. When this assumption is not true, the problem is called a partially observable Markov decision process or POMDP.

7479:

5885:

that has, as main objective, finding an approximately optimal policy for MDPs where transition probabilities and rewards are unknown.

3833:

Like the discrete-time Markov decision processes, in continuous-time Markov decision processes the agent aims at finding the optimal

639:

7920:

7863:

7829:

7808:

7728:

7285:

1541:). A lower discount factor motivates the decision maker to favor taking actions early, rather than postpone them indefinitely.

3819:

3605:), step one is performed once, and then step two is repeated several times. Then step one is again performed once and so on.

2974:{\displaystyle \pi (s):=\operatorname {argmax} _{a}\left\{\sum _{s'}P_{a}(s,s')\left(R_{a}(s,s')+\gamma V(s')\right)\right\}}

38:

5274:

In continuous-time MDP, if the state space and action space are continuous, the optimal criterion could be found by solving

115:

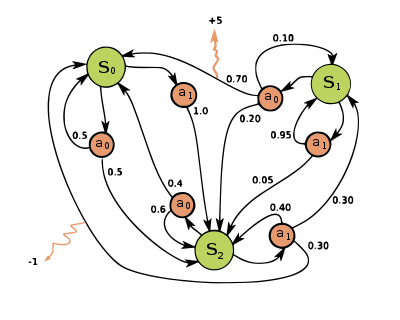

Example of a simple MDP with three states (green circles) and two actions (orange circles), with two rewards (orange arrows)

3560:

1779:

1300:

3844:

7944:

7119:. Wiley series in probability and mathematical statistics. Applied probability and statistics section. New York: Wiley.

3559:

in the step two equation. Thus, repeating step two to convergence can be interpreted as solving the linear equations by

6510:

7954:

7431:

van Nunen, J.A. E. E (1976). "A set of successive approximation methods for discounted

Markovian decision problems".

7404:

Puterman, M. L.; Shin, M. C. (1978). "Modified Policy

Iteration Algorithms for Discounted Markov Decision Problems".

2421:

is the transition of the system, which in this case is going to be deterministic and driven by the laws of mechanics.

7939:

5920:

For the purpose of this section, it is useful to define a further function, which corresponds to taking the action

3329:{\displaystyle V_{i+1}(s):=\max _{a}\left\{\sum _{s'}P_{a}(s,s')\left(R_{a}(s,s')+\gamma V_{i}(s')\right)\right\},}

42:

7949:

763:

7079:

2630:

will contain the discounted sum of the rewards to be earned (on average) by following that solution from state

1417:

7223:. Adaptive computation and machine learning series (2nd ed.). Cambridge, Massachusetts: The MIT Press.

7043:

3681:

3677:

2185:

77:

7819:

7146:

Papers presented at the

February 26-28, 1957, western joint computer conference: Techniques for reliability

3790:

The method of

Lagrange multipliers applies to CMDPs. Many Lagrangian-based algorithms have been developed.

2205:

7673:

7466:

7033:

5874:

5869:

2529:

73:

3987:

2524:

Solutions for MDPs with finite state and action spaces may be found through a variety of methods such as

6275:

3810:

In discrete-time Markov

Decision Processes, decisions are made at discrete time intervals. However, for

46:

3501:

included as a special case the value iteration method for MDPs, but this was recognized only later on.

1730:

1251:

125:

4207:{\displaystyle g\geq R(i,a)+\sum _{j\in S}q(j\mid i,a)h(j)\quad \forall i\in S{\text{ and }}a\in A(i)}

2038:

7317:

6931:

2188:

requires a generative model (or an episodic simulator that can be copied at any state), whereas most

1894:

1478:

7678:

6629:

6466:

4296:

4240:

985:

The goal in a Markov decision process is to find a good "policy" for the decision maker: a function

7712:

7665:

7074:

7053:

6352:

6271:

5892:

3780:

2525:

2181:

2177:

608:

214:

53:

5671:

shows how the state vector changes over time. The

Hamilton–Jacobi–Bellman equation is as follows:

7448:

7392:

7364:

7178:

6977:

6885:

6785:

6389:

The states of such an automaton correspond to the states of a "discrete-state discrete-parameter

3827:

3776:

3011:

2426:

2377:

1944:

1570:

1391:

804:

297:

69:

6834:

5227:

4955:

2490:

if the pole is up after the transition, zero otherwise. Therefore, this function only depend on

7474:

7148:. IRE-AIEE-ACM '57 (Western). New York, NY, USA: Association for Computing Machinery: 115–121.

5645:

5558:

3589:

Policy iteration is usually slower than value iteration for a large number of possible states.

848:

is the immediate reward (or expected immediate reward) received after transitioning from state

7916:

7856:

7846:

7825:

7804:

7765:

7724:

7691:

7372:

7345:

7281:

7224:

7198:

7157:

7120:

6257:

2340:

1909:

204:

96:

6424:

3734:

3419:

3095:

3037:

1081:

1008:

7683:

7618:

7536:

7524:

7496:

7488:

7440:

7413:

7335:

7325:

7257:

7190:

7149:

7084:

7058:

6500:

6263:

5878:

5192:

4920:

4885:

3670:

3666:

3586:

does not change in the course of applying step 1 to all states, the algorithm is completed.

3498:

3490:

2808:{\displaystyle V(s):=\sum _{s'}P_{\pi (s)}(s,s')\left(R_{\pi (s)}(s,s')+\gamma V(s')\right)}

2004:

1619:

1136:

796:

7899:

7612:

6100:

5837:

4035:

3566:

This variant has the advantage that there is a definite stopping condition: when the array

3388:

1870:

248:

7525:"A Sparse Sampling Algorithm for Near-Optimal Planning in Large Markov Decision Processes"

7470:

7246:"A Sparse Sampling Algorithm for Near-Optimal Planning in Large Markov Decision Processes"

7069:

6460:

5882:

5805:

5616:

5587:

3823:

3815:

3799:

3694:

3662:

3636:

3569:

3531:

3124:

3066:

3017:

2604:

2584:

2564:

1113:

988:

920:

100:

7867:

3362:

429:

7321:

6197:

6132:

2493:

2212:

An example of MDP is the Pole-Balancing model, which comes from classic control theory.

2130:

1893:

is the time horizon. Compared to the previous objective, the latter one is more used in

1547:

871:

404:

111:

7340:

7305:

7038:

6390:

6222:

6177:

6157:

5923:

5832:

4276:

4220:

4062:

3714:

3673:

3616:

3472:

3452:

3413:

3342:

2633:

2541:

2473:

2320:

2221:

2155:

2110:

2090:

2018:

1596:

1524:

1458:

1061:

1037:

960:

940:

896:

851:

384:

364:

344:

275:

222:

186:

84:, the management of uncertainty and nondeterminism, and the pursuit of explicit goals.

17:

7194:

1941:

In many cases, it is difficult to represent the transition probability distributions,

7933:

7717:

7594:

7301:

3494:

92:

7580:

Natural policy gradient primal-dual method for constrained Markov decision processes

4952:

is nonnative and satisfied the constraints in the D-LP problem. A feasible solution

7595:"Risk-aware path planning using hierarchical constrained Markov Decision Processes"

7452:

7094:

5940:

and then continuing optimally (or according to whatever policy one currently has):

5792:{\displaystyle 0=\max _{u}(r(t,s,a)+{\frac {\partial V(t,s)}{\partial x}}f(t,s,a))}

1055:

88:

56:

in the 1950s, MDPs have since gained recognition in a variety of fields, including

7637:

3665:

polynomial in the size of the problem representation exist for finite MDPs. Thus,

95:. The "Markov" in "Markov decision process" refers to the underlying structure of

7910:

7798:

7141:

6488:

598:{\displaystyle \Pr(s_{t+1}\in S'\mid s_{t}=s,a_{t}=a)=\int _{S'}P_{a}(s,s')ds',}

65:

7310:

Proceedings of the

National Academy of Sciences of the United States of America

2308:{\displaystyle (\theta ,{\dot {\theta }},x,{\dot {x}})\subset \mathbb {R} ^{4}}

1544:

Another possible, but strictly related, objective that is commonly used is the

7687:

7622:

7541:

7262:

7245:

7089:

6240:

4025:

3489:

converges with the left-hand side equal to the right-hand side (which is the "

2012:

2008:

7695:

7202:

7417:

7330:

7153:

6397:= 0,1,2,3,..., the automaton reads an input from its environment, updates P(

6097:

While this function is also unknown, experience during learning is based on

81:

61:

7349:

6087:{\displaystyle \ Q(s,a)=\sum _{s'}P_{a}(s,s')(R_{a}(s,s')+\gamma V(s')).\ }

5278:. In order to discuss the HJB equation, we need to reformulate our problem

3798:

There are a number of applications for CMDPs. It has recently been used in

2035:

is often used to represent a generative model. For example, the expression

7578:

Ding, Dongsheng; Zhang, Kaiqing; Jovanovic, Mihailo; Basar, Tamer (2020).

7492:

6504:

3772:

There are multiple costs incurred after applying an action instead of one.

2315:

given by pole agle, angular velocity, position of the cart and its speed.

3525:

catalogue mailing, which he had been optimizing using value iteration.)

2184:

algorithms described in the next section require an explicit model, and

7764:(Dover paperback ed.). Princeton, NJ: Princeton University Press.

7501:

7444:

1776:, i.e. actions given by the policy). And the expectation is taken over

1297:, i.e. actions given by the policy). And the expectation is taken over

57:

6409:, randomly chooses a successor state according to the probabilities P(

7393:"Comments on the Origin and Application of Markov Decision Processes"

6650:

changes the set of available actions and the set of possible states.

6274:

and

Thathachar (1974), which were originally described explicitly as

4029:

6615:{\displaystyle ({\mathcal {C}},F:{\mathcal {C}}\to \mathbf {Dist} )}

2372:, corresponding to applying a force on the left (right) on the cart.

2200:

2087:

might denote the action of sampling from the generative model where

6671:. In addition, the notation for the transition probability varies.

27:

Mathematical model for sequential decision making under uncertainty

7117:

Markov decision processes: discrete stochastic dynamic programming

3522:

2199:

120:

7363:

Kallenberg, Lodewijk (2002). "Finite state and action MDPs". In

452:. In general, this probability transition is defined to satisfy

7668:; Thathachar, M. A. L. (1974). "Learning Automata – A Survey".

7369:

Handbook of Markov decision processes: methods and applications

1613:

steps of the process, with each reward having the same weight.

753:{\displaystyle P_{a}(s,s')=\Pr(s_{t+1}=s'\mid s_{t}=s,a_{t}=a)}

7523:

Kearns, Michael; Mansour, Yishay; Ng, Andrew (November 2002).

2204:

Pole

Balancing example (rendering of the environment from the

6635:

6587:

6571:

6516:

6472:

6239:

and uses experience to update it directly. This is known as

5276:

Hamilton–Jacobi–Bellman (HJB) partial differential equation

3870:

1567:

step return. This time, instead of using a discount factor

937:

is a (potentially probabilistic) mapping from state space (

7824:. Stochastic Modelling and Applied Probability. Springer.

213:. The state space may be discrete or continuous, like the

80:

challenges. These elements encompass the understanding of

6882:

In addition, transition probability is sometimes written

7278:

Reinforcement

Learning: Theory and Python Implementation

5802:

We could solve the equation to find the optimal control

1900:

A policy that maximizes the function above is called an

292:). As for state, this set may be discrete or continuous.

7638:"Multi-agent reinforcement learning: a critical survey"

4329:, we could use the following linear programming model:

3092:

whenever it is needed. Substituting the calculation of

2581:, which contains actions. At the end of the algorithm,

795:, the integral is usually intended with respect to the

341:

is, on an intuitive level, the probability that action

7179:"Dynamic programming and stochastic control processes"

7244:

Kearns, Michael; Mansour, Yishay; Ng, Andrew (2002).

6980:

6934:

6888:

6837:

6788:

6632:

6565:

6513:

6469:

6427:

6385:: Φ → α which generates the output at each time step.

6225:

6200:

6180:

6160:

6135:

6103:

5949:

5926:

5895:

5840:

5808:

5680:

5648:

5619:

5590:

5561:

5287:

5230:

5224:

to the D-LP. Once we have found the optimal solution

5195:

5003:

4958:

4923:

4888:

4514:

4343:

4299:

4279:

4243:

4223:

4088:

4065:

4038:

3990:

3847:

3737:

3717:

3639:

3619:

3572:

3534:

3475:

3455:

3422:

3391:

3365:

3345:

3159:

3127:

3098:

3069:

3040:

3020:

2824:

2663:

2636:

2607:

2587:

2567:

2544:

2496:

2476:

2429:

2380:

2343:

2323:

2244:

2224:

2158:

2133:

2113:

2093:

2041:

2021:

1947:

1912:

1873:

1782:

1733:

1622:

1599:

1573:

1550:

1527:

1481:

1461:

1420:

1394:

1303:

1254:

1139:

1116:

1084:

1064:

1040:

1011:

991:

963:

943:

923:

899:

874:

854:

807:

766:

642:

611:

458:

432:

407:

387:

367:

347:

300:

278:

251:

225:

189:

128:

7582:. Advances in Neural Information Processing Systems.

1857:{\displaystyle s_{t+1}\sim P_{a_{t}}(s_{t},s_{t+1})}

1378:{\displaystyle s_{t+1}\sim P_{a_{t}}(s_{t},s_{t+1})}

7707:

7705:

7601:. IEEE International Conference. pp. 297, 303.

5266:, we can use it to establish the optimal policies.

3974:{\displaystyle \max \operatorname {E} _{\pi }\left}

3359:is the iteration number. Value iteration starts at

91:, a concept developed by the Russian mathematician

7743:

7716:

7670:IEEE Transactions on Systems, Man, and Cybernetics

7280:. Beijing: China Machine Press. 2019. p. 44.

7013:

6966:

6920:

6867:

6821:

6642:

6614:

6540:

6479:

6451:

6421:Other than the rewards, a Markov decision process

6231:

6211:

6186:

6166:

6146:

6121:

6086:

5932:

5904:

5853:

5823:

5791:

5663:

5634:

5605:

5576:

5545:

5270:Continuous space: Hamilton–Jacobi–Bellman equation

5258:

5216:

5178:

4986:

4944:

4909:

4872:

4492:

4321:

4285:

4265:

4229:

4206:

4071:

4051:

4008:

3973:

3752:

3723:

3645:

3625:

3578:

3551:

3481:

3461:

3441:

3404:

3377:

3351:

3328:

3142:

3113:

3084:

3055:

3026:

2973:

2807:

2642:

2622:

2593:

2573:

2550:

2507:

2482:

2462:

2413:

2364:

2329:

2307:

2230:

2164:

2144:

2119:

2099:

2079:

2027:

2007:in the context of statistical classification.) In

1980:

1925:

1885:

1856:

1768:

1719:

1605:

1585:

1559:

1533:

1513:

1467:

1447:

1406:

1377:

1289:

1240:

1122:

1099:

1070:

1046:

1034:that the decision maker will choose when in state

1026:

997:

969:

949:

929:

905:

885:

860:

840:

787:

752:

628:

597:

444:

418:

393:

373:

353:

333:

284:

264:

231:

195:

172:

6541:{\displaystyle {\mathcal {A}}\to \mathbf {Dist} }

4994:to the D-LP is said to be an optimal solution if

6935:

6889:

6378:), the current input, and the current state, and

5688:

5329:

4293:satisfying the above equation. In order to find

4028:continuous-time Markov chain under a stationary

3848:

3189:

676:

459:

6626:, because moving from one object to another in

3818:, i.e., the system dynamics is defined by

3786:The final policy depends on the starting state.

3731:is known when action is to be taken; otherwise

2192:algorithms require only an episodic simulator.

7887:Introduction to stochastic dynamic programming

4019:Discrete space: Linear programming formulation

41:or stochastic control problem, is a model for

7636:Shoham, Y.; Powers, R.; Grenager, T. (2003).

7475:"The Complexity of Markov Decision Processes"

7219:Sutton, Richard S.; Barto, Andrew G. (2018).

5642:is the system control vector we try to find.

3661:Algorithms for finding optimal policies with

8:

7593:Feyzabadi, S.; Carpin, S. (18–22 Aug 2014).

7049:Partially observable Markov decision process

3822:(ODEs). These kind of applications raise in

3707:Partially observable Markov decision process

3602:

3034:function is not used; instead, the value of

2359:

2344:

1593:, the agent is interested only in the first

7140:Schneider, S.; Wagner, D. H. (1957-02-26).

3794:Natural policy gradient primal-dual method.

3004:harv error: no target: CITEREFBellman1957 (

2189:

788:{\displaystyle S\subseteq \mathbb {R} ^{d}}

272:is the set of actions available from state

7797:Feinberg, E.A.; Shwartz, A., eds. (2002).

3950:

3711:The solution above assumes that the state

3515:harv error: no target: CITEREFHoward1960 (

7821:Continuous-Time Markov Decision Processes

7790:Finite state Markovian decision processes

7677:

7614:Continuous-Time Markov Decision Processes

7599:Automation Science and Engineering (CASE)

7540:

7500:

7339:

7329:

7261:

6985:

6979:

6933:

6887:

6842:

6836:

6793:

6787:

6634:

6633:

6631:

6624:context-dependent Markov decision process

6595:

6586:

6585:

6570:

6569:

6564:

6524:

6515:

6514:

6512:

6471:

6470:

6468:

6426:

6224:

6199:

6179:

6159:

6134:

6102:

6026:

5993:

5978:

5948:

5925:

5894:

5845:

5839:

5807:

5727:

5691:

5679:

5647:

5618:

5589:

5560:

5465:

5457:

5422:

5380:

5375:

5332:

5322:

5288:

5286:

5235:

5229:

5194:

5115:

5099:

5071:

5028:

5012:

5004:

5002:

4963:

4957:

4922:

4887:

4849:

4750:

4734:

4638:

4622:

4609:

4548:

4532:

4519:

4515:

4513:

4467:

4451:

4450:

4381:

4363:

4348:

4344:

4342:

4313:

4302:

4301:

4298:

4278:

4257:

4246:

4245:

4242:

4222:

4181:

4120:

4087:

4079:, we will have the following inequality:

4064:

4043:

4037:

3989:

3960:

3943:

3892:

3882:

3877:

3855:

3846:

3812:continuous-time Markov decision processes

3736:

3716:

3638:

3618:

3598:

3571:

3533:

3474:

3454:

3427:

3421:

3416:. It then iterates, repeatedly computing

3396:

3390:

3364:

3344:

3293:

3257:

3222:

3207:

3192:

3164:

3158:

3126:

3097:

3068:

3039:

3019:

2912:

2877:

2862:

2844:

2823:

2742:

2698:

2683:

2662:

2635:

2606:

2586:

2566:

2543:

2495:

2475:

2434:

2428:

2385:

2379:

2342:

2322:

2299:

2295:

2294:

2276:

2275:

2255:

2254:

2243:

2223:

2157:

2132:

2112:

2092:

2040:

2020:

1952:

1946:

1917:

1911:

1872:

1839:

1826:

1811:

1806:

1787:

1781:

1757:

1738:

1732:

1696:

1683:

1668:

1663:

1658:

1646:

1635:

1621:

1598:

1572:

1549:

1526:

1491:

1480:

1460:

1448:{\displaystyle 0\leq \ \gamma \ \leq \ 1}

1419:

1393:

1360:

1347:

1332:

1327:

1308:

1302:

1278:

1259:

1253:

1217:

1204:

1189:

1184:

1174:

1169:

1163:

1152:

1138:

1115:

1083:

1063:

1039:

1010:

990:

962:

942:

922:

898:

873:

853:

812:

806:

779:

775:

774:

765:

735:

716:

686:

647:

641:

610:

555:

540:

518:

499:

469:

457:

431:

406:

386:

366:

346:

305:

299:

277:

256:

250:

224:

188:

161:

148:

127:

6673:

6319:} of possible outputs, or actions, with

5916:Reinforcement Learning for discrete MDPs

110:

7901:Reinforcement Learning: An Introduction

7842:Control Techniques for Complex Networks

7781:Dynamic Programming and Optimal Control

7221:Reinforcement learning: an introduction

7107:

6552:of states and the probability function

3806:Continuous-time Markov decision process

2999:

7142:"Error detection in redundant systems"

6262:Another application of MDP process in

4917:is a feasible solution to the D-LP if

3510:

2127:are the current state and action, and

87:The name comes from its connection to

7818:Guo, X.; Hernández-Lerma, O. (2009).

7800:Handbook of Markov Decision Processes

7715:; Thathachar, Mandayam A. L. (1989).

7645:Technical Report, Stanford University

7565:Constrained Markov decision processes

3764:Constrained Markov decision processes

7:

7898:Sutton, R. S.; Barto, A. G. (2017).

7214:

7212:

6330:an initial state probability vector

1110:The objective is to choose a policy

7912:A First Course in Stochastic Models

7433:Zeitschrift für Operations Research

6219:happened"). Thus, one has an array

4009:{\displaystyle 0\leq \gamma <1.}

3669:based on MDPs are in computational

7719:Learning automata: An introduction

7480:Mathematics of Operations Research

5831:, which could give us the optimal

5753:

5730:

4854:

4828:

4708:

4452:

4169:

3883:

3852:

2558:, which contains real values, and

1414:is the discount factor satisfying

1164:

1058:(since the action chosen in state

25:

6417:Category theoretic interpretation

6129:pairs (together with the outcome

5584:is the terminal reward function,

1769:{\displaystyle a_{t}=\pi (s_{t})}

1290:{\displaystyle a_{t}=\pi (s_{t})}

173:{\displaystyle (S,A,P_{a},R_{a})}

119:A Markov decision process is a 4-

7065:Hamilton–Jacobi–Bellman equation

6605:

6602:

6599:

6596:

6534:

6531:

6528:

6525:

5877:is an interdisciplinary area of

2080:{\displaystyle s',r\gets G(s,a)}

7904:. Cambridge, MA: The MIT Press.

7177:Bellman, Richard (1958-09-01).

6967:{\displaystyle \Pr(s'\mid s,a)}

5462:

4827:

4707:

4368:

4353:

4168:

3820:ordinary differential equations

3693:A Markov decision process is a

1997:Another form of simulator is a

1514:{\displaystyle \gamma =1/(1+r)}

239:is a set of actions called the

7845:. Cambridge University Press.

7744:Narendra & Thathachar 1974

7061:for applications to economics.

7005:

6999:

6961:

6938:

6915:

6892:

6862:

6856:

6816:

6799:

6643:{\displaystyle {\mathcal {C}}}

6609:

6592:

6566:

6521:

6480:{\displaystyle {\mathcal {A}}}

6459:can be understood in terms of

6446:

6428:

6306:} of possible internal states,

6116:

6104:

6075:

6072:

6061:

6049:

6032:

6019:

6016:

5999:

5968:

5956:

5818:

5812:

5786:

5783:

5765:

5748:

5736:

5721:

5703:

5697:

5658:

5652:

5629:

5623:

5600:

5594:

5571:

5565:

5536:

5533:

5527:

5518:

5512:

5500:

5480:

5474:

5450:

5447:

5441:

5435:

5419:

5416:

5410:

5401:

5395:

5389:

5366:

5363:

5357:

5351:

5342:

5336:

5316:

5307:

5301:

5295:

5253:

5241:

5211:

5199:

5169:

5157:

5151:

5139:

5131:

5125:

5089:

5077:

5064:

5052:

5044:

5038:

4981:

4969:

4939:

4927:

4904:

4892:

4846:

4840:

4818:

4806:

4786:

4774:

4766:

4760:

4698:

4686:

4680:

4662:

4654:

4648:

4602:

4590:

4584:

4572:

4564:

4558:

4483:

4477:

4447:

4435:

4426:

4420:

4414:

4396:

4322:{\displaystyle {\bar {V}}^{*}}

4307:

4266:{\displaystyle {\bar {V}}^{*}}

4251:

4201:

4195:

4165:

4159:

4153:

4135:

4110:

4098:

3940:

3937:

3934:

3928:

3922:

3913:

3907:

3901:

3747:

3741:

3689:Extensions and generalizations

3597:In modified policy iteration (

3310:

3299:

3280:

3263:

3245:

3228:

3182:

3176:

3137:

3131:

3108:

3102:

3079:

3073:

3050:

3044:

2958:

2947:

2935:

2918:

2900:

2883:

2834:

2828:

2797:

2786:

2774:

2757:

2752:

2746:

2730:

2713:

2708:

2702:

2673:

2667:

2617:

2611:

2601:will contain the solution and

2457:

2440:

2408:

2391:

2287:

2245:

2074:

2062:

2056:

1975:

1958:

1851:

1819:

1763:

1750:

1708:

1676:

1508:

1496:

1372:

1340:

1284:

1271:

1229:

1197:

1094:

1088:

1021:

1015:

835:

818:

747:

679:

670:

653:

578:

561:

530:

462:

328:

311:

167:

129:

1:

7195:10.1016/S0019-9958(58)80003-0

6281:In learning automata theory,

5905:{\displaystyle \varepsilon -}

2238:is the set of ordered tuples

629:{\displaystyle S'\subseteq S}

7115:Puterman, Martin L. (1994).

5613:is the system state vector,

1455:, which is usually close to

1078:is completely determined by

7861:Appendix contains abridged

7014:{\displaystyle p_{s's}(a).}

6921:{\displaystyle \Pr(s,a,s')}

6822:{\displaystyle P_{a}(s,s')}

6358:which after each time step

6154:; that is, "I was in state

4333:Primal linear program(P-LP)

4217:If there exists a function

2463:{\displaystyle R_{a}(s,s')}

2414:{\displaystyle P_{a}(s,s')}

1981:{\displaystyle P_{a}(s,s')}

1586:{\displaystyle \ \gamma \ }

1407:{\displaystyle \ \gamma \ }

841:{\displaystyle R_{a}(s,s')}

334:{\displaystyle P_{a}(s,s')}

7971:

7783:. Vol. 2. MA: Athena.

6868:{\displaystyle p_{ss'}(a)}

6255:

5867:

5259:{\displaystyle y^{*}(i,a)}

5189:for all feasible solution

4987:{\displaystyle y^{*}(i,a)}

3826:, epidemic processes, and

3704:

1005:that specifies the action

43:sequential decision making

39:stochastic dynamic program

7877:Markov Decision Processes

7875:Puterman., M. L. (1994).

7760:Bellman., R. E. (2003) .

7688:10.1109/TSMC.1974.5408453

7623:10.1007/978-3-642-02547-1

7567:. Vol. 7. CRC Press.

5664:{\displaystyle f(\cdot )}

5577:{\displaystyle D(\cdot )}

4504:Dual linear program(D-LP)

3593:Modified policy iteration

3150:gives the combined step:

2215:In this example, we have

2011:that are expressed using

7367:; Shwartz, Adam (eds.).

7080:Mabinogion sheep problem

4059:is independent of state

3657:Computational complexity

3603:Puterman & Shin 1978

3121:into the calculation of

2365:{\displaystyle \{-1,1\}}

1926:{\displaystyle \pi ^{*}}

7542:10.1023/A:1017932429737

7467:Papadimitriou, Christos

7418:10.1287/mnsc.24.11.1127

7331:10.1073/pnas.39.10.1095

7263:10.1023/A:1017932429737

7183:Information and Control

7154:10.1145/1455567.1455587

7044:Quantum finite automata

6831:transition probability

6782:transition probability

6452:{\displaystyle (S,A,P)}

3802:scenarios in robotics.

3753:{\displaystyle \pi (s)}

3682:Monte Carlo tree search

3678:curse of dimensionality

3442:{\displaystyle V_{i+1}}

3114:{\displaystyle \pi (s)}

3056:{\displaystyle \pi (s)}

3010:, which is also called

2186:Monte Carlo tree search

1906:and is usually denoted

1521:for some discount rate

1100:{\displaystyle \pi (s)}

1027:{\displaystyle \pi (s)}

78:artificial intelligence

31:Markov decision process

18:Markov Decision Process

7803:. Boston, MA: Kluwer.

7779:Bertsekas, D. (1995).

7672:. SMC-4 (4): 323–334.

7563:Altman, Eitan (1999).

7034:Probabilistic automata

7015:

6968:

6922:

6869:

6823:

6644:

6616:

6542:

6481:

6453:

6283:a stochastic automaton

6233:

6213:

6188:

6168:

6148:

6123:

6088:

5934:

5906:

5875:Reinforcement learning

5870:Reinforcement learning

5864:Reinforcement learning

5855:

5825:

5793:

5665:

5636:

5607:

5578:

5547:

5260:

5218:

5217:{\displaystyle y(i,a)}

5180:

4988:

4946:

4945:{\displaystyle y(i,a)}

4911:

4910:{\displaystyle y(i,a)}

4874:

4494:

4323:

4287:

4267:

4231:

4208:

4073:

4053:

4010:

3975:

3775:CMDPs are solved with

3754:

3725:

3697:with only one player.

3676:. However, due to the

3647:

3627:

3580:

3553:

3483:

3463:

3443:

3406:

3379:

3353:

3330:

3144:

3115:

3086:

3057:

3028:

2975:

2809:

2644:

2624:

2595:

2575:

2552:

2530:function approximation

2515:in this specific case.

2509:

2484:

2464:

2415:

2366:

2331:

2309:

2232:

2209:

2190:reinforcement learning

2166:

2146:

2121:

2101:

2081:

2029:

1982:

1927:

1887:

1858:

1770:

1721:

1720:{\displaystyle E\left}

1657:

1607:

1587:

1561:

1535:

1515:

1469:

1449:

1408:

1379:

1291:

1242:

1241:{\displaystyle E\left}

1168:

1124:

1101:

1072:

1048:

1028:

999:

981:Optimization objective

971:

951:

931:

907:

887:

862:

842:

789:

754:

630:

599:

446:

420:

395:

375:

355:

335:

286:

266:

233:

197:

174:

116:

99:that still follow the

74:reinforcement learning

7909:Tijms., H.C. (2003).

7493:10.1287/moor.12.3.441

7016:

6969:

6923:

6870:

6824:

6654:Alternative notations

6645:

6617:

6548:encodes both the set

6543:

6482:

6454:

6393:". At each time step

6276:finite state automata

6270:paper is surveyed by

6234:

6214:

6189:

6169:

6149:

6124:

6122:{\displaystyle (s,a)}

6089:

5935:

5907:

5856:

5854:{\displaystyle V^{*}}

5826:

5794:

5666:

5637:

5608:

5579:

5548:

5261:

5219:

5181:

4989:

4947:

4912:

4875:

4495:

4324:

4288:

4273:will be the smallest

4268:

4232:

4209:

4074:

4054:

4052:{\displaystyle V^{*}}

4011:

3976:

3755:

3726:

3701:Partial observability

3648:

3628:

3581:

3554:

3509:In policy iteration (

3493:" for this problem).

3484:

3464:

3444:

3407:

3405:{\displaystyle V_{0}}

3380:

3354:

3331:

3145:

3116:

3087:

3063:is calculated within

3058:

3029:

2976:

2810:

2645:

2625:

2596:

2576:

2553:

2510:

2485:

2465:

2416:

2367:

2332:

2310:

2233:

2206:Open AI gym benchmark

2203:

2167:

2147:

2122:

2102:

2082:

2030:

1983:

1928:

1888:

1886:{\displaystyle \ H\ }

1859:

1771:

1722:

1631:

1608:

1588:

1562:

1536:

1516:

1470:

1450:

1409:

1380:

1292:

1243:

1148:

1125:

1102:

1073:

1049:

1029:

1000:

972:

952:

932:

908:

888:

863:

843:

790:

755:

631:

600:

447:

421:

396:

376:

356:

336:

287:

267:

265:{\displaystyle A_{s}}

234:

207:of states called the

198:

175:

114:

7884:Ross, S. M. (1983).

7870:on 18 December 2012.

7864:"Meyn & Tweedie"

7839:Meyn, S. P. (2007).

7713:Narendra, Kumpati S.

7256:(193–208): 193–208.

6978:

6932:

6886:

6835:

6786:

6630:

6563:

6511:

6491:with generating set

6467:

6425:

6223:

6198:

6178:

6158:

6133:

6101:

5947:

5924:

5893:

5838:

5824:{\displaystyle a(t)}

5806:

5678:

5646:

5635:{\displaystyle a(t)}

5617:

5606:{\displaystyle s(t)}

5588:

5559:

5285:

5228:

5193:

5001:

4956:

4921:

4886:

4512:

4341:

4297:

4277:

4241:

4221:

4086:

4063:

4036:

3988:

3845:

3828:population processes

3735:

3715:

3646:{\displaystyle \pi }

3637:

3617:

3609:Prioritized sweeping

3579:{\displaystyle \pi }

3570:

3552:{\displaystyle s=s'}

3532:

3473:

3453:

3420:

3389:

3363:

3343:

3157:

3143:{\displaystyle V(s)}

3125:

3096:

3085:{\displaystyle V(s)}

3067:

3038:

3027:{\displaystyle \pi }

3018:

2998:In value iteration (

2822:

2661:

2634:

2623:{\displaystyle V(s)}

2605:

2594:{\displaystyle \pi }

2585:

2574:{\displaystyle \pi }

2565:

2542:

2494:

2474:

2427:

2378:

2341:

2321:

2242:

2222:

2156:

2131:

2111:

2091:

2039:

2019:

1945:

1910:

1871:

1780:

1731:

1620:

1597:

1571:

1548:

1525:

1479:

1459:

1418:

1392:

1301:

1252:

1137:

1123:{\displaystyle \pi }

1114:

1082:

1062:

1038:

1009:

998:{\displaystyle \pi }

989:

961:

941:

930:{\displaystyle \pi }

921:

897:

872:

852:

805:

764:

640:

609:

456:

430:

405:

385:

365:

345:

298:

276:

249:

223:

187:

126:

7945:Dynamic programming

7788:Derman, C. (1970).

7762:Dynamic Programming

7365:Feinberg, Eugene A.

7322:1953PNAS...39.1095S

7075:Recursive economics

7054:Dynamic programming

6772:discounting factor

6766:discounting factor

6742:is the negative of

6719:is the negative of

6353:computable function

6293:of possible inputs,

5385:

3887:

3816:continuous dynamics

3781:dynamic programming

3378:{\displaystyle i=0}

2526:dynamic programming

2182:dynamic programming

957:) to action space (

445:{\displaystyle t+1}

401:will lead to state

215:set of real numbers

54:operations research

7955:Stochastic control

7445:10.1007/bf01920264

7406:Management Science

7306:"Stochastic Games"

7011:

6964:

6918:

6865:

6819:

6640:

6612:

6538:

6477:

6449:

6229:

6212:{\displaystyle s'}

6209:

6184:

6174:and I tried doing

6164:

6147:{\displaystyle s'}

6144:

6119:

6084:

5988:

5930:

5902:

5851:

5821:

5789:

5696:

5661:

5632:

5603:

5574:

5543:

5541:

5371:

5370:

5256:

5214:

5176:

5174:

5135:

5110:

5048:

5023:

4984:

4942:

4907:

4870:

4868:

4770:

4745:

4658:

4633:

4568:

4543:

4490:

4488:

4392:

4319:

4283:

4263:

4227:

4204:

4131:

4069:

4049:

4006:

3971:

3873:

3750:

3721:

3643:

3623:

3576:

3549:

3479:

3459:

3439:

3412:as a guess of the

3402:

3375:

3349:

3326:

3217:

3197:

3140:

3111:

3082:

3053:

3024:

3012:backward induction

2971:

2872:

2805:

2693:

2640:

2620:

2591:

2571:

2548:

2508:{\displaystyle s'}

2505:

2480:

2460:

2411:

2362:

2327:

2305:

2228:

2210:

2162:

2145:{\displaystyle s'}

2142:

2117:

2097:

2077:

2025:

1978:

1923:

1883:

1854:

1766:

1717:

1603:

1583:

1560:{\displaystyle H-}

1557:

1531:

1511:

1465:

1445:

1404:

1375:

1287:

1238:

1120:

1097:

1068:

1044:

1024:

995:

967:

947:

927:

917:A policy function

903:

886:{\displaystyle s'}

883:

858:

838:

785:

750:

626:

595:

442:

419:{\displaystyle s'}

416:

391:

371:

351:

331:

282:

262:

229:

193:

170:

117:

70:telecommunications

7940:Optimal decisions

7893:. Academic press.

7852:978-0-521-88441-9

7792:. Academic Press.

7771:978-0-486-42809-3

7723:. Prentice Hall.

7412:(11): 1127–1137.

7378:978-0-7923-7459-6

7316:(10): 1095–1100.

7230:978-0-262-03924-6

7163:978-1-4503-7861-1

7126:978-0-471-61977-2

6880:

6879:

6507:. Then a functor

6268:learning automata

6258:Learning automata

6252:Learning automata

6232:{\displaystyle Q}

6187:{\displaystyle a}

6167:{\displaystyle s}

6083:

5974:

5952:

5933:{\displaystyle a}

5760:

5687:

5492:

5460:

5328:

5111:

5095:

5024:

5008:

4852:

4746:

4730:

4634:

4618:

4612:

4544:

4528:

4522:

4377:

4366:

4351:

4310:

4286:{\displaystyle g}

4254:

4230:{\displaystyle h}

4184:

4116:

4072:{\displaystyle i}

3724:{\displaystyle s}

3667:decision problems

3626:{\displaystyle V}

3497:'s 1953 paper on

3482:{\displaystyle V}

3462:{\displaystyle s}

3352:{\displaystyle i}

3203:

3188:

2858:

2679:

2643:{\displaystyle s}

2551:{\displaystyle V}

2483:{\displaystyle 1}

2330:{\displaystyle A}

2284:

2263:

2231:{\displaystyle S}

2165:{\displaystyle r}

2120:{\displaystyle a}

2100:{\displaystyle s}

2028:{\displaystyle G}

1994:may be produced.

1882:

1876:

1727:(where we choose

1606:{\displaystyle H}

1582:

1576:

1534:{\displaystyle r}

1468:{\displaystyle 1}

1441:

1435:

1429:

1403:

1397:

1248:(where we choose

1071:{\displaystyle s}

1047:{\displaystyle s}

970:{\displaystyle A}

950:{\displaystyle S}

906:{\displaystyle a}

861:{\displaystyle s}

394:{\displaystyle t}

374:{\displaystyle s}

354:{\displaystyle a}

285:{\displaystyle s}

232:{\displaystyle A}

196:{\displaystyle S}

97:state transitions

52:Originating from

37:), also called a

16:(Redirected from

7962:

7950:Markov processes

7926:

7905:

7894:

7892:

7880:

7871:

7866:. Archived from

7860:

7859:on 19 June 2010.

7855:. Archived from

7835:

7814:

7793:

7784:

7775:

7747:

7741:

7735:

7734:

7722:

7709:

7700:

7699:

7681:

7662:

7656:

7655:

7653:

7652:

7642:

7633:

7627:

7626:

7609:

7603:

7602:

7590:

7584:

7583:

7575:

7569:

7568:

7560:

7554:

7553:

7551:

7549:

7544:

7529:Machine Learning

7520:

7514:

7513:

7511:

7509:

7504:

7471:Tsitsiklis, John

7463:

7457:

7456:

7428:

7422:

7421:

7401:

7395:

7389:

7383:

7382:

7360:

7354:

7353:

7343:

7333:

7298:

7292:

7291:

7274:

7268:

7267:

7265:

7250:Machine Learning

7241:

7235:

7234:

7216:

7207:

7206:

7174:

7168:

7167:

7137:

7131:

7130:

7112:

7085:Stochastic games

7059:Bellman equation

7020:

7018:

7017:

7012:

6998:

6997:

6993:

6973:

6971:

6970:

6965:

6948:

6927:

6925:

6924:

6919:

6914:

6874:

6872:

6871:

6866:

6855:

6854:

6853:

6828:

6826:

6825:

6820:

6815:

6798:

6797:

6775:

6769:

6759:

6753:

6745:

6741:

6736:

6730:

6722:

6718:

6713:

6707:

6697:

6691:

6674:

6670:

6666:

6662:

6649:

6647:

6646:

6641:

6639:

6638:

6621:

6619:

6618:

6613:

6608:

6591:

6590:

6575:

6574:

6547:

6545:

6544:

6539:

6537:

6520:

6519:

6501:Kleisli category

6486:

6484:

6483:

6478:

6476:

6475:

6458:

6456:

6455:

6450:

6264:machine learning

6238:

6236:

6235:

6230:

6218:

6216:

6215:

6210:

6208:

6193:

6191:

6190:

6185:

6173:

6171:

6170:

6165:

6153:

6151:

6150:

6145:

6143:

6128:

6126:

6125:

6120:

6093:

6091:

6090:

6085:

6081:

6071:

6048:

6031:

6030:

6015:

5998:

5997:

5987:

5986:

5950:

5939:

5937:

5936:

5931:

5911:

5909:

5908:

5903:

5879:machine learning

5860:

5858:

5857:

5852:

5850:

5849:

5830:

5828:

5827:

5822:

5798:

5796:

5795:

5790:

5761:

5759:

5751:

5728:

5695:

5670:

5668:

5667:

5662:

5641:

5639:

5638:

5633:

5612:

5610:

5609:

5604:

5583:

5581:

5580:

5575:

5552:

5550:

5549:

5544:

5542:

5493:

5491:

5483:

5466:

5461:

5458:

5384:

5379:

5369:

5323:

5265:

5263:

5262:

5257:

5240:

5239:

5223:

5221:

5220:

5215:

5185:

5183:

5182:

5177:

5175:

5134:

5109:

5076:

5075:

5047:

5022:

4993:

4991:

4990:

4985:

4968:

4967:

4951:

4949:

4948:

4943:

4916:

4914:

4913:

4908:

4879:

4877:

4876:

4871:

4869:

4853:

4850:

4801:

4769:

4744:

4726:

4657:

4632:

4613:

4610:

4567:

4542:

4523:

4520:

4499:

4497:

4496:

4491:

4489:

4391:

4367:

4364:

4352:

4349:

4328:

4326:

4325:

4320:

4318:

4317:

4312:

4311:

4303:

4292:

4290:

4289:

4284:

4272:

4270:

4269:

4264:

4262:

4261:

4256:

4255:

4247:

4236:

4234:

4233:

4228:

4213:

4211:

4210:

4205:

4185:

4182:

4130:

4078:

4076:

4075:

4070:

4058:

4056:

4055:

4050:

4048:

4047:

4015:

4013:

4012:

4007:

3980:

3978:

3977:

3972:

3970:

3966:

3965:

3964:

3955:

3951:

3897:

3896:

3886:

3881:

3860:

3859:

3824:queueing systems

3759:

3757:

3756:

3751:

3730:

3728:

3727:

3722:

3671:complexity class

3652:

3650:

3649:

3644:

3632:

3630:

3629:

3624:

3585:

3583:

3582:

3577:

3558:

3556:

3555:

3550:

3548:

3520:

3505:Policy iteration

3499:stochastic games

3491:Bellman equation

3488:

3486:

3485:

3480:

3468:

3466:

3465:

3460:

3448:

3446:

3445:

3440:

3438:

3437:

3411:

3409:

3408:

3403:

3401:

3400:

3384:

3382:

3381:

3376:

3358:

3356:

3355:

3350:

3335:

3333:

3332:

3327:

3322:

3318:

3317:

3313:

3309:

3298:

3297:

3279:

3262:

3261:

3244:

3227:

3226:

3216:

3215:

3196:

3175:

3174:

3149:

3147:

3146:

3141:

3120:

3118:

3117:

3112:

3091:

3089:

3088:

3083:

3062:

3060:

3059:

3054:

3033:

3031:

3030:

3025:

3009:

2989:Notable variants

2980:

2978:

2977:

2972:

2970:

2966:

2965:

2961:

2957:

2934:

2917:

2916:

2899:

2882:

2881:

2871:

2870:

2849:

2848:

2814:

2812:

2811:

2806:

2804:

2800:

2796:

2773:

2756:

2755:

2729:

2712:

2711:

2692:

2691:

2649:

2647:

2646:

2641:

2629:

2627:

2626:

2621:

2600:

2598:

2597:

2592:

2580:

2578:

2577:

2572:

2557:

2555:

2554:

2549:

2514:

2512:

2511:

2506:

2504:

2489:

2487:

2486:

2481:

2469:

2467:

2466:

2461:

2456:

2439:

2438:

2420:

2418:

2417:

2412:

2407:

2390:

2389:

2371:

2369:

2368:

2363:

2336:

2334:

2333:

2328:

2314:

2312:

2311:

2306:

2304:

2303:

2298:

2286:

2285:

2277:

2265:

2264:

2256:

2237:

2235:

2234:

2229:

2171:

2169:

2168:

2163:

2151:

2149:

2148:

2143:

2141:

2126:

2124:

2123:

2118:

2106:

2104:

2103:

2098:

2086:

2084:

2083:

2078:

2049:

2034:

2032:

2031:

2026:

2005:generative model

2000:generative model

1987:

1985:

1984:

1979:

1974:

1957:

1956:

1937:Simulator models

1932:

1930:

1929:

1924:

1922:

1921:

1892:

1890:

1889:

1884:

1880:

1874:

1863:

1861:

1860:

1855:

1850:

1849:

1831:

1830:

1818:

1817:

1816:

1815:

1798:

1797:

1775:

1773:

1772:

1767:

1762:

1761:

1743:

1742:

1726:

1724:

1723:

1718:

1716:

1712:

1711:

1707:

1706:

1688:

1687:

1675:

1674:

1673:

1672:

1656:

1645:

1612:

1610:

1609:

1604:

1592:

1590:

1589:

1584:

1580:

1574:

1566:

1564:

1563:

1558:

1540:

1538:

1537:

1532:

1520:

1518:

1517:

1512:

1495:

1474:

1472:

1471:

1466:

1454:

1452:

1451:

1446:

1439:

1433:

1427:

1413:

1411:

1410:

1405:

1401:

1395:

1384:

1382:

1381:

1376:

1371:

1370:

1352:

1351:

1339:

1338:

1337:

1336:

1319:

1318:

1296:

1294:

1293:

1288:

1283:

1282:

1264:

1263:

1247:

1245:

1244:

1239:

1237:

1233:

1232:

1228:

1227:

1209:

1208:

1196:

1195:

1194:

1193:

1179:

1178:

1167:

1162:

1129:

1127:

1126:

1121:

1106:

1104:

1103:

1098:

1077:

1075:

1074:

1069:

1053:

1051:

1050:

1045:

1033:

1031:

1030:

1025:

1004:

1002:

1001:

996:

976:

974:

973:

968:

956:

954:

953:

948:

936:

934:

933:

928:

912:

910:

909:

904:

893:, due to action

892:

890:

889:

884:

882:

867:

865:

864:

859:

847:

845:

844:

839:

834:

817:

816:

797:Lebesgue measure

794:

792:

791:

786:

784:

783:

778:

759:

757:

756:

751:

740:

739:

721:

720:

708:

697:

696:

669:

652:

651:

635:

633:

632:

627:

619:

604:

602:

601:

596:

591:

577:

560:

559:

550:

549:

548:

523:

522:

504:

503:

491:

480:

479:

451:

449:

448:

443:

425:

423:

422:

417:

415:

400:

398:

397:

392:

380:

378:

377:

372:

360:

358:

357:

352:

340:

338:

337:

332:

327:

310:

309:

291:

289:

288:

283:

271:

269:

268:

263:

261:

260:

245:(alternatively,

238:

236:

235:

230:

202:

200:

199:

194:

179:

177:

176:

171:

166:

165:

153:

152:

82:cause and effect

21:

7970:

7969:

7965:

7964:

7963:

7961:

7960:

7959:

7930:

7929:

7923:

7908:

7897:

7890:

7883:

7874:

7862:

7853:

7838:

7832:

7817:

7811:

7796:

7787:

7778:

7772:

7759:

7756:

7754:Further reading

7751:

7750:

7742:

7738:

7731:

7711:

7710:

7703:

7679:10.1.1.295.2280

7666:Narendra, K. S.

7664:

7663:

7659:

7650:

7648:

7640:

7635:

7634:

7630:

7611:

7610:

7606:

7592:

7591:

7587:

7577:

7576:

7572:

7562:

7561:

7557:

7547:

7545:

7522:

7521:

7517:

7507:

7505:

7465:

7464:

7460:

7430:

7429:

7425:

7403:

7402:

7398:

7390:

7386:

7379:

7362:

7361:

7357:

7300:

7299:

7295:

7288:

7276:

7275:

7271:

7243:

7242:

7238:

7231:

7218:

7217:

7210:

7176:

7175:

7171:

7164:

7139:

7138:

7134:

7127:

7114:

7113:

7109:

7104:

7099:

7070:Optimal control

7029:

7023:

6986:

6981:

6976:

6975:

6941:

6930:

6929:

6907:

6884:

6883:

6846:

6838:

6833:

6832:

6808:

6789:

6784:

6783:

6773:

6767:

6757:

6751:

6743:

6739:

6734:

6728:

6720:

6716:

6711:

6705:

6695:

6689:

6677:in this article

6668:

6664:

6660:

6656:

6628:

6627:

6561:

6560:

6509:

6508:

6465:

6464:

6461:Category theory

6423:

6422:

6419:

6346:

6340:

6318:

6312:

6305:

6299:

6260:

6254:

6249:

6221:

6220:

6201:

6196:

6195:

6176:

6175:

6156:

6155:

6136:

6131:

6130:

6099:

6098:

6064:

6041:

6022:

6008:

5989:

5979:

5945:

5944:

5922:

5921:

5918:

5891:

5890:

5883:optimal control

5872:

5866:

5841:

5836:

5835:

5804:

5803:

5752:

5729:

5676:

5675:

5644:

5643:

5615:

5614:

5586:

5585:

5557:

5556:

5540:

5539:

5484:

5467:

5463:

5454:

5453:

5324:

5283:

5282:

5272:

5231:

5226:

5225:

5191:

5190:

5173:

5172:

5067:

4999:

4998:

4959:

4954:

4953:

4919:

4918:

4884:

4883:

4867:

4866:

4851: and

4799:

4798:

4724:

4723:

4614:

4606:

4605:

4524:

4510:

4509:

4487:

4486:

4369:

4360:

4359:

4354:

4339:

4338:

4300:

4295:

4294:

4275:

4274:

4244:

4239:

4238:

4219:

4218:

4183: and

4084:

4083:

4061:

4060:

4039:

4034:

4033:

4021:

3986:

3985:

3956:

3888:

3872:

3869:

3868:

3864:

3851:

3843:

3842:

3808:

3800:motion planning

3777:linear programs

3766:

3733:

3732:

3713:

3712:

3709:

3703:

3695:stochastic game

3691:

3663:time complexity

3659:

3635:

3634:

3615:

3614:

3611:

3595:

3568:

3567:

3541:

3530:

3529:

3514:

3507:

3471:

3470:

3451:

3450:

3449:for all states

3423:

3418:

3417:

3392:

3387:

3386:

3361:

3360:

3341:

3340:

3302:

3289:

3272:

3253:

3252:

3248:

3237:

3218:

3208:

3202:

3198:

3160:

3155:

3154:

3123:

3122:

3094:

3093:

3065:

3064:

3036:

3035:

3016:

3015:

3003:

2996:

2994:Value iteration

2991:

2950:

2927:

2908:

2907:

2903:

2892:

2873:

2863:

2857:

2853:

2840:

2820:

2819:

2789:

2766:

2738:

2737:

2733:

2722:

2694:

2684:

2659:

2658:

2632:

2631:

2603:

2602:

2583:

2582:

2563:

2562:

2540:

2539:

2522:

2497:

2492:

2491:

2472:

2471:

2449:

2430:

2425:

2424:

2400:

2381:

2376:

2375:

2339:

2338:

2319:

2318:

2293:

2240:

2239:

2220:

2219:

2198:

2176:models through

2154:

2153:

2134:

2129:

2128:

2109:

2108:

2089:

2088:

2042:

2037:

2036:

2017:

2016:

2001:

1992:

1967:

1948:

1943:

1942:

1939:

1913:

1908:

1907:

1904:

1895:Learning Theory

1869:

1868:

1835:

1822:

1807:

1802:

1783:

1778:

1777:

1753:

1734:

1729:

1728:

1692:

1679:

1664:

1659:

1630:

1626:

1618:

1617:

1595:

1594:

1569:

1568:

1546:

1545:

1523:

1522:

1477:

1476:

1457:

1456:

1416:

1415:

1390:

1389:

1356:

1343:

1328:

1323:

1304:

1299:

1298:

1274:

1255:

1250:

1249:

1213:

1200:

1185:

1180:

1170:

1147:

1143:

1135:

1134:

1112:

1111:

1080:

1079:

1060:

1059:

1036:

1035:

1007:

1006:

987:

986:

983:

959:

958:

939:

938:

919:

918:

895:

894:

875:

870:

869:

850:

849:

827:

808:

803:

802:

773:

762:

761:

731:

712:

701:

682:

662:

643:

638:

637:

612:

607:

606:

584:

570:

551:

541:

536:

514:

495:

484:

465:

454:

453:

428:

427:

408:

403:

402:

383:

382:

363:

362:

343:

342:

320:

301:

296:

295:

274:

273:

252:

247:

246:

243:

221:

220:

211:

185:

184:

157:

144:

124:

123:

109:

101:Markov property

49:are uncertain.

28:

23:

22:

15:

12:

11:

5:

7968:

7966:

7958:

7957:

7952:

7947:

7942:

7932:

7931:

7928:

7927:

7921:

7906:

7895:

7881:

7872:

7851:

7836:

7830:

7815:

7809:

7794:

7785:

7776:

7770:

7755:

7752:

7749:

7748:

7736:

7729:

7701:

7657:

7628:

7604:

7585:

7570:

7555:

7515:

7458:

7439:(5): 203–208.

7423:

7396:

7384:

7377:

7355:

7302:Shapley, Lloyd

7293:

7286:

7269:

7236:

7229:

7208:

7189:(3): 228–239.

7169:

7162:

7132:

7125:

7106:

7105:

7103:

7100:

7098:

7097:

7092:

7087:

7082:

7077:

7072:

7067:

7062:

7056:

7051:

7046:

7041:

7039:Odds algorithm

7036:

7030:

7028:

7025:

7010:

7007:

7004:

7001:

6996:

6992:

6989:

6984:

6963:

6960:

6957:

6954:

6951:

6947:

6944:

6940:

6937:

6917:

6913:

6910:

6906:

6903:

6900:

6897:

6894:

6891:

6878:

6877:

6875:

6864:

6861:

6858:

6852:

6849:

6845:

6841:

6829:

6818:

6814:

6811:

6807:

6804:

6801:

6796:

6792:

6779:

6778:

6776:

6770:

6763:

6762:

6760:

6754:

6747:

6746:

6737:

6731:

6724:

6723:

6714:

6708:

6701:

6700:

6698:

6692:

6685:

6684:

6681:

6678:

6655:

6652:

6637:

6611:

6607:

6604:

6601:

6598:

6594:

6589:

6584:

6581:

6578:

6573:

6568:

6536:

6533:

6530:

6527:

6523:

6518:

6474:

6463:. Namely, let

6448:

6445:

6442:

6439:

6436:

6433:

6430:

6418:

6415:

6391:Markov process

6387:

6386:

6379:

6349:

6344:

6338:

6328:

6314:

6310:

6307:

6301:

6297:

6294:

6256:Main article:

6253:

6250:

6248:

6245:

6228:

6207: