25:

181:. For optimistic locking each row has an independent version number, typically a sequential counter. This allows a process to atomically update a row and increment its counter only if another process has not incremented the counter. But CDC cannot use row-level versions to find all changes unless it knows the original "starting" version of every row. This is impractical to maintain.)

216:

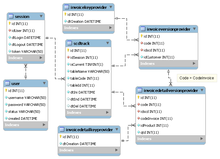

queue table. The queue table might have schema with the following fields: Id, TableName, RowId, Timestamp, Operation. The data inserted for our

Account sample might be: 1, Accounts, 76, 2008-11-02 00:15, Update. More complicated designs might log the actual data that changed. This queue table could then be "played back" to replicate the data from the source system to a target.

362:

141:

In a simplified CDC context, one computer system has data believed to have changed from a previous point in time, and a second computer system needs to take action based on that changed data. The former is the source, the latter is the target. It is possible that the source and target are the same

198:

This approach combines the three previously discussed methods. As noted, it is not uncommon to see multiple CDC solutions at work in a single system, however, the combination of time, version, and status provides a particularly powerful mechanism and programmers should utilize them as a trio where

189:

This technique can either supplement or complement timestamps and versioning. It can configure an alternative if, for example, a status column is set up on a table row indicating that the row has changed (e.g., a boolean column that, when set to true, indicates that the row has changed). Otherwise,

376:

is used as an alternative method. CDC and SCD are similar in that both methods can detect changes in a data set. The most common forms of SCD are type 1 (overwrite), type 2 (maintain history) or 3 (only previous and current value). SCD 2 can be useful if history is needed in the target system. CDC

173:

One technique is to mark each changed row with a version number. A current version is maintained for the table, or possibly a group of tables. This is stored in a supporting construct such as a reference table. When a change capture occurs, all data with the latest version number is considered to

215:

log events that happen to the transactional table into another queue table that can later be "played back". For example, imagine an

Accounts table, when transactions are taken against this table, triggers would fire that would then store a history of the event or even the deltas into a separate

219:

Data capture offers a challenge in that the structure, contents and use of a transaction log is specific to a database management system. Unlike data access, no standard exists for transaction logs. Most database management systems do not document the internal format of their transaction logs,

199:

possible. The three elements are not redundant or superfluous. Using them together allows for such logic as, "Capture all data for version 2.1 that changed between 2005-06-01 00:00 and 2005-07-01 00:00 where the status code indicates it is ready for production."

230:

Translation between physical storage formats that are recorded in the transaction logs and the logical formats typically expected by database users (e.g., some transaction logs save only minimal buffer differences that are not directly useful for change

190:

it can act as a complement to the previous methods, indicating that a row, despite having a new version number or a later date, still shouldn't be updated on the target (for example, the data may require human validation).

272:: log scanning can produce a change stream that replays the original transactions in the order they were committed. Such a change stream include changes made to all tables participating in the captured transaction.

154:

change. Names such as LAST_UPDATE, LAST_MODIFIED, etc. are common. Any row in any table that has a timestamp in that column that is more recent than the last time data was captured is considered to have changed.

346:: the source process creates a snapshot of changes within its own process and delivers rows downstream. The downstream process uses the snapshot, creates its own subset and delivers them to the next process.

352:: the target that is immediately downstream from the source, prepares a request for data from the source. The downstream target delivers the snapshot to the next target, as in the push model.

130:

environments since capturing and preserving the state of data across time is one of the core functions of a data warehouse, but CDC can be utilized in any database or data repository system.

227:

Coordinating the reading of the transaction logs and the archiving of log files (database management software typically archives log files off-line on a regular basis).

54:

138:

System developers can set up CDC mechanisms in a number of ways and in any one or a combination of system layers from application logic down to physical storage.

119:

that is based on the identification, capture and delivery of the changes made to enterprise data sources. For instance it can be used for incremental update of

170:

Database designers give tables whose changes must be captured a column that contains a version number. Names such as VERSION_NUMBER, etc. are common.

105:

used to determine and track the data that has changed (the "deltas") so that action can be taken using the changed data. The result is a

220:

although some provide programmatic interfaces to their transaction logs (for example: Oracle, DB2, SQL/MP, SQL/MX and SQL Server 2008).

102:

76:

142:

system physically, but that would not change the design pattern logically. Multiple CDC solutions can exist in a single system.

377:

overwrites in the target system (akin to SCD1), and is ideal when only the changed data needs to arrive at the target, i.e. a

37:

284:

As often occurs in complex domains, the final solution to a CDC problem may have to balance many competing concerns.

47:

41:

33:

442:

Ankorion, Itamar

Ankorion 2005. “Change Data Capture Efficient ETL for Real-Time BI.” Information Management, 15(1).

238:

300:

track the user who last looked at but did not change the data in the same structure as the data. This results in

432:

398:

373:

365:

301:

58:

472:

234:

Dealing with changes to the format of the transaction logs between versions of the database management system.

174:

have changed. Once the change capture is complete, the reference table is updated with a new version number.

467:

452:

393:

263:

312:

Actually tracking the changes depends on the data source. If the data is being persisted in a modern

292:

Change data capture both increases in complexity and reduces in value if the source system saves

178:

159:

316:

then Change Data

Capture is a simple matter of permissions. Two techniques are in common use:

321:

212:

208:

116:

327:

237:

Eliminating uncommitted changes that the database wrote to the transaction log and later

269:

127:

461:

248:

CDC solutions based on transaction log files have distinct advantages that include:

150:

Tables whose changes must be captured may have a column that represents the time of

253:

120:

211:

pattern to communicate the changed data to multiple targets. In this approach,

334:

If the data is not in a modern database, CDC becomes a programming challenge.

297:

223:

Other challenges in using transaction logs for change data capture include:

259:

no need for programmatic changes to the applications that use the database.

313:

293:

90:

380:

108:

361:

360:

177:(Do not confuse this technique with row-level versioning used for

296:

changes when the data itself is not modified. For example, some

244:

Dealing with changes to the metadata of tables in the database.

18:

433:

Slowly

Changing Dimensions (SCD) vs Change Data Capture (CDC)

252:

minimal impact on the database (even more so if one uses

158:Timestamps on rows are also frequently used for

46:but its sources remain unclear because it lacks

16:Set of software design patterns in a database

8:

256:to process the logs on a dedicated host).

77:Learn how and when to remove this message

410:

275:no need to change the database schema

7:

330:as, or shortly after, it is written.

162:so this column is often available.

14:

23:

1:

304:in the Change Data Capture.

194:Time/version/status on rows

489:

399:Slowly changing dimension

374:slowly changing dimension

366:Slowly changing dimension

288:Unsuitable source systems

185:Status indicators on rows

32:This article includes a

320:Tracking changes using

270:transactional integrity

166:Version numbers on rows

101:) is a set of software

61:more precise citations.

453:Oracle Data Integrator

369:

115:CDC is an approach to

394:Referential integrity

364:

266:in acquiring changes.

308:Tracking the capture

126:CDC occurs often in

418:Eroe, Erit (2015).

368:(SCD) model example

280:Confounding factors

95:change data capture

370:

203:Triggers on tables

179:optimistic locking

160:optimistic locking

146:Timestamps on rows

34:list of references

322:database triggers

209:publish/subscribe

87:

86:

79:

480:

435:

430:

424:

423:

415:

338:Push versus pull

117:data integration

82:

75:

71:

68:

62:

57:this article by

48:inline citations

27:

26:

19:

488:

487:

483:

482:

481:

479:

478:

477:

473:Data management

458:

457:

449:

439:

438:

431:

427:

417:

416:

412:

407:

390:

359:

340:

328:transaction log

310:

290:

282:

205:

196:

187:

168:

148:

136:

103:design patterns

83:

72:

66:

63:

52:

38:related reading

28:

24:

17:

12:

11:

5:

486:

484:

476:

475:

470:

460:

459:

456:

455:

448:

445:

444:

443:

437:

436:

425:

409:

408:

406:

403:

402:

401:

396:

389:

386:

372:Sometimes the

358:

355:

354:

353:

347:

339:

336:

332:

331:

324:

309:

306:

289:

286:

281:

278:

277:

276:

273:

267:

260:

257:

246:

245:

242:

235:

232:

228:

207:May include a

204:

201:

195:

192:

186:

183:

167:

164:

147:

144:

135:

132:

128:data warehouse

85:

84:

42:external links

31:

29:

22:

15:

13:

10:

9:

6:

4:

3:

2:

485:

474:

471:

469:

468:Computer data

466:

465:

463:

454:

451:

450:

446:

441:

440:

434:

429:

426:

421:

414:

411:

404:

400:

397:

395:

392:

391:

387:

385:

383:

382:

379:delta-driven

375:

367:

363:

356:

351:

348:

345:

342:

341:

337:

335:

329:

325:

323:

319:

318:

317:

315:

307:

305:

303:

299:

295:

287:

285:

279:

274:

271:

268:

265:

261:

258:

255:

251:

250:

249:

243:

240:

236:

233:

229:

226:

225:

224:

221:

217:

214:

210:

202:

200:

193:

191:

184:

182:

180:

175:

171:

165:

163:

161:

156:

153:

145:

143:

139:

133:

131:

129:

124:

122:

118:

113:

111:

110:

107:delta-driven

104:

100:

96:

92:

81:

78:

70:

60:

56:

50:

49:

43:

39:

35:

30:

21:

20:

428:

419:

413:

378:

371:

357:Alternatives

349:

343:

333:

326:Reading the

311:

291:

283:

254:log shipping

247:

222:

218:

206:

197:

188:

176:

172:

169:

157:

151:

149:

140:

137:

125:

121:data loading

114:

106:

98:

94:

88:

73:

64:

53:Please help

45:

298:Data models

239:rolled back

231:consumers).

134:Methodology

59:introducing

462:Categories

405:References

67:March 2016

91:databases

447:See also

388:See also

314:database

294:metadata

213:triggers

381:dataset

264:latency

109:dataset

55:improve

422:. Rty.

302:noise

40:, or

420:4ggg

350:Pull

344:Push

262:low

152:last

99:CDC

89:In

464::

384:.

123:.

112:.

93:,

44:,

36:,

241:.

97:(

80:)

74:(

69:)

65:(

51:.

Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.