38:

184:

190:

86:

513:

231:– You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

52:

48:

42:

97:

65:

544:

301:

424:

388:

318:

352:

335:

372:

444:

408:

285:

512:

507:

56:

37:

530:

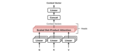

Uploaded a work by dvgodoy from https://github.com/dvgodoy/dl-visuals/?tab=readme-ov-file with UploadWizard

200:

197:

562:

523:

269:

Illustrations for the

Transformer, and attention mechanism. Multiheaded attention, block diagram.

140:

Illustrations for the

Transformer, and attention mechanism. Multiheaded attention, block diagram.

105:

159:

92:

149:

480:

Click on a date/time to view the file as it appeared at that time.

80:

160:

https://github.com/dvgodoy/dl-visuals/?tab=readme-ov-file

124:

104:

Commons is a freely licensed media file repository.

70:(1,426 × 630 pixels, file size: 18 KB, MIME type:

563:Transformador (modelo de aprendizaxe automática)

425:Creative Commons Attribution 4.0 International

85:

183:

8:

238:https://creativecommons.org/licenses/by/4.0

214:– to copy, distribute and transmit the work

482:

265:

555:The following other wikis use this file:

545:Transformer (deep learning architecture)

130:Multiheaded attention, block diagram.png

542:

458:

442:

422:

406:

386:

370:

350:

333:

316:

299:

283:

280:

261:

254:

7:

540:The following page uses this file:

471:

189:

274:

268:

205:

180:

134:

117:

63:

273:

244:Creative Commons Attribution 4.0

259:

225:Under the following conditions:

196:This file is licensed under the

188:

182:

84:

31:

21:

256:

135:

14:

255:

26:

1:

201:Attribution 4.0 International

281:Items portrayed in this file

581:

559:Usage on gl.wikipedia.org

472:

16:

258:

91:This is a file from the

529:

165:

155:

148:

145:

127:

95:. Information from its

98:description page there

41:Size of this preview:

508:03:42, 6 August 2024

220:– to adapt the work

47:Other resolutions:

57:1,426 × 630 pixels

551:Global file usage

533:

409:copyright license

267:

173:

172:

113:

112:

93:Wikimedia Commons

32:Global file usage

572:

520:

373:copyright status

251:

248:

245:

242:

239:

198:Creative Commons

192:

191:

186:

185:

151:

139:

131:

125:

109:

88:

87:

81:

75:

73:

60:

53:640 × 283 pixels

49:320 × 141 pixels

43:800 × 353 pixels

580:

579:

575:

574:

573:

571:

570:

569:

549:

534:

526:

518:

474:

473:

470:

469:

468:

467:

466:

465:

464:

463:

461:

451:

450:

449:

447:

436:

435:

434:

433:

432:

431:

430:

429:

427:

415:

414:

413:

411:

400:

399:

398:

397:

396:

395:

394:

393:

391:

379:

378:

377:

375:

364:

363:

362:

361:

360:

359:

358:

357:

355:

344:

343:

342:

341:

340:

338:

327:

326:

325:

324:

323:

321:

310:

309:

308:

307:

306:

304:

292:

291:

290:

288:

272:

271:

270:

253:

252:

249:

246:

243:

240:

237:

236:

204:

193:

179:

174:

141:

129:

122:

115:

114:

103:

102:

101:is shown below.

77:

71:

69:

62:

61:

46:

12:

11:

5:

578:

576:

568:

567:

566:

565:

553:

552:

548:

547:

538:

537:

532:

531:

528:

524:

521:

515:

510:

505:

501:

500:

497:

494:

491:

488:

485:

478:

477:

462:

459:

457:

456:

455:

454:

453:

452:

448:

443:

441:

440:

439:

438:

437:

428:

423:

421:

420:

419:

418:

417:

416:

412:

407:

405:

404:

403:

402:

401:

392:

387:

385:

384:

383:

382:

381:

380:

376:

371:

369:

368:

367:

366:

365:

356:

351:

349:

348:

347:

346:

345:

339:

334:

332:

331:

330:

329:

328:

322:

317:

315:

314:

313:

312:

311:

305:

300:

298:

297:

296:

295:

294:

293:

289:

284:

282:

279:

278:

277:

276:

275:

264:

263:

260:

257:

235:

234:

233:

232:

223:

222:

221:

215:

208:You are free:

195:

194:

181:

178:

175:

171:

170:

167:

163:

162:

157:

153:

152:

147:

143:

142:

132:

123:

121:

118:

116:

111:

110:

89:

79:

78:

40:

36:

35:

34:

29:

24:

19:

13:

10:

9:

6:

4:

3:

2:

577:

564:

561:

560:

558:

557:

556:

550:

546:

543:

541:

535:

527:

525:Cosmia Nebula

522:

516:

514:

511:

509:

506:

503:

502:

498:

495:

492:

489:

486:

484:

483:

481:

475:

446:

426:

410:

390:

374:

354:

337:

320:

303:

287:

230:

227:

226:

224:

219:

216:

213:

210:

209:

207:

206:

202:

199:

187:

176:

168:

164:

161:

158:

154:

144:

138:

133:

126:

119:

107:

100:

99:

94:

90:

83:

82:

76:

67:

66:Original file

58:

54:

50:

44:

39:

33:

30:

28:

25:

23:

20:

18:

15:

554:

539:

517:1,426 × 630

479:

476:File history

228:

217:

211:

136:

106:You can help

96:

64:

22:File history

460:6 June 2021

389:copyrighted

319:transformer

229:attribution

150:6 June 2021

128:Description

536:File usage

493:Dimensions

241:CC BY 4.0

27:File usage

490:Thumbnail

487:Date/Time

445:inception

302:attention

177:Licensing

137:English:

72:image/png

262:Captions

218:to remix

212:to share

203:license.

519:(18 KB)

504:current

499:Comment

353:encoder

336:decoder

286:depicts

266:English

169:dvgodoy

120:Summary

68:

166:Author

156:Source

496:User

250:true

247:true

146:Date

17:File

55:|

51:|

45:.

108:.

74:)

59:.
