The convergence of multilayered GMDH algorithms was investigated. It was shown that some multilayered algorithms have a "multilayerness error", analogous to the static error of control systems. In 1977 a solution of objective systems analysis problems by multilayered GMDH algorithms was proposed. It turned out that sorting-out by an ensemble of criteria finds the only optimal system of equations, and therefore shows the elements of a complex object and their main input and output variables.

1887:

The problem of modeling noisy data with an incomplete information basis was solved. Multicriteria selection and the use of additional a priori information to increase noise immunity were proposed. The best experiments showed that, when the definition of the optimal model is extended by an additional criterion, the noise level can be ten times greater than the signal. It was then improved using

Many important theoretical results were obtained. It became clear that full physical models cannot be used for long-term forecasting. It was proved that non-physical GMDH models are more accurate for approximation and forecasting than the physical models of regression analysis. Two-level algorithms, which use two different time scales for modeling, were developed.


is characterized by the application of only the regularity criterion to problems of identification, pattern recognition and short-term forecasting. Polynomials, logical nets, fuzzy Zadeh sets and Bayes probability formulas were used as reference functions. The authors were encouraged by the very high

2206:

Another important approach to the consideration of partial models, which is becoming increasingly popular, is a combinatorial search that is either limited or full. This approach has some advantages over Polynomial Neural Networks, but requires considerable computational power and thus is not effective for

2197:

with a polynomial activation function of neurons. Therefore, an algorithm with such an approach is usually referred to as a GMDH-type Neural Network or Polynomial Neural Network. Li showed that a GMDH-type neural network performed better than classical forecasting algorithms such as Single Exponential

2207:

objects with a large number of inputs. An important achievement of Combinatorial GMDH is that it fully outperforms the linear regression approach if the noise level in the input data is greater than zero. It guarantees that the optimal model will be found during exhaustive sorting.

2188:

There are many different ways to choose an order for partial models consideration. The very first consideration order used in GMDH and originally called multilayered inductive procedure is the most popular one. It is a sorting-out of gradually complicated models generated from

275:

First, we split the full dataset into two parts: a training set and a validation set. The training set would be used to fit more and more model parameters, and the validation set would be used to decide which parameters to include, and when to stop fitting completely.
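The split described above can be sketched in a few lines of Python. This is only an illustration: the function name `split_train_validation`, the 30% validation fraction, and the fixed seed are assumptions made here, not part of the method's definition.

```python
import random


def split_train_validation(rows, validation_fraction=0.3, seed=0):
    """Shuffle the rows and return (training, validation) lists.

    The fraction and the seed are illustrative choices only.
    """
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must lie strictly between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * validation_fraction))
    # Keep at least one row on each side of the split.
    n_val = min(max(n_val, 1), len(shuffled) - 1)
    return shuffled[n_val:], shuffled[:n_val]


# Ten toy observations: ((x1, x2), y)
rows = [((float(s), float(s % 3)), 2.0 * s) for s in range(10)]
train, val = split_train_validation(rows)
```

The training part is then used to fit parameters and the validation part only to compare candidate models.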

707:

$$Y(x_1,\dots,x_n)=a_0+\sum_{i=1}^{n}a_i x_i+\sum_{i=1}^{n}\sum_{j=i}^{n}a_{ij}x_i x_j+\sum_{i=1}^{n}\sum_{j=i}^{n}\sum_{k=j}^{n}a_{ijk}x_i x_j x_k+\cdots$$
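To make the structure of this polynomial concrete, the following sketch enumerates its terms up to a chosen degree and evaluates a model that uses only a subset of them. The helper names are hypothetical, inputs are indexed from 0 rather than 1, and truncating at a maximum degree is an assumption made for the example.

```python
from itertools import combinations_with_replacement


def kolmogorov_gabor_terms(n_inputs, max_degree):
    """Return index tuples for all monomials up to max_degree.

    The empty tuple stands for a_0; (i, j) stands for x_i * x_j with i <= j,
    matching the nested sums whose inner index starts at the outer one.
    """
    terms = []
    for degree in range(max_degree + 1):
        terms.extend(combinations_with_replacement(range(n_inputs), degree))
    return terms


def evaluate(coefficients, x):
    """Evaluate the sum of a_term * prod(x[i] for i in term)."""
    total = 0.0
    for term, a in coefficients.items():
        product = 1.0
        for i in term:
            product *= x[i]
        total += a * product
    return total


# 3 inputs, degree <= 2: 1 constant + 3 linear + 6 quadratic terms.
terms = kolmogorov_gabor_terms(n_inputs=3, max_degree=2)

# A model that keeps only three of those terms: 1 + 2*x0 - x1*x2.
coefficients = {(): 1.0, (0,): 2.0, (1, 2): -1.0}
value = evaluate(coefficients, [1.0, 2.0, 3.0])
```

The number of candidate terms grows quickly with the number of inputs and the degree, which is why a model normally keeps only a subset of them.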

501:

2111:

The model is structured as a feedforward neural network without restrictions on depth. The authors had a procedure for automatic generation of the model structure, which imitates the process of biological selection with pairwise genetic features.

2009:

2233:

In contrast to GMDH-type neural networks

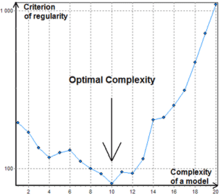

the Combinatorial algorithm usually does not stop at a certain level of complexity, because a point where the criterion value increases may simply be a local minimum (see Fig. 1).

2171:

the new algorithms (AC, OCC, PF) for non-parametric modeling of fuzzy objects and SLP for expert systems were developed and investigated. The present stage of GMDH development can be described as a blossoming of

2062:

Criterion of Minimum bias or Consistency – the squared difference between the estimated outputs (or coefficient vectors) of two models fit on the A and B sets, divided by the squared predictions on the B set.
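One possible reading of the minimum-bias criterion is sketched below. It assumes that both models are evaluated on the same points and that "divided by squared predictions on the B set" means normalising by the sum of squared outputs of the model fit on B; the function name `minimum_bias` is hypothetical.

```python
def minimum_bias(predictions_a, predictions_b):
    """Sum of squared differences between the two models' outputs,
    normalised by the sum of squared outputs of the B-fitted model."""
    if len(predictions_a) != len(predictions_b):
        raise ValueError("both models must be evaluated on the same points")
    numerator = sum((pa - pb) ** 2 for pa, pb in zip(predictions_a, predictions_b))
    denominator = sum(pb ** 2 for pb in predictions_b)
    if denominator == 0.0:
        raise ValueError("normalising term is zero")
    return numerator / denominator


# Outputs of a model fit on A and a model fit on B at the same three points.
bias = minimum_bias([1.0, 2.0, 3.0], [1.0, 2.0, 4.0])
```

A value near zero means the two fits agree, that is, the model structure is consistent across the two subsets.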

178:

308:

1523:

More sophisticated methods for deciding when to terminate are possible. For example, one might keep running the algorithm for several more steps, in the hope of passing a temporary rise in

30:

is a family of inductive algorithms for computer-based mathematical modeling of multi-parametric datasets that features fully automatic structural and parametric optimization of models.

1492:

1381:

1288:

, they proposed "noise-immune modelling": the higher the noise, the fewer parameters the optimal model must have, since the noisy channel does not allow more bits to be sent through.

1144:

1066:

702:

$$y\approx f_{(i,j);a,b,c,d,e,f}(x_i,x_j):=a_{i,j}+b_{i,j}x_i+c_{i,j}x_j+d_{i,j}x_i^2+e_{i,j}x_j^2+f_{i,j}x_i x_j\quad \forall\, 1\leq i<j\leq k$$

GMDH algorithms are characterized by an inductive procedure that sorts out gradually more complicated polynomial models and selects the best solution by means of the


Li, Rita Yi Man; Fong, Simon; Chong, Kyle Weng Sang (2017). "Forecasting the REITs and stock indices: Group Method of Data

Handling Neural Network approach".

2789:

Takao, S.; Kondo, S.; Ueno, J.; Kondo, T. (2017). "Deep feedback GMDH-type neural network and its application to medical image analysis of MRI brain images".

1071:

We do not want to accept all the polynomial models, since that would give too many models. To select only the best subset of these models, we run each model on the validation dataset, and select the models whose mean-square-error is below a threshold. We also write down the smallest mean-square-error achieved as $\mathrm{minMSE}_1$.
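The selection step can be sketched as follows. The candidate models here are plain Python callables standing in for fitted polynomial units, and the names `select_models` and `mean_squared_error` as well as the threshold value are assumptions for illustration.

```python
def mean_squared_error(model, dataset):
    """Average squared error of a callable model over (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in dataset) / len(dataset)


def select_models(candidates, validation_set, threshold):
    """Keep candidates whose validation MSE is below the threshold.

    Returns the surviving models and the smallest MSE over all candidates.
    """
    scores = {name: mean_squared_error(m, validation_set)
              for name, m in candidates.items()}
    kept = {name: candidates[name]
            for name, mse in scores.items() if mse < threshold}
    return kept, min(scores.values())


# Toy validation set: ((x1, x2), y) with y = x1 + x2.
validation_set = [((1.0, 2.0), 3.0), ((2.0, 1.0), 3.0), ((0.0, 4.0), 4.0)]
candidates = {
    "sum": lambda x: x[0] + x[1],
    "first": lambda x: x[0],
    "product": lambda x: x[0] * x[1],
}
kept, min_mse = select_models(candidates, validation_set, threshold=1.0)
```

Only the models in `kept` feed the next layer, while `min_mse` is the quantity that is tracked from layer to layer.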

2121:

2075:

1902:

2304:— GMDH-based, predictive analytics and time series forecasting software. Free Academic Licensing and Free Trial version available. Windows-only.

2930:

2675:

Ivakhnenko, Aleksei G., and

Grigorii A. Ivakhnenko. "Problems of further development of the group method of data handling algorithms. Part I."

2920:

2925:

2390:

2071:

Like linear regression, which fits a linear equation over data, GMDH fits arbitrarily high orders of polynomial equations over data.

2915:

. The best model is indicated by the minimum of the external criterion characteristic. The multilayered procedure is equivalent to the

2720:

2585:

2380:

2289:

2129:

2086:

2056:

87:

279:

GMDH starts by considering a degree-2 polynomial in 2 variables. Suppose we want to predict the target using just the

64:

Other names include "polynomial feedforward neural network", or "self-organization of models". It was one of the first

2935:

50:

2295:

2194:

1566:

Instead of a degree-2 polynomial in 2 variables, each unit may use higher-degree polynomials in more variables:

1421:

1304:

1221:

2176:

neuronets and parallel inductive algorithms for multiprocessor computers. Such a procedure is currently used in

1418:

is smaller than the previous one, the process continues, giving us increasingly deep models. As soon as some

2329:

1293:

1074:

2769:

1026:

662:

1218:

models. We now run the models on the training dataset, to obtain a sequence of transformed observations:

2626:(Modern Analytic and Computational Methods in Science and Mathematics, v.8 ed.). American Elsevier.

2120:

2098:

$$y\approx f_{a,b,c,d,e,f}(x_i,x_j):=a+bx_i+cx_j+dx_i^2+ex_j^2+fx_i x_j$$
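Because the polynomial is linear in its six parameters, fitting it is an ordinary least-squares problem. The sketch below solves the normal equations with a small Gaussian-elimination helper so that it needs no external library; the function names are hypothetical, and a production implementation would more likely call a numerical linear-algebra routine.

```python
def quad_features(xi, xj):
    """Feature vector [1, xi, xj, xi^2, xj^2, xi*xj] for one observation."""
    return [1.0, xi, xj, xi * xi, xj * xj, xi * xj]


def solve_linear(matrix, rhs):
    """Solve a square system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: inputs may be collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * solution[c] for c in range(r + 1, n))
        solution[r] = (aug[r][n] - tail) / aug[r][r]
    return solution


def fit_quadratic_unit(points, targets):
    """Return [a, b, c, d, e, f] minimising the squared error on the data."""
    rows = [quad_features(xi, xj) for xi, xj in points]
    m = 6
    gram = [[sum(r[p] * r[q] for r in rows) for q in range(m)] for p in range(m)]
    moment = [sum(r[p] * y for r, y in zip(rows, targets)) for p in range(m)]
    return solve_linear(gram, moment)


# Noise-free toy data generated from known parameters on a 4 x 4 grid.
true_params = [1.0, 2.0, -1.0, 0.5, 0.25, 3.0]
points = [(float(u), float(v)) for u in range(4) for v in range(4)]
targets = [sum(p * t for p, t in zip(true_params, quad_features(u, v)))
           for u, v in points]
coefficients = fit_quadratic_unit(points, targets)
```

On noise-free data the fit recovers the generating parameters up to rounding error; with noisy data the same call returns the least-squares estimate.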

2104:

Inspired by an analogy between constructing a model out of noisy data, and sending messages through a

1892:

And more generally, a GMDH model with multiple inputs and one output is a subset of components of the

2150:

203:

2421:

2774:

2105:

54:

38:

1297:

Fig.1. A typical distribution of minimal errors. The process terminates when a minimum is reached.

2841:

2806:

2638:

2563:

2537:

560:

506:

1149:

305:

parts of the observation, and using only degree-2 polynomials, then the most we can do is this:

2658:

2555:

2480:

2441:

2386:

2090:

554:

2229:

For the selected model of optimal complexity, recalculates the coefficients on the whole data sample.
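The steps of the basic Combinatorial algorithm can be sketched as an exhaustive search. Several choices below are assumptions made for the example rather than part of the algorithm's definition: partial models are linear in a subset of the inputs, the external criterion is the mean squared error on sample B, and all function names are hypothetical.

```python
from itertools import combinations


def solve_linear(matrix, rhs):
    """Solve a square system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: inputs may be collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * solution[c] for c in range(r + 1, n))
        solution[r] = (aug[r][n] - tail) / aug[r][r]
    return solution


def fit_linear(rows, targets, subset):
    """Least-squares fit of y ~ c0 + sum(c_i * x_i for i in subset)."""
    design = [[1.0] + [row[i] for i in subset] for row in rows]
    m = len(subset) + 1
    gram = [[sum(d[p] * d[q] for d in design) for q in range(m)] for p in range(m)]
    moment = [sum(d[p] * y for d, y in zip(design, targets)) for p in range(m)]
    return solve_linear(gram, moment)


def mse(coeffs, rows, targets, subset):
    """Mean squared error of a fitted partial model on the given sample."""
    total = 0.0
    for row, y in zip(rows, targets):
        prediction = coeffs[0] + sum(c * row[i] for c, i in zip(coeffs[1:], subset))
        total += (prediction - y) ** 2
    return total / len(rows)


def combi(rows_a, y_a, rows_b, y_b):
    """Fit every input subset on A, score it on B, refit the best on A + B."""
    n_inputs = len(rows_a[0])
    best_score, best_subset = None, None
    for size in range(1, n_inputs + 1):          # steadily increasing complexity
        for subset in combinations(range(n_inputs), size):
            coeffs = fit_linear(rows_a, y_a, subset)   # coefficients from A
            score = mse(coeffs, rows_b, y_b, subset)   # external criterion on B
            if best_score is None or score < best_score:
                best_score, best_subset = score, subset
    final = fit_linear(rows_a + rows_b, y_a + y_b, best_subset)
    return best_subset, final, best_score


# Toy data: y depends on inputs 0 and 2 only; input 1 is irrelevant.
rows = [[float(s), float((s * s) % 5), float((3 * s) % 7)] for s in range(12)]
targets = [1.0 + 2.0 * r[0] - 3.0 * r[2] for r in rows]
subset, coeffs, score = combi(rows[:8], targets[:8], rows[8:], targets[8:])
weights = dict(zip(subset, coeffs[1:]))
```

The search visits every non-empty subset of inputs, so its cost doubles with each additional input, which is the computational burden that limits the approach to objects with a modest number of inputs.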

2833:

2798:

2650:

2547:

2510:

2472:

2461:"Learning polynomial feedforward neural networks by genetic programming and backpropagation"

2433:

2217:

Generates subsamples from A according to partial models with steadily increasing complexity.

1526:

1386:

2755:"The Review of Problems Solvable by Algorithms of the Group Method of Data Handling (GMDH)"

1194:

2677:

Pattern

Recognition and Image Analysis c/c of raspoznavaniye obrazov i analiz izobrazhenii

46:

2301:

1497:

636:

610:

282:

2074:

To choose between models, two or more subsets of a data sample are used, similar to the

2886:

2620:

) is discarded, as it has overfit the training set. The previous layers are output.
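The stopping rule can be expressed as a short function over the per-layer minimum validation errors. The name `layers_to_keep` and the example numbers are illustrative assumptions; the sketch implements only the simple rule, not the more patient variants.

```python
def layers_to_keep(min_mse_per_layer):
    """Return how many layers survive under the simple stopping rule.

    Layers are added while each minimum validation MSE does not exceed the
    previous one; the first layer that makes it rise is discarded, together
    with anything after it.
    """
    for index in range(1, len(min_mse_per_layer)):
        if min_mse_per_layer[index] > min_mse_per_layer[index - 1]:
            return index
    return len(min_mse_per_layer)


# The error rises at the fourth layer, so only the first three are kept,
# even though a fifth layer would have done better.
depth = layers_to_keep([0.9, 0.5, 0.3, 0.35, 0.2])
```

The example also shows the weakness of the simple rule: it stops at the first rise and never sees the lower error that a later layer would have reached.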

255:

183:

2729:

2909:

2880:

2845:

2177:

2173:

2049:

2041:

65:

2810:

2567:

2143:

accuracy of forecasting with the new approach. Noise immunity was not investigated.

61:. The last section of contains a summary of the applications of GMDH in the 1970s.

2754:

2437:

2226:

Chooses the best model (or set of models) indicated by the minimal value of the criterion.

2094:

2837:

2594:

2499:

2551:

84:

This is the general problem of statistical modelling of data: Consider a dataset

2654:

2460:

2335:

42:

34:

2897:

2396:

2802:

2514:

2319:

2198:

Smooth, Double Exponential Smooth, ARIMA and back-propagation neural network.

2691:

Perspectives of Planning. Organization of Economic Cooperation and Development

2662:

2528:

Schmidhuber, Jürgen (2015). "Deep learning in neural networks: An overview".

2484:

2445:

2341:

$$Y(x_1,\dots,x_n)=a_0+\sum_{i=1}^{m}a_i f_i$$

2476:

2276:

Algorithm on the base of Multilayered Theory of Statistical Decisions (MTSD)

2220:

Estimates the coefficients of the partial models at each layer of model complexity.

2082:

2559:

2307:

2862:

2347:

272:

to predict. How to best predict the target based on the observations?

2044:

on the validation set, as given above. The most common criteria are:

2040:

External criteria are optimization objectives for the model, such as

2313:

659:

we should choose, so we choose all of them. That is, we perform all

17:

2637:

Ivakhnenko, A.G.; Savchenko, E.A.; Ivakhnenko, G.A. (October 2003).

2881:

Heuristic Self-Organization in Problems of Engineering Cybernetics

2542:

2325:

2119:

1292:

2310:— Commercial product. Mac OS X-only. Free Demo version available.

2722:

Inductive Method of Models Self-organisation for Complex Systems

2587:

Pomekhoustojchivost' Modelirovanija (Noise Immunity of Modeling)

2354:

Python library of basic GMDH algorithms (COMBI, MULTI, MIA, RIA)

2353:

2133:

2021:

are elementary functions dependent on different sets of inputs,

2223:

Calculates the value of the external criterion for the models on sample B.

1191:

Suppose that after this process, we have obtained a set of

2899:

Inductive Learning Algorithms for Complex Systems Modeling

2891:

Self-Organizing Methods in Modelling: GMDH Type Algorithms

2382:

Inductive Learning Algorithms for Complex Systems Modeling

2124:

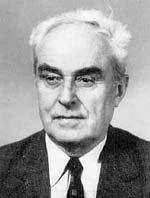

GMDH author – Soviet scientist Prof. Alexey G. Ivakhnenko.

68:

methods, used to train an eight-layer neural net in 1971.

2210:

The basic Combinatorial algorithm performs the following steps:

1494:, the algorithm terminates. The last layer fitted (layer

2214:

Divides the data sample into at least two samples, A and B.


principle of "freedom of decisions choice", and the

. New York, Basel: Marcel Dekker Inc., 1984, 350 p.

2298:— Free upon request for academic use. Windows-only.

$\{(x_1,\dots,x_k;y)_s\}_{s=1:n}$

2867:

2619:

2507:IEEE Transactions on Systems, Man, and Cybernetics


2883:, Automatica, vol.6, 1970 — p. 207-219.

2639:"Problems of future GMDH algorithms development"

2032:is the number of the base function components.

2267:Pointing Finger (PF) clusterization algorithm;

8:

149:

91:

2753:Ivakhnenko, O.G.; Ivakhnenko, G.A. (1995).

2128:The method was originated in 1968 by Prof.

2584:Ivakhnenko, O.G.; Stepashko, V.S. (1985).

$\mathrm{minMSE}_{L+1}>\mathrm{minMSE}_{L}$

1290:. The same algorithm can now be run again.

2773:

2541:

2279:Group of Adaptive Models Evolution (GAME)


$\mathrm{minMSE}_1,\mathrm{minMSE}_2,\dots$


$z_1,z_2,\dots,z_{k_1}$


633:we have chosen, and we do not know which


2264:Objective Computer Clusterization (OCC);

2379:Madala, H.R.; Ivakhnenko, O.G. (1994).

2366:

2762:Pattern Recognition and Image Analysis

2622:Cybernetics and Forecasting Techniques

2500:"Polynomial theory of complex systems"

2459:Nikolaev, N.Y.; Iba, H. (March 2003).

2826:Pacific Rim Property Research Journal

2728:. Kyiv: Naukova Dumka. Archived from

2643:Systems Analysis Modelling Simulation

2618:Ivakhnenko, O.G.; Lapa, V.G. (1967).

2593:. Kyiv: Naukova Dumka. Archived from

1139:{\displaystyle f_{(i,j);a,b,c,d,e,h}}

7:

2579:

2577:

2465:IEEE Transactions on Neural Networks

2420:Farlow, Stanley J. (November 1981).

2415:

2413:

2374:

2372:

2370:

1061:{\displaystyle {\frac {1}{2}}k(k-1)}

697:{\displaystyle {\frac {1}{2}}k(k-1)}

28:Group method of data handling (GMDH)

2132:in the Institute of Cybernetics in

1961:

1803:

1781:

1759:

1699:

1677:

1631:

1301:The algorithm continues, giving us

2863:Library of GMDH books and articles

2422:"The GMDH Algorithm of Ivakhnenko"

1068:polynomial models of the dataset.

989:

25:

2153:of General Communication theory.

2101:principle of external additions.

2348:Python library of MIA algorithm

2252:Objective System Analysis (OSA)

2048:Criterion of Regularity (CR) –

988:

245:{\displaystyle x_{1},...,x_{k}}

33:GMDH is used in such fields as

2902:. CRC Press, Boca Raton, 1994.

2896:H.R. Madala, A.G. Ivakhnenko.

2708:. London: English Univ. Press.

2438:10.1080/00031305.1981.10479358

2342:R Package for regression tasks

2292:— Open source. Cross-platform.

1941:

1909:

1611:

1579:

1095:

1083:

1055:

1043:

801:

775:

734:

722:

691:

679:

384:

358:

139:

94:

1:

2931:Regression variable selection

2868:Group Method of Data Handling

2838:10.1080/14445921.2016.1225149

2261:Multiplicative–Additive (MAA)

2042:minimizing mean-squared error

252:observations, and one target

2791:Artificial Life and Robotics

2552:10.1016/j.neunet.2014.09.003

2246:Multilayered Iterative (MIA)

704:such polynomial regressions:

200:points. Each point contains

2655:10.1080/0232929032000115029

2498:Ivakhnenko, Alexey (1971).

2273:Harmonical Rediscretization

2076:train-validation-test split

600:{\displaystyle a,b,c,d,e,f}

546:{\displaystyle a,b,c,d,e,f}

2952:

2921:Artificial neural networks

2706:Cybernetics and Management

2081:GMDH combined ideas from:

1181:{\displaystyle minMSE_{1}}

81:This section is based on.

2926:Classification algorithms

2803:10.1007/s10015-017-0410-1

2515:10.1109/TSMC.1971.4308320

2426:The American Statistician

2385:. Boca Raton: CRC Press.

2270:Analogues Complexing (AC)

2195:Artificial Neural Network

2184:GMDH-type neural networks

2916:Computational statistics

2719:Ivahnenko, O.G. (1982).

2322:— Freeware, Open source.

2284:Software implementations

2477:10.1109/TNN.2003.809405

2509:. SMC-1 (4): 364–378.

2125:

2028:are coefficients and

2005:

1980:

1883:

1822:

1800:

1778:

1718:

1696:

1650:

1552:

1551:{\displaystyle minMSE}

1514:

1488:

1412:

1411:{\displaystyle minMSE}

1377:

1298:

1284:

1212:

1182:

1140:

1062:

1017:

698:

653:

627:

601:

557:. Now, the parameters

547:

497:

299:

266:

246:

194:

174:

2679:10.2 (2000): 187-194.

2316:— Commercial product.

2243:Combinatorial (COMBI)

2123:

2006:

1960:

1884:

1802:

1780:

1758:

1698:

1676:

1630:

1553:

1515:

1489:

1413:

1378:

1296:

1285:

1213:

1211:{\displaystyle k_{1}}

1183:

1141:

1063:

1018:

699:

654:

628:

602:

548:

503:where the parameters

498:

300:

267:

247:

195:

175:

77:Polynomial regression

2332:plugin, Open source.

2314:PNN Discovery client

2130:Alexey G. Ivakhnenko

2052:on a validation set.

1903:

1573:

1527:

1498:

1422:

1387:

1305:

1222:

1195:

1150:

1075:

1027:

708:

663:

637:

611:

561:

507:

309:

283:

256:

204:

184:

88:

72:Mathematical content

2693:. London: Imp.Coll.

2055:Least squares on a

1513:{\displaystyle L+1}

948:

914:

652:{\displaystyle i,j}

626:{\displaystyle i,j}

466:

445:

298:{\displaystyle i,j}

55:pattern recognition

39:knowledge discovery

2689:Gabor, D. (1971).

2258:Two-level (ARIMAD)

2202:Combinatorial GMDH

2126:

2083:black box modeling

2050:least mean squares

2001:

1879:

1548:

1510:

1484:

1408:

1383:. As long as each

1373:

1299:

1280:

1208:

1178:

1136:

1058:

1013:

934:

900:

694:

649:

623:

597:

543:

493:

452:

431:

295:

262:

242:

190:

170:

59:external criterion

2936:Soviet inventions

2879:A.G. Ivakhnenko.

2704:Beer, S. (1959).

2649:(10): 1301–1309.

2290:FAKE GAME Project

2151:Shannon's Theorem

2087:genetic selection

2036:External criteria

1038:

674:

555:linear regression

265:{\displaystyle y}

193:{\displaystyle n}


2395:. Archived from

2376:

2163:Period 1980–1988

2157:Period 1976–1979

2147:Period 1972–1975

2140:Period 1968–1971

2057:cross-validation


607:depend on which

606:

604:

603:

598:

553:are computed by


2874:Further reading


2530:Neural Networks


47:complex systems

23:

22:

15:

12:

11:

5:

2949:

2947:

2939:

2938:

2933:

2928:

2923:

2918:

2908:

2907:

2904:

2903:

2894:

2884:

2875:

2872:

2871:

2870:

2865:

2858:

2857:External links

2855:

2852:

2851:

2832:(2): 123–160.

2816:

2797:(2): 161–172.

2781:

2775:10.1.1.19.2971

2768:(4): 527–535.

2745:

2711:

2696:

2681:

2668:

2629:

2610:

2573:

2520:

2490:

2471:(2): 337–350.

2451:

2432:(4): 210–215.

2409:

2392:978-0849344381

2391:

2365:

2364:

2362:

2359:

2358:

2357:

2356:- Open source.

2351:

2350:- Open source.

2345:

2344:– Open source.

2339:

2338:– Open source.

2333:

2323:

2317:

2311:

2308:KnowledgeMiner



2735:on 2017-12-31


2600:on 2017-12-31


2399:on 2017-12-31


2191:base function

2183:

2181:

2179:

2178:deep learning

2175:

2174:deep learning

2170:

2166:

2164:

2160:

2158:

2154:

2152:

2148:

2144:

2141:

2137:

2135:

2131:

2122:

2115:

2113:

2109:

2107:

2106:noisy channel

2102:

2100:

2096:

2092:

2088:

2085:, successive


1894:base function


66:deep learning

62:

60:

56:

52:

48:

44:

40:

36:

31:

29:

19:

2898:

2890:

2829:

2825:

2819:

2794:

2790:

2784:

2765:

2761:

2748:

2737:. Retrieved

2730:the original

2721:

2714:

2705:

2699:

2690:

2684:

2676:

2671:

2646:

2642:

2632:

2621:

2613:

2602:. Retrieved

2595:the original

2586:

2533:

2529:

2523:

2506:

2493:

2468:

2464:

2454:

2429:

2425:

2401:. Retrieved

2397:the original

2381:

2320:Sciengy RPF!

2232:

2209:

2205:

2190:

2187:

2168:

2167:

2162:

2161:

2156:

2155:

2146:

2145:

2139:

2138:

2127:

2110:

2103:

2089:of pairwise

2080:

2073:

2070:

2039:

2029:

2022:

2015:

2013:

1893:

1891:

1565:

1522:

1300:

1190:

1070:

278:

274:

83:

80:

63:

58:

51:optimization

32:

27:

26:

2887:S.J. Farlow

35:data mining

2910:Categories

2739:2019-11-18

2604:2019-11-18

2536:: 85–117.

2403:2019-11-17

2361:References

2302:GMDH Shell

2255:Harmonical

2238:Algorithms

2180:networks.

2169:Since 1989

1562:In general

1023:obtaining

49:modeling,

43:prediction

2846:157150897

2770:CiteSeerX

2663:0232-9298

2543:1404.7828

2485:1045-9227

2446:0003-1305

2336:R Package


2811:44190434

2568:11715509

2560:25462637

2091:features

2116:History

2095:Gabor's

180:, with

2844:

2809:

2772:

2661:

2566:

2558:

2483:

2444:

2389:

2099:Beer's

2093:, the

2014:where

2842:S2CID

2807:S2CID

2758:(PDF)

2733:(PDF)

2726:(PDF)

2598:(PDF)

2591:(PDF)

2564:S2CID

2538:arXiv

2503:(PDF)

2326:wGMDH

2296:GEvom

1896:(1):

2659:ISSN

2556:PMID

2481:ISSN

2442:ISSN

2387:ISBN

2330:Weka

2134:Kyiv

2067:Idea

2059:set.

1457:>

1002:<

53:and


2834:doi

2799:doi

2651:doi

2548:doi

2511:doi

2473:doi

2434:doi

2912::

2889:.

2840:.

2830:23

2828:.

2805:.

2795:23

2793:.

2764:.

2760:.

2657:.

2647:43

2645:.

2641:.

2576:^

2562:.

2554:.

2546:.

2534:61

2532:.

2505:.

2479:.

2469:14

2467:.

2463:.

2440:.

2430:35

2428:.

2424:.

2412:^

2369:^

2328:—

2249:GN

2136:.

2078:.

1558:.

1188:.

805::=

388::=

45:,

41:,

37:,

2848:.

2836::

2813:.

2801::

2778:.

2766:5

2742:.

2665:.

2653::

2607:.

2570:.

2550::

2540::

2517:.

2513::

2487:.

2475::

2448:.

2436::

2406:.

2030:m

2025:i

2023:a

2018:i

2016:f

