Higher-degree polynomials would work in theory, but yield models that are not really in the spirit of LOESS. LOESS is based on the ideas that any function can be well approximated in a small neighborhood by a low-order polynomial and that simple models can be fit to data easily. High-degree polynomials would tend to overfit the data in each subset and are numerically unstable, making accurate computations difficult.

In nonlinear regression, on the other hand, it is only necessary to write down a functional form in order to provide estimates of the unknown parameters and the estimated uncertainty. Depending on the application, this could be either a major or a minor drawback to using LOESS. In particular, the simple form of LOESS cannot be used for mechanistic modelling, where fitted parameters specify particular physical properties of a system.

In addition, LOESS is very flexible, making it ideal for modeling complex processes for which no theoretical models exist. These two advantages, combined with the simplicity of the method, make LOESS one of the most attractive of the modern regression methods for applications that fit the general framework of least squares regression but which have a complex deterministic structure.

It does this by fitting simple models to localized subsets of the data to build up a function that describes the deterministic part of the variation in the data, point by point. In fact, one of the chief attractions of this method is that the data analyst is not required to specify a global function of any form to fit a model to the data, only to fit segments of the data.

, giving more weight to points near the point whose response is being estimated and less weight to points further away. The value of the regression function for the point is then obtained by evaluating the local polynomial using the explanatory variable values for that data point. The LOESS fit is complete after regression function values have been computed for each of the $n$

As mentioned above, the weight function gives the most weight to the data points nearest the point of estimation and the least weight to the data points that are furthest away. The use of the weights is based on the idea that points near each other in the explanatory variable space are more likely to be related to each other in a simple way than points that are further apart. Following this logic, points that are likely to follow the local model best influence the local model parameter estimates the most. Points that are less likely to actually conform to the local model have less influence on the local model parameter estimates.

The trade-off for these features is increased computation. Because it is so computationally intensive, LOESS would have been practically impossible to use in the era when least squares regression was being developed. Most other modern methods for process modeling are similar to LOESS in this respect.

Another disadvantage of LOESS is the fact that it does not produce a regression function that is easily represented by a mathematical formula. This can make it difficult to transfer the results of an analysis to other people. In order to transfer the regression function to another person, they would

LOESS makes less efficient use of data than other least squares methods. It requires fairly large, densely sampled data sets in order to produce good models. This is because LOESS relies on the local data structure when performing the local fitting. Thus, LOESS provides less complex data analysis in

However, any other weight function that satisfies the properties listed in Cleveland (1979) could also be used. The weight for a specific point in any localized subset of data is obtained by evaluating the weight function at the distance between that point and the point of estimation, after scaling

Although it is less obvious than for some of the other methods related to linear least squares regression, LOESS also accrues most of the benefits typically shared by those procedures. The most important of those is the theory for computing uncertainties for prediction and calibration. Many other

As discussed above, the biggest advantage LOESS has over many other methods is that the process of fitting a model to the sample data does not begin with the specification of a function. Instead, the analyst only has to provide a smoothing parameter value and the degree of the local polynomial.

The subsets of data used for each weighted least squares fit in LOESS are determined by a nearest neighbors algorithm. A user-specified input to the procedure called the "bandwidth" or "smoothing parameter" determines how much of the data is used to fit each local polynomial. The smoothing parameter, $\alpha$

The local polynomials fit to each subset of the data are almost always of first or second degree; that is, either locally linear (in the straight line sense) or locally quadratic. Using a zero degree polynomial turns LOESS into a weighted moving average.

is, the closer the regression function will conform to the data. Using too small a value of the smoothing parameter is not desirable, however, since the regression function will eventually start to capture the random error in the data.
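The effect of the smoothing parameter can be illustrated with a minimal sketch. It assumes a single explanatory variable with distinct values, tri-cube weights, and NumPy's `polyfit` for each local fit. The name `loess_fit` and its arguments are illustrative only and are not taken from any particular library.

```python
import math

import numpy as np


def loess_fit(x, y, alpha, degree=1):
    """Evaluate a simple LOESS fit at every observed x (illustrative sketch)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    # Number of points in each local subset: n * alpha, rounded up,
    # but never fewer than the degree + 1 points a polynomial needs.
    q = min(n, max(degree + 1, math.ceil(alpha * n)))
    fitted = np.empty(n)
    for i, x0 in enumerate(x):
        dist = np.abs(x - x0)
        idx = np.argsort(dist, kind="stable")[:q]  # q nearest neighbours
        u = dist[idx] / dist[idx].max()            # scale distances to [0, 1]
        w = (1.0 - u**3) ** 3                      # tri-cube weights
        # polyfit minimises sum((w_i * residual_i)**2), so pass sqrt(w)
        # to obtain a weighted least squares fit with weights w.
        coeffs = np.polyfit(x[idx] - x0, y[idx], degree, w=np.sqrt(w))
        fitted[i] = coeffs[-1]                     # value of the local polynomial at x0
    return fitted
```

With noisy data, a call such as `loess_fit(x, y, alpha=0.1)` should follow the observations more closely than `loess_fit(x, y, alpha=0.9)`, at the cost of picking up some of the random error.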

They address situations in which the classical procedures do not perform well or cannot be effectively applied without undue labor. LOESS combines much of the simplicity of linear least squares regression with the flexibility of nonlinear regression.

data points. Many of the details of this method, such as the degree of the polynomial model and the weights, are flexible. The range of choices for each part of the method and typical defaults are briefly discussed next.

These methods have been consciously designed to use our current computational ability to the fullest possible advantage to achieve goals not easily achieved by traditional approaches.

Finally, as discussed above, LOESS is a computationally intensive method (with the exception of evenly spaced data, where the regression can then be phrased as a non-causal

points (rounded to the next largest integer) whose explanatory variables' values are closest to the point at which the response is being estimated.
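The selection of a local subset described here can be sketched as follows, assuming a one-dimensional explanatory variable and absolute distance. The function name `local_subset` is hypothetical, and "rounded to the next largest integer" is interpreted as rounding up.

```python
import math

import numpy as np


def local_subset(x, x0, alpha):
    """Return the indices of the ceil(n * alpha) points of x closest to x0."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    q = min(n, math.ceil(alpha * n))     # subset size, rounded up
    order = np.argsort(np.abs(x - x0), kind="stable")
    return np.sort(order[:q])            # indices in their original order
```

For ten equally spaced points and a smoothing parameter of 0.25, the subset would contain three points, since 2.5 is rounded up to 3.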

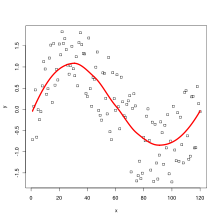

criterion variable. When each smoothed value is given by a weighted linear least squares regression over the span, this is known as a

filter). LOESS is also prone to the effects of outliers in the data set, like other least squares methods. There is an iterative, robust

636:, particularly when each smoothed value is given by a weighted quadratic least squares regression over the span of values of the

753:

of data points that are used in each local fit. The subset of data used in each weighted least squares fit thus comprises the

Garimella, Rao Veerabhadra (22 June 2017). "A Simple Introduction to Moving Least Squares and Local Regression Estimation".

Regression Modeling Strategies: With Applications to Linear Models, Logistic and Ordinal Regression, and Survival Analysis

where $d$ is the distance of a given data point from the point on the curve being fitted, scaled to lie in the range from 0 to 1.

$\alpha$ is called the smoothing parameter because it controls the flexibility of the LOESS regression function. Large values of $\alpha$

$$\operatorname{RSS}_{x}(A) = \sum_{i=1}^{N} (y_{i} - A\hat{x}_{i})^{T} w_{i}(x) (y_{i} - A\hat{x}_{i}).$$

445:

414:

383:

310:

2764:

2310:

661:

607:

2759:

2370:

596:

404:

393:

357:

264:

1250:

1055:

the distance so that the maximum absolute distance over all of the points in the subset of data is exactly one.
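The scaling step and the tri-cube weight function $w(d) = (1 - |d|^{3})^{3}$ can be combined in a short sketch. It assumes one-dimensional data, and the name `tricube_weights` is chosen here for illustration.

```python
import numpy as np


def tricube_weights(x_subset, x0):
    """Tri-cube weights for the points of a local subset around x0."""
    dist = np.abs(np.asarray(x_subset, dtype=float) - x0)
    d_max = dist.max()
    if d_max == 0.0:
        # All points coincide with x0: give them equal weight.
        return np.ones_like(dist)
    d = dist / d_max                 # largest scaled distance is exactly one
    return (1.0 - d**3) ** 3
```

The point of estimation itself receives weight 1, and the furthest point of the subset receives weight 0.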

465:

3262:

3253:

3192:

3188:

3172:

810:

668:

rediscovered the method in 1979 and gave it a distinct name. The method was further developed by

Cleveland and

618:

336:

259:

152:

131:

50:

44:

1125:

2714:

495:

388:

produce the smoothest functions that wiggle the least in response to fluctuations in the data. The smaller $\alpha$

1544:

976:

960:

692:

352:

347:

289:

61:

$w$ is a metric, it is a symmetric, positive-definite matrix and, as such, there is another symmetric matrix $h$ such that $w = h^{2}$

3244:

3108:

3066:

tests and procedures used for validation of least squares models can also be extended to LOESS models.

1215:

622:

542:

538:

440:

136:

3267:

3258:

478:

3249:

3061:. Laboratory for Computational Statistics. LCS Technical Report 5, SLAC PUB-3466. Stanford University.

1186:

1096:

2988:

2956:

2914:

2769:

2744:

2707:

684:

665:

614:

460:

450:

331:

299:

254:

233:

141:

1844:

In 1964, Savitzky and Golay proposed a method equivalent to LOESS, which is commonly referred to as the Savitzky–Golay filter.

1926:

1506:

967:

378:

279:

274:

228:

177:

167:

112:

3234:

2892:

3055:

3014:

2976:

2936:

632:

A smooth curve through a set of data points obtained with this statistical technique is called a

483:

212:

197:

1964:

2121:

1880:

$$y^{T} w y = (hy)^{T}(hy) = \operatorname{Tr}(h y y^{T} h) = \operatorname{Tr}(w y y^{T})$$
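This chain of equalities can be checked numerically. The sketch below builds an arbitrary symmetric positive-definite matrix $w$, takes its symmetric square root $h$ through an eigendecomposition, and returns the three quantities. The function name and the random test matrix are assumptions made for illustration.

```python
import numpy as np


def trace_identity_terms(m=3, seed=0):
    """Return (y^T w y, (hy)^T (hy), Tr(w y y^T)) for a random SPD matrix w."""
    rng = np.random.default_rng(seed)
    b = rng.normal(size=(m, m))
    w = b @ b.T + m * np.eye(m)                  # symmetric positive definite
    evals, evecs = np.linalg.eigh(w)
    h = evecs @ np.diag(np.sqrt(evals)) @ evecs.T  # symmetric, with h @ h == w
    y = rng.normal(size=m)
    quadratic_form = y @ w @ y
    squared_norm = (h @ y) @ (h @ y)
    trace_form = np.trace(w @ np.outer(y, y))
    return quadratic_form, squared_norm, trace_form
```

All three returned values should agree up to floating-point rounding.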

3136:

3096:

3040:

2959:(1981). "LOWESS: A program for smoothing scatterplots by robust locally weighted regression".

2739:

2718:

2574:

2147:

854:

756:

688:

269:

172:

126:

3130:

1652:

916:

896:

876:

790:

732:

3088:

3006:

2995:(1988). "Locally-Weighted Regression: An Approach to Regression Analysis by Local Fitting".

2968:

2928:

2830:

2541:

1061:

564:

223:

2948:

1817:

3121:

3079:

2992:

2944:

669:

455:

162:

2749:

2183:

2101:

1906:

1632:

1612:

1486:

1166:

944:

698:

534:

207:

3307:

326:

202:

1058:

Consider the following generalisation of the linear regression model with a metric

192:

3034:

1183:

input parameters and that, as customary in these cases, we embed the input space

641:

238:

187:

680:

606:-based meta-model. In some fields, LOESS is known and commonly referred to as

100:

with uniform noise added. The LOESS curve approximates the original sine wave.

$$w(x,z) = \exp\left(-\frac{\|x-z\|^{2}}{2\alpha^{2}}\right)$$

3283:

Nate Silver, How

Opinion on Same-Sex Marriage Is Changing, and What It Means

957:

97:

3230:

Smoothing by Local

Regression: Principles and Methods (PostScript Document)

2725:, but too many extreme outliers can still overcome even the robust method.

$$A(x) = Y W(x) \hat{X}^{T} \left(\hat{X} W(x) \hat{X}^{T}\right)^{-1}$$
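The closed-form minimiser can be evaluated directly with NumPy. The sketch below assumes scalar Gaussian weights $w_{i}(x)$ on the diagonal of $W(x)$, inputs stored as the columns of a $p \times N$ array, and outputs as the columns of an $m \times N$ array. The function names are hypothetical.

```python
import numpy as np


def local_coefficients(X, Y, x, alpha):
    """Compute A(x) = Y W X_hat^T (X_hat W X_hat^T)^{-1} with Gaussian weights."""
    X = np.asarray(X, dtype=float)               # shape (p, N)
    Y = np.asarray(Y, dtype=float)               # shape (m, N)
    N = X.shape[1]
    X_hat = np.vstack([np.ones((1, N)), X])      # embed x -> (1, x), shape (p + 1, N)
    sq_dist = np.sum((X - x[:, None]) ** 2, axis=0)
    W = np.diag(np.exp(-sq_dist / (2.0 * alpha**2)))  # N x N diagonal weights
    G = X_hat @ W @ X_hat.T                      # assumed non-singular
    # Solve G A^T = X_hat W Y^T instead of forming the inverse explicitly;
    # this is equivalent because G and W are symmetric.
    return np.linalg.solve(G, X_hat @ W @ Y.T).T  # shape (m, p + 1)


def local_prediction(A, x):
    """Evaluate the local linear model at x using the embedding (1, x)."""
    return A @ np.concatenate(([1.0], x))
```

For data that lie exactly on a line, the recovered coefficients should match the intercept and slope of that line whatever the point of estimation.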

$$\operatorname{Tr}\left(W(x)\,(Y - A\hat{X})^{T}(Y - A\hat{X})\right)$$

The above loss function can be rearranged into a trace by observing that

2917:(1979). "Robust Locally Weighted Regression and Smoothing Scatterplots".

and setting the result equal to 0, one finds the extremal matrix equation

676:

672:(1988). LOWESS is also known as locally weighted polynomial regression.

92:

3018:

2980:

2940:

3100:

17:

3092:

3010:

2972:

2932:

version of LOESS that can be used to reduce LOESS's sensitivity to outliers

91:

27:

Moving average and polynomial regression method for smoothing data

$$A \hat{X} W(x) \hat{X}^{T} = Y W(x) \hat{X}^{T}$$

1609:

enumerates input and output vectors from a training set. Since

3155:

29:

1990:

respectively, the above loss function can then be written as

2849:"scipy.signal.savgol_filter — SciPy v0.16.1 Reference Guide"

576:

573:

2735:

Degrees of freedom (statistics)#In non-standard regression

need the data set and software for LOESS calculations.

787: + 1 points for a fit, the smoothing parameter

3299:

3180:

966:

The traditional weight function used for LOESS is the

3235:

NIST Engineering

Statistics Handbook Section on LOESS

methods that combine multiple regression models in a

588:

579:

2865:

Kristen Pavlik, US Environmental

Protection Agency,

570:

3268:

The supsmu function (Friedman's SuperSmoother) in R

691:is being estimated. The polynomial is fitted using

567:

541:. Its most common methods, initially developed for

LOESS curve fitted to a population sampled from a sine wave

2897:NIST/SEMATECH e-Handbook of Statistical Methods,

1163:. Assume that the linear hypothesis is based on

2998:Journal of the American Statistical Association

2920:Journal of the American Statistical Association

3300:National Institute of Standards and Technology

2831:"Savitzky–Golay filtering – MATLAB sgolayfilt"

871:denoting the degree of the local polynomial.

503:

8:

3275:– A method to perform Local regression on a

2637:

2624:

2360:{\displaystyle {\hat {X}}W(x){\hat {X}}^{T}}

2793:

2399:{\displaystyle \operatorname {RSS} _{x}(A)}

3285:– sample of LOESS versus linear regression

1293:{\displaystyle x\mapsto {\hat {x}}:=(1,x)}

510:

496:

103:

3028:"Appendix: Nonparametric Regression in R"

2817:

exchange for greater experimental costs.

844:{\displaystyle \left(\lambda +1\right)/n}

2755:Multivariate adaptive regression splines

2307:Assuming further that the square matrix

683:is fitted to a subset of the data, with

43:This article includes a list of general

2805:

2786:

1156:{\displaystyle x,z\in \mathbb {R} ^{p}}

551:locally estimated scatterplot smoothing

432:

318:

118:

111:

3250:R: Local Polynomial Regression Fitting

3225:Local Regression and Election Modeling

3117:

3106:

3075:

3064:

2887:

2885:

2883:

2881:

749:, is the fraction of the total number

559:locally weighted scatterplot smoothing

3026:Fox, John; Weisberg, Sanford (2018).

7:

3036:An R Companion to Applied Regression

1598:{\displaystyle w_{i}(x):=w(x_{i},x)}

$$w(d) = (1 - |d|^{3})^{3}$$

2367:is non-singular, the loss function

2180:s. Differentiating with respect to

1240:{\displaystyle \mathbb {R} ^{p+1}}

675:At each point in the range of the

648:; however, some authorities treat

610:(proposed 15 years before LOESS).

49:it lacks sufficient corresponding

25:

3294: This article incorporates

3289:

3160:

1205:{\displaystyle \mathbb {R} ^{p}}

1122:that depends on two parameters,

1115:{\displaystyle \mathbb {R} ^{m}}

595:. They are two strongly related

563:

477:

34:

617:, such as linear and nonlinear

613:LOESS and LOWESS thus build on

425:Least-squares spectral analysis

363:Generalized estimating equation

183:Multinomial logistic regression

158:Vector generalized linear model

3129:Harrell, Frank E. Jr. (2015).

1870:{\displaystyle {\hat {x}}_{i}}

2144:matrix whose entries are the

1954:{\displaystyle (p+1)\times N}

1541:real matrix of coefficients,

1534:{\displaystyle m\times (p+1)}

1300:, and consider the following

779:Since a polynomial of degree

533:, is a generalization of the

244:Nonlinear mixed-effects model

3054:Friedman, Jerome H. (1984).

687:values near the point whose

3279:moving window (with R code)

1814:. By arranging the vectors

938:Degree of local polynomials

527:local polynomial regression

446:Mean and predicted response

3330:

3056:"A Variable Span Smoother"

1983:{\displaystyle {\hat {X}}}

239:Linear mixed-effects model

3259:R: Scatter Plot Smoothing

2961:The American Statistician

2760:Non-parametric statistics

2137:{\displaystyle N\times N}

1896:{\displaystyle m\times N}

720:Localized subsets of data

597:non-parametric regression

405:Least absolute deviations

3314:Nonparametric regression

2899:(accessed 14 April 2017)

2173:{\displaystyle w_{i}(x)}

968:tri-cube weight function

864:{\displaystyle \lambda }

769:{\displaystyle n\alpha }

619:least squares regression

153:Generalized linear model

3261:The Lowess function in

2794:Fox & Weisberg 2018

2715:finite impulse response

2406:attains its minimum at

2118:is the square diagonal

1675:{\displaystyle w=h^{2}}

926:{\displaystyle \alpha }

906:{\displaystyle \alpha }

886:{\displaystyle \alpha }

800:{\displaystyle \alpha }

742:{\displaystyle \alpha }

652:and loess as synonyms.

64:more precise citations.

3296:public domain material

3252:The Loess function in

3245:Scatter Plot Smoothing

3240:Local Fitting Software

3116:Cite journal requires

3074:Cite journal requires

3039:(3rd ed.). SAGE.

2673:

2567:

2566:{\displaystyle w(x,z)}

1877:into the columns of a

1086:{\displaystyle w(x,z)}

693:weighted least squares

484:Mathematics portal

410:Iteratively reweighted

101:

2989:Cleveland, William S.

2957:Cleveland, William S.

2915:Cleveland, William S.

2765:Savitzky–Golay filter

2674:

2568:

2538:A typical choice for

1834:{\displaystyle y_{i}}

662:Savitzky–Golay filter

608:Savitzky–Golay filter

543:scatterplot smoothing

539:polynomial regression

441:Regression validation

420:Bayesian multivariate

137:Polynomial regression

95:

3181:improve this article

2893:"LOESS (aka LOWESS)"

2770:Segmented regression

2745:Moving least squares

2708:nonlinear regression

1093:on the target space

685:explanatory variable

666:William S. Cleveland

623:nonlinear regression

466:Gauss–Markov theorem

461:Studentized residual

451:Errors and residuals

285:Principal components

255:Nonlinear regression

142:General linear model

3193:footnote references

2895:, section 4.1.4.4,

615:"classical" methods

561:), both pronounced

311:Errors-in-variables

178:Logistic regression

168:Binomial regression

113:Regression analysis

107:Part of a series on

1605:and the subscript

783:requires at least

766:

739:

705:

198:Multinomial probit

102:

3221:

3220:

3213:

3142:978-3-319-19425-7

3046:978-1-5443-3645-9

2868:Loess (or Lowess)

2740:Kernel regression

2662:

2502:

2477:

2456:

2348:

2323:

2284:

2247:

2222:

2193:{\displaystyle A}

2111:{\displaystyle W}

2076:

2042:

1977:

1916:{\displaystyle Y}

1858:

1642:{\displaystyle h}

1622:{\displaystyle w}

1496:{\displaystyle A}

1455:

1388:

1269:

1176:{\displaystyle p}

708:{\displaystyle n}

604:-nearest-neighbor

531:moving regression

520:

519:

173:Binary regression

132:Simple regression

127:Linear regression

90:

89:

82:

16:(Redirected from

3321:

3293:

3292:

3216:

3209:

3205:

3202:

3196:

3164:

3163:

3156:

3146:

3125:

3119:

3114:

3112:

3104:

3083:

3077:

3072:

3070:

3062:

3060:

3050:

3032:

3022:

3005:(403): 596–610.

2993:Devlin, Susan J.

2984:

2952:

2927:(368): 829–836.

807:must be between

656:Model definition

529:, also known as

523:Local regression

512:

505:

498:

482:

481:

389:Ridge regression

224:Multilevel model

104:

85:

78:

74:

71:

65:

60:this article by

51:inline citations

38:

37:

30:

21:

3169:This article's

3165:

3161:

3154:

3149:

3143:

3128:

3115:

3105:

3093:10.2172/1367799

3086:

3073:

3063:

3058:

3053:

3047:

3030:

3025:

3011:10.2307/2289282

2987:

2973:10.2307/2683591

2955:

2933:10.2307/2286407

2913:

2909:

2904:

2903:

2890:

2879:

2864:

2860:

2847:

2846:

2842:

2829:

2828:

2824:

2816:

2812:

2804:

2800:

2792:

2788:

2783:

2778:

2731:

2699:

2686:

2651:

2647:

2636:

2623:

2617:

2613:

2581:

2580:

2575:Gaussian weight

951:Weight function

670:Susan J. Devlin

658:

589:

566:

562:

516:

476:

456:Goodness of fit

163:Discrete choice

86:

75:

69:

66:

56:Please help to

55:

39:

35:

28:

23:

22:

15:

12:

11:

5:

3327:

3325:

3317:

3316:

3306:

3305:

3287:

3286:

3280:

3273:Quantile LOESS

3270:

3265:

3256:

3247:

3242:

3237:

3232:

3227:

3219:

3218:

3173:external links

3168:

3166:

3159:

3153:

3152:External links

3150:

3148:

3147:

3141:

3126:

3118:|journal=

3084:

3076:|journal=

3051:

3045:

3023:

2985:

2953:

2910:

2908:

2905:

2902:

2901:

2877:

2873:Nutrient Steps

2858:

2853:Docs.scipy.org

2840:

2822:

2818:Garimella 2017

2810:

2798:

2785:

2784:

2782:

2779:

2777:

2774:

2773:

2772:

2767:

2762:

2757:

2752:

2750:Moving average

945:moving average

535:moving average

265:Semiparametric

262:

257:

249:

248:

247:

246:

241:

236:

234:Random effects

231:

226:

218:

217:

216:

215:

210:

208:Ordered probit

3298:from the

2835:Mathworks.com

2832:

2826:

2823:

2819:

2814:

2811:

2808:, p. 29.

2697:Disadvantages

1302:loss function

1284:

1281:

1278:

1272:

1263:

1254:

1232:

1229:

1226:

1197:

1170:

1148:

1138:

1135:

1132:

1129:

1107:

1077:

1074:

1071:

1065:

1056:

1052:

1050:

1029:

1019:

1009:

1001:

998:

992:

986:

980:

973:

972:

971:

969:

964:

962:

959:

950:

948:

946:

937:

935:

920:

900:

880:

872:

858:

838:

834:

829:

825:

822:

819:

815:

794:

786:

782:

777:

763:

760:

752:

736:

727:

719:

717:

702:

694:

690:

686:

682:

679:a low-degree

678:

673:

671:

667:

663:

655:

653:

651:

647:

643:

639:

635:

630:

626:

624:

620:

616:

611:

609:

605:

603:

598:

594:

593:

584:

560:

556:

552:

548:

544:

540:

536:

532:

528:

524:

513:

508:

506:

501:

499:

494:

493:

491:

490:

485:

480:

475:

474:

473:

472:

467:

464:

462:

459:

457:

454:

452:

449:

447:

444:

442:

439:

438:

437:

436:

431:

426:

423:

421:

418:

416:

413:

411:

408:

406:

403:

402:

401:

400:

395:

392:

390:

387:

385:

382:

380:

377:

375:

372:

371:

370:

369:

364:

361:

359:

356:

354:

351:

349:

346:

345:

344:

343:

338:

335:

333:

330:

328:

327:Least squares

325:

324:

323:

322:

317:

312:

309:

308:

307:

306:

301:

298:

296:

293:

291:

288:

286:

283:

281:

278:

276:

273:

271:

268:

266:

263:

261:

260:Nonparametric

258:

256:

253:

252:

251:

250:

245:

242:

240:

237:

235:

232:

230:

229:Fixed effects

227:

225:

222:

221:

220:

219:

214:

211:

209:

206:

204:

203:Ordered logit

201:

199:

196:

194:

191:

189:

186:

184:

181:

179:

176:

174:

171:

169:

166:

164:

161:

159:

156:

154:

151:

150:

149:

148:

143:

140:

138:

135:

133:

130:

128:

125:

124:

123:

122:

117:

114:

110:

106:

105:

99:

94:

84:

81:

73:

63:

59:

53:

52:

46:

41:

32:

31:

19:

3288:

3276:

3207:

3198:

3183:by removing

3170:

3135:. Springer.

3131:

3109:cite journal

3067:cite journal

3035:

3002:

2996:

2964:

2960:

2924:

2918:

2896:

2875:, July 2016.

2872:

2866:

2861:

2852:

2843:

2834:

2825:

2813:

2806:Harrell 2015

2801:

2789:

2712:

2704:

2700:

2691:

2687:

2537:

2306:

2097:

1606:

1482:

1301:

1057:

1053:

1048:

1046:

965:

954:

941:

873:

851:and 1, with

784:

780:

778:

750:

725:

723:

674:

659:

649:

646:lowess curve

645:

637:

633:

631:

627:

612:

601:

558:

554:

550:

546:

530:

526:

522:

521:

384:Non-negative

294:

76:

67:

48:

2796:, Appendix.

642:scattergram

634:loess curve

394:Regularized

358:Generalized

290:Least angle

188:Mixed logit

62:introducing

2776:References

2684:Advantages

1649:such that

681:polynomial

433:Background

337:Non-linear

319:Estimation

45:references

3185:excessive

2967:(1): 54.

2781:Citations

Pronunciation: LOESS /ˈloʊɛs/ (LOH-ess).

The smoothing parameter $\alpha$ must lie between $(\lambda + 1)/n$ and 1, with $\lambda$ denoting the degree of the local polynomial.

The tricube weight function is

$$w(d) = (1 - |d|^3)^3$$

where $d$ is the distance of a given data point from the point on the curve being fitted, scaled to lie in the range from 0 to 1.

For the local linear model with weight $w(x, z)$, $x, z \in \mathbb{R}^p$, and outputs in $\mathbb{R}^m$, inputs are mapped from $\mathbb{R}^p$ into $\mathbb{R}^{p+1}$ as

$$x \mapsto \hat{x} := (1, x)$$

and the loss function is

$$\operatorname{RSS}_x(A) = \sum_{i=1}^{N} (y_i - A\hat{x}_i)^T \, w_i(x) \, (y_i - A\hat{x}_i).$$

Here, $A$ is an $m \times (p+1)$ matrix and $w_i(x) := w(x_i, x)$.

With a matrix $h$ such that $w = h^2$,

$$y^T w y = (hy)^T (hy) = \operatorname{Tr}(h y y^T h) = \operatorname{Tr}(w y y^T).$$

Arranging the vectors $y_i$ and $\hat{x}_i$ into the columns of an $m \times N$ matrix $Y$ and a $(p+1) \times N$ matrix $\hat{X}$, the loss can be written as

$$\operatorname{Tr}\left(W(x)\,(Y - A\hat{X})^T (Y - A\hat{X})\right)$$

where $W$ is the diagonal $N \times N$ matrix whose entries are the $w_i(x)$.

Setting the derivative with respect to $A$ to zero gives

$$A \hat{X} W(x) \hat{X}^T = Y W(x) \hat{X}^T.$$

If $\hat{X} W(x) \hat{X}^T$ is invertible, $\operatorname{RSS}_x(A)$ attains its minimum at

$$A(x) = Y W(x) \hat{X}^T \left(\hat{X} W(x) \hat{X}^T\right)^{-1}.$$

A typical choice for $w(x, z)$ is the Gaussian weight

$$w(x, z) = \exp\left(-\frac{\|x - z\|^2}{2\alpha^2}\right).$$
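
As an illustration, the closed-form local solution $A(x) = Y W(x) \hat{X}^T (\hat{X} W(x) \hat{X}^T)^{-1}$ with a scalar Gaussian weight $w(x, z) = \exp(-\|x - z\|^2 / (2\alpha^2))$ can be sketched in a few lines of Python. This is a minimal sketch, not a reference implementation: it assumes NumPy, stores data points as matrix columns ($X$ is $p \times N$, $Y$ is $m \times N$), and the function names `gaussian_weight`, `local_linear_fit` and `predict` are hypothetical names chosen for this example.

```python
import numpy as np


def gaussian_weight(x, z, alpha):
    """Gaussian weight w(x, z) = exp(-||x - z||^2 / (2 * alpha^2))."""
    diff = np.asarray(x, dtype=float) - np.asarray(z, dtype=float)
    return float(np.exp(-np.dot(diff, diff) / (2.0 * alpha ** 2)))


def local_linear_fit(X, Y, x, alpha):
    """Return A(x) = Y W X_hat^T (X_hat W X_hat^T)^(-1) at the query point x.

    X: (p, N) array of inputs, one data point per column (assumed layout).
    Y: (m, N) array of outputs, one data point per column (assumed layout).
    x: (p,) query point; alpha: Gaussian bandwidth.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    n_points = X.shape[1]

    # Augment each input with a leading 1: x -> x_hat = (1, x).
    X_hat = np.vstack([np.ones(n_points), X])  # shape (p + 1, N)

    # Diagonal of W(x): w_i(x) = w(x_i, x).
    w = np.array([gaussian_weight(X[:, i], x, alpha) for i in range(n_points)])

    # Multiplying by w scales column i by w_i, which equals a product with diag(w).
    gram = (X_hat * w) @ X_hat.T  # X_hat W X_hat^T, shape (p + 1, p + 1)
    rhs = (Y * w) @ X_hat.T       # Y W X_hat^T,     shape (m, p + 1)

    # Solve A @ gram = rhs without forming an explicit inverse (gram is symmetric).
    return np.linalg.solve(gram, rhs.T).T


def predict(X, Y, x, alpha):
    """Evaluate the local fit at x, i.e. A(x) @ (1, x)."""
    A = local_linear_fit(X, Y, x, alpha)
    x_hat = np.concatenate(([1.0], np.asarray(x, dtype=float).ravel()))
    return A @ x_hat
```

Calling `predict(X, Y, x, alpha)` once per query point traces out the smoothed curve. A smaller `alpha` makes the fit follow the data more closely, and the solve step fails if the weighted Gram matrix is singular, for example when too few points carry non-negligible weight.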

Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.