279:

1466:

2460:

47:

1320:

Yudkowsky helped create the

Singularity Institute (now called the Machine Intelligence Research Institute) to help mankind achieve a friendly Singularity. (Disclosure: I have contributed to the Singularity Institute.) Yudkowsky then founded the community blog

920:

1358:

Users wrote reviews of the best posts of 2018, and voted on them using the quadratic voting system, popularized by Glen Weyl and

Vitalik Buterin. From the 2000+ posts published that year, the Review narrowed down the 44 most interesting and valuable

1325:, which seeks to promote the art of rationality, to raise the sanity waterline, and to in part convince people to make considered, rational charitable donations, some of which, Yudkowsky (correctly) hoped, would go to his organization.

1170:

Thanks to LessWrong's discussions of eugenics and evolutionary psychology, it has attracted some readers and commenters affiliated with the alt-right and neoreaction, that broad cohort of neofascist, white nationalist and misogynist

213:

are "The

Sequences", a series of essays which aim to describe how to avoid the typical failure modes of human reasoning with the goal of improving decision-making and the evaluation of evidence. One suggestion is the use of

493:. A selection of posts by these and other contributors, selected through a community review process, were published as parts of the essay collections "A Map That Reflects the Territory" and "The Engines of Cognition".

469:

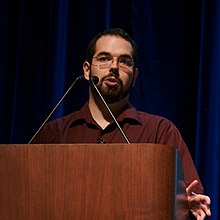

has been associated with several influential contributors. Founder

Eliezer Yudkowsky established the platform to promote rationality and raise awareness about potential risks associated with artificial intelligence.

1077:

902:

375:

users in 2016, 664 out of 3,060 respondents, or 21.7%, identified as "effective altruists". A separate survey of effective altruists in 2014 revealed that 31% of respondents had first heard of EA through

2118:

406:

tortures people who heard of the AI before it came into existence and failed to work tirelessly to bring it into existence, in order to incentivise said work. This idea came to be known as "

780:

585:

1204:

1008:

680:

1997:

1217:

Land and Yarvin are openly allies with the new reactionary movement, while

Yudkowsky counts many reactionaries among his fanbase despite finding their racist politics disgusting.

854:

262:

that would seem more at home in a 3D multiplex than a graduate seminar: the dire existential threat—or, with any luck, utopian promise—known as the technological

Singularity

1238:

1067:

2494:

1452:

644:

2292:

2182:

549:

2125:

1836:

1701:

1623:

1582:

1671:

474:

became one of the site's most popular writers before starting his own blog, Slate Star Codex, contributing discussions on AI safety and rationality.

1846:

1926:

1560:

770:

748:

575:

706:

1313:

1184:

2489:

1041:

1000:

670:

1118:

1841:

1499:

1279:

1157:

844:

2175:

983:

885:

1409:

1382:

1273:

955:

1304:

Miller, J.D. (2017). "Reflections on the

Singularity Journey". In Callaghan, V.; Miller, J.; Yampolskiy, R.; Armstrong, S. (eds.).

513:

1941:

1681:

1616:

434:(pen name Mencius Moldbug), the founder of the neoreactionary movement, and Hanson posted his side of a debate versus Moldbug on

403:

1230:

2083:

1851:

1589:

1105:

one of the sites where got his start as a commenter was on

Overcoming Bias, i.e. where Yudkowsky was writing before LessWrong.

308:

as the principal contributors. In

February 2009, Yudkowsky's posts were used as the seed material to create the community blog

316:

became Hanson's personal blog. In 2013, a significant portion of the rationalist community shifted focus to Scott

Alexander's

2047:

1550:

300:, an earlier group blog focused on human rationality, which began in November 2006, with artificial intelligence researcher

810:

2168:

1831:

1661:

634:

410:", based on Roko's idea that merely hearing about the idea would give the hypothetical AI system an incentive to try such

254:

in 2019 noted that "Despite describing itself as a forum on 'the art of human rationality,' the New York Less Wrong group

2410:

2365:

2297:

1946:

1871:

1826:

1555:

482:

266:... Branding themselves as 'rationalists,' as the Less Wrong crew has done, makes it a lot harder to dismiss them as a '

218:

as a decision-making tool. There is also a focus on psychological barriers that prevent good decision-making, including

278:

2499:

2097:

2042:

1609:

539:

2375:

1813:

1706:

145:

611:

2226:

2149:

2076:

1936:

1821:

245:

2484:

2231:

2210:

2104:

2017:

2002:

1876:

341:

223:

1340:

Gasarch, William (2022). "Review of "A Map that Reflects the Territory: Essays by the LessWrong Community"".

729:

1906:

1656:

1492:

793:

Since the late 1990s those worries have become more specific, and coalesced around Nick Bostrom's 2014 book

451:

198:

2463:

2132:

1961:

1696:

141:

2090:

2052:

1446:

675:

250:

2330:

1465:

2504:

2425:

2266:

1716:

1425:

Gasarch, William (2022). "Review of "The Engines of Cognition: Essays by the Less Wrong Community"".

2309:

2282:

1951:

286:

702:

2256:

2032:

1992:

1977:

1931:

1896:

1866:

1651:

1632:

1485:

427:

399:

368:

407:

389:

1031:

2445:

2435:

2415:

2069:

1921:

1916:

1792:

1757:

1732:

1646:

1534:

1405:

1378:

1309:

1269:

1196:

979:

951:

912:

881:

818:

775:

301:

282:

219:

215:

81:

2440:

2111:

1762:

1752:

1691:

1524:

1434:

1349:

1261:

1149:

1072:

1036:

544:

471:

317:

241:

2420:

2390:

2335:

1881:

1856:

1676:

1471:

259:

227:

1126:

2350:

2261:

2251:

2037:

2027:

2012:

1982:

1956:

1901:

1747:

1711:

1666:

1063:

849:

580:

490:

178:

174:

59:

509:

2478:

2380:

2355:

2246:

2191:

2007:

1987:

1911:

431:

267:

237:

446:, it too attracted some individuals affiliated with neoreaction with discussions of

2430:

2395:

2385:

2370:

2345:

2325:

2236:

1886:

1808:

1782:

1777:

1767:

1742:

1529:

1150:"The Strange and Conflicting World Views of Silicon Valley Billionaire Peter Thiel"

943:

639:

333:

305:

635:"This column will change your life: asked a tricky question? Answer an easier one"

458:

users in 2016, 28 out of 3060 respondents (0.92%) identified as "neoreactionary".

1399:

1372:

2340:

2241:

1861:

1686:

671:"Faith, Hope, and Singularity: Entering the Matrix with New York's Futurist Set"

194:

31:

371:(EA) movement, and the two communities are closely intertwined. In a survey of

324:

reported user activity on the site since returning to roughly pre-2013 levels.

2287:

2205:

1737:

1461:

186:

182:

137:

1200:

916:

822:

2405:

1787:

1438:

1353:

1308:. The Frontiers Collection. Berlin, Heidelberg: Springer. pp. 225–226.

907:

903:"The AI Does Not Hate You by Tom Chivers review — why the nerds are nervous"

411:

337:

190:

46:

30:

For the concept of choosing the least undesirable of available options, see

344:. Articles posted on LessWrong about AI have been cited in the news media.

1260:

Hermansson, Patrik; Lawrence, David; Mulhall, Joe; Murdoch, Simon (2020).

1032:"WARNING: Just Reading About This Thought Experiment Could Ruin Your Life"

749:"Data Analysis of LW: Activity Levels + Age Distribution of User Accounts"

454:. However, Yudkowsky has strongly rejected neoreaction. In a survey among

348:

and its surrounding movement work on AI are the subjects of the 2019 book

2400:

2022:

1891:

1772:

447:

435:

353:

17:

2360:

1565:

771:"What we've learned about the robot apocalypse from the OpenAI debacle"

607:

486:

149:

1601:

1374:

A Map That Reflects the Territory: Essays by the LessWrong Community

277:

320:. In 2017, a "Lesswrong 2.0" effort reinvigorated the site, with

2160:

170:

63:

2164:

1605:

1481:

845:"W&N wins Buzzfeed science reporter's debut after auction"

576:"Slate Star Codex and Silicon Valley's War Against the Media"

92:

1477:

974:

Chivers, Tom (2019). "Chapter 38: The Effective Altruists".

1401:

The Engines of Cognition: Essays by the LessWrong Community

1266:

The International Alt-Right. Fascism for the 21st Century?

1101:

Neoreaction a Basilisk: Essays On and Around the Alt-Right

1262:"The Dark Enlightenment: Neoreaction and Silicon Valley"

1322:

1185:"The Violence of Pure Reason: Neoreaction: A Basilisk"

1998:

Existential risk from artificial general intelligence

1818:

All-Party Parliamentary Group for Future Generations

1068:"The Most Terrifying Thought Experiment of All Time"

367:

played a significant role in the development of the

2318:

2275:

2219:

2198:

2142:

2061:

1970:

1801:

1725:

1639:

1574:

1543:

1517:

133:

125:

107:

99:

87:

77:

69:

53:

103:Optional, but is required for contributing content

703:"Where did Less Wrong come from? (LessWrong FAQ)"

533:

531:

380:, though that number had fallen to 8.2% by 2020.

236:is also concerned with artificial intelligence,

1189:Social Epistemology Review and Reply Collective

1001:"EA Survey 2020: How People Get Involved in EA"

2293:Institute for Ethics and Emerging Technologies

1837:Centre for Enabling EA Learning & Research

1371:Lagerros, J.; Pace, B.; LessWrong.com (2020).

1335:

1333:

1268:. Abingdon-on-Thames, England, UK: Routledge.

2176:

2126:Superintelligence: Paths, Dangers, Strategies

1617:

1493:

1299:

1297:

795:Superintelligence: Paths, Dangers, Strategies

8:

1702:Psychological barriers to effective altruism

1451:: CS1 maint: DOI inactive as of July 2024 (

969:

967:

39:

1583:Harry Potter and the Methods of Rationality

540:"You Can Learn How To Become More Rational"

332:Discussions of AI within LessWrong include

226:that have been studied by the psychologist

2183:

2169:

2161:

1672:Distributional cost-effectiveness analysis

1624:

1610:

1602:

1500:

1486:

1478:

664:

662:

38:

948:Utilitarianism: A Very Short Introduction

1847:Centre for the Study of Existential Risk

950:. Oxford University Press. p. 110.

843:Cowdrey, Katherine (21 September 2017).

2495:Internet properties established in 2009

1927:Machine Intelligence Research Institute

1561:Machine Intelligence Research Institute

797:and Eliezer Yudkowsky's blog LessWrong.

569:

567:

501:

1444:

1160:from the original on 13 February 2017

1044:from the original on 18 November 2018

857:from the original on 27 November 2018

764:

762:

7:

1235:Optimize Literally Everything (blog)

1080:from the original on 25 October 2018

1842:Center for High Impact Philanthropy

1207:from the original on 5 October 2016

574:Lewis-Kraus, Gideon (9 July 2020).

552:from the original on 10 August 2018

356:science correspondent Tom Chivers.

1404:. Center for Applied Rationality.

1377:. Center for Applied Rationality.

1229:Eliezer Yudkowsky (8 April 2016).

1183:Riggio, Adam (23 September 2016).

1103:(2nd ed.). Eruditorum Press.

923:from the original on 23 April 2020

709:from the original on 30 April 2019

683:from the original on 12 April 2019

647:from the original on 26 March 2014

516:from the original on 30 April 2019

402:to the site in which an otherwise

27:Rationality-focused community blog

25:

1282:from the original on 13 June 2022

1011:from the original on 28 July 2021

783:from the original on 3 March 2024

769:Chivers, Tom (22 November 2023).

633:Burkeman, Oliver (9 March 2012).

588:from the original on 10 July 2020

2459:

2458:

1682:Equal consideration of interests

1464:

1241:from the original on 26 May 2019

728:Habryka, Oliver (18 June 2017).

614:from the original on 6 July 2024

45:

2084:Famine, Affluence, and Morality

1852:Development Media International

1590:Rationality: From AI to Zombies

901:Marriott, James (31 May 2019).

2048:Risk of astronomical suffering

1551:Center for Applied Rationality

809:Newport, Cal (15 March 2024).

669:Tiku, Nitasha (25 July 2012).

538:Miller, James (28 July 2011).

258:... is fixated on a branch of

1:

1832:Centre for Effective Altruism

1662:Disability-adjusted life year

1306:The Technological Singularity

1030:Love, Dylan (6 August 2014).

978:. Weidenfeld & Nicolson.

880:. Weidenfeld & Nicolson.

2366:Nikolai Fyodorovich Fyodorov

2298:Future of Humanity Institute

1947:Raising for Effective Giving

1872:Future of Humanity Institute

1556:Future of Humanity Institute

1398:Pace, B.; LessWrong (2021).

1099:Sandifer, Elizabeth (2018).

942:de Lazari-Radek, Katarzyna;

2490:Transhumanist organizations

2098:Living High and Letting Die

2043:Neglected tropical diseases

1148:Keep, Elmo (22 June 2016).

999:Moss, David (20 May 2021).

404:benevolent future AI system

2521:

1814:Against Malaria Foundation

1707:Quality-adjusted life year

730:"Welcome to Lesswrong 2.0"

398:contributor Roko posted a

387:

29:

2454:

2150:Effective Altruism Global

2077:The End of Animal Farming

1937:Nuclear Threat Initiative

1822:Animal Charity Evaluators

811:"Can an A.I. Make Plans?"

477:Further notable users on

177:focused on discussion of

44:

2232:Eradication of suffering

2211:Transhumanism in fiction

2105:The Most Good You Can Do

2018:Intensive animal farming

2003:Global catastrophic risk

1877:Future of Life Institute

1441:(inactive 28 July 2024).

1005:Effective Altruism Forum

976:The AI Does Not Hate You

878:The AI Does Not Hate You

383:

350:The AI Does Not Hate You

209:The best known posts of

1907:The Good Food Institute

1657:Demandingness objection

1439:10.1145/3561066.3561064

1354:10.1145/3532737.3532741

452:evolutionary psychology

422:The comment section of

328:Artificial Intelligence

199:artificial intelligence

2310:US Transhumanist Party

2133:What We Owe the Future

1962:Wild Animal Initiative

1697:Moral circle expansion

608:"Sequences Highlights"

290:

201:, among other topics.

113:; 15 years ago

2091:The Life You Can Save

2053:Wild animal suffering

946:(27 September 2017).

876:Chivers, Tom (2019).

342:machine consciousness

281:

251:The New York Observer

111:February 1, 2009

2426:Gennady Stolyarov II

2267:Techno-progressivism

1717:Venture philanthropy

1323:http://LessWrong.com

753:Lesswrong forum post

747:Ruby (14 May 2019).

734:Lesswrong forum post

426:attracted prominent

352:, written by former

2283:Foresight Institute

1952:Sentience Institute

1119:"My Moldbug Debate"

287:Stanford University

242:existential threats

126:Current status

41:

2500:Effective altruism

2331:José Luis Cordeiro

2257:Singularitarianism

2033:Malaria prevention

1993:Economic stability

1978:Biotechnology risk

1932:Malaria Consortium

1897:Giving What We Can

1867:Fistula Foundation

1652:Charity assessment

1633:Effective altruism

1129:on 24 January 2010

400:thought experiment

369:effective altruism

360:Effective altruism

291:

2472:

2471:

2446:Eliezer Yudkowsky

2436:Natasha Vita-More

2416:Martine Rothblatt

2158:

2157:

2070:Doing Good Better

1942:Open Philanthropy

1922:Mercy for Animals

1917:The Humane League

1793:Eliezer Yudkowsky

1758:William MacAskill

1733:Sam Bankman-Fried

1647:Aid effectiveness

1599:

1598:

1535:Eliezer Yudkowsky

1315:978-3-662-54033-6

302:Eliezer Yudkowsky

283:Eliezer Yudkowsky

220:fear conditioning

169:) is a community

156:

155:

82:Eliezer Yudkowsky

70:Available in

16:(Redirected from

2512:

2462:

2461:

2441:Mark Alan Walker

2185:

2178:

2171:

2162:

2112:Practical Ethics

1763:Dustin Moskovitz

1753:Holden Karnofsky

1692:Marginal utility

1626:

1619:

1612:

1603:

1502:

1495:

1488:

1479:

1474:

1469:

1468:

1457:

1456:

1450:

1442:

1422:

1416:

1415:

1395:

1389:

1388:

1368:

1362:

1361:

1337:

1328:

1327:

1301:

1292:

1291:

1289:

1287:

1257:

1251:

1250:

1248:

1246:

1226:

1220:

1219:

1214:

1212:

1180:

1174:

1173:

1167:

1165:

1145:

1139:

1138:

1136:

1134:

1125:. Archived from

1114:

1108:

1107:

1096:

1090:

1089:

1087:

1085:

1066:(17 July 2014).

1060:

1054:

1053:

1051:

1049:

1037:Business Insider

1027:

1021:

1020:

1018:

1016:

996:

990:

989:

971:

962:

961:

939:

933:

932:

930:

928:

898:

892:

891:

873:

867:

866:

864:

862:

840:

834:

833:

831:

829:

806:

800:

799:

790:

788:

766:

757:

756:

744:

738:

737:

725:

719:

718:

716:

714:

699:

693:

692:

690:

688:

666:

657:

656:

654:

652:

630:

624:

623:

621:

619:

604:

598:

597:

595:

593:

571:

562:

561:

559:

557:

545:Business Insider

535:

526:

525:

523:

521:

510:"Less Wrong FAQ"

506:

428:neoreactionaries

318:Slate Star Codex

265:

257:

224:cognitive biases

179:cognitive biases

121:

119:

114:

95:

49:

42:

21:

2520:

2519:

2515:

2514:

2513:

2511:

2510:

2509:

2485:Internet forums

2475:

2474:

2473:

2468:

2450:

2421:Anders Sandberg

2391:Ole Martin Moen

2336:K. Eric Drexler

2314:

2271:

2215:

2194:

2189:

2159:

2154:

2138:

2057:

2023:Land use reform

1966:

1882:Founders Pledge

1857:Evidence Action

1797:

1721:

1677:Earning to give

1635:

1630:

1600:

1595:

1570:

1539:

1525:Scott Alexander

1513:

1506:

1472:Internet portal

1470:

1463:

1460:

1443:

1427:ACM SIGACT News

1424:

1423:

1419:

1412:

1397:

1396:

1392:

1385:

1370:

1369:

1365:

1342:ACM SIGACT News

1339:

1338:

1331:

1316:

1303:

1302:

1295:

1285:

1283:

1276:

1259:

1258:

1254:

1244:

1242:

1228:

1227:

1223:

1210:

1208:

1182:

1181:

1177:

1163:

1161:

1147:

1146:

1142:

1132:

1130:

1123:Overcoming Bias

1117:Hanson, Robin.

1116:

1115:

1111:

1098:

1097:

1093:

1083:

1081:

1064:Auerbach, David

1062:

1061:

1057:

1047:

1045:

1029:

1028:

1024:

1014:

1012:

998:

997:

993:

986:

973:

972:

965:

958:

941:

940:

936:

926:

924:

900:

899:

895:

888:

875:

874:

870:

860:

858:

842:

841:

837:

827:

825:

808:

807:

803:

786:

784:

768:

767:

760:

746:

745:

741:

727:

726:

722:

712:

710:

701:

700:

696:

686:

684:

668:

667:

660:

650:

648:

632:

631:

627:

617:

615:

606:

605:

601:

591:

589:

573:

572:

565:

555:

553:

537:

536:

529:

519:

517:

508:

507:

503:

499:

483:Paul Christiano

472:Scott Alexander

464:

444:Overcoming Bias

424:Overcoming Bias

420:

408:Roko's basilisk

392:

390:Roko's basilisk

386:

384:Roko's basilisk

362:

330:

314:Overcoming Bias

298:Overcoming Bias

296:developed from

276:

263:

255:

228:Daniel Kahneman

207:

134:Written in

117:

115:

112:

91:

78:Created by

56:

35:

28:

23:

22:

15:

12:

11:

5:

2518:

2516:

2508:

2507:

2502:

2497:

2492:

2487:

2477:

2476:

2470:

2469:

2467:

2466:

2455:

2452:

2451:

2449:

2448:

2443:

2438:

2433:

2428:

2423:

2418:

2413:

2408:

2403:

2398:

2393:

2388:

2383:

2378:

2373:

2368:

2363:

2358:

2353:

2351:Aubrey de Grey

2348:

2343:

2338:

2333:

2328:

2322:

2320:

2316:

2315:

2313:

2312:

2307:

2300:

2295:

2290:

2285:

2279:

2277:

2273:

2272:

2270:

2269:

2264:

2262:Technogaianism

2259:

2254:

2252:Postpoliticism

2249:

2244:

2239:

2234:

2229:

2227:Antinaturalism

2223:

2221:

2217:

2216:

2214:

2213:

2208:

2202:

2200:

2196:

2195:

2190:

2188:

2187:

2180:

2173:

2165:

2156:

2155:

2153:

2152:

2146:

2144:

2140:

2139:

2137:

2136:

2129:

2122:

2115:

2108:

2101:

2094:

2087:

2080:

2073:

2065:

2063:

2059:

2058:

2056:

2055:

2050:

2045:

2040:

2038:Mass deworming

2035:

2030:

2028:Life extension

2025:

2020:

2015:

2013:Global poverty

2010:

2005:

2000:

1995:

1990:

1985:

1983:Climate change

1980:

1974:

1972:

1968:

1967:

1965:

1964:

1959:

1957:Unlimit Health

1954:

1949:

1944:

1939:

1934:

1929:

1924:

1919:

1914:

1909:

1904:

1902:Good Food Fund

1899:

1894:

1889:

1884:

1879:

1874:

1869:

1864:

1859:

1854:

1849:

1844:

1839:

1834:

1829:

1824:

1819:

1816:

1811:

1805:

1803:

1799:

1798:

1796:

1795:

1790:

1785:

1780:

1775:

1770:

1765:

1760:

1755:

1750:

1748:Hilary Greaves

1745:

1740:

1735:

1729:

1727:

1723:

1722:

1720:

1719:

1714:

1712:Utilitarianism

1709:

1704:

1699:

1694:

1689:

1684:

1679:

1674:

1669:

1667:Disease burden

1664:

1659:

1654:

1649:

1643:

1641:

1637:

1636:

1631:

1629:

1628:

1621:

1614:

1606:

1597:

1596:

1594:

1593:

1586:

1578:

1576:

1572:

1571:

1569:

1568:

1563:

1558:

1553:

1547:

1545:

1541:

1540:

1538:

1537:

1532:

1527:

1521:

1519:

1515:

1514:

1507:

1505:

1504:

1497:

1490:

1482:

1476:

1475:

1459:

1458:

1417:

1410:

1390:

1383:

1363:

1329:

1314:

1293:

1274:

1252:

1221:

1175:

1140:

1109:

1091:

1055:

1022:

991:

985:978-1474608770

984:

963:

956:

934:

893:

887:978-1474608770

886:

868:

850:The Bookseller

835:

815:The New Yorker

801:

758:

739:

720:

694:

658:

625:

599:

581:The New Yorker

563:

527:

500:

498:

495:

491:Zvi Mowshowitz

463:

460:

419:

416:

394:In July 2010,

388:Main article:

385:

382:

361:

358:

329:

326:

304:and economist

275:

272:

216:Bayes' theorem

206:

203:

163:(also written

154:

153:

135:

131:

130:

127:

123:

122:

109:

105:

104:

101:

97:

96:

89:

85:

84:

79:

75:

74:

71:

67:

66:

60:Internet forum

57:

54:

51:

50:

26:

24:

14:

13:

10:

9:

6:

4:

3:

2:

2517:

2506:

2503:

2501:

2498:

2496:

2493:

2491:

2488:

2486:

2483:

2482:

2480:

2465:

2457:

2456:

2453:

2447:

2444:

2442:

2439:

2437:

2434:

2432:

2429:

2427:

2424:

2422:

2419:

2417:

2414:

2412:

2409:

2407:

2404:

2402:

2399:

2397:

2394:

2392:

2389:

2387:

2384:

2382:

2381:Julian Huxley

2379:

2377:

2374:

2372:

2369:

2367:

2364:

2362:

2359:

2357:

2356:Zoltan Istvan

2354:

2352:

2349:

2347:

2344:

2342:

2339:

2337:

2334:

2332:

2329:

2327:

2324:

2323:

2321:

2317:

2311:

2308:

2306:

2305:

2301:

2299:

2296:

2294:

2291:

2289:

2286:

2284:

2281:

2280:

2278:

2276:Organizations

2274:

2268:

2265:

2263:

2260:

2258:

2255:

2253:

2250:

2248:

2247:Postgenderism

2245:

2243:

2240:

2238:

2235:

2233:

2230:

2228:

2225:

2224:

2222:

2218:

2212:

2209:

2207:

2204:

2203:

2201:

2197:

2193:

2192:Transhumanism

2186:

2181:

2179:

2174:

2172:

2167:

2166:

2163:

2151:

2148:

2147:

2145:

2141:

2135:

2134:

2130:

2128:

2127:

2123:

2121:

2120:

2119:The Precipice

2116:

2114:

2113:

2109:

2107:

2106:

2102:

2100:

2099:

2095:

2093:

2092:

2088:

2086:

2085:

2081:

2079:

2078:

2074:

2072:

2071:

2067:

2066:

2064:

2060:

2054:

2051:

2049:

2046:

2044:

2041:

2039:

2036:

2034:

2031:

2029:

2026:

2024:

2021:

2019:

2016:

2014:

2011:

2009:

2008:Global health

2006:

2004:

2001:

1999:

1996:

1994:

1991:

1989:

1988:Cultured meat

1986:

1984:

1981:

1979:

1976:

1975:

1973:

1969:

1963:

1960:

1958:

1955:

1953:

1950:

1948:

1945:

1943:

1940:

1938:

1935:

1933:

1930:

1928:

1925:

1923:

1920:

1918:

1915:

1913:

1912:Good Ventures

1910:

1908:

1905:

1903:

1900:

1898:

1895:

1893:

1890:

1888:

1885:

1883:

1880:

1878:

1875:

1873:

1870:

1868:

1865:

1863:

1860:

1858:

1855:

1853:

1850:

1848:

1845:

1843:

1840:

1838:

1835:

1833:

1830:

1828:

1827:Animal Ethics

1825:

1823:

1820:

1817:

1815:

1812:

1810:

1807:

1806:

1804:

1802:Organizations

1800:

1794:

1791:

1789:

1786:

1784:

1781:

1779:

1776:

1774:

1771:

1769:

1766:

1764:

1761:

1759:

1756:

1754:

1751:

1749:

1746:

1744:

1741:

1739:

1736:

1734:

1731:

1730:

1728:

1724:

1718:

1715:

1713:

1710:

1708:

1705:

1703:

1700:

1698:

1695:

1693:

1690:

1688:

1685:

1683:

1680:

1678:

1675:

1673:

1670:

1668:

1665:

1663:

1660:

1658:

1655:

1653:

1650:

1648:

1645:

1644:

1642:

1638:

1634:

1627:

1622:

1620:

1615:

1613:

1608:

1607:

1604:

1592:

1591:

1587:

1585:

1584:

1580:

1579:

1577:

1573:

1567:

1564:

1562:

1559:

1557:

1554:

1552:

1549:

1548:

1546:

1544:Organizations

1542:

1536:

1533:

1531:

1528:

1526:

1523:

1522:

1520:

1516:

1512:

1511:

1503:

1498:

1496:

1491:

1489:

1484:

1483:

1480:

1473:

1467:

1462:

1454:

1448:

1440:

1436:

1432:

1428:

1421:

1418:

1413:

1411:9781736128510

1407:

1403:

1402:

1394:

1391:

1386:

1384:9781736128503

1380:

1376:

1375:

1367:

1364:

1360:

1355:

1351:

1347:

1343:

1336:

1334:

1330:

1326:

1324:

1317:

1311:

1307:

1300:

1298:

1294:

1281:

1277:

1275:9781138363861

1271:

1267:

1263:

1256:

1253:

1240:

1236:

1232:

1225:

1222:

1218:

1206:

1202:

1198:

1194:

1190:

1186:

1179:

1176:

1172:

1159:

1155:

1151:

1144:

1141:

1128:

1124:

1120:

1113:

1110:

1106:

1102:

1095:

1092:

1079:

1075:

1074:

1069:

1065:

1059:

1056:

1043:

1039:

1038:

1033:

1026:

1023:

1010:

1006:

1002:

995:

992:

987:

981:

977:

970:

968:

964:

959:

957:9780198728795

953:

949:

945:

944:Singer, Peter

938:

935:

922:

918:

914:

910:

909:

904:

897:

894:

889:

883:

879:

872:

869:

856:

852:

851:

846:

839:

836:

824:

820:

816:

812:

805:

802:

798:

796:

782:

778:

777:

772:

765:

763:

759:

754:

750:

743:

740:

735:

731:

724:

721:

708:

704:

698:

695:

682:

678:

677:

672:

665:

663:

659:

646:

642:

641:

636:

629:

626:

613:

610:. LessWrong.

609:

603:

600:

587:

583:

582:

577:

570:

568:

564:

551:

547:

546:

541:

534:

532:

528:

515:

512:. LessWrong.

511:

505:

502:

496:

494:

492:

488:

484:

480:

475:

473:

468:

462:Notable users

461:

459:

457:

453:

449:

445:

441:

437:

433:

432:Curtis Yarvin

429:

425:

417:

415:

413:

409:

405:

401:

397:

391:

381:

379:

374:

370:

366:

359:

357:

355:

351:

347:

343:

339:

335:

327:

325:

323:

319:

315:

311:

307:

303:

299:

295:

288:

284:

280:

273:

271:

269:

268:doomsday cult

261:

253:

252:

247:

243:

239:

238:transhumanism

235:

231:

229:

225:

221:

217:

212:

204:

202:

200:

196:

192:

188:

184:

180:

176:

172:

168:

167:

162:

161:

151:

147:

143:

139:

136:

132:

128:

124:

110:

106:

102:

98:

94:

93:LessWrong.com

90:

86:

83:

80:

76:

72:

68:

65:

61:

58:

52:

48:

43:

37:

33:

19:

2431:Vernor Vinge

2411:David Pearce

2396:Hans Moravec

2386:Ray Kurzweil

2376:James Hughes

2371:Robin Hanson

2346:Ben Goertzel

2326:Nick Bostrom

2303:

2302:

2237:Extropianism

2131:

2124:

2117:

2110:

2103:

2096:

2089:

2082:

2075:

2068:

1887:GiveDirectly

1809:80,000 Hours

1783:Peter Singer

1778:Derek Parfit

1768:Yew-Kwang Ng

1743:Nick Bostrom

1588:

1581:

1530:Robin Hanson

1509:

1508:

1447:cite journal

1430:

1426:

1420:

1400:

1393:

1373:

1366:

1357:

1348:(1): 13–24.

1345:

1341:

1319:

1305:

1284:. Retrieved

1265:

1255:

1243:. Retrieved

1234:

1224:

1216:

1209:. Retrieved

1195:(9): 34–41.

1192:

1188:

1178:

1169:

1162:. Retrieved

1153:

1143:

1131:. Retrieved

1127:the original

1122:

1112:

1104:

1100:

1094:

1082:. Retrieved

1071:

1058:

1046:. Retrieved

1035:

1025:

1013:. Retrieved

1004:

994:

975:

947:

937:

925:. Retrieved

906:

896:

877:

871:

861:21 September

859:. Retrieved

848:

838:

826:. Retrieved

814:

804:

794:

792:

785:. Retrieved

774:

752:

742:

733:

723:

711:. Retrieved

697:

685:. Retrieved

674:

649:. Retrieved

640:The Guardian

638:

628:

616:. Retrieved

602:

590:. Retrieved

579:

554:. Retrieved

543:

518:. Retrieved

504:

478:

476:

466:

465:

455:

443:

439:

423:

421:

395:

393:

377:

372:

364:

363:

349:

345:

334:AI alignment

331:

321:

313:

309:

306:Robin Hanson

297:

293:

292:

249:

233:

232:

210:

208:

165:

164:

159:

158:

157:

144:(powered by

100:Registration

55:Type of site

36:

2505:Rationalism

2341:David Gobel

2242:Immortalism

1971:Focus areas

1862:Faunalytics

1726:Key figures

1687:Longtermism

1433:(3): 6–16.

442:split from

418:Neoreaction

322:LessWrong's

246:singularity

195:rationality

32:lesser evil

2479:Categories

2206:Transhuman

2062:Literature

1738:Liv Boeree

1231:"Untitled"

1048:6 December

497:References

346:LessWrong,

187:psychology

183:philosophy

166:Less Wrong

138:JavaScript

118:2009-02-01

2406:Elon Musk

2304:LessWrong

2288:Humanity+

2199:Overviews

1788:Cari Tuna

1510:LessWrong

1286:2 October

1245:7 October

1211:5 October

1201:2471-9560

1164:5 October

917:0140-0460

908:The Times

823:0028-792X

479:LessWrong

467:LessWrong

456:LessWrong

440:LessWrong

412:blackmail

396:LessWrong

378:LessWrong

373:LessWrong

365:LessWrong

338:AI safety

310:LessWrong

294:LessWrong

234:LessWrong

211:LessWrong

191:economics

160:LessWrong

40:LessWrong

18:Lesswrong

2464:Category

2401:Max More

2220:Currents

1892:GiveWell

1773:Toby Ord

1640:Concepts

1280:Archived

1239:Archived

1205:Archived

1158:Archived

1078:Archived

1042:Archived

1009:Archived

921:Archived

855:Archived

781:Archived

713:25 March

707:Archived

687:12 April

681:Archived

676:Observer

651:25 March

645:Archived

612:Archived

592:4 August

586:Archived

556:25 March

550:Archived

520:25 March

514:Archived

481:include

448:eugenics

438:. After

436:futarchy

430:such as

354:BuzzFeed

260:futurism

244:and the

108:Launched

2361:FM-2030

1566:MetaMed

1171:trolls.

1133:13 July

1084:18 July

1015:28 July

828:14 July

787:14 July

776:Semafor

618:12 July

487:Wei Dai

289:in 2006

274:History

205:Purpose

150:GraphQL

116: (

73:English

2319:People

2143:Events

1518:People

1408:

1381:

1359:posts.

1312:

1272:

1199:

1154:Fusion

982:

954:

915:

884:

821:

340:, and

312:, and

264:

256:

197:, and

129:Active

1575:Works

1073:Slate

927:3 May

175:forum

146:React

1453:link

1406:ISBN

1379:ISBN

1310:ISBN

1288:2020

1270:ISBN

1247:2016

1213:2016

1197:ISSN

1166:2016

1135:2024

1086:2014

1050:2014

1017:2021

980:ISBN

952:ISBN

929:2020

913:ISSN

882:ISBN

863:2017

830:2024

819:ISSN

789:2024

715:2014

689:2019

653:2014

620:2024

594:2020

558:2014

522:2014

489:and

450:and

270:'."

222:and

173:and

171:blog

148:and

64:blog

1435:doi

1350:doi

285:at

142:CSS

88:URL

2481::

1449:}}

1445:{{

1431:53

1429:.

1356:.

1346:53

1344:.

1332:^

1318:.

1296:^

1278:.

1264:.

1237:.

1233:.

1215:.

1203:.

1191:.

1187:.

1168:.

1156:.

1152:.

1121:.

1076:.

1070:.

1040:.

1034:.

1007:.

1003:.

966:^

919:.

911:.

905:.

853:.

847:.

817:.

813:.

791:.

779:.

773:.

761:^

751:.

732:.

705:.

679:.

673:.

661:^

643:.

637:.

584:.

578:.

566:^

548:.

542:.

530:^

485:,

414:.

336:,

248:.

240:,

230:.

193:,

189:,

185:,

181:,

140:,

62:,

2184:e

2177:t

2170:v

1625:e

1618:t

1611:v

1501:e

1494:t

1487:v

1455:)

1437::

1414:.

1387:.

1352::

1290:.

1249:.

1193:5

1137:.

1088:.

1052:.

1019:.

988:.

960:.

931:.

890:.

865:.

832:.

755:.

736:.

717:.

691:.

655:.

622:.

596:.

560:.

524:.

152:)

120:)

34:.

20:)

Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.