38:

1742:

This discrepancy shows that the probability distribution for tomorrow's color depends not only on the present value, but is also affected by information about the past. This stochastic process of observed colors doesn't have the Markov property. Using the same experiment above, if sampling "without

154:

Note that there is a subtle, often overlooked and very important point that is often missed in the plain

English statement of the definition. Namely that the statespace of the process is constant through time. The conditional description involves a fixed "bandwidth". For example, without this

907:

1424:

155:

restriction we could augment any process to one which includes the complete history from a given initial condition and it would be made to be

Markovian. But the state space would be of increasing dimensionality over time and does not meet the definition.

1687:

Suppose you know that today's ball was red, but you have no information about yesterday's ball. The chance that tomorrow's ball will be red is 1/2. That's because the only two remaining outcomes for this random experiment are:

128:

of future states of the process (conditional on both past and present values) depends only upon the present state; that is, given the present, the future does not depend on the past. A process with this property is said to be

638:

701:

1683:

Assume that an urn contains two red balls and one green ball. One ball was drawn yesterday, one ball was drawn today, and the final ball will be drawn tomorrow. All of the draws are "without replacement".

1039:

1320:

437:

1269:

1816:. Imprint Moscow, Academy of Sciences of the USSR, 1954 Added t.p. in Russian Translation of Works of the Mathematical Institute, Academy of Sciences of the USSR, v. 42. Original title:

273:

1108:

1216:

214:

1593:

1503:

1667:, the Markov property is considered desirable since it may enable the reasoning and resolution of the problem that otherwise would not be possible to be resolved because of its

474:

373:

333:

1167:

693:

1074:

1562:

1620:

506:

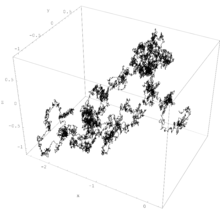

1649:

1529:

1312:

532:

105:

extends this property to two or more dimensions or to random variables defined for an interconnected network of items. An example of a model for such a field is the

1471:

1292:

1448:

661:

297:

540:

902:{\displaystyle P(X_{n+1}=x_{n+1}\mid X_{n}=x_{n},\dots ,X_{1}=x_{1})=P(X_{n+1}=x_{n+1}\mid X_{n}=x_{n}){\text{ for all }}n\in \mathbb {N} .}

45:

for times 0 ≤ t ≤ 2. Brownian motion has the Markov property, as the displacement of the particle does not depend on its past displacements.

125:

1739:

On the other hand, if you know that both today and yesterday's balls were red, then you are guaranteed to get a green ball tomorrow.

1889:

1845:

1768:

221:

83:

is similar to the Markov property, except that the meaning of "present" is defined in terms of a random variable known as a

923:

30:

This article is about the property of a stochastic process. For the class of properties of a finitely presented group, see

1419:{\displaystyle {\mathcal {F}}_{\tau }=\{A\in {\mathcal {F}}:\forall t\geq 0,\{\tau \leq t\}\cap A\in {\mathcal {F}}_{t}\}}

378:

1743:

replacement" is changed to sampling "with replacement," the process of observed colors will have the Markov property.

1668:

1224:

1864:

226:

1956:

1664:

1079:

1179:

177:

1747:

31:

1837:

1788:

1763:

1567:

1951:

1476:

449:

342:

302:

1121:

1660:

670:

1751:

1047:

102:

95:

1625:

The strong Markov property implies the ordinary Markov property since by taking the stopping time

1534:

1219:

1170:

66:

50:

1598:

1885:

1877:

1841:

1174:

479:

217:

1628:

1508:

1297:

69:, which means that its future evolution is independent of its history. It is named after the

511:

336:

1456:

1277:

1905:

439:

276:

148:

42:

1925:

1778:

1433:

646:

282:

139:

17:

1945:

1829:

1451:

1272:

664:

84:

76:

73:

94:

is used to describe a model where the Markov property is assumed to hold, such as a

1856:

1793:

1783:

1672:

164:

144:

113:

112:

A discrete-time stochastic process satisfying the Markov property is known as a

106:

37:

1773:

62:

54:

633:{\displaystyle P(X_{t}\in A\mid {\mathcal {F}}_{s})=P(X_{t}\in A\mid X_{s}).}

1922:"Example of a stochastic process which does not have the Markov property"

1921:

1911:. Wiley Series in Probability and Mathematical Statistics, 1986, p. 158.

70:

1882:

Stochastic

Differential Equations: An Introduction with Applications

1746:

An application of the Markov property in a generalized form is in

36:

917:

Alternatively, the Markov property can be formulated as follows.

1574:

1402:

1352:

1327:

1234:

1194:

961:

572:

461:

357:

317:

236:

192:

1450:

is said to have the strong Markov property if, for each

1631:

1601:

1570:

1537:

1511:

1479:

1459:

1436:

1323:

1300:

1280:

1227:

1182:

1124:

1082:

1050:

1034:{\displaystyle \operatorname {E} =\operatorname {E} }

926:

704:

673:

649:

543:

514:

482:

452:

381:

345:

305:

285:

229:

180:

124:

A stochastic process has the Markov property if the

1909:Markov Processes: Characterization and Convergence

1643:

1614:

1587:

1556:

1523:

1497:

1465:

1442:

1418:

1306:

1286:

1263:

1210:

1161:

1102:

1068:

1033:

901:

687:

655:

632:

526:

500:

468:

431:

367:

327:

291:

267:

208:

432:{\displaystyle X=\{X_{t}:\Omega \to S\}_{t\in I}}

1264:{\displaystyle \{{\mathcal {F}}_{t}\}_{t\geq 0}}

1651:, the ordinary Markov property can be deduced.

143:. Two famous classes of Markov process are the

268:{\displaystyle ({\mathcal {F}}_{s},\ s\in I)}

8:

1492:

1480:

1413:

1387:

1375:

1341:

1246:

1228:

414:

388:

1103:{\displaystyle f:S\rightarrow \mathbb {R} }

27:Memoryless property of a stochastic process

1834:The Oxford Dictionary of Statistical Terms

1211:{\displaystyle (\Omega ,{\mathcal {F}},P)}

209:{\displaystyle (\Omega ,{\mathcal {F}},P)}

41:A single realisation of three-dimensional

1630:

1606:

1600:

1579:

1573:

1572:

1569:

1542:

1536:

1510:

1478:

1458:

1435:

1407:

1401:

1400:

1351:

1350:

1332:

1326:

1325:

1322:

1299:

1279:

1249:

1239:

1233:

1232:

1226:

1193:

1192:

1181:

1138:

1123:

1096:

1095:

1081:

1049:

1019:

997:

966:

960:

959:

946:

925:

892:

891:

880:

871:

858:

839:

820:

798:

785:

766:

753:

734:

715:

703:

681:

680:

672:

648:

618:

599:

577:

571:

570:

554:

542:

513:

481:

460:

459:

451:

417:

395:

380:

356:

355:

344:

316:

315:

304:

284:

241:

235:

234:

228:

191:

190:

179:

1690:

1805:

695:, this can be reformulated as follows:

1588:{\displaystyle {\mathcal {F}}_{\tau }}

7:

1498:{\displaystyle \{\tau <\infty \}}

126:conditional probability distribution

469:{\displaystyle A\in {\mathcal {S}}}

1489:

1360:

1301:

1186:

978:

927:

404:

368:{\displaystyle (S,{\mathcal {S}})}

328:{\displaystyle (S,{\mathcal {S}})}

184:

25:

1162:{\displaystyle X=(X_{t}:t\geq 0)}

1861:Probability: Theory and Examples

1750:computations in the context of

1205:

1183:

1156:

1131:

1092:

1028:

1025:

1012:

1003:

990:

984:

972:

952:

939:

933:

877:

813:

804:

708:

688:{\displaystyle I=\mathbb {N} }

624:

592:

583:

547:

407:

362:

346:

322:

306:

262:

230:

203:

181:

1:

1671:. Such a model is known as a

1069:{\displaystyle t\geq s\geq 0}

1732:

1729:

1726:

1721:

1718:

1715:

1710:

1707:

1704:

1699:

1696:

1693:

1769:Chapman–Kolmogorov equation

1557:{\displaystyle X_{\tau +t}}

1473:, conditional on the event

663:is a discrete set with the

375:-valued stochastic process

165:Markov chain § History

1973:

1865:Cambridge University Press

162:

29:

1665:probabilistic forecasting

1615:{\displaystyle X_{\tau }}

440:adapted to the filtration

1748:Markov chain Monte Carlo

1505:, we have that for each

1110:bounded and measurable.

913:Alternative formulations

501:{\displaystyle s,t\in I}

1904:Ethier, Stewart N. and

1838:Oxford University Press

1789:Markov decision process

1764:Causal Markov condition

1644:{\displaystyle \tau =t}

1524:{\displaystyle t\geq 0}

1307:{\displaystyle \Omega }

442:is said to possess the

1812:Markov, A. A. (1954).

1645:

1616:

1589:

1558:

1525:

1499:

1467:

1444:

1420:

1308:

1288:

1265:

1212:

1163:

1114:Strong Markov property

1104:

1070:

1035:

903:

689:

665:discrete sigma algebra

657:

634:

528:

527:{\displaystyle s<t}

502:

470:

433:

369:

329:

293:

269:

210:

81:strong Markov property

46:

18:Strong Markov property

1646:

1617:

1590:

1559:

1526:

1500:

1468:

1466:{\displaystyle \tau }

1445:

1421:

1309:

1289:

1287:{\displaystyle \tau }

1266:

1213:

1164:

1105:

1071:

1036:

904:

690:

658:

635:

529:

503:

471:

434:

370:

330:

294:

270:

211:

40:

1884:. Springer, Berlin.

1814:Theory of Algorithms

1661:predictive modelling

1629:

1599:

1568:

1535:

1509:

1477:

1457:

1434:

1321:

1298:

1278:

1225:

1180:

1122:

1080:

1048:

924:

702:

671:

647:

541:

512:

480:

450:

379:

343:

303:

283:

227:

178:

1752:Bayesian statistics

882: for all

103:Markov random field

96:hidden Markov model

32:Adian–Rabin theorem

1878:Øksendal, Bernt K.

1863:. Fourth Edition.

1818:Teoriya algorifmov

1641:

1612:

1585:

1564:is independent of

1554:

1521:

1495:

1463:

1440:

1416:

1304:

1284:

1261:

1220:natural filtration

1208:

1171:stochastic process

1159:

1100:

1066:

1031:

899:

685:

653:

643:In the case where

630:

524:

498:

466:

429:

365:

325:

289:

265:

206:

67:stochastic process

51:probability theory

47:

1737:

1736:

1659:In the fields of

1443:{\displaystyle X}

1175:probability space

883:

656:{\displaystyle S}

292:{\displaystyle I}

252:

218:probability space

92:Markov assumption

16:(Redirected from

1964:

1957:Markov processes

1936:

1935:

1933:

1932:

1918:

1912:

1906:Kurtz, Thomas G.

1902:

1896:

1895:

1874:

1868:

1854:

1848:

1827:

1821:

1810:

1691:

1650:

1648:

1647:

1642:

1621:

1619:

1618:

1613:

1611:

1610:

1594:

1592:

1591:

1586:

1584:

1583:

1578:

1577:

1563:

1561:

1560:

1555:

1553:

1552:

1530:

1528:

1527:

1522:

1504:

1502:

1501:

1496:

1472:

1470:

1469:

1464:

1449:

1447:

1446:

1441:

1425:

1423:

1422:

1417:

1412:

1411:

1406:

1405:

1356:

1355:

1337:

1336:

1331:

1330:

1314:, we can define

1313:

1311:

1310:

1305:

1293:

1291:

1290:

1285:

1270:

1268:

1267:

1262:

1260:

1259:

1244:

1243:

1238:

1237:

1217:

1215:

1214:

1209:

1198:

1197:

1168:

1166:

1165:

1160:

1143:

1142:

1109:

1107:

1106:

1101:

1099:

1075:

1073:

1072:

1067:

1040:

1038:

1037:

1032:

1024:

1023:

1002:

1001:

971:

970:

965:

964:

951:

950:

908:

906:

905:

900:

895:

884:

881:

876:

875:

863:

862:

850:

849:

831:

830:

803:

802:

790:

789:

771:

770:

758:

757:

745:

744:

726:

725:

694:

692:

691:

686:

684:

662:

660:

659:

654:

639:

637:

636:

631:

623:

622:

604:

603:

582:

581:

576:

575:

559:

558:

533:

531:

530:

525:

507:

505:

504:

499:

475:

473:

472:

467:

465:

464:

438:

436:

435:

430:

428:

427:

400:

399:

374:

372:

371:

366:

361:

360:

337:measurable space

334:

332:

331:

326:

321:

320:

298:

296:

295:

290:

274:

272:

271:

266:

250:

246:

245:

240:

239:

215:

213:

212:

207:

196:

195:

21:

1972:

1971:

1967:

1966:

1965:

1963:

1962:

1961:

1942:

1941:

1940:

1939:

1930:

1928:

1920:

1919:

1915:

1903:

1899:

1892:

1876:

1875:

1871:

1855:

1851:

1828:

1824:

1811:

1807:

1802:

1760:

1681:

1657:

1627:

1626:

1602:

1597:

1596:

1571:

1566:

1565:

1538:

1533:

1532:

1507:

1506:

1475:

1474:

1455:

1454:

1432:

1431:

1399:

1324:

1319:

1318:

1296:

1295:

1276:

1275:

1271:. Then for any

1245:

1231:

1223:

1222:

1178:

1177:

1134:

1120:

1119:

1116:

1078:

1077:

1046:

1045:

1015:

993:

958:

942:

922:

921:

915:

867:

854:

835:

816:

794:

781:

762:

749:

730:

711:

700:

699:

669:

668:

645:

644:

614:

595:

569:

550:

539:

538:

510:

509:

478:

477:

448:

447:

444:Markov property

413:

391:

377:

376:

341:

340:

301:

300:

281:

280:

277:totally ordered

233:

225:

224:

176:

175:

172:

167:

161:

149:Brownian motion

137:and known as a

122:

59:Markov property

43:Brownian motion

35:

28:

23:

22:

15:

12:

11:

5:

1970:

1968:

1960:

1959:

1954:

1944:

1943:

1938:

1937:

1926:Stack Exchange

1913:

1897:

1890:

1869:

1849:

1830:Dodge, Yadolah

1822:

1804:

1803:

1801:

1798:

1797:

1796:

1791:

1786:

1781:

1779:Markov blanket

1776:

1771:

1766:

1759:

1756:

1735:

1734:

1731:

1728:

1724:

1723:

1720:

1717:

1713:

1712:

1709:

1706:

1702:

1701:

1698:

1695:

1680:

1677:

1669:intractability

1656:

1655:In forecasting

1653:

1640:

1637:

1634:

1609:

1605:

1582:

1576:

1551:

1548:

1545:

1541:

1520:

1517:

1514:

1494:

1491:

1488:

1485:

1482:

1462:

1439:

1428:

1427:

1415:

1410:

1404:

1398:

1395:

1392:

1389:

1386:

1383:

1380:

1377:

1374:

1371:

1368:

1365:

1362:

1359:

1354:

1349:

1346:

1343:

1340:

1335:

1329:

1303:

1283:

1258:

1255:

1252:

1248:

1242:

1236:

1230:

1207:

1204:

1201:

1196:

1191:

1188:

1185:

1158:

1155:

1152:

1149:

1146:

1141:

1137:

1133:

1130:

1127:

1115:

1112:

1098:

1094:

1091:

1088:

1085:

1065:

1062:

1059:

1056:

1053:

1042:

1041:

1030:

1027:

1022:

1018:

1014:

1011:

1008:

1005:

1000:

996:

992:

989:

986:

983:

980:

977:

974:

969:

963:

957:

954:

949:

945:

941:

938:

935:

932:

929:

914:

911:

910:

909:

898:

894:

890:

887:

879:

874:

870:

866:

861:

857:

853:

848:

845:

842:

838:

834:

829:

826:

823:

819:

815:

812:

809:

806:

801:

797:

793:

788:

784:

780:

777:

774:

769:

765:

761:

756:

752:

748:

743:

740:

737:

733:

729:

724:

721:

718:

714:

710:

707:

683:

679:

676:

652:

641:

640:

629:

626:

621:

617:

613:

610:

607:

602:

598:

594:

591:

588:

585:

580:

574:

568:

565:

562:

557:

553:

549:

546:

523:

520:

517:

497:

494:

491:

488:

485:

463:

458:

455:

426:

423:

420:

416:

412:

409:

406:

403:

398:

394:

390:

387:

384:

364:

359:

354:

351:

348:

324:

319:

314:

311:

308:

288:

264:

261:

258:

255:

249:

244:

238:

232:

205:

202:

199:

194:

189:

186:

183:

171:

168:

163:Main article:

160:

157:

140:Markov process

121:

118:

65:property of a

61:refers to the

26:

24:

14:

13:

10:

9:

6:

4:

3:

2:

1969:

1958:

1955:

1953:

1952:Markov models

1950:

1949:

1947:

1927:

1923:

1917:

1914:

1910:

1907:

1901:

1898:

1893:

1891:3-540-04758-1

1887:

1883:

1879:

1873:

1870:

1866:

1862:

1858:

1857:Durrett, Rick

1853:

1850:

1847:

1846:0-19-850994-4

1843:

1839:

1835:

1831:

1826:

1823:

1819:

1815:

1809:

1806:

1799:

1795:

1792:

1790:

1787:

1785:

1782:

1780:

1777:

1775:

1772:

1770:

1767:

1765:

1762:

1761:

1757:

1755:

1753:

1749:

1744:

1740:

1725:

1714:

1703:

1692:

1689:

1685:

1678:

1676:

1674:

1670:

1666:

1662:

1654:

1652:

1638:

1635:

1632:

1623:

1607:

1603:

1580:

1549:

1546:

1543:

1539:

1518:

1515:

1512:

1486:

1483:

1460:

1453:

1452:stopping time

1437:

1408:

1396:

1393:

1390:

1384:

1381:

1378:

1372:

1369:

1366:

1363:

1357:

1347:

1344:

1338:

1333:

1317:

1316:

1315:

1281:

1274:

1273:stopping time

1256:

1253:

1250:

1240:

1221:

1202:

1199:

1189:

1176:

1172:

1153:

1150:

1147:

1144:

1139:

1135:

1128:

1125:

1118:Suppose that

1113:

1111:

1089:

1086:

1083:

1063:

1060:

1057:

1054:

1051:

1020:

1016:

1009:

1006:

998:

994:

987:

981:

975:

967:

955:

947:

943:

936:

930:

920:

919:

918:

912:

896:

888:

885:

872:

868:

864:

859:

855:

851:

846:

843:

840:

836:

832:

827:

824:

821:

817:

810:

807:

799:

795:

791:

786:

782:

778:

775:

772:

767:

763:

759:

754:

750:

746:

741:

738:

735:

731:

727:

722:

719:

716:

712:

705:

698:

697:

696:

677:

674:

666:

650:

627:

619:

615:

611:

608:

605:

600:

596:

589:

586:

578:

566:

563:

560:

555:

551:

544:

537:

536:

535:

521:

518:

515:

495:

492:

489:

486:

483:

456:

453:

446:if, for each

445:

441:

424:

421:

418:

410:

401:

396:

392:

385:

382:

352:

349:

338:

312:

309:

286:

278:

259:

256:

253:

247:

242:

223:

219:

200:

197:

187:

169:

166:

158:

156:

152:

150:

146:

142:

141:

136:

132:

127:

119:

117:

115:

110:

108:

104:

99:

97:

93:

88:

86:

85:stopping time

82:

78:

77:Andrey Markov

75:

74:mathematician

72:

68:

64:

60:

56:

52:

44:

39:

33:

19:

1929:. Retrieved

1916:

1908:

1900:

1881:

1872:

1860:

1852:

1833:

1825:

1817:

1813:

1808:

1794:Markov model

1784:Markov chain

1745:

1741:

1738:

1686:

1682:

1673:Markov model

1658:

1624:

1429:

1117:

1043:

916:

642:

443:

279:) index set

275:, for some (

173:

153:

145:Markov chain

138:

134:

130:

123:

120:Introduction

114:Markov chain

111:

100:

91:

89:

80:

58:

48:

107:Ising model

79:. The term

57:, the term

1946:Categories

1931:2020-07-07

1800:References

1774:Hysteresis

1700:Outcome 2

299:; and let

222:filtration

170:Definition

63:memoryless

55:statistics

1832:. (2006)

1705:Yesterday

1697:Outcome 1

1633:τ

1608:τ

1581:τ

1544:τ

1516:≥

1490:∞

1484:τ

1461:τ

1397:∈

1391:∩

1382:≤

1379:τ

1367:≥

1361:∀

1348:∈

1334:τ

1302:Ω

1282:τ

1254:≥

1187:Ω

1151:≥

1093:→

1061:≥

1055:≥

1010:σ

1007:∣

982:

956:∣

931:

889:∈

852:∣

776:…

747:∣

612:∣

606:∈

567:∣

561:∈

493:∈

476:and each

457:∈

422:∈

408:→

405:Ω

257:∈

185:Ω

135:Markovian

90:The term

1880:(2003).

1758:See also

1727:Tomorrow

1679:Examples

1044:for all

1867:, 2010.

220:with a

159:History

71:Russian

1888:

1844:

1711:Green

1595:given

251:

131:Markov

1730:Green

1716:Today

1430:Then

1218:with

1173:on a

1169:is a

508:with

335:be a

216:be a

1886:ISBN

1842:ISBN

1733:Red

1722:Red

1663:and

1487:<

1076:and

667:and

519:<

339:. A

174:Let

147:and

53:and

1719:Red

1708:Red

1694:Day

1294:on

133:or

49:In

1948::

1924:.

1859:.

1840:.

1836:,

1820:.

1754:.

1675:.

1622:.

1531:,

534:,

151:.

116:.

109:.

101:A

98:.

87:.

1934:.

1894:.

1639:t

1636:=

1604:X

1575:F

1550:t

1547:+

1540:X

1519:0

1513:t

1493:}

1481:{

1438:X

1426:.

1414:}

1409:t

1403:F

1394:A

1388:}

1385:t

1376:{

1373:,

1370:0

1364:t

1358::

1353:F

1345:A

1342:{

1339:=

1328:F

1257:0

1251:t

1247:}

1241:t

1235:F

1229:{

1206:)

1203:P

1200:,

1195:F

1190:,

1184:(

1157:)

1154:0

1148:t

1145::

1140:t

1136:X

1132:(

1129:=

1126:X

1097:R

1090:S

1087::

1084:f

1064:0

1058:s

1052:t

1029:]

1026:)

1021:s

1017:X

1013:(

1004:)

999:t

995:X

991:(

988:f

985:[

979:E

976:=

973:]

968:s

962:F

953:)

948:t

944:X

940:(

937:f

934:[

928:E

897:.

893:N

886:n

878:)

873:n

869:x

865:=

860:n

856:X

847:1

844:+

841:n

837:x

833:=

828:1

825:+

822:n

818:X

814:(

811:P

808:=

805:)

800:1

796:x

792:=

787:1

783:X

779:,

773:,

768:n

764:x

760:=

755:n

751:X

742:1

739:+

736:n

732:x

728:=

723:1

720:+

717:n

713:X

709:(

706:P

682:N

678:=

675:I

651:S

628:.

625:)

620:s

616:X

609:A

601:t

597:X

593:(

590:P

587:=

584:)

579:s

573:F

564:A

556:t

552:X

548:(

545:P

522:t

516:s

496:I

490:t

487:,

484:s

462:S

454:A

425:I

419:t

415:}

411:S

402::

397:t

393:X

389:{

386:=

383:X

363:)

358:S

353:,

350:S

347:(

323:)

318:S

313:,

310:S

307:(

287:I

263:)

260:I

254:s

248:,

243:s

237:F

231:(

204:)

201:P

198:,

193:F

188:,

182:(

34:.

20:)

Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.