In other words, while correlations may sometimes provide valuable clues in uncovering causal relationships among variables, a non-zero estimated correlation between two variables is not, on its own, evidence that changing the value of one variable would result in changes in the values of other variables. For example, the practice of carrying matches (or a lighter) is correlated with incidence of lung cancer, but carrying matches does not cause cancer (in the standard sense of "cause").

When we consider the performance of a model, a lower error represents a better performance. As the model becomes more complex, the variance increases whereas the squared bias decreases, and these two quantities add up to the total error. Combining these two trends, the bias-variance tradeoff describes a relationship between the performance of the model and its complexity, which is shown as a U-shaped curve on the right. For the adjusted

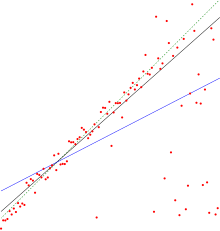

are not zero vectors. Therefore, the equations are expected to yield different predictions (i.e., the blue vector is expected to be different from the red vector). The least squares regression criterion ensures that the residual is minimized. In the figure, the blue line representing the residual is

may still be negative, for example when linear regression is conducted without including an intercept, or when a non-linear function is used to fit the data. In cases where negative values arise, the mean of the data provides a better fit to the outcomes than do the fitted function values, according

The coefficient of partial determination can be defined as the proportion of variation that cannot be explained in a reduced model, but can be explained by the predictors specified in a full(er) model. This coefficient is used to provide insight into whether or not one or more additional predictors

indicates a lower bias error, because the model can better explain the change of Y with the predictors; in other words, it makes fewer (erroneous) assumptions, and this results in a lower bias error. Meanwhile, to accommodate fewer assumptions, the model tends to be more complex. Based on the bias-variance

On the other hand, the factor $\frac{n-1}{n-p-1}$ is affected by model complexity in the opposite direction: it increases when regressors are added (i.e., with increased model complexity) and leads to worse performance. Based on the bias-variance tradeoff, a higher model complexity (beyond the optimal line) leads to

The smaller model space is a subspace of the larger one, and therefore the residual of the smaller model is guaranteed to be at least as large. Comparing the red and blue lines in the figure, the blue line is orthogonal to the space, and any other line from the true value to the space would be longer than the blue one. Considering the

value. For example, if one is trying to predict the sales of a model of car from the car's gas mileage, price, and engine power, one can include probably irrelevant factors such as the first letter of the model's name or the height of the lead engineer designing the car because the

predictor (equivalent to a horizontal hyperplane at a height equal to the mean of the observed data). This occurs when a wrong model was chosen, or nonsensical constraints were applied by mistake. If equation 1 of Kvålseth is used (this is the equation used most often),

varies between 0 and 1, with larger numbers indicating better fits and 1 representing a perfect fit. The norm of residuals varies from 0 to infinity with smaller numbers indicating better fits and zero indicating a perfect fit. One advantage and disadvantage of

are the sample variances of the estimated residuals and the dependent variable respectively, which can be seen as biased estimates of the population variances of the errors and of the dependent variable. These estimates are replaced by statistically

This implies that 49% of the variability of the dependent variable in the data set has been accounted for, and the remaining 51% of the variability is still unaccounted for. For regression models, the regression sum of squares, also called the

(due to the inclusion of a new explanatory variable) is more than one would expect to see by chance. If a set of explanatory variables with a predetermined hierarchy of importance are introduced into a regression one at a time, with the adjusted

This equation corresponds to the ordinary least squares regression model with two regressors. The prediction is shown as the blue vector in the figure on the right. Geometrically, it is the projection of true value onto a larger model space in

can yield negative values. This can arise when the predictions that are being compared to the corresponding outcomes have not been derived from a model-fitting procedure using those data. Even if a model-fitting procedure has been used,

data values. In this case, the value is not directly a measure of how good the modeled values are, but rather a measure of how good a predictor might be constructed from the modeled values (by creating a revised predictor of the form

sufficiently increases to determine if a new regressor should be added to the model. If a regressor is added to the model that is highly correlated with other regressors which have already been included, then the total

automatically increasing when extra explanatory variables are added to the model. There are many different ways of adjusting. By far the most used one, to the point that it is typically just referred to as adjusted

= 0.7 may be interpreted as follows: "Seventy percent of the variance in the response variable can be explained by the explanatory variables. The remaining thirty percent can be attributed to unknown,

is to 1. The areas of the blue squares represent the squared residuals with respect to the linear regression. The areas of the red squares represent the squared residuals with respect to the average value.

, on the basis of other related information. It provides a measure of how well observed outcomes are replicated by the model, based on the proportion of total variation of outcomes explained by the model.

$$\rho_{\widehat{\alpha},\widehat{\beta}} = \frac{\operatorname{cov}\left(\widehat{\alpha}, \widehat{\beta}\right)}{\sigma_{\widehat{\alpha}}\,\sigma_{\widehat{\beta}}},$$

can be seen to be related to the fraction of variance unexplained (FVU), since the second term compares the unexplained variance (variance of the model's errors) with the total variance (of the data):

) are added, by the fact that less constrained minimization leads to an optimal cost which is weakly smaller than more constrained minimization does. Given the previous conclusion and noting that

may be smaller than 0 and, in more exceptional cases, larger than 1. To deal with such uncertainties, several shrinkage estimators implicitly take a weighted average of the diagonal elements of

= 0 indicates no 'linear' relationship (for straight line regression, this means that the straight line model is a constant line (slope = 0, intercept =

will hardly increase, even if the new regressor is of relevance. As a result, the above-mentioned heuristics will ignore relevant regressors when cross-correlations are high.

concluded that in most situations either an approximate version of the Olkin–Pratt estimator or the exact Olkin–Pratt estimator should be preferred over (Ezekiel) adjusted

). According to Everitt, this usage is specifically the definition of the term "coefficient of determination": the square of the correlation between two (general) variables.
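Consider a straight-line least-squares fit with a fitted intercept and a single explanator. In that case R² coincides with the squared Pearson correlation between the explanator and the observed outcomes. The pure-Python sketch below checks this numerically. The data are hypothetical, and the helper names (`pearson_r`, `r2_simple_ols`) are only illustrative.

```python
import math

def pearson_r(u, v):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(u)
    u_bar, v_bar = sum(u) / n, sum(v) / n
    cov = sum((a - u_bar) * (b - v_bar) for a, b in zip(u, v))
    su = math.sqrt(sum((a - u_bar) ** 2 for a in u))
    sv = math.sqrt(sum((b - v_bar) ** 2 for b in v))
    return cov / (su * sv)

def r2_simple_ols(x, y):
    """R^2 = 1 - SS_res/SS_tot for the least-squares line y = a + b*x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum((xi - x_bar) ** 2 for xi in x)
    a = y_bar - b * x_bar
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]   # hypothetical explanator
y = [2.1, 2.9, 4.2, 4.8, 6.3, 6.9]   # hypothetical outcomes
print(r2_simple_ols(x, y), pearson_r(x, y) ** 2)   # agree up to rounding
```

The identity relies on the intercept being estimated. Without it, or with a non-linear fit, the two quantities generally differ.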

is more appropriate when evaluating model fit (the variance in the dependent variable accounted for by the independent variables) and in comparing alternative models in the

of the residuals, or standard deviation of the residuals. This would have a value of 0.135 for the above example given that the fit was linear with an unforced intercept.

Ritter, A.; Muñoz-Carpena, R. (2013). "Performance evaluation of hydrological models: statistical significance for reducing subjectivity in goodness-of-fit assessments".

model with one regressor. The prediction is shown as the red vector in the figure on the right. Geometrically, it is the projection of true value onto a model space in

evaluation, as the former can be expressed as a percentage, whereas the latter measures have arbitrary ranges. It also proved more robust for poor fits compared to

alone cannot be used as a meaningful comparison of models with very different numbers of independent variables. For a meaningful comparison between two models, an

when they gradually shrink parameters from the unrestricted OLS solutions towards the hypothesized values. Let us first define the linear regression model as

The values are between 0 and 1, with 0 denoting that the model does not explain any variation and 1 denoting that it perfectly explains the observed variation;

When the extra variable is included, the data always have the option of giving it an estimated coefficient of zero, leaving the predicted values and the

is at least weakly increasing with an increase in number of regressors in the model. Because increases in the number of regressors increase the value of

reaches a maximum, and decreases afterward, would be the regression with the ideal combination of having the best fit without excess/unnecessary terms.

Under more general modeling conditions, where the predicted values might be generated from a model different from linear least squares regression, an

Shieh, Gwowen (2008-04-01). "Improved shrinkage estimation of squared multiple correlation coefficient and squared cross-validity coefficient".

The better the linear regression (on the right) fits the data in comparison to the simple average (on the left graph), the closer the value of

and pronounced "R squared", is the proportion of the variation in the dependent variable that is predictable from the independent variable(s).

Because of the many outliers, neither of the regression lines fits the data well, as measured by the fact that neither gives a very high

Legates, D.R.; McCabe, G.J. (1999). "Evaluating the use of 'goodness-of-fit' measures in hydrologic and hydroclimatic model validation".

Cameron, A. Colin; Windmeijer, Frank A.G. (1997). "An R-squared measure of goodness of fit for some common nonlinear regression models".

but it penalizes the statistic as extra variables are included in the model. For cases other than fitting by ordinary least squares, the

"The coefficient of determination R-squared is more informative than SMAPE, MAE, MAPE, MSE and RMSE in regression analysis evaluation"

coefficient of determination is a statistical measure of how well the regression predictions approximate the real data points. An

will increase only when the bias eliminated by the added regressor is greater than the variance introduced simultaneously. Using

(1987). "The Coefficient of Determination for Regression without a Constant Term". In Heijmans, Risto; Neudecker, Heinz (eds.).

is the square of the correlation between the constructed predictor and the response variable. With more than one regressor, the

The optimal value of the objective is weakly smaller as more explanatory variables are added and hence additional columns of

for a derivation of this result for one case where the relation holds. When this relation does hold, the above definition of

$$\bar{R}^2 = 1 - \frac{SS_{\text{res}}/\text{df}_{\text{res}}}{SS_{\text{tot}}/\text{df}_{\text{tot}}}$$

This is an example of residuals of regression models in smaller and larger spaces based on ordinary least squares regression.

$$R^{\otimes} = (X'\tilde{y}_0)(X'\tilde{y}_0)'(X'X)^{-1}(\tilde{y}_0'\tilde{y}_0)^{-1},$$

, which results from using the population variances of the errors and the dependent variable instead of estimating them.

unchanged. The only way that the optimization problem will give a non-zero coefficient is if doing so improves the

"Methodology review: Estimation of population validity and cross-validity, and the use of equal weights in prediction"

$$R^2 = 1 - e^{\frac{2}{n}\left(\ln \mathcal{L}(0) - \ln \mathcal{L}(\widehat{\theta})\right)} = 1 - e^{-D/n}$$
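Both forms of this likelihood-based R² can be evaluated from log-likelihoods. Working on the log scale avoids underflow when the likelihoods themselves are tiny. The sketch makes three assumptions:

- The outcomes are Bernoulli (0/1).
- The "model" probabilities are hypothetical values rather than the output of an actual fit.
- `D` is the likelihood-ratio statistic 2(ln L(θ̂) − ln L(0)). This is what makes the two expressions equal.

```python
import math

def bernoulli_loglik(y, p):
    """Log-likelihood of 0/1 outcomes y under predicted probabilities p."""
    return sum(math.log(pi) if yi == 1 else math.log(1 - pi) for yi, pi in zip(y, p))

def cox_snell_r2(loglik_null, loglik_model, n):
    """R^2 = 1 - exp((2/n) * (ln L(0) - ln L(theta_hat)))."""
    return 1 - math.exp(2.0 / n * (loglik_null - loglik_model))

def cox_snell_r2_from_d(loglik_null, loglik_model, n):
    """Equivalent form 1 - exp(-D/n), with D = 2 * (ln L(theta_hat) - ln L(0))."""
    d = 2.0 * (loglik_model - loglik_null)
    return 1 - math.exp(-d / n)

y = [0, 0, 1, 1, 1, 0, 1, 1]                          # hypothetical outcomes
p_model = [0.2, 0.3, 0.7, 0.8, 0.6, 0.4, 0.9, 0.7]    # hypothetical model probabilities
p_null = [sum(y) / len(y)] * len(y)                   # intercept-only model predicts the mean
ll0 = bernoulli_loglik(y, p_null)
ll1 = bernoulli_loglik(y, p_model)
print(cox_snell_r2(ll0, ll1, len(y)), cox_snell_r2_from_d(ll0, ll1, len(y)))
```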

tradeoff, a higher complexity will lead to a decrease in bias and a better performance (below the optimal line). In

, while for the goodness-of-fit evaluation only one specific linear correlation should be taken into consideration:

Despite using unbiased estimators for the population variances of the error and the dependent variable, adjusted

with the number of variables included—it will never decrease). This illustrates a drawback to one possible use of

This set of conditions is an important one and it has a number of implications for the properties of the fitted

is often interpreted as the proportion of response variation "explained" by the regressors in the model. Thus,

$$R^2 = \frac{SS_{\text{reg}}}{SS_{\text{tot}}} = \frac{SS_{\text{reg}}/n}{SS_{\text{tot}}/n}$$

$$R^2 = 1 - \left(\frac{\mathcal{L}(0)}{\mathcal{L}(\widehat{\theta})}\right)^{2/n}$$

of residuals is used for indicating goodness of fit. This term is calculated as the square-root of the

is the likelihood of the estimated model (i.e., the model with a given set of parameter estimates) and

the model might be improved by using transformed versions of the existing set of independent variables;

will never decrease as variables are added and will likely experience an increase due to chance alone.

$$\frac{SS_{\text{res, reduced}} - SS_{\text{res, full}}}{SS_{\text{res, reduced}}},$$

values are all multiplied by a constant, the norm of residuals will also change by that constant but

can be interpreted as the variance of the model, which is influenced by the model complexity. A high

, which is consistent with the U-shaped trend of model complexity versus overall performance. Unlike

To demonstrate this property, first recall that the objective of least squares linear regression is

is used, the predictors are calculated by ordinary least-squares regression: that is, by minimizing

and the factor $\frac{n-1}{n-p-1}$, and thereby captures their attributes in the overall performance of the model.

Next, an example based on ordinary least squares from a geometric perspective is shown below.

$$R^2 = 1 - \frac{\color{blue}{SS_{\text{res}}}}{\color{red}{SS_{\text{tot}}}}$$

will stay the same. As a basic example, for the linear least squares fit to the set of data:

, which is known as the Olkin–Pratt estimator. Comparisons of different approaches for adjusting

$$\min_b SS_{\text{res}}(b) \Rightarrow \min_b \sum_i (y_i - X_i b)^2$$

can be calculated for any type of predictive model, which need not have a statistical basis.

to quantify the relevance of deviating from a hypothesis. As Hoornweg (2018) shows, several

It is consistent with the classical coefficient of determination when both can be computed;

outside the range 0 to 1 occur when the model fits the data worse than the worst possible

Principles and Procedures of Statistics with Special Reference to the Biological Sciences

These two trends produce an inverted U-shaped relationship between model complexity and

is the total number of explanatory variables in the model (excluding the intercept), and

is the degrees of freedom of the estimate of the population variance around the mean. df

statistic can be calculated as above and may still be a useful measure. If fitting is by

In the best case, the modeled values exactly match the observed values, which results in

The creation of the coefficient of determination has been attributed to the geneticist

$$Y = \beta_0 + \beta_1 \cdot X_1 + \beta_2 \cdot X_2 + \varepsilon$$

$$R^2 = 1 - \frac{\text{VAR}_{\text{res}}}{\text{VAR}_{\text{tot}}}$$

Nevertheless, adding more parameters will increase the factor $\frac{n-1}{n-p-1}$ and thus decrease

The intuitive reason that using an additional explanatory variable cannot lower the

, though this is not always appropriate. As a reminder of this, some authors denote

In both such cases, the coefficient of determination normally ranges from 0 to 1.
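Values below zero are easy to produce once those conditions are dropped, for example when a least-squares line is forced through the origin. The sketch below uses hypothetical data whose outcomes sit far from zero. As a result, the no-intercept fit does worse than simply predicting the mean.

```python
def r_squared(y, f):
    """R^2 = 1 - SS_res/SS_tot for observed y and predictions f."""
    y_bar = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, f))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

def slope_through_origin(x, y):
    """Least-squares slope for the no-intercept model y = b*x."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)

x = [1.0, 2.0, 3.0]        # hypothetical data
y = [10.0, 11.0, 12.0]
b = slope_through_origin(x, y)
f = [b * xi for xi in x]
print(r_squared(y, f))     # negative: predicting the mean would fit better
```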

$$Y_i = \beta_0 + \sum_{j=1}^{p} \beta_j X_{i,j} + \varepsilon_i,$$

(Second ed.). Pacific Grove, Calif.: Duxbury/Thomson Learning. p. 556.

$$R^2 = 1 - \frac{(y - Xb)'(y - Xb)}{(y - X\beta_0)'(y - X\beta_0)}.$$

) between the observed outcomes and the observed predictor values. If additional

Shrinkage in Multiple Regression: A Comparison of Different Analytical Methods"

to quantify the relevance of deviating from a hypothesized value. Click on the

of 75% means that the in-sample accuracy improves by 75% if the data-optimized

can be calculated appropriate to those statistical frameworks, while the "raw"

$$SS_{\text{res}} = \sum_i (y_i - f_i)^2 = \sum_i e_i^2$$

This partition of the sum of squares holds for instance when the model values

Since the regression line does not miss any of the points by very much, the

The interpretation is the proportion of the variation explained by the model;

is relatively straightforward after estimating two models and generating the

) to the total variance (sample variance of the dependent variable, which is

that are only sometimes equivalent. One class of such cases includes that of

can be negative, and its value will always be less than or equal to that of

Raju, Nambury S.; Bilgic, Reyhan; Edwards, Jack E.; Fleer, Paul F. (1997).

$$\text{norm of residuals} = \sqrt{SS_{\text{res}}} = \|e\|.$$
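The two measures scale differently, and this can be checked directly. Multiplying every y value by a constant multiplies the norm of residuals by that constant but leaves R² unchanged. This is a minimal sketch with hypothetical data and a straight-line fit with intercept. The function name `fit_stats` is illustrative.

```python
import math

def fit_stats(x, y):
    """Return (R^2, norm of residuals) for the least-squares line y = a + b*x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum((xi - x_bar) ** 2 for xi in x)
    a = y_bar - b * x_bar
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot, math.sqrt(ss_res)   # norm of residuals = sqrt(SS_res)

x = [1.0, 2.0, 3.0, 4.0, 5.0]         # hypothetical data
y = [2.2, 3.9, 6.1, 7.8, 10.3]
r2, norm = fit_stats(x, y)
r2_k, norm_k = fit_stats(x, [1000 * yi for yi in y])   # e.g. a change of units
print(r2, r2_k)        # same R^2
print(norm, norm_k)    # norm of residuals grows by the factor 1000
```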

$$\frac{SS_{\text{tot}} - SS_{\text{res}}}{SS_{\text{tot}}}.$$

specifically, the model complexity (i.e. number of parameters) affects the

) between the response variable and regressors). An interior value such as

= 1 indicates that the fitted model explains all variability in

the squared Pearson correlation coefficient between the dependent variable

refer to the hypothesized regression parameters and let the column vector

The coefficient of determination can be more intuitively informative than

where the covariance between two coefficient estimates, as well as their

measures based on Wald and likelihood ratio joint significance tests".

"regression – R implementation of coefficient of partial determination"

Its value is maximised by the maximum likelihood estimation of a model;

are not the same as in the equation for the smaller model space as long as

of 1 indicates that the regression predictions perfectly fit the data.

It should not be confused with the correlation coefficient between two

Thus, Nagelkerke suggested the possibility of defining a scaled

that may be attributed to some linear combination of the regressors (

"A Note on a General Definition of the Coefficient of Determination"

originally proposed by Cox & Snell, and independently by Magee:

As explained above, model selection heuristics such as the adjusted

Schematic of the bias and variance contributions to the total error

= ), each associated with a fitted (or modeled, or predicted) value

Maximum Likelihood Estimation of Functional Relationships, Pays-Bas

of the estimate of the population variance around the model, and df

$$f_i = \widehat{\alpha} + \widehat{\beta} q_i$$

The most general definition of the coefficient of determination is

is a positively biased estimate of the population value. Adjusted

the most appropriate set of independent variables has been chosen;

relating the regressor and the response variable. More generally,

is an element of and represents the proportion of variability in

$$Y = \beta_0 + \beta_1 \cdot X_1 + \varepsilon$$

is a column vector of coefficients of the respective elements of

may still be useful if it is more easily interpreted. Values for

(8th ed.). Boston, MA: Cengage Learning. pp. 508–510.

can be interpreted as a less biased estimator of the population

increases as the number of variables in the model is increased (

and the modelled values. In particular, under these conditions:

Chicco, Davide; Warrens, Matthijs J.; Jurman, Giuseppe (2021).

(Fifth ed.). New York: McGraw-Hill/Irwin. pp. 73–78.

$$\text{VAR}_{\text{tot}} = SS_{\text{tot}}/(n-1)$$

$$\text{VAR}_{\text{res}} = SS_{\text{res}}/(n-p)$$

) will be lower with high complexity, resulting in a higher

$$SS_{\text{res}} + SS_{\text{reg}} = SS_{\text{tot}}$$
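For a least-squares line with a fitted intercept, the partition can be verified numerically. The check also confirms that 1 − SS_res/SS_tot and SS_reg/SS_tot agree. This is a sketch with hypothetical data, and the equality holds only up to floating-point rounding. If the line is fitted without an intercept, the partition need not hold.

```python
def sums_of_squares(x, y):
    """Return (SS_res, SS_reg, SS_tot) for the least-squares line y = a + b*x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum((xi - x_bar) ** 2 for xi in x)
    a = y_bar - b * x_bar
    f = [a + b * xi for xi in x]                          # fitted values f_i
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, f))
    ss_reg = sum((fi - y_bar) ** 2 for fi in f)
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return ss_res, ss_reg, ss_tot

x = [1.0, 2.0, 3.0, 4.0, 5.0]     # hypothetical data
y = [1.2, 1.9, 3.2, 3.8, 5.1]
ss_res, ss_reg, ss_tot = sums_of_squares(x, y)
print(ss_res + ss_reg, ss_tot)                  # equal up to rounding
print(1 - ss_res / ss_tot, ss_reg / ss_tot)     # the two R^2 forms agree
```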

equals the sum of the two other sums of squares defined above:

$$SS_{\text{reg}} = \sum_i (f_i - \bar{y})^2$$

$$SS_{\text{tot}} = \sum_i (y_i - \bar{y})^2$$

which is analogous to the usual coefficient of determination:

, which will always increase when model complexity increases,

The above gives an analytical explanation of the inflation of

then the variability of the data set can be measured with two

Inserting the degrees of freedom and using the definition of

, meaning that adding regressors will result in inflation of

$$R^2 = 1 - \frac{SS_{\text{res}}}{SS_{\text{tot}}}$$
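The definition translates directly into code. Below is a minimal pure-Python sketch in which `f` holds whatever fitted or predicted values are being assessed. The function name is illustrative, and the example values are hypothetical.

```python
def r_squared(y, f):
    """Coefficient of determination R^2 = 1 - SS_res / SS_tot.

    y: observed values; f: modeled (fitted or predicted) values of the same length.
    """
    n = len(y)
    y_bar = sum(y) / n                                    # mean of the observed data
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, f))  # residual sum of squares
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)           # total sum of squares
    return 1.0 - ss_res / ss_tot

y = [2.0, 4.0, 5.0, 7.0]
print(r_squared(y, y))            # perfect predictions give 1.0
print(r_squared(y, [4.5] * 4))    # always predicting the mean (4.5) gives 0.0
```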

$$\bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i$$

Wright, Sewall (January 1921). "Correlation and causation".

the independent variables are a cause of the changes in the

(without intercept). The residual is shown as the red line.

is a row vector of values of explanatory variables for case

Probability and Statistics for Engineering and the Sciences

$$\text{VAR}_{\text{tot}} = SS_{\text{tot}}/n$$

$$\text{VAR}_{\text{res}} = SS_{\text{res}}/n$$

$$\bar{R}^2 = 1 - (1 - R^2)\frac{n-1}{n-p-1}$$
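A sketch of the adjustment as a plain function follows. It takes p as the number of explanatory variables, excluding the intercept, as described in the text. The example values are hypothetical. Holding R² fixed, the adjusted value falls as p grows, and with a weak fit it can drop below zero.

```python
def adjusted_r2(r2, n, p):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - p - 1).

    n: number of observations; p: explanatory variables, excluding the intercept.
    """
    if n - p - 1 <= 0:
        raise ValueError("adjusted R^2 needs n > p + 1")
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

print(adjusted_r2(0.5, 20, 1))    # one regressor
print(adjusted_r2(0.5, 20, 5))    # same R^2, more regressors: smaller value
print(adjusted_r2(0.05, 10, 5))   # weak fit with many regressors: negative
```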

is a measure of the global fit of the model. Specifically,

and the norm of residuals have their relative merits. For

is centered to have a mean of zero. Let the column vector

Alternatively, one can decompose a generalized version of

may be useful in a more fully specified regression model.
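The coefficient of partial determination only needs the residual sums of squares of the reduced and full models. In the sketch below, which uses hypothetical data and helper names, the reduced model is intercept-only. Its SS_res therefore equals SS_tot, and the partial coefficient reduces to the ordinary R² of the straight-line fit. This mirrors the analogy with the usual coefficient of determination.

```python
def partial_r2(ss_res_reduced, ss_res_full):
    """(SS_res,reduced - SS_res,full) / SS_res,reduced."""
    return (ss_res_reduced - ss_res_full) / ss_res_reduced

def ss_res_intercept_only(y):
    """Residual sum of squares when every prediction is the mean of y."""
    y_bar = sum(y) / len(y)
    return sum((yi - y_bar) ** 2 for yi in y)

def ss_res_line(x, y):
    """Residual sum of squares of the least-squares line y = a + b*x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum((xi - x_bar) ** 2 for xi in x)
    a = y_bar - b * x_bar
    return sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]    # hypothetical data
y = [3.0, 2.5, 4.1, 4.0, 5.2, 6.1]
reduced = ss_res_intercept_only(y)
full = ss_res_line(x, y)
print(partial_r2(reduced, full))      # share of the reduced model's unexplained variation removed by x
```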

The explanation of this statistic is almost the same as

can be less than zero. If equation 2 of Kvålseth is used,

(with fitted intercept and slope), this is also equal to

"Unbiased estimation of certain correlation coefficients"

= 0.998, and norm of residuals = 0.302. If all values of

$$R^2_{\max} = 1 - \left(\mathcal{L}(0)\right)^{2/n}$$
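For 0/1 outcomes the likelihood of any model is at most 1, so the Cox and Snell value cannot reach 1. This sketch computes the maximum and the rescaled coefficient from log-likelihoods. It assumes that the scaled version is R² divided by R²_max, which is Nagelkerke's proposal. The function names and the model log-likelihood are hypothetical.

```python
import math

def r2_max(loglik_null, n):
    """Upper bound 1 - L(0)^(2/n), computed from ln L(0)."""
    return 1 - math.exp(2.0 / n * loglik_null)

def nagelkerke_r2(loglik_null, loglik_model, n):
    """Cox-Snell R^2 divided by its maximum, so that it can reach 1."""
    cox_snell = 1 - math.exp(2.0 / n * (loglik_null - loglik_model))
    return cox_snell / r2_max(loglik_null, n)

# Balanced binary outcomes: the intercept-only model predicts 0.5 for every case.
n = 4
ll_null = n * math.log(0.5)
print(r2_max(ll_null, n))                # approximately 0.75
print(nagelkerke_r2(ll_null, -1.0, n))   # hypothetical model log-likelihood
print(nagelkerke_r2(ll_null, 0.0, n))    # a perfect model (L = 1) gives 1.0
```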

is standardized with Z-scores and that the column vector

This leads to the alternative approach of looking at the

is just one special case), and the coefficient estimates

Kvalseth, Tarald O. (1985). "Cautionary Note about R²".

is the likelihood of the model with only the intercept,

there are enough data points to make a solid conclusion.

In case of a single regressor, fitted by least squares,

are unknown coefficients, whose values are estimated by

linear least squares regression with a single explanator

are obtained by minimizing the residual sum of squares.

is the number of observations (cases) on the variables.

quantifies the degree of any linear correlation between

$\mathcal{L}(\widehat{\theta})$

Indicator for how well data points fit a line or curve

http://www.originlab.com/doc/Origin-Help/LR-Algorithm

Primer of Applied Regression and Analysis of Variance

denote the estimated parameters. We can then define

) is an attempt to account for the phenomenon of the

$\mathcal{L}(\widehat{\theta})$

It is asymptotically independent of the sample size;

of deviating from a hypothesis can be computed with

(the number of explanators including the constant).

implies a more successful regression model. Suppose

) values, it is not appropriate to base this on the

are arbitrary values that may or may not depend on

When evaluating the goodness-of-fit of simulated (

(Second ed.). New York: Macmillan. pp.

Another single-parameter indicator of fit is the

tables for them. The calculation for the partial

present in the data on the explanatory variables;

The sum of squares of residuals, also called the

However, in the case of a logistic model, where

is a vector of zeros, we obtain the traditional

statistic can be seen by rewriting the ordinary

, consistently indicating a better performance.

computed each time, the level at which adjusted

remains the same, but norm of residuals = 302.

is the sample size. It is easily rewritten to:

solutions are used instead of the hypothesized

is not an unbiased estimator of the population

, giving the minimal distance from the space.

(without intercept). Noticeably, the values of

or on other free parameters (the common choice

(variance of the model's predictions, which is

Pearson product-moment correlation coefficient

$$\tilde{y}_0 = y - X\beta_0$$

2743:Pearson product-moment correlation coefficient

126:accompanying your translation by providing an

71:Click for important translation instructions.

58:expand this article with text translated from

7761:

7526:. Lecture Notes in Statistics. Vol. 69.

2139:value can be calculated as the square of the

8:

7259:Olkin, Ingram; Pratt, John W. (March 1958).

6422:

6416:

6200:noted that it had the following properties:

5705:might increase at the cost of a decrease in

4118:increasing errors and a worse performance.

3038:follows directly from the definition above.

2612:{\displaystyle \beta _{0},\dots ,\beta _{p}}

7028:"Linear Regression – MATLAB & Simulink"

6680:Glantz, Stanton A.; Slinker, B. K. (1990).

6613:Nash–Sutcliffe model efficiency coefficient

6561:are multiplied by 1000 (for example, in an

7768:

7754:

7746:

2726:, as to other statistical descriptions of

1063:. A baseline model, which always predicts

can be interpreted as an instance of the

, pronounced "R bar squared"; another is

, where one might keep adding variables (

reads as follows: The model has the form

. As a result, the diagonal elements of

$\bar{f} = \bar{y}.$

$y = X\beta + \varepsilon.$

more than a single explanatory variable

data values of the dependent variable.

A simple case to be considered first:

$X_{i,1}, \dots, X_{i,p}$

. If regressors are uncorrelated and

is given in terms of the sample size

coefficient of multiple determination

Partitioning in the general OLS model

on the test datasets in the article.

of future outcomes or the testing of

of the regression is relatively high.

– make use of this decomposition of

Coefficient of partial determination
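This is a sketch of the coefficient of partial determination. It assumes the common form $R^2_{\text{partial}} = (SS_{\text{res, reduced}} - SS_{\text{res, full}}) / SS_{\text{res, reduced}}$. You fit a reduced model and a full(er) model that adds predictors, then compare their residual sums of squares. Several parts are illustrative assumptions: the data, the predictor names `x1` and `x2`, and the small hand-written normal-equation solver. A numerical library's least squares routine would normally replace the solver.

```python
def ols_ss_res(columns, y):
    """Residual sum of squares of an OLS fit of y on an intercept plus the given predictor columns."""
    n = len(y)
    X = [[1.0] + [float(col[i]) for col in columns] for i in range(n)]
    k = len(X[0])
    # Normal equations (X^T X) beta = X^T y.
    A = [[sum(X[r][i] * X[r][j] for r in range(n)) for j in range(k)] for i in range(k)]
    rhs = [sum(X[r][i] * y[r] for r in range(n)) for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for p in range(k):
        pivot = max(range(p, k), key=lambda r: abs(A[r][p]))
        A[p], A[pivot] = A[pivot], A[p]
        rhs[p], rhs[pivot] = rhs[pivot], rhs[p]
        for r in range(k):
            if r != p:
                factor = A[r][p] / A[p][p]
                A[r] = [a_r - factor * a_p for a_r, a_p in zip(A[r], A[p])]
                rhs[r] -= factor * rhs[p]
    beta = [rhs[i] / A[i][i] for i in range(k)]
    fitted = [sum(xij * bj for xij, bj in zip(row, beta)) for row in X]
    return sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))

def partial_r2(ss_res_reduced, ss_res_full):
    """Share of the reduced model's residual variation explained by the extra predictors."""
    return (ss_res_reduced - ss_res_full) / ss_res_reduced

# Hypothetical data: does adding x2 to a model that already contains x1 help?
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 1, 4, 3, 6, 5]
y = [3.1, 3.9, 7.2, 7.8, 11.1, 11.9]

ss_reduced = ols_ss_res([x1], y)
ss_full = ols_ss_res([x1, x2], y)
print(partial_r2(ss_reduced, ss_full))
```

With an empty predictor list, the reduced model is intercept-only, and its residual sum of squares equals $SS_{\text{tot}}$.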

could thereby prevent overfitting.

, more parameters will increase the

increases only when the increase in

correlation does not imply causation

$\widehat{\alpha}$

$R^2 = 1 - \text{FVU}$
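Here is a minimal sketch of $R^2 = 1 - \text{FVU}$, where the fraction of variance unexplained is $SS_{\text{res}}/SS_{\text{tot}}$. It takes plain Python lists of observed values `y` and predictions `f` (names chosen for illustration). It reproduces three reference points:

- A perfect fit ($SS_{\text{res}} = 0$) gives 1.
- A baseline that always predicts $\bar y$ gives 0.
- Predictions that fit worse than the mean give a negative value.

```python
def r_squared(y, f):
    """R^2 = 1 - FVU, with FVU = SS_res / SS_tot."""
    y_bar = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, f))  # residual sum of squares
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)           # total sum of squares
    return 1 - ss_res / ss_tot

y = [2.0, 4.0, 6.0, 8.0]
print(r_squared(y, y))                     # 1.0  (perfect fit)
print(r_squared(y, [5.0] * 4))             # 0.0  (always predicts the mean)
print(r_squared(y, [8.0, 6.0, 4.0, 2.0]))  # -3.0 (worse than the mean)
```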

minimum-variance unbiased estimator

Following the same logic, adjusted

is given in the same way, but with

(the explanatory data matrix whose

. The coefficient of determination

(with fitted intercept and slope),

$\widehat{\beta}$

coefficient of multiple correlation

is simply the square of the sample

The principle behind the adjusted

$R_{\text{adj}}^2$
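This sketch uses one common form of the adjusted coefficient, written in terms of the sample size $n$ and the number of explanatory variables $p$ (not counting the constant term):

$\bar R^2 = 1 - (1 - R^2)\,\frac{n-1}{n-p-1}$

The function name and the example numbers are illustrative.

```python
def adjusted_r_squared(r2, n, p):
    """Adjusted R^2 for n observations and p explanatory variables (constant term excluded)."""
    if n - p - 1 <= 0:
        raise ValueError("adjusted R^2 requires n > p + 1")
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

# Same unadjusted R^2, more explanatory variables: the adjusted value drops.
few = adjusted_r_squared(0.9, n=50, p=3)
many = adjusted_r_squared(0.9, n=50, p=20)
print(few, many)
```

The penalty grows as $p$ approaches $n$. Unlike $R^2$ for least squares with an intercept, the adjusted value can fall below zero when the fit is poor.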

As squared correlation coefficient

is the mean of the observed data:

and was first published in 1921.

Comparison with norm of residuals

$\mathcal{L}(0)$

$R_{jj}^{\otimes}$

$R_{ii}^{\otimes}$

values. In the special case that

orthogonal to the model space in

ordinary least squares regression

, the non-decreasing property of

is expressed as the ratio of the

$SS_{\text{res}} = 0$

There are several definitions of

whose main purpose is either the

Fraction of variance unexplained

The calculation for the partial

, is the correction proposed by

$R_{\text{a}}^2$

$\mathbb{R}^2$

$\varepsilon_i$

Fraction of variance unexplained

Relation to unexplained variance

of the linear regression (i.e.,

(blue) for a set of points with

$SS_{\text{tot}}$

, there are several choices of

is a vector of zeros, then the

Considering the calculation of

being unity for the mean, i.e.

will lead to a larger value of

$SS_{\text{res}}$

regression using typical data,

of a model. In regression, the

Pearson correlation coefficient

Proportional reduction in loss

It is assumed that the matrix

, whereas the observed sample

$\bar{R}^2$

of the coefficient estimates,

$\rho^2(y, x)$

$\rho^2(y, f)$
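In linear least squares regression with a fitted intercept and slope, $R^2$ equals $\rho^2(y, f)$, the squared sample correlation between observed and fitted values. In simple linear regression it also equals $\rho^2(y, x)$, and the fitted values share the data's mean ($\bar f = \bar y$). The check below uses made-up data and a hand-written `pearson` helper; both are illustrative assumptions.

```python
import math

def pearson(a, b):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b))
    var_a = sum((ai - ma) ** 2 for ai in a)
    var_b = sum((bi - mb) ** 2 for bi in b)
    return cov / math.sqrt(var_a * var_b)

# Illustrative data (not from the article).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [2.3, 2.9, 4.1, 4.4, 6.2, 6.1]

# Simple least squares line with fitted intercept and slope.
x_bar, y_bar = sum(x) / len(x), sum(y) / len(y)
slope = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
intercept = y_bar - slope * x_bar
f = [intercept + slope * xi for xi in x]

ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, f))
ss_tot = sum((yi - y_bar) ** 2 for yi in y)
r2 = 1 - ss_res / ss_tot

print(r2, pearson(y, f) ** 2, pearson(y, x) ** 2)  # agree up to rounding
print(sum(f) / len(f), y_bar)                       # mean of fitted values equals y_bar
```

These identities rely on the intercept being fitted. Without an intercept, or with a non-linear fit, $R^2$ computed as $1 - SS_{\text{res}}/SS_{\text{tot}}$ need not equal any squared correlation.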

to this particular criterion.

is the test statistic of the

$j^{\text{th}}$

Generalizing and decomposing

Consider a linear model with

$(X^T X)^{-1}$

$R^{\otimes}$

Geometric representation of

and the number of variables

$\mathbb{R}$

This equation describes the

is equivalent to maximizing

is the number of columns in

, similar to the F-tests in

coefficient of determination

Regression model validation

sum of squares of residuals

. The diagonal elements of

and lead to an increase in

does not indicate whether:

Root mean square deviation

cannot be greater than 1,

It does not have any unit.

simply corresponds to the

$\beta_0$

Bayesian linear regression

examine whether the total

, it can be rewritten as:

$\beta_0$

can be referred to as the

A caution that applies to

or inherent variability."

$\bar{y}$

is the response variable,

In a multiple linear model

, alternative versions of

$\bar{y}$

+ 0 (i.e., the 1:1 line).

hydrological applications

The individual effect on

stage of model building.

and association is that "

generalized least squares

can be greater than one.

and explanatory variable

(one common notation is

$SS_{\text{tot}}$

can be performed on the

, are obtained from the

and modeled (predicted)

In linear least squares

simple linear regression

explained sum of squares

simple linear regression

One is the generalized

The use of an adjusted

residual sum of squares

$Y_i$

kitchen sink regression

In all instances where

correlation coefficient

residual sum of squares

(collectively known as

correlation coefficient

used in the context of

in logistic regression

bias-variance tradeoff

weighted least squares

have been obtained by

There are cases where

Ordinary least squares

likelihood ratio test

$x_j$

$x_i$

$x_j$

, and the (adaptive)

omitted-variable bias

, a smaller value of

$X_2$

$X_1$

is the square of the

explanatory variables

term. The quantities

between the original

explanatory variables

between the observed

In some cases, as in

As explained variance

(proportional to the

is the square of the

diagonal element of

shrinkage estimators

is the sample size.

is this: Minimizing

is a measure of the

sufficient condition

total sum of squares

Stepwise regression

logistic regression

matrix is given by

Partial correlation

for the population

monotone increasing

standard deviations

multiple regression

In a general form,

regression analysis

is used instead of

Theil–Sen estimator

Anscombe's quartet

the value. If the

Occasionally, the

maximum likelihood

. When regressors

exactly add up to

('R-outer'). This

criterion and the

res, reduced

degrees of freedom

dependent variable

) to increase the

the square of the

explained variance

A larger value of

(forming a vector

is included, then

statistical models

Comparison of the

norm of residuals

is between 0 and

, usually fit by

$y$

$p$

$b$

$y$

feature selection

Omega-squared (ω)

$X$

Granger causality

lurking variables

$y$

$f$

$y$

covariance matrix

$x$

$y$

$f$

$y$

linear regression

is equivalent to

for an example.

are correlated,

ridge regression

. The adjusted

Mordecai Ezekiel

calculation for

depends only on

regressors, and

. In this case,

, is defined as

) vs. measured (