(in the given document) and a low document frequency of the term in the whole collection of documents; the weights hence tend to filter out common terms. Since the ratio inside the idf's log function is always greater than or equal to 1, the value of idf (and tf–idf) is greater than or equal to 0. As a term appears in more documents, the ratio inside the logarithm approaches 1, bringing the idf and tf–idf closer to 0.
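The behaviour of idf described here can be checked numerically. The following is a minimal sketch, assuming a base-10 logarithm and a hypothetical corpus of 37 documents; the helper name `idf` is illustrative and not taken from any library.

```python
import math

def idf(n_docs: int, n_containing: int) -> float:
    """Plain idf: log10 of (total documents / documents containing the term)."""
    return math.log10(n_docs / n_containing)

N = 37  # assumed corpus size, for illustration only
document_frequencies = [1, 5, 20, 37]
values = [idf(N, n_t) for n_t in document_frequencies]

for n_t, value in zip(document_frequencies, values):
    print(f"n_t = {n_t:2d}  idf = {value:.3f}")
```

The printed values shrink as the document frequency grows, and a term that occurs in every document gets an idf of exactly 0.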

5244:

different domains. Another derivative is TF–IDuF. In TF–IDuF, idf is not calculated based on the document corpus that is to be searched or recommended. Instead, idf is calculated on users' personal document collections. The authors report that TF–IDuF was as effective as tf–idf but could also be applied in situations where, e.g., a user modeling system has no access to a global document corpus.


addition, tf–idf was applied to "visual words" for object matching in videos, and to entire sentences. However, tf–idf did not prove more effective than a plain tf scheme (without idf) in all cases. When tf–idf was applied to citations, researchers could find no improvement over a simple citation-count weight that had no idf component.


This expression shows that summing the tf–idf of all possible terms and documents recovers the mutual information between documents and terms, taking into account all the specificities of their joint distribution. Each tf–idf hence carries the "bit of information" attached to a term–document pair.


A number of term-weighting schemes have been derived from tf–idf. One of them is TF–PDF (term frequency × proportional document frequency). TF–PDF was introduced in 2001 in the context of identifying emerging topics in the media. The PDF component measures the difference of how often a term occurs in

5234:

The idea behind tf–idf also applies to entities other than terms. In 1998, the concept of idf was applied to citations. The authors argued that "if a very uncommon citation is shared by two documents, this should be weighted more highly than a citation made by a large number of documents". In

6024:

MATLAB toolbox that can be used for various tasks in text mining (TM), specifically (i) indexing, (ii) retrieval, (iii) dimensionality reduction, (iv) clustering, and (v) classification. The indexing step offers the user the ability to apply local and global weighting methods, including


$$M(\mathcal{T};\mathcal{D}) = \sum_{t,d} p_{t|d}\cdot p_{d}\cdot \mathrm{idf}(t) = \sum_{t,d} \mathrm{tf}(t,d)\cdot \frac{1}{|D|}\cdot \mathrm{idf}(t) = \frac{1}{|D|}\sum_{t,d} \mathrm{tf}(t,d)\cdot \mathrm{idf}(t).$$

881:

", and "salad" appear in very few plays, so seeing these words, one could get a good idea as to which play it might be. In contrast, "good" and "sweet" appear in every play and are completely uninformative as to which play it is.


In its raw frequency form, tf is just the count of the word "this" in each document. In each document, the word "this" appears once; but as document 2 has more words, its relative frequency is smaller.

1548:

inverse fraction of the documents that contain the word (obtained by dividing the total number of documents by the number of documents containing the term, and then taking the logarithm of that quotient):


$$\mathrm{tfidf}(\text{"example"}, d_{2}, D) = \mathrm{tf}(\text{"example"}, d_{2}) \times \mathrm{idf}(\text{"example"}, D) = 0.429 \times 0.301 \approx 0.129$$
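The worked example can be reproduced with a few lines of Python. This is a sketch under stated assumptions: the text only fixes the counts of "this" and "example" and the document lengths (5 and 7 terms), so the remaining table entries below are placeholder words chosen to match those totals. The function names are illustrative, and the logarithm is base 10 as in the example.

```python
import math

# Term-count tables for the two-document corpus.
# Only "this", "example" and the totals (5 and 7) come from the text;
# the other words are assumed placeholders.
d1 = {"this": 1, "is": 1, "a": 2, "sample": 1}
d2 = {"this": 1, "is": 1, "another": 2, "example": 3}
corpus = [d1, d2]

def tf(term, doc):
    """Relative frequency of a term within one document."""
    return doc.get(term, 0) / sum(doc.values())

def idf(term, docs):
    """log10(N / n_t); raises ZeroDivisionError if no document contains the term."""
    n_t = sum(1 for d in docs if term in d)
    return math.log10(len(docs) / n_t)

def tfidf(term, doc, docs):
    return tf(term, doc) * idf(term, docs)

for term in ("this", "example"):
    for name, doc in (("d1", d1), ("d2", d2)):
        print(term, name, round(tfidf(term, doc, corpus), 3))
```

The word "this" scores 0 in both documents because its idf is 0, while "example" scores 0 in the first document and about 0.129 in the second.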

$$H(\mathcal{D}|\mathcal{T}=t) = -\sum_{d} p_{d|t}\log p_{d|t} = -\log \frac{1}{|\{d\in D:t\in d\}|} = \log \frac{|\{d\in D:t\in d\}|}{|D|} + \log |D| = -\mathrm{idf}(t) + \log |D|$$
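The identity between the conditional entropy and idf can be verified numerically. The sketch below assumes, as the derivation does, that a document is drawn uniformly from those containing the term; the corpus size and document frequency are arbitrary illustrative values, and natural logarithms are used on both sides.

```python
import math

def conditional_entropy_uniform(n_containing: int) -> float:
    """Entropy of a uniform choice among the documents that contain the term."""
    p = 1.0 / n_containing
    return -sum(p * math.log(p) for _ in range(n_containing))

def idf(n_docs: int, n_containing: int) -> float:
    """idf with a natural logarithm, to match the entropy above."""
    return math.log(n_docs / n_containing)

n_docs, n_containing = 37, 5  # assumed values, for illustration only
lhs = conditional_entropy_uniform(n_containing)
rhs = -idf(n_docs, n_containing) + math.log(n_docs)
print(lhs, rhs)
```

Both sides agree up to floating-point rounding, whichever logarithm base is used, as long as the same base appears on both sides.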


$$\mathrm{tfidf}(\text{"example"}, d_{1}, D) = \mathrm{tf}(\text{"example"}, d_{1}) \times \mathrm{idf}(\text{"example"}, D) = 0 \times 0.301 = 0$$

512:

. A survey conducted in 2015 showed that 83% of text-based recommender systems in digital libraries used tf–idf. Variations of the tf–idf weighting scheme were often used by

$$M(\mathcal{T};\mathcal{D}) = H(\mathcal{D}) - H(\mathcal{D}|\mathcal{T}) = \sum_{t} p_{t}\cdot \left(H(\mathcal{D}) - H(\mathcal{D}|\mathcal{T}=t)\right) = \sum_{t} p_{t}\cdot \mathrm{idf}(t)$$


augmented frequency, to prevent a bias towards longer documents, e.g. raw frequency divided by the raw frequency of the most frequently occurring term in the document:
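A minimal sketch of the augmented frequency follows, assuming the document is already tokenised into a non-empty list of terms. The function name `augmented_tf` is illustrative.

```python
from collections import Counter

def augmented_tf(term, tokens):
    """0.5 + 0.5 * f(t, d) / max over t' of f(t', d), for a non-empty token list."""
    counts = Counter(tokens)
    return 0.5 + 0.5 * counts[term] / max(counts.values())

tokens = "a a b".split()
print(augmented_tf("a", tokens))  # most frequent term in the document
print(augmented_tf("b", tokens))
print(augmented_tf("z", tokens))  # term that does not occur in the document
```

Because the raw count is divided by the largest count in the same document, the most frequent term always scores 1.0 regardless of document length. Note that, with the formula taken literally, a term absent from the document still receives 0.5.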


; it helps to understand why their product has a meaning in terms of joint informational content of a document. A characteristic assumption about the distribution


$$\begin{aligned}\mathrm{idf} &= -\log P(t|D)\\ &= \log \frac{1}{P(t|D)}\\ &= \log \frac{N}{|\{d\in D:t\in d\}|}\end{aligned}$$

3990:

2231:

(1972) conceived a statistical interpretation of term-specificity called Inverse Document Frequency (idf), which became a cornerstone of term weighting:

777:

. However, applying such information-theoretic notions to problems in information retrieval leads to difficulties when trying to define the appropriate


for the ratio of documents that include the word "this". In this case, we have a corpus of two documents and both of them include the word "this".


5720:"Evaluating the CC-IDF citation-weighting scheme – How effectively can 'Inverse Document Frequency' (IDF) be applied to references?"

112:

is computed by summing the tf–idf for each query term; many more sophisticated ranking functions are variants of this simple model.
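The simple ranking model described here, where a document's score is the sum of the tf–idf values of the query terms, can be sketched as follows. The sketch assumes whitespace tokenisation, relative term frequency, a base-10 idf and non-empty documents; the function name `rank` and the sample documents are hypothetical.

```python
import math
from collections import Counter

def rank(query, documents):
    """Return document indices ordered by summed tf-idf of the query terms, plus the scores."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: number of documents in which each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term in df:  # skip query terms that never occur in the corpus
                score += counts[term] / len(tokens) * math.log10(n_docs / df[term])
        scores.append(score)
    order = sorted(range(n_docs), key=lambda i: scores[i], reverse=True)
    return order, scores

docs = ["the cat sat", "the dog barked loudly", "the cat chased the dog"]
order, scores = rank("cat", docs)
print(order, scores)
```

Query terms that are missing from the corpus are skipped rather than scored, which sidesteps the division by zero in the plain idf.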

98:

2146:

, adjusted for the fact that some words appear more frequently in general. Like the bag-of-words model, it models a document as a

374:

5259:

, its theoretical foundations have been troublesome for at least three decades afterward, with many researchers trying to find

2390:

1312:

2732:

4192:

So tf–idf is zero for the word "this", which implies that the word is not very informative as it appears in all documents.

1965:

). If the term is not in the corpus, this will lead to a division-by-zero. It is therefore common to adjust the numerator

5947:

Wu, H. C.; Luk, R.W.P.; Wong, K.F.; Kwok, K.L. (2008). "Interpreting TF-IDF term weights as making relevance decisions".

6003:

5756:

Proceedings Third International Workshop on Advanced Issues of E-Commerce and Web-Based Information Systems. WECWIS 2001

1544:

is a measure of how much information the word provides, i.e., how common or rare it is across all documents. It is the

6054:

5657:

Sivic, Josef; Zisserman, Andrew (2003-01-01). "Video Google: A text retrieval approach to object matching in videos".

5264:

6049:

5701:

1483:

127:

The specificity of a term can be quantified as an inverse function of the number of documents in which it occurs.

5269:

2676:

1155:

920:(counting each occurrence of the same term separately). There are various other ways to define term frequency:

6028:

3480:, the unconditional probability to draw a term, with respect to the (random) choice of a document, to obtain:

1800:

3791:

Suppose that we have term count tables of a corpus consisting of only two documents, as listed on the right.

1872:

5759:

5424:

2818:

This assumption and its implications, according to Aizawa: "represent the heuristic that tf–idf employs."

2342:

131:

For example, the df (document frequency) and idf for some words in Shakespeare's 37 plays are as follows:

1726:

5807:"TF-IDuF: A Novel Term-Weighting Scheme for User Modeling based on Users' Personal Document Collections"


$$\left(0.5 + 0.5\frac{f_{t,q}}{\max_{t} f_{t,q}}\right)\cdot \log \frac{N}{n_{t}}$$

278:

A formula that aims to define the importance of a keyword or phrase within a document or a web page.

5764:

5512:

2822:

2664:

Namely, the inverse document frequency is the logarithm of "inverse" relative document frequency.


The word "example" is more interesting - it occurs three times, but only in the second document:


$$\mathrm{idf}(\text{"example"}, D) = \log \left(\frac{2}{1}\right) = 0.301$$


Speech and Language Processing (3rd ed. draft), Dan Jurafsky and James H. Martin, chapter 14.

5415:(1972). "A Statistical Interpretation of Term Specificity and Its Application in Retrieval".


$$\mathrm{tf}(t,d) = 0.5 + 0.5\cdot \frac{f_{t,d}}{\max\{f_{t',d} : t'\in d\}}$$

301:

$$\mathrm{idf}(\text{"this"}, D) = \log \left(\frac{2}{2}\right) = 0$$

1846:

5754:

Khoo Khyou Bun; Bun, Khoo Khyou; Ishizuka, M. (2001). "Emerging Topic Tracking System".

$$\mathrm{tf}(\text{"example"}, d_{2}) = \frac{3}{7} \approx 0.429$$

1937:

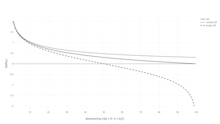

Plot of different inverse document frequency functions: standard, smooth, probabilistic.

$$\mathrm{idf}(t,D) = \log \frac{N}{|\{d : d\in D \text{ and } t\in d\}|}$$

5254:

2848:

2828:

2368:

Spärck Jones's own explanation did not propose much theory, aside from a connection to

1780:

1667:

5594:

5534:(2004). "Understanding inverse document frequency: On theoretical arguments for IDF".


Proceedings of the second international conference on Autonomous agents - AGENTS '98

5602:

$$\mathrm{tf}(\text{"this"}, d_{2}) = \frac{1}{7} \approx 0.14$$

3223:

are "random variables" corresponding to respectively draw a document or a term. The

6016:

5939:

5791:

5643:

5446:

$$\mathrm{tfidf}(t,d,D) = \mathrm{tf}(t,d)\cdot \mathrm{idf}(t,D)$$

5658:

1933:

5581:

Aizawa, Akiko (2003). "An information-theoretic perspective of tf–idf measures".

5488:

5473:

5460:

5341:

2687:

Both term frequency and inverse document frequency can be formulated in terms of

5838:

5279:

2679:: not only documents need to be taken into account, but also queries and terms.

2672:

86:

63:

5773:

$$\mathrm{tf}(t,d) = \frac{f_{t,d}}{\sum_{t'\in d} f_{t',d}}$$
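The relative term frequency above is the raw count of the term divided by the total number of terms in the document. A minimal sketch, assuming the document is given as a non-empty list of tokens (the function name and sample tokens are illustrative):

```python
from collections import Counter

def term_frequency(term, tokens):
    """Raw count of `term` divided by the total number of tokens in the document."""
    counts = Counter(tokens)
    return counts[term] / len(tokens)

tokens = "this is a a sample".split()
print(term_frequency("this", tokens))
print(term_frequency("a", tokens))
```

Every occurrence of a term is counted separately in the denominator, so the values for all distinct terms in one document sum to 1.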

5702:"Sentence Extraction by tf/idf and Position Weighting from Newspaper Articles"

5668:

5547:

5382:

5299:

5284:

$$\log \left(\frac{\max_{\{t'\in d\}} n_{t'}}{1 + n_{t}}\right)$$

71:

5616:

Bollacker, Kurt D.; Lawrence, Steve; Giles, C. Lee (1998-01-01). "CiteSeer".

5390:

5326:

$$\mathrm{tf}(\text{"example"}, d_{1}) = \frac{0}{5} = 0$$

$$\mathrm{tfidf}(\text{"this"}, d_{2}, D) = 0.14 \times 0 = 0$$

$$0.5 + 0.5\cdot \frac{f_{t,d}}{\max_{\{t'\in d\}} f_{t',d}}$$

275:. There are various ways for determining the exact values of both statistics.

5995:

is a Python library for vector space modeling and includes tf–idf weighting.

5960:

$$\mathrm{tfidf}(\text{"this"}, d_{1}, D) = 0.2 \times 0 = 0$$

$$\mathrm{tf}(\text{"this"}, d_{1}) = \frac{1}{5} = 0.2$$

2358:

6021:

5625:

2865:(and assuming that all documents have equal probability to be chosen) is:

5289:

2667:

This probabilistic interpretation in turn takes the same form as that of

67:

59:

5969:

5872:

5859:; Fox, E. A.; Wu, H. (1983). "Extended Boolean information retrieval".

3888:

The calculation of tf–idf for the term "this" is performed as follows:

1947:

Variants of term frequency-inverse document frequency (tf–idf) weights

914:. Note the denominator is simply the total number of terms in document

$$K + (1-K)\frac{f_{t,d}}{\max_{\{t'\in d\}} f_{t',d}}$$

78:, by allowing the weight of words to depend on the rest of the corpus.

17:

6007:

5992:

5930:

5881:

5438:

5660:

Proceedings Ninth IEEE International Conference on Computer Vision

1932:

251:

$$\log \frac{N}{n_{t}} = -\log \frac{n_{t}}{N}$$

5365:

Breitinger, Corinna; Gipp, Bela; Langer, Stefan (2015-07-26).

2376:

footing, by estimating the probability that a given document

$$(1 + \log f_{t,d})\cdot \log \frac{N}{n_{t}}$$

906:

of a term in a document, i.e., the number of times that term


$$f_{t,d} \Bigg/ \sum_{t'\in d} f_{t',d}$$

5367:"Research-paper recommender systems: a literature survey"

$$P(t|D) = \frac{|\{d\in D:t\in d\}|}{N},$$

$$\log \left(\frac{N}{1 + n_{t}}\right) + 1$$

5903:"Term-weighting approaches in automatic text retrieval"

$$p(d|t) = \frac{1}{|\{d\in D:t\in d\}|}$$

2845:, conditional to the fact it contains a specific term

97:

as a central tool in scoring and ranking a document's

5474:"Scoring, term weighting, and the vector space model"


$$f_{t,d}\cdot \log \frac{N}{n_{t}}$$

1144:

Variants of inverse document frequency (idf) weight


2357:in a 1972 paper. Although it has worked well as a

2341:A high weight in tf–idf is reached by a high term


27:Estimate of the importance of a word in a document

5472:Manning, C.D.; Raghavan, P.; Schutze, H. (2008).


5461:https://web.stanford.edu/~jurafsky/slp3/14.pdf

2825:of a "randomly chosen" document in the corpus

$$\log \frac{N - n_{t}}{n_{t}}$$

267:The tf–idf is the product of two statistics,

58:, is a measure of importance of a word to a

8:

5833:Introduction to modern information retrieval


2353:Idf was introduced as "term specificity" by


1777: : number of documents where the term

5718:Beel, Joeran; Breitinger, Corinna (2017).

5371:International Journal on Digital Libraries

2372:. Attempts have been made to put idf on a

1684:: total number of documents in the corpus


1942:Term frequency–inverse document frequency


1212:{\displaystyle n_{t}=|\{d\in D:t\in d\}|}


56:term frequency–inverse document frequency

3840:

3793:

1945:

1836:{\displaystyle \mathrm {tf} (t,d)\neq 0}

1478:probabilistic inverse document frequency

1142:

281:

133:

6045:Statistical natural language processing

5949:ACM Transactions on Information Systems

5910:Information Processing & Management

5317:

283:Variants of term frequency (tf) weight


85:in searches of information retrieval,

5583:Information Processing and Management

5566:Introduction to Information Retrieval

5481:Introduction to Information Retrieval

1922:{\displaystyle 1+|\{d\in D:t\in d\}|}
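The adjustment that avoids a division by zero for unseen terms, using 1 + N as the numerator and 1 + |{d ∈ D : t ∈ d}| as the denominator, can be sketched as follows. The function name `idf_adjusted` and the base-10 logarithm are assumptions made for illustration.

```python
import math

def idf_adjusted(n_docs: int, n_containing: int) -> float:
    """idf with 1 added to numerator and denominator, defined even when n_containing is 0."""
    return math.log10((1 + n_docs) / (1 + n_containing))

print(idf_adjusted(2, 0))  # term absent from the corpus: finite value instead of an error
print(idf_adjusted(2, 1))
print(idf_adjusted(2, 2))  # term present in every document
```

With this variant a term that occurs in every document still gets an idf of 0, while a term that occurs in no document gets a finite, maximal value.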

74:. It is a refinement over the simple

7:

5987:External links and suggested reading

5707:. National Institute of Informatics.

5325:Rajaraman, A.; Ullman, J.D. (2011).

2384:as the relative document frequency,

759:, is the relative frequency of term

5805:Langer, Stefan; Gipp, Bela (2017).

5727:Proceedings of the 12th IConference

4081:An idf is constant per corpus, and

1770:{\displaystyle |\{d\in D:t\in d\}|}

5305:SMART Information Retrieval System


5562:Probability estimates in practice

1307:inverse document frequency smooth

507:{\displaystyle \log(1+f_{t,d})}

6022:Text to Matrix Generator (TMG)

6004:tf–idf and related definitions


1375:inverse document frequency max


6031:Explanation of term-frequency

5595:10.1016/S0306-4573(02)00021-3

2479:so that we can define idf as

2225:Then tf–idf is calculated as

5922:10.1016/0306-4573(88)90021-0

5663:. ICCV '03. pp. 1470–.

5513:"TFIDF statistics | SAX-VSM"

5489:10.1017/CBO9780511809071.007

5342:10.1017/CBO9781139058452.002

2683:Link with information theory

5265:Latent Dirichlet allocation

5260:Kullback–Leibler divergence

3453:The last step is to expand

6071:

5999:Anatomy of a search engine

5774:10.1109/wecwis.2001.933900

5334:Mining of Massive Datasets

3216:{\displaystyle {\cal {T}}}

3192:{\displaystyle {\cal {D}}}

1542:inverse document frequency

1232:inverse document frequency

1139:Inverse document frequency

273:inverse document frequency

5861:Communications of the ACM

5669:10.1109/ICCV.2003.1238663

5548:10.1108/00220410410560582

5383:10.1007/s00799-015-0156-0

2677:probability distributions

2025:double normalization-idf

6029:Term-frequency explained

5829:; McGill, M. J. (1986).

5536:Journal of Documentation

5417:Journal of Documentation

5270:Latent semantic analysis

518:double normalization 0.5

5961:10.1145/1361684.1361686

2365:justifications for it.

1715:{\displaystyle N={|D|}}

358:{\displaystyle f_{t,d}}

81:It was often used as a

5901:; Buckley, C. (1988).


3175:In terms of notation,

3166:

2859:

2839:

2809:

2720:

2719:{\displaystyle p(d,t)}

2655:

2470:

2332:

2214:

2141:log normalization-idf

2131:

2015:

1938:

1923:

1863:

1837:

1791:

1771:

1716:

1678:

1652:

1546:logarithmically scaled

1529:

1468:

1365:

1297:

1213:

1129:

977:logarithmically scaled

924:the raw count itself:

877:

731:

625:double normalization K

615:

508:

451:

359:

320:

129:

5626:10.1145/280765.280786

5214:

4969:

4719:

4608:

4507:

4401:

4295:

4184:

4073:

3981:

3774:

3475:

3473:{\displaystyle p_{t}}

3445:

3218:

3194:

3167:

2860:

2840:

2810:

2721:

2656:

2471:

2363:information theoretic


319:{\displaystyle {0,1}}

32:information retrieval

5620:. pp. 116–123.


3227:can be expressed as

3203:

3179:

2872:

2849:

2829:

2733:

2695:

2486:

2391:

2349:Justification of idf


108:One of the simplest

3843:

3796:

2823:conditional entropy

1948:

1869:and denominator to

1862:{\displaystyle 1+N}

1145:

910:occurs in document

284:

135:

62:in a collection or

6055:Vector space model

5873:10.1145/182.358466

5295:Vector space model

5275:Mutual information


3225:mutual information

3213:

3189:

3162:

2924:

2855:

2835:

2805:

2716:

2689:information theory

2651:

2649:

2466:

2355:Karen Spärck Jones


121:Karen Spärck Jones

76:bag-of-words model

70:of words, without

6050:Ranking functions

5867:(11): 1022–1036.

5848:978-0-07-054484-0

5783:978-0-7695-1224-2

5758:. pp. 2–11.

5678:978-0-7695-1950-0

5635:978-0-89791-983-8

5498:978-0-511-80907-1

5351:978-1-139-05845-2

5336:. pp. 1–17.

5224:base 10 logarithm

4702:

4595:

4494:

4167:

4060:

3968:

3886:

3885:

3839:

3838:

3787:Example of tf–idf

3704:

3702:

3654:

3592:

3519:

3397:

3316:

3090:

3023:

2915:

2858:{\displaystyle t}

2838:{\displaystyle D}

2803:

2675:for the required

2645:

2583:

2461:

2223:

2222:

2208:

2125:

2094:

2067:

2009:

1952:weighting scheme

1790:{\displaystyle t}

1677:{\displaystyle N}

1646:

1626:

1538:

1537:

1523:

1458:

1397:

1349:

1291:

1262:

1149:weighting scheme

1123:

871:

826:

740:

739:

725:

674:

609:

558:

461:log normalization

402:

288:weighting scheme

248:

247:

110:ranking functions


6013:TfidfTransformer


5729:. Archived from


5439:10.1108/eb026526

5432:

5413:Spärck Jones, K.


2669:self-information


2380:contains a term


973:and 0 otherwise;

972:

968:

964:

947:

919:

913:

909:

901:

882:

880:

879:

874:

872:

870:

869:

868:

867:

860:

845:

838:

824:

823:

808:

788:

770:

765:within document

764:

758:

747:Term frequency,


83:weighting factor


5483:. p. 100.

5476:

5471:

5470:

5466:

5458:

5454:

5430:10.1.1.115.8343


1797:appears (i.e.,

1779:

1778:

1725:

1724:

1686:

1685:

1666:

1665:

1625: and


953:"frequencies":


369:term frequency

5916:(5): 513–523.

5765:10.1.1.16.7986

5542:(5): 503–520.

5377:(4): 305–338.

5255:Word embedding

743:Term frequency

269:term frequency

95:search engines

5736:on 2020-09-22

5700:Seki, Yohei.

5532:Robertson, S.

5327:"Data Mining"

2374:probabilistic

989:) = log (1 +

250:We see that "

101:given a user

91:user modeling

54:), short for

6017:scikit-learn

5738:. Retrieved

5731:the original

5589:(1): 45–65.

5423:(1): 11–21.

5230:Beyond terms

2673:event spaces

1152:idf weight (

6006:as used in

5970:10397/10130

5839:McGraw-Hill

5814:IConference

5280:Noun phrase

5239:Derivatives

5222:(using the

3850:Term Count

3842:Document 1

3803:Term Count

3795:Document 2

979:frequency:

116:Motivations

87:text mining

6039:Categories

5899:Salton, G.

5857:Salton, G.

5740:2017-01-29

5312:References

5300:Word count

5285:Okapi BM25

2370:Zipf's law

1960:count-idf

969:occurs in

291:tf weight

262:Definition

72:word order

5931:1813/6721

5891:207180535

5882:1813/6351

5827:Salton, G

5760:CiteSeerX

5560:See also

5425:CiteSeerX

5399:207035184

5391:1432-5012

5204:≈

5198:×

5129:×

4953:×

4884:×

4727:Finally,

4688:

4598:≈

4385:×

4279:×

4153:

4063:≈

3744:⋅

3706:∑

3657:⋅

3632:⋅

3594:∑

3567:⋅

3554:⋅

3521:∑

3418:⋅

3399:∑

3359:−

3337:⋅

3318:∑

3283:−

3147:

3118:−

3099:

3061:∈

3049:∈

3032:

3009:∈

2997:∈

2977:

2971:−

2947:

2917:∑

2913:−

2789:∈

2777:∈

2726:is that:

2631:∈

2619:∈

2599:

2554:

2518:

2512:−

2444:∈

2432:∈

2359:heuristic

2343:frequency

2300:⋅

2191:

2185:⋅

2163:

2108:

2102:⋅

1992:

1986:⋅

1906:∈

1894:∈

1828:≠

1754:∈

1742:∈

1632:∈

1618:∈

1592:

1500:−

1491:

1414:∈

1388:

1320:

1274:

1268:−

1245:

1196:∈

1184:∈

1114:∈

1054:⋅

904:raw count

840:∈

828:∑

691:∈

647:−

575:∈

537:⋅

474:

416:∈

404:∑

330:raw count

172:Falstaff

99:relevance

5979:18303048

5955:(3): 1.

5687:14457153

5603:45793141

5290:PageRank

5248:See also

5177:″

5151:″

5107:″

5081:″

5034:″

5008:″

4932:″

4906:″

4862:″

4836:″

4789:″

4763:″

4667:″

4641:″

4563:″

4537:″

4462:″

4436:″

4351:″

4334:″

4245:″

4228:″

4132:″

4115:″

4083:accounts

4028:″

4011:″

3936:″

3919:″

3832:example

3824:another

1434:′

1410:′

1110:′

1091:′

858:′

836:′

712:′

687:′

596:′

571:′

434:′

412:′

256:Falstaff

68:multiset

60:document

6025:tf–idf.

5940:7725217

5792:1049263

5644:3526393

5447:2996187

3879:sample