The output of the network was an artifact-free heat map of the target's axial and lateral position. The network had a mean error of less than 30 microns when localizing targets below 40 mm and a mean error of 1.06 mm when localizing targets between 40 mm and 60 mm. With a slight modification to the network, the model was able to accommodate multi-target localization. A validation experiment was performed in which pencil lead was submerged in an intralipid solution at a depth of 32 mm. The network was able to localize the lead's position when the solution had a reduced scattering coefficient of 0, 5, 10, and 15 cm⁻¹. The results of the network show improvements over standard delay-and-sum or frequency-domain beamforming algorithms, and Johnstonbaugh proposes that this technology could be used for

The typical network architectures used to remove these sparse sampling artifacts are U-net and Fully Dense (FD) U-net. Both architectures contain a compression phase and a decompression phase. The compression phase learns to compress the image to a latent representation that lacks the imaging artifacts and other details. The decompression phase then combines this representation with information passed by the residual connections in order to add back image details without adding in the details associated with the artifacts. FD U-net modifies the original U-net architecture by including dense blocks that allow layers to utilize information learned by previous layers within the dense block. Another proposed technique uses a simple CNN-based architecture to remove artifacts and improve the k-Wave image reconstruction.
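The dense-block idea can be illustrated with a minimal sketch. It is not the FD U-net implementation: `dense_block` and `toy_layer` are hypothetical names, and `toy_layer` is only a stand-in for a convolution followed by a ReLU. The point shown is the connectivity, in which each layer reads the concatenation of everything produced before it.

```python
def dense_block(features, layers):
    """Run a dense block: each layer sees all earlier features concatenated."""
    collected = list(features)  # running concatenation of all features so far
    for layer in layers:
        new_features = layer(collected)  # layer reads everything learned so far
        collected = collected + list(new_features)
    return collected


def toy_layer(inputs):
    """Hypothetical stand-in for convolution + ReLU: two rectified summaries."""
    total = sum(inputs)
    return [max(0.0, total), max(0.0, -total)]


out = dense_block([1.0, -3.0], [toy_layer, toy_layer])
print(len(out))  # 2 input features + 2 layers x 2 new features each
```

Because the output keeps the original inputs alongside every layer's additions, later layers can reuse earlier features rather than relearn them.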

can be up to two orders of magnitude lower than those of conventional time-domain systems. To overcome the inherent SNR limitation of frequency-domain PAM, a U-Net neural network has been utilized to augment the generated images without the need for excessive averaging or the application of high optical power on the sample. In this context, the accessibility of PAM is improved, as the system's cost is dramatically reduced while sufficiently high image quality is retained for demanding biological observations.

Photoacoustic imaging is based on the photoacoustic effect, in which optical absorption causes a rise in temperature, which in turn causes a rise in pressure via thermo-elastic expansion. This pressure rise propagates through the tissue and is sensed by ultrasonic transducers. Because the optical absorption, the rise in temperature, and the rise in pressure are proportional to one another, the ultrasound pressure wave signal can be used to quantify the original optical energy deposition within the tissue.
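The proportionality described above is commonly written as follows. The symbols are not defined in this article and are introduced here as assumptions: $p_0$ is the initial pressure rise, $\Gamma$ the dimensionless Grüneisen parameter, $\mu_a$ the optical absorption coefficient, and $F$ the local optical fluence, with all absorbed energy assumed to be converted to heat.

```latex
p_0 = \Gamma \, \mu_a \, F
```

Under these assumptions, doubling either the absorption coefficient or the fluence doubles the initial pressure, which is why the detected signal can be related back to the deposited optical energy.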

can cause artifacts and limit the axial resolution of the imaging system. The primary deep neural network architectures used to remove limited-bandwidth artifacts have been WGAN-GP and modified U-net. Before deep learning, the typical method to remove artifacts and denoise limited-bandwidth reconstructions was Wiener filtering, which helps to expand the PA signal's frequency spectrum. The primary advantage of the deep learning method over Wiener filtering is that Wiener filtering requires a high initial

implementation, the applications of deep learning in PACT have branched out primarily into removing artifacts from acoustic reflections, sparse sampling, limited view, and limited bandwidth. There has also been some recent work in PACT toward using deep learning for wavefront localization. Fusion-based networks have also been used to combine information from two different reconstructions and improve the resulting image.

17:

51:

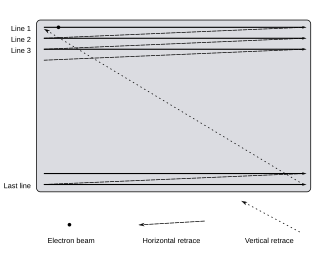

tissue. PAM on the other hand uses focused ultrasound detection combined with weakly-focused optical excitation (acoustic resolution PAM or AR-PAM) or tightly-focused optical excitation (optical resolution PAM or OR-PAM). PAM typically captures images point-by-point via a mechanical raster scanning pattern. At each scanned point, the acoustic time-of-flight provides axial resolution while the acoustic focusing yields lateral resolution.
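As a minimal sketch of how time-of-flight maps to axial position, the arrival time of the photoacoustic wave can be converted to depth. The function name and the default speed of sound of 1540 m/s (a typical value for soft tissue) are assumptions for illustration; the travel is treated as one-way because the wave originates at the absorber.

```python
def depth_from_time_of_flight(arrival_time_s, speed_of_sound_m_s=1540.0):
    """Return the one-way distance (m) from the PA source to the transducer."""
    if arrival_time_s < 0:
        raise ValueError("arrival time must be non-negative")
    return speed_of_sound_m_s * arrival_time_s


depth_m = depth_from_time_of_flight(10e-6)  # signal arriving after 10 microseconds
print(f"{depth_m * 1e3:.1f} mm")  # prints "15.4 mm"
```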

pixel-wise interpolation, the time-of-flight for each pixel was calculated using the wave propagation equation. Next, a reconstruction grid was created from pressure measurements calculated from the pixels' time-of-flight. Using the reconstruction grid as an input, the FD U-net was able to create artifact-free reconstructed images. This pixel-wise interpolation method was faster and achieved better

(PSNR) and structural similarity index measures (SSIM) than artifact-free images created when the time-reversal images served as the input to the FD U-net. This pixel-wise interpolation method was significantly faster and had PSNR and SSIM comparable to those of the images reconstructed from the computationally intensive

is used. A novel fusion-based architecture was proposed to combine the output of two different reconstructions and give better image quality than either reconstruction alone. It includes weight sharing and fusion of characteristics to achieve the desired improvement in output image quality.

Frequency-domain PAM constitutes a powerful, cost-efficient imaging method that uses intensity-modulated laser beams emitted by continuous-wave sources to excite single-frequency PA signals. Nevertheless, this imaging approach generally provides smaller signal-to-noise ratios (SNR), which

used a simple three-layer convolutional neural network, with each layer represented by a weight matrix and a bias vector, in order to remove the PAM motion artifacts. Two of the convolutional layers contain ReLU activation functions, while the last has no activation function. Using this architecture,

Fusion-based architectures utilize complementary information to improve photoacoustic image reconstruction. Different reconstructions promote different characteristics in the output, so the image quality and characteristics vary if a different reconstruction technique

When the density of uniform tomographic view angles is below what is prescribed by the Nyquist-Shannon sampling theorem, the imaging system is said to be performing sparse sampling. Sparse sampling typically occurs as a way of keeping production costs low and improving image acquisition speed.


knowledge from network training to remove artifacts. In the deep learning methods that seek to remove these sparse sampling, limited-bandwidth, and limited-view artifacts, the typical workflow involves first performing the ill-posed reconstruction technique to transform the pre-beamformed data into a

High-energy lasers allow light to reach deep into tissue, making deep structures visible in PA images. High-energy lasers provide a greater penetration depth than low-energy lasers: around 8 mm greater for lasers with a wavelength between 690 and 900 nm. The


The limited-bandwidth problem occurs as a result of the ultrasound transducer array's limited detection frequency bandwidth. This transducer array acts like a band-pass filter in the frequency domain, attenuating both high and low frequencies within the photoacoustic signal. This limited-bandwidth

was able to apply an FD U-net to remove artifacts from simulated limited-view reconstructed PA images. PA images reconstructed with the time-reversal process and PA data collected with either 16, 32, or 64 sensors served as the input to the network and the ground truth images served as the desired


of the reconstructed image. Limited-view, similar to sparse sampling, makes the initial reconstruction algorithm ill-posed. Prior to deep learning, the limited-view problem was addressed with complex hardware such as acoustic deflectors and full ring-shaped transducer arrays, as well as solutions

methods, the sample is imaged at multiple view angles, which are then used to perform an inverse reconstruction algorithm based on the detection geometry (typically through universal backprojection, modified delay-and-sum, or time reversal) to elicit the initial pressure distribution within the


Traditional photoacoustic beamforming techniques modeled photoacoustic wave propagation by using detector array geometry and the time-of-flight to account for differences in the PA signal arrival time. However, this technique failed to account for reverberant acoustic signals caused by acoustic


proposed to use a pixel-wise interpolation as an input to the network instead of a reconstructed image. Using a pixel-wise interpolation would remove the need to produce an initial image that may remove small details or make details unrecoverable by obscuring them with artifacts. To create the


convolution layer and differentiable spatial-to-numerical transform layer were also used within the architecture. Simulated PA wavefronts served as the input for training the model. To create the wavefronts, the forward simulation of light propagation was done with the NIRFast toolbox and the


in which a deep neural network was trained to learn spatial impulse responses and locate photoacoustic point sources. The resulting mean axial and lateral point location errors on 2,412 of their randomly selected test images were 0.28 mm and 0.37 mm respectively. After this initial


was able to localize the source of photoacoustic wavefronts with a deep neural network. The network used was an encoder-decoder style convolutional neural network. The encoder-decoder network was made of residual convolution, upsampling, and high field-of-view convolution modules. A Nyquist


sheep brain created by a low energy laser of 20 mJ as the input to the network and images of the same sheep brain created by a high energy laser of 100 mJ, 20 mJ above the MPE, as the desired output. A perceptually sensitive loss function was used to train the network to increase the low


like compressed sensing, weighted factor, and iterative filtered backprojection. The result of this ill-posed reconstruction is imaging artifacts that can be removed by CNNs. The deep learning algorithms used to remove limited-view artifacts include U-net and FD U-net, as well as

output. The network was able to remove artifacts created in the time-reversal process from synthetic, mouse brain, fundus, and lung vasculature phantoms. This process was similar to the work done by Davoudi for clearing artifacts from sparse and limited-view images

In PACT, tomographic reconstruction is performed, in which the projections from multiple solid angles are combined to form an image. When reconstruction methods like filtered backprojection or time reversal are ill-posed inverse problems due to sampling under the


Photoacoustic microscopy differs from other forms of photoacoustic tomography in that it uses focused ultrasound detection to acquire images pixel-by-pixel. PAM images are acquired as time-resolved volumetric data that is typically mapped to a 2-D projection via a


utilized a full VGG-16 architecture to locate point sources and remove reflection artifacts within raw photoacoustic channel data (in the presence of multiple sources and channel noise). This utilization of deep learning trained on simulated data produced in the


Waibel, Dominik; Gröhl, Janek; Isensee, Fabian; Kirchner, Thomas; Maier-Hein, Klaus; Maier-Hein, Lena (2018-02-19). "Reconstruction of initial pressure from limited view photoacoustic images using deep learning". In Wang, Lihong V; Oraevsky, Alexander A (eds.).

kernel sizes of 3 × 3, 4 × 4, and 5 × 5 were tested, with the largest kernel size of 5 × 5 yielding the best results. After training, the motion correction model was tested and performed well on both simulation and


Awasthi, Navchetan; Pardasani, Rohit; Sandeep Kumar Kalva; Pramanik, Manojit; Yalavarthy, Phaneendra K. (2020). "Sinogram super-resolution and denoising convolutional neural network (SRCN) for limited data photoacoustic tomography".


Haltmeier, Markus; Sandbichler, Michael; Berer, Thomas; Bauer-Marschallinger, Johannes; Burgholzer, Peter; Nguyen, Linh (June 2018). "A sparsification and reconstruction strategy for compressed sensing photoacoustic tomography".

, a convolutional neural network (similar to a simple VGG-16-style architecture) was used that took pre-beamformed photoacoustic data as input and output a classification result specifying the 2-D point source location.


Liu, Xueyan; Peng, Dong; Ma, Xibo; Guo, Wei; Liu, Zhenyu; Han, Dong; Yang, Xin; Tian, Jie (2013-05-14). "Limited-view photoacoustic imaging based on an iterative adaptive weighted filtered backprojection approach".

was able to increase the penetration depth of low-energy lasers that meet the MPE standard by applying a U-net architecture to the images created by a low-energy laser. The network was trained with images of an

in PA images created by the low-energy laser. The trained network was able to increase the peak-to-background ratio by 4.19 dB and penetration depth by 5.88% for images created by the low-energy laser of an

and maximum amplitude projection (MAP). The first application of deep learning to PAM took the form of a motion-correction algorithm. This procedure was proposed to correct the PAM artifacts that occur when an


has set a maximal permissible exposure (MPE) for different biological tissues. Lasers with specifications above the MPE can cause mechanical or thermal damage to the tissue they are imaging. Manwar

When a region of partial solid angles is not captured, generally due to geometric limitations, the image acquisition is said to have limited view. As illustrated by the experiments of Davoudi


Reiter, Austin; Bell, Muyinatu A Lediju (2017-03-03). Oraevsky, Alexander A; Wang, Lihong V (eds.). "A machine learning approach to identifying point source locations in photoacoustic data".

's sampling requirement or with limited bandwidth or limited view, the resulting reconstruction contains image artifacts. Traditionally, these artifacts were removed with slow iterative methods like


Duarte, Marco F.; Davenport, Mark A.; Takhar, Dharmpal; Laska, Jason N.; Sun, Ting; Kelly, Kevin F.; Baraniuk, Richard G. (March 2008). "Single-pixel imaging via compressive sampling".


Agranovsky, Mark; Kuchment, Peter (2007-08-28). "Uniqueness of reconstruction and an inversion procedure for thermoacoustic and photoacoustic tomography with variable sound speed".


Ma, Songbo; Yang, Sihua; Guo, Hua (2009-12-15). "Limited-view photoacoustic imaging based on linear-array detection and filtered mean-backprojection-iterative reconstruction".


Tserevelakis, George J.; Barmparis, Georgios D.; Kokosalis, Nikolaos; Giosa, Eirini Smaro; Pavlopoulos, Anastasios; Tsironis, Giorgos P.; Zacharakis, Giannis (2023-05-15).

Johnstonbaugh, Kerrick; Agrawal, Sumit; Durairaj, Deepit Abhishek; Fadden, Christopher; Dangi, Ajay; Karri, Sri Phani Krishna; Kothapalli, Sri-Rajasekhar (December 2020).

Awasthi, Navchetan (28 February 2020). "Deep Neural Network Based Sinogram Super-resolution and Bandwidth Enhancement for Limited-data Photoacoustic Tomography".

, while the forward simulation of sound propagation was done with the k-Wave toolbox. The simulated wavefronts were subjected to different scattering media and

Guan, Steven; Khan, Amir A.; Sikdar, Siddhartha; Chitnis, Parag V. (February 2020). "Fully Dense UNet for 2-D Sparse Photoacoustic Tomography Artifact Removal".

(GANs) and volumetric versions of U-net. One GAN implementation of note improved upon U-net by using U-net as a generator and VGG as a discriminator, with the

Sandbichler, M.; Krahmer, F.; Berer, T.; Burgholzer, P.; Haltmeier, M. (January 2015). "A Novel Compressed Sensing Scheme for Photoacoustic Tomography".

Gutta, Sreedevi; Kadimesetty, Venkata Suryanarayana; Kalva, Sandeep Kumar; Pramanik, Manojit; Ganapathy, Sriram; Yalavarthy, Phaneendra K. (2017-11-02).


Hauptmann, Andreas; Lucka, Felix; Betcke, Marta; Huynh, Nam; Adler, Jonas; Cox, Ben; Beard, Paul; Ourselin, Sebastien; Arridge, Simon (June 2018).

"Experimental validation of tangential resolution improvement in photoacoustic tomography using modified delay-and-sum reconstruction algorithm"


Bossy, Emmanuel; Daoudi, Khalid; Boccara, Albert-Claude; Tanter, Mickael; Aubry, Jean-François; Montaldo, Gabriel; Fink, Mathias (2006-10-30).

"Feature article: A generative adversarial network for artifact removal in photoacoustic computed tomography with a linear-array transducer"


reflection, resulting in acoustic reflection artifacts that corrupt the true photoacoustic point source location information. In Reiter


Treeby, Bradley E; Zhang, Edward Z; Cox, B T (2010-09-24). "Photoacoustic tomography in absorbing acoustic media using time reversal".


The two primary motion artifact types addressed by deep learning in PAM are displacements in the vertical and tilted directions. Chen

Ronneberger, Olaf; Fischer, Philipp; Brox, Thomas (2015), "U-Net: Convolutional Networks for Biomedical Image Segmentation",


Davoudi, Neda; Deán-Ben, Xosé Luís; Razansky, Daniel (2019-09-16). "Deep learning optoacoustic tomography with sparse data".


Noisy input, denoised output through U-Net and averaged ground truth frequency-domain PA amplitude images of two label-free


Liang, Jinyang; Zhou, Yong; Winkler, Amy W.; Wang, Lidai; Maslov, Konstantin I.; Li, Chiye; Wang, Lihong V. (2013-07-22).


Xu, Minghua; Wang, Lihong V. (2005-01-19). "Universal back-projection algorithm for photoacoustic computed tomography".

experiments with a homogeneous medium, but Guan posits that the pixel-wise method can be used for real-time PAT rendering.
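Since reconstructions in this work are compared by peak signal-to-noise ratio, a minimal sketch of that metric may help. It is not taken from the cited study: the function name, the flat list representation of an image, and the default peak value of 1.0 are assumptions for illustration.

```python
import math


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if not reference or len(reference) != len(estimate):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error between ground truth and reconstruction.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


value_db = psnr([0.0, 0.0], [0.1, 0.1])  # uniform error of 0.1 on a 0..1 scale
print(round(value_db, 2))
```

A higher value indicates a reconstruction closer to the ground truth; SSIM, also reported in the study, additionally accounts for local structure and is not sketched here.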

Simonyan, Karen; Zisserman, Andrew (2015-04-10). "Very Deep Convolutional Networks for Large-Scale Image Recognition".

"PA-Fuse: deep supervised approach for the fusion of photoacoustic images with distinct reconstruction characteristics"


Xia, Jun; Chatni, Muhammad R.; Maslov, Konstantin; Guo, Zijian; Wang, Kun; Anastasio, Mark; Wang, Lihong V. (2012).


To improve the speed of reconstruction and to allow for the FD U-net to use more information from the sensor, Guan

(PAM). PACT utilizes wide-field optical excitation and an array of unfocused ultrasound transducers. Similar to

"Full image reconstruction in frequency-domain photoacoustic microscopy by means of a low-cost I/Q demodulator"


Provost, J.; Lesage, F. (April 2009). "The Application of Compressed Sensing for Photo-Acoustic Tomography".

, Lecture Notes in Computer Science, vol. 9351, Springer International Publishing, pp. 234–241,


Manwar, Rayyan; Li, Xin; Mahmoodkalayeh, Sadreddin; Asano, Eishi; Zhu, Dongxiao; Avanaki, Kamran (2020).


(CNN) is trained to remove the artifacts, in order to produce an artifact-free representation of the


sheep brain. Manwar claims that this technology could be beneficial in neonatal brain imaging where


Tserevelakis, George J.; Mavrakis, Kostas G.; Kakakios, Nikitas; Zacharakis, Giannis (2021-10-01).


Langer, Gregor; Buchegger, Bianca; Jacak, Jaroslaw; Klar, Thomas A.; Berer, Thomas (2016-07-01).

"Deep-learning-based motion-correction algorithm in optical resolution photoacoustic microscopy"

"Random-access optical-resolution photoacoustic microscopy using a digital micromirror device"


Removing acoustic reflection artifacts (in the presence of multiple sources and channel noise)


model moves during scanning. This movement creates the appearance of vessel discontinuities.
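The maximum amplitude projection used to turn time-resolved PAM data into a 2-D image can be sketched as follows. This is a simplified illustration under stated assumptions: `volume[y][x]` is taken to be the list of time samples recorded at one scan position, the function name is hypothetical, and the absolute value of each sample stands in for the Hilbert-transform envelope that would normally be computed first.

```python
def maximum_amplitude_projection(volume):
    """Collapse each time-resolved A-line to its largest absolute amplitude."""
    return [
        [max(abs(sample) for sample in a_line) for a_line in row]
        for row in volume
    ]


# A 2 x 2 scan with three time samples per position (made-up numbers).
volume = [
    [[0.1, -0.9, 0.3], [0.0, 0.2, -0.1]],
    [[0.5, 0.4, -0.6], [-1.0, 0.0, 0.0]],
]
image = maximum_amplitude_projection(volume)
print(image)  # [[0.9, 0.2], [0.6, 1.0]]
```

Because each pixel depends only on the A-line acquired at that scan position, any shift of the sample between scan lines appears directly in the projected image, which is consistent with the vessel discontinuities described above.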

"Whole-body ring-shaped confocal photoacoustic computed tomography of small animals in vivo"

(SNR), which is not always possible, while the deep learning model has no such restriction.
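Why Wiener filtering depends on a high SNR can be seen from a minimal sketch of the per-frequency Wiener deconvolution gain. The function name and the example numbers are assumptions for illustration: `transfer` is the transducer response at one frequency and `snr` is the signal-to-noise power ratio at that frequency.

```python
def wiener_gain(transfer, snr):
    """Wiener deconvolution gain for one frequency bin."""
    if snr <= 0:
        raise ValueError("snr must be positive")
    # conj(H) / (|H|^2 + 1/SNR): approaches 1/H when the SNR is high.
    return transfer.conjugate() / (abs(transfer) ** 2 + 1.0 / snr)


# A frequency that the transducer attenuates to 10% of its amplitude.
attenuated = 0.1 + 0j
high_snr_gain = wiener_gain(attenuated, 100.0)  # boosts the band by about 5x
low_snr_gain = wiener_gain(attenuated, 1.0)     # leaves the band suppressed
print(abs(high_snr_gain), abs(low_snr_gain))
```

With a high SNR the filter amplifies the attenuated band and so widens the usable spectrum; with a low SNR the noise term dominates the denominator and the band stays suppressed.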


Pixel-wise interpolation and deep learning for faster reconstruction of limited-view signals

"Photoacoustic Source Detection and Reflection Artifact Removal Enabled by Deep Learning"


2-D representation of the initial pressure distribution that contains artifacts. Then, a

"A Deep Learning approach to Photoacoustic Wavefront Localization in Deep-Tissue Medium"

"Limited View and Sparse Photoacoustic Tomography for Neuroimaging with Deep Learning"


Published in: IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control

. Vol. 10494. International Society for Optics and Photonics. pp. 104942S.

, but the advent of deep learning approaches has opened a new avenue that utilizes

embryos. Yellow arrows indicate the cell membranes. Scale bars are equal to 100 μm.


Fusion of information for improving photoacoustic Images with deep neural networks

, limited-view corruptions can be directly observed as missing information in the

"Model-Based Learning for Accelerated, Limited-View 3-D Photoacoustic Tomography"


Xu, Yuan; Wang, Lihong V.; Ambartsoumian, Gaik; Kuchment, Peter (2004-03-11).

"Improving limited-view photoacoustic tomography with an acoustic reflector"


Vu, Tri; Li, Mucong; Humayun, Hannah; Zhou, Yuan; Yao, Junjie (2020-03-25).


Guan, Steven; Khan, Amir A.; Sikdar, Siddhartha; Chitnis, Parag V. (2020).


Huang, Bin; Xia, Jun; Maslov, Konstantin; Wang, Lihong V. (2013-11-27).


Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015


IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control

"Deep neural network-based bandwidth enhancement of photoacoustic data"


Antholzer, Stephan; Haltmeier, Markus; Schwab, Johannes (2019-07-03).


Allman, Derek; Reiter, Austin; Bell, Muyinatu A. Lediju (June 2018).

"A Practical Guide to Photoacoustic Tomography in the Life Sciences"

. The pixel-wise method proposed in this study was only proven for

"Deep learning-assisted frequency-domain photoacoustic microscopy"

"Deep learning protocol for improved photoacoustic brain imaging"

, and then later reaffirmed their results on experimental data.


Photoacoustic imaging has applications of deep learning in both

"Deep learning for photoacoustic tomography from sparse data"

"Multiscale photoacoustic microscopy and computed tomography"


The first application of deep learning in PACT was by Reiter

"Frequency domain photoacoustic and fluorescence microscopy"


Limited-bandwidth artifact removal with deep neural networks

"Weight factors for limited angle photoacoustic tomography"

"Reconstructions in limited-view thermoacoustic tomography"

. International Society for Optics and Photonics: 100643J.


Using deep learning to locate photoacoustic point sources


Kalva, Sandeep Kumar; Pramanik, Manojit (August 2016).


Deep learning to improve penetration depth of PA images


Using deep learning to remove sparse sampling artifacts


and gradient penalty to stabilize training (WGAN-GP).


Visual Computing for Industry, Biomedicine, and Art

Paltauf, G; Nuster, R; Burgholzer, P (2009-05-08).

Chen, Xingxing; Qi, Weizhi; Xi, Lei (2019-10-29).

Removing limited-view artifacts with deep learning

cell detection, and real-time vascular surgeries.

Photons Plus Ultrasound: Imaging and Sensing 2018

IEEE Journal of Biomedical and Health Informatics

Photons Plus Ultrasound: Imaging and Sensing 2017

The Journal of the Acoustical Society of America

is possible to look for any lesions or injury.

Deep learning to remove motion artifacts in PAM

Depiction of mechanical raster scanning method


Inverse Problems in Science and Engineering

Wang, Lihong V.; Yao, Junjie (2016-07-28).

Deep learning-assisted frequency-domain PAM

Deep learning for PA wavefront localization


(PA) with the rapidly evolving field of

combines the hybrid imaging modality of

Published in: Biomedical Optics Express

"Time reversal of photoacoustic waves"

Deep learning in photoacoustic imaging

American National Standards Institute

Applications of deep learning in PACT

Depiction of photoacoustic tomography

IEEE Transactions on Medical Imaging

IEEE Transactions on Medical Imaging

IEEE Transactions on Medical Imaging

Applications of deep learning in PAM

SIAM Journal on Applied Mathematics

Awasthi, Navchetan (3 April 2019).

"Biomedical photoacoustic imaging"

Experimental Biology and Medicine

photoacoustic computed tomography

Physics in Medicine and Biology

IEEE Signal Processing Magazine

generative adversarial networks

initial pressure distribution.

Building on the work of Reiter

10.1088/0266-5611/26/11/115003

Wang, Lihong V. (2009-08-29).

10.1080/17415977.2018.1518444

Ill-posed PACT reconstruction

light-diffusion approximation

Computing in medical imaging

Journal of Biomedical Optics

Journal of Biomedical Optics

10.1007/978-3-319-24574-4_28

Journal of Biomedical Optics

Journal of Biomedical Optics

convolutional neural network

total variation minimization

10.1088/0031-9155/54/11/002

Nature Machine Intelligence

peak signal to noise ratios


10.1038/s41598-020-65235-2

Journal of Applied Physics

10.1117/1.jbo.18.11.110505

10.1088/0266-5611/23/5/016

10.1109/tuffc.2020.2964698

10.1117/1.jbo.22.11.116001

10.1109/TUFFC.2020.2977210

10.1103/PhysRevE.71.016706

Beard, Paul (2011-08-06).

Biomedical Optics Express

10.1186/s42492-019-0022-9

10.1117/1.jbo.17.5.050506

10.1038/s42256-019-0095-3

10.1109/jbhi.2019.2912935

10.1117/1.JBO.21.8.086011

optical wavefront shaping

other computed tomography

Photoacoustic microscopy

10.1109/tmi.2008.2007825

10.1177/1535370220914285

10.1109/TMI.2018.2820382

10.1109/TMI.2018.2829662

10.1038/nphoton.2009.157

Photoacoustic microscopy

photoacoustic microscopy

Journal of Biophotonics

(12): 123104–123104–6.

10.1109/msp.2007.914730

Applied Physics Letters

transfontanelle imaging

10.1002/jbio.202000212

10.1098/rsfs.2011.0028

Photoacoustic imaging

10.1364/BOE.10.002227

Photoacoustic imaging

signal-to-noise ratio

signal-to-noise ratio

photoacoustic imaging

Photoacoustic effect

10.1364/BOE.7.002692

10.1364/ao.52.003477

10.1364/ol.38.002683

Photoacoustic effect

2023OptL...48.2720T

2021OptL...46.4718T

2020NatSR..10.8510G

2009JAP...106l3104M

2013ApOpt..52.3477L

2009PMB....54.3303P

2008ISPM...25...83D

2013OptL...38.2683L

2018ASAJ..143.3838H

2015arXiv150104305S

2012JBO....17e0506X

2013JBO....18k0505H

2004MedPh..31..724X

2015arXiv150504597R

2007InvPr..23.2089A

2017JBO....22k6001G

2018SPIE10494E..2SW

2017SPIE10064E..3JR

2010InvPr..26k5003T

2006ApPhL..89r4108B

2016JBO....21h6011K

2005PhRvE..71a6706X

2009NaPho...3..503W

Parhyale hawaiensis

Scientific Reports

(10): e202000212.

10.1117/12.2288353

10.1117/12.2255098

10.1038/nmeth.3925

iterative approach

Wasserstein metric

10.1364/OL.486624

(10): 2720–2723.

10.1364/OL.435146

(19): 4718–4721.

10.1063/1.3273322

(11): 3303–3314.

10.1121/1.5042230

10.1137/141001408

10.1118/1.1644531

978-3-319-24573-7

(12): 2649–2659.

(12): 2660–2673.

10.1063/1.2382732

Physical Review E

Hilbert transform

(7): 2692–3302.

(6): 3838–3848.

(6): 2475–2494.

(5): 2089–2102.

Inverse Problems

1588:

1582:

1581:

1579:

1567:

1558:

1557:

1547:

1530:(5): 2227–2243.

1515:

1502:

1501:

1491:

1451:

1434:

1433:

1415:

1405:

1373:

1367:

1366:

1364:

1351:

1342:

1341:

1323:

1295:

1284:

1283:

1240:

1227:

1226:

1216:

1176:

1155:

1154:

1136:

1118:

1109:(6): 1382–1393.

1094:

1085:

1084:

1048:

1031:

1030:

996:

976:

965:

964:

954:

914:

903:

902:

892:

867:(6): 1464–1477.

852:

843:

842:

806:

795:

794:

784:

744:

738:

737:

698:Inverse Problems

693:

687:

686:

644:

635:

629:

628:

610:

600:

568:

562:

561:

551:

515:

509:

508:

498:

458:

452:

451:

441:

402:Nature Photonics

393:

183:frequency domain

356:

335:

318:

292:

256:

234:

200:

175:

166:

141:Nyquist-Shannon

136:

112:

83:

70:

57:

12:

11:

5:

374:

373:

372:

367:

362:

355:

352:

334:

331:

317:

314:

291:

288:

255:

252:

233:

230:

199:

196:

174:

171:

165:

162:

135:

132:

128:k-wave library

111:

108:

102:, circulating

96:Gaussian noise

85:Johnstonbaugh

82:

79:

69:

66:

56:

53:

13:

10:

9:

6:

4:

3:

2:

375:

371:

368:

366:

363:

361:

358:

357:

353:

351:

344:

339:

332:

330:

328:

323:

315:

313:

311:

306:

296:

289:

287:

285:

281:

276:

271:

266:

262:

253:

251:

247:

246:

242:

240:

231:

229:

227:

223:

219:

214:

210:

205:

197:

195:

193:

189:

184:

180:

172:

170:

163:

161:

159:

155:

150:

146:

142:

133:

131:

129:

126:

121:

117:

109:

107:

105:

101:

97:

93:

88:

80:

78:

76:

67:

65:

62:

54:

52:

49:

45:

41:

36:

34:

33:deep learning

30:

26:

18:

348:

342:

326:

321:

319:

309:

301:

279:

269:

264:

257:

248:

244:

243:

235:

225:

212:

208:

203:

201:

178:

176:

167:

158:ground truth

148:

137:

119:

115:

113:

86:

84:

74:

71:

60:

58:

37:

24:

23:

42:(PACT) and

226:in silico

118:, Allman

354:See also

149:a priori

104:melanoma

327:in vivo

310:in vivo

280:in vivo

270:ex vivo

329:data.

322:et al.

265:et al.

213:et al.

209:et al.

204:et al.

179:et al.

125:MATLAB

120:et al.

116:et al.

87:et al.

75:et al.

61:et al.

202:Guan
