2527:

determine the character value of that particular leaf. The process continues recursively until the last leaf node is reached; at that point, the

Huffman tree will thus be faithfully reconstructed. The overhead using such a method ranges from roughly 2 to 320 bytes (assuming an 8-bit alphabet). Many other techniques are possible as well. In any case, since the compressed data can include unused "trailing bits" the decompressor must be able to determine when to stop producing output. This can be accomplished by either transmitting the length of the decompressed data along with the compression model or by defining a special code symbol to signify the end of input (the latter method can adversely affect code length optimality, however).

2485:

particular byte value). Before this can take place, however, the

Huffman tree must be somehow reconstructed. In the simplest case, where character frequencies are fairly predictable, the tree can be preconstructed (and even statistically adjusted on each compression cycle) and thus reused every time, at the expense of at least some measure of compression efficiency. Otherwise, the information to reconstruct the tree must be sent a priori. A naive approach might be to prepend the frequency count of each character to the compression stream. Unfortunately, the overhead in such a case could amount to several kilobytes, so this method has little practical use. If the data is compressed using

2049:

5170:

5160:

2003:, the entropy is a measure of the smallest codeword length that is theoretically possible for the given alphabet with associated weights. In this example, the weighted average codeword length is 2.25 bits per symbol, only slightly larger than the calculated entropy of 2.205 bits per symbol. So not only is this code optimal in the sense that no other feasible code performs better, but it is very close to the theoretical limit established by Shannon.

2060:

3000:, where a 'dash' takes longer to send than a 'dot', and therefore the cost of a dash in transmission time is higher. The goal is still to minimize the weighted average codeword length, but it is no longer sufficient just to minimize the number of symbols used by the message. No algorithm is known to solve this in the same manner or with the same efficiency as conventional Huffman coding, though it has been solved by

2179:. A binary tree is generated from left to right taking the two least probable symbols and putting them together to form another equivalent symbol having a probability that equals the sum of the two symbols. The process is repeated until there is just one symbol. The tree can then be read backwards, from right to left, assigning different bits to different branches. The final Huffman code is:

370:

31:

467:

356:(sometimes called "prefix-free codes", that is, the bit string representing some particular symbol is never a prefix of the bit string representing any other symbol). Huffman coding is such a widespread method for creating prefix codes that the term "Huffman code" is widely used as a synonym for "prefix code" even when such a code is not produced by Huffman's algorithm.

2602:

approaches the entropy limit, i.e., optimal compression. However, blocking arbitrarily large groups of symbols is impractical, as the complexity of a

Huffman code is linear in the number of possibilities to be encoded, a number that is exponential in the size of a block. This limits the amount of blocking that is done in practice.

2598:. Prefix codes, and thus Huffman coding in particular, tend to have inefficiency on small alphabets, where probabilities often fall between these optimal (dyadic) points. The worst case for Huffman coding can happen when the probability of the most likely symbol far exceeds 2 = 0.5, making the upper limit of inefficiency unbounded.

3345:

If weights corresponding to the alphabetically ordered inputs are in numerical order, the

Huffman code has the same lengths as the optimal alphabetic code, which can be found from calculating these lengths, rendering Hu–Tucker coding unnecessary. The code resulting from numerically (re-)ordered input

2720:

involves calculating the probabilities dynamically based on recent actual frequencies in the sequence of source symbols, and changing the coding tree structure to match the updated probability estimates. It is used rarely in practice, since the cost of updating the tree makes it slower than optimized

2601:

There are two related approaches for getting around this particular inefficiency while still using

Huffman coding. Combining a fixed number of symbols together ("blocking") often increases (and never decreases) compression. As the size of the block approaches infinity, Huffman coding theoretically

2570:

Although both aforementioned methods can combine an arbitrary number of symbols for more efficient coding and generally adapt to the actual input statistics, arithmetic coding does so without significantly increasing its computational or algorithmic complexities (though the simplest version is slower

2526:

is the number of bits per symbol). Another method is to simply prepend the

Huffman tree, bit by bit, to the output stream. For example, assuming that the value of 0 represents a parent node and 1 a leaf node, whenever the latter is encountered the tree building routine simply reads the next 8 bits to

34:

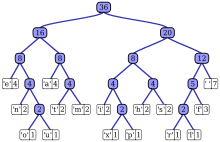

Huffman tree generated from the exact frequencies of the text "this is an example of a huffman tree". Encoding the sentence with this code requires 135 (or 147) bits, as opposed to 288 (or 180) bits if 36 characters of 8 (or 5) bits were used (This assumes that the code tree structure is known to the

3473:

and

Huffman coding produce equivalent results — achieving entropy — when every symbol has a probability of the form 1/2. In other circumstances, arithmetic coding can offer better compression than Huffman coding because — intuitively — its "code words" can have effectively

2629:

Many variations of

Huffman coding exist, some of which use a Huffman-like algorithm, and others of which find optimal prefix codes (while, for example, putting different restrictions on the output). Note that, in the latter case, the method need not be Huffman-like, and, indeed, need not even be

2342:

The process begins with the leaf nodes containing the probabilities of the symbol they represent. Then, the process takes the two nodes with smallest probability, and creates a new internal node having these two nodes as children. The weight of the new node is set to the sum of the weight of the

2484:

Generally speaking, the process of decompression is simply a matter of translating the stream of prefix codes to individual byte values, usually by traversing the

Huffman tree node by node as each bit is read from the input stream (reaching a leaf node necessarily terminates the search for that

2475:

It is generally beneficial to minimize the variance of codeword length. For example, a communication buffer receiving

Huffman-encoded data may need to be larger to deal with especially long symbols if the tree is especially unbalanced. To minimize variance, simply break ties between queues by

2054:

BED". In steps 2 to 6, the letters are sorted by increasing frequency, and the least frequent two at each step are combined and reinserted into the list, and a partial tree is constructed. The final tree in step 6 is traversed to generate the dictionary in step 7. Step 8 uses it to encode the

2575:

issues. Thus many technologies have historically avoided arithmetic coding in favor of Huffman and other prefix coding techniques. As of mid-2010, the most commonly used techniques for this alternative to Huffman coding have passed into the public domain as the early patents have expired.

3478:

only optimally matches a symbol of probability 1/2 and other probabilities are not represented optimally; whereas the code word length in arithmetic coding can be made to exactly match the true probability of the symbol. This difference is especially striking for small alphabet sizes.

1926:

2414:, the first one containing the initial weights (along with pointers to the associated leaves), and combined weights (along with pointers to the trees) being put in the back of the second queue. This assures that the lowest weight is always kept at the front of one of the two queues:

2571:

and more complex than Huffman coding). Such flexibility is especially useful when input probabilities are not precisely known or vary significantly within the stream. However, Huffman coding is usually faster and arithmetic coding was historically a subject of some concern over

2554:

Huffman's original algorithm is optimal for a symbol-by-symbol coding with a known input probability distribution, i.e., separately encoding unrelated symbols in such a data stream. However, it is not optimal when the symbol-by-symbol restriction is dropped, or when the

2228:

of the source is 1.74 bits/symbol. If this Huffman code is used to represent the signal, then the average length is lowered to 1.85 bits/symbol; it is still far from the theoretical limit because the probabilities of the symbols are different from negative powers of

1065:

2744:

enables one to use any kind of weights (costs, frequencies, pairs of weights, non-numerical weights) and one of many combining methods (not just addition). Such algorithms can solve other minimization problems, such as minimizing

772:

1714:

328:

on the problem of finding the most efficient binary code. Huffman, unable to prove any codes were the most efficient, was about to give up and start studying for the final when he hit upon the idea of using a frequency-sorted

2343:

children. We then apply the process again, on the new internal node and on the remaining nodes (i.e., we exclude the two leaf nodes), we repeat this process until only one node remains, which is the root of the Huffman tree.

1159:

3012:

In the standard Huffman coding problem, it is assumed that any codeword can correspond to any input symbol. In the alphabetic version, the alphabetic order of inputs and outputs must be identical. Thus, for example,

2476:

choosing the item in the first queue. This modification will retain the mathematical optimality of the Huffman coding while both minimizing variance and minimizing the length of the longest character code.

2989:, no matter how many of those digits are 0s, how many are 1s, etc. When working under this assumption, minimizing the total cost of the message and minimizing the total number of digits are the same thing.

2995:

is the generalization without this assumption: the letters of the encoding alphabet may have non-uniform lengths, due to characteristics of the transmission medium. An example is the encoding alphabet of

2535:

The probabilities used can be generic ones for the application domain that are based on average experience, or they can be the actual frequencies found in the text being compressed. This requires that a

862:

3260:

3193:

3126:

3510:

followed by the use of prefix codes; these are often called "Huffman codes" even though most applications use pre-defined variable-length codes rather than codes designed using Huffman's algorithm.

2468:

here is the number of symbols in the alphabet, which is typically a very small number (compared to the length of the message to be encoded); whereas complexity analysis concerns the behavior when

2733:

Most often, the weights used in implementations of Huffman coding represent numeric probabilities, but the algorithm given above does not require this; it requires only that the weights form a

1674:

2844:

is a variant where the goal is still to achieve a minimum weighted path length, but there is an additional restriction that the length of each codeword must be less than a given constant. The

1994:

953:

681:

588:

2133:

2591:

coding. This reflects the fact that compression is not possible with such an input, no matter what the compression method, i.e., doing nothing to the data is the optimal thing to do.

3807:

2981:

In the standard Huffman coding problem, it is assumed that each symbol in the set that the code words are constructed from has an equal cost to transmit: a code word whose length is

3059:

2177:

3460:

3406:

1575:, the sum of the probability budgets across all symbols is always less than or equal to one. In this example, the sum is strictly equal to one; as a result, the code is termed a

1579:

code. If this is not the case, one can always derive an equivalent code by adding extra symbols (with associated null probabilities), to make the code complete while keeping it

2594:

Huffman coding is optimal among all methods in any case where each input symbol is a known independent and identically distributed random variable having a probability that is

966:

1190:

2831:

2520:

281:

methods, more common symbols are generally represented using fewer bits than less common symbols. Huffman's method can be efficiently implemented, finding a code in time

3311:

2970:

35:

decoder and thus does not need to be counted as part of the transmitted information). The frequencies and codes of each character are shown in the accompanying table.

2702:

to 1 contractor; for binary coding, this is a 2 to 1 contractor, and any sized set can form such a contractor. If the number of source words is congruent to 1 modulo

3315:

solution to this optimal binary alphabetic problem, which has some similarities to Huffman algorithm, but is not a variation of this algorithm. A later method, the

2882:

889:

2932:

2033:

2684:

2337:

2006:

In general, a Huffman code need not be unique. Thus the set of Huffman codes for a given probability distribution is a non-empty subset of the codes minimizing

273:

table for encoding a source symbol (such as a character in a file). The algorithm derives this table from the estimated probability or frequency of occurrence (

3994:

2902:

2609:. This technique adds one step in advance of entropy coding, specifically counting (runs) of repeated symbols, which are then encoded. For the simple case of

2311:

2259:

1085:

608:

686:

3474:

non-integer bit lengths, whereas code words in prefix codes such as Huffman codes can only have an integer number of bits. Therefore, a code word of length

2617:

is optimal among prefix codes for coding run length, a fact proved via the techniques of Huffman coding. A similar approach is taken by fax machines using

4662:

4473:

2540:

must be stored with the compressed text. See the Decompression section above for more information about the various techniques employed for this purpose.

2035:

for that probability distribution. (However, for each minimizing codeword length assignment, there exists at least one Huffman code with those lengths.)

4362:

2430:

Create a new internal node, with the two just-removed nodes as children (either node can be either child) and the sum of their weights as the new weight.

4176:

2293:

node. As a common convention, bit '0' represents following the left child and bit '1' represents following the right child. A finished tree has up to

1090:

5209:

4868:

4691:

4485:

3684:

2560:

3327:(1977), uses simpler logic to perform the same comparisons in the same total time bound. These optimal alphabetic binary trees are often used as

285:

to the number of input weights if these weights are sorted. However, although optimal among methods encoding symbols separately, Huffman coding

4873:

4450:

1587:

1921:{\displaystyle H(A)=\sum _{w_{i}>0}w_{i}h(a_{i})=\sum _{w_{i}>0}w_{i}\log _{2}{1 \over w_{i}}=-\sum _{w_{i}>0}w_{i}\log _{2}{w_{i}}.}

3609:

2461:

The final encoding of any symbol is then read by a concatenation of the labels on the edges along the path from the root node to the symbol.

263:

4603:

2421:

Enqueue all leaf nodes into the first queue (by probability in increasing order so that the least likely item is in the head of the queue).

1200:

We give an example of the result of Huffman coding for a code with five characters and given weights. We will not verify that it minimizes

2698:-ary tree for Huffman coding. In these cases, additional 0-probability place holders must be added. This is because the tree must form an

4980:

4718:

4657:

4468:

4418:

4241:

4086:

2442:

Once the Huffman tree has been generated, it is traversed to generate a dictionary which maps the symbols to binary codes as follows:

2000:

4101:

3987:

3944:

3746:

453:

3350:

and is often the code used in practice, due to ease of encoding/decoding. The technique for finding this code is sometimes called

2363:

Create a new internal node with these two nodes as children and with probability equal to the sum of the two nodes' probabilities.

5093:

3507:

785:

5103:

4941:

4792:

4711:

4505:

387:

3198:

3131:

3064:

5076:

4696:

4490:

4278:

434:

391:

4209:

4838:

4166:

406:

5173:

484:

3531:

5163:

5066:

4608:

3980:

1607:

502:

340:

to develop a similar code. Building the tree from the bottom up guaranteed optimality, unlike the top-down approach of

4156:

4151:

413:

5098:

3316:

1934:

2660:-ary tree. This approach was considered by Huffman in his original paper. The same algorithm applies as for binary (

5204:

5025:

4863:

4843:

4787:

4445:

4236:

4039:

3935:

894:

294:

3682:

Gallager, R.G.; van Voorhis, D.C. (1975). "Optimal source codes for geometrically distributed integer alphabets".

615:

522:

380:

5199:

5108:

5049:

4975:

4823:

4413:

4408:

4263:

4181:

4106:

2556:

2066:

247:

3711:

Abrahams, J. (1997-06-11). "Code and parse trees for lossless source encoding". Written at Arlington, VA, USA.

420:

5113:

4686:

4480:

4312:

3716:

4081:

3355:

341:

2052:

Visualisation of the use of Huffman coding to encode the message "A_DEAD_DAD_CEDED_A_BAD_BABE_A_BEADED_ABACA_

5054:

4425:

4268:

4064:

4054:

3016:

2845:

2716:

2621:. However, run-length coding is not as adaptable to as many input types as other compression technologies.

2618:

2281:

node which makes it easy to read the code (in reverse) starting from a leaf node. Internal nodes contain a

2138:

402:

4679:

4430:

4214:

4059:

3724:

3539:

3415:

3361:

3340:

2486:

2411:

1060:{\textstyle L\left(C\left(W\right)\right)=\sum _{i=1}^{n}{w_{i}\operatorname {length} \left(c_{i}\right)}}

5214:

5083:

3808:"A Dynamic Programming Algorithm for Constructing Optimal Prefix-Free Codes with Unequal Letter Costs"

352:

Huffman coding uses a specific method for choosing the representation for each symbol, resulting in a

4767:

4229:

4191:

4012:

3922:

1570:

1164:

270:

3729:

2748:

683:, which is the tuple of the (positive) symbol weights (usually proportional to probabilities), i.e.

4998:

4848:

4833:

4802:

4797:

4706:

4613:

4515:

4500:

4283:

3646:

2606:

2595:

2225:

1680:

2492:

5071:

5041:

5020:

4926:

4858:

4752:

4440:

4256:

4246:

4141:

4121:

4116:

3878:

3830:

3752:

3662:

3328:

2339:

internal nodes. A Huffman tree that omits unused symbols produces the most optimal code lengths.

313:

235:

4652:

3775:

3278:

2937:

2579:

For a set of symbols with a uniform probability distribution and a number of members which is a

2690:

least probable symbols are taken together, instead of just the 2 least probable. Note that for

2048:

266:, and published in the 1952 paper "A Method for the Construction of Minimum-Redundancy Codes".

5015:

5003:

4985:

4853:

4737:

4674:

4520:

4435:

4391:

4352:

4034:

3940:

3742:

3605:

3483:

3470:

3324:

2722:

2610:

2564:

2549:

290:

259:

4990:

4946:

4919:

4914:

4889:

4772:

4757:

4667:

4576:

4571:

4546:

4400:

4133:

4111:

4003:

3962:

3926:

3918:

3870:

3822:

3788:

3734:

3693:

3661:

Gribov, Alexander (2017-04-10). "Optimal Compression of a Polyline with Segments and Arcs".

3641:

3548:

3527:

3408:, which, having the same codeword lengths as the original solution, is also optimal. But in

2849:

2464:

In many cases, time complexity is not very important in the choice of algorithm here, since

2224:

The standard way to represent a signal made of 4 symbols is by using 2 bits/symbol, but the

427:

306:

278:

255:

231:

2855:

1996:. So for simplicity, symbols with zero probability can be left out of the formula above.)

867:

4909:

4723:

4647:

4628:

4598:

4566:

4532:

4091:

4029:

3770:

3566:

3482:

Prefix codes nevertheless remain in wide use because of their simplicity, high speed, and

3275:

3001:

2908:

2631:

2537:

2238:

2009:

1209:

2663:

2316:

4701:

4495:

4224:

4219:

4076:

4021:

3930:

3570:

3320:

2905:

2887:

2347:

2296:

2244:

2059:

1070:

767:{\displaystyle w_{i}=\operatorname {weight} \left(a_{i}\right),\,i\in \{1,2,\dots ,n\}}

593:

498:

337:

321:

3354:, since it is optimal like Huffman coding, but alphabetic in weight probability, like

5193:

5008:

4956:

4623:

4618:

4593:

4525:

4146:

4044:

3756:

2973:

time, unlike the presorted and unsorted conventional Huffman problems, respectively.

2614:

2266:

3004:

whose solution has been refined for the case of integer costs by Mordecai J. Golin.

2904:

is the maximum length of a codeword. No algorithm is known to solve this problem in

2427:

Dequeue the two nodes with the lowest weight by examining the fronts of both queues.

5129:

4096:

4071:

3896:

3834:

3627:

The use of asymmetric numeral systems as an accurate replacement for Huffman coding

2580:

2852:

approach very similar to that used by Huffman's algorithm. Its time complexity is

1931:(Note: A symbol with zero probability has zero contribution to the entropy, since

3599:

5088:

4966:

4762:

4638:

4588:

3861:(1971). "Optimal Computer Search Trees and Variable-Length Alphabetical Codes".

3858:

3271:

2734:

2403:

2234:

494:

369:

353:

330:

282:

243:

3738:

3624:

3552:

1154:{\displaystyle L\left(C\left(W\right)\right)\leq L\left(T\left(W\right)\right)}

5145:

4936:

4931:

4818:

4777:

4583:

3713:

Proceedings. Compression and Complexity of SEQUENCES 1997 (Cat. No.97TB100171)

2997:

2584:

325:

3792:

3697:

2360:

Remove the two nodes of highest priority (lowest probability) from the queue

2262:

466:

30:

17:

3972:

2563:, a single code may be insufficient for optimality. Other methods such as

5059:

4904:

4561:

3899:(1998), "Algorithm G (Garsia–Wachs algorithm for optimum binary trees)",

3854:

3267:

3957:

2706:−1, then the set of source words will form a proper Huffman tree.

4828:

4302:

4251:

3882:

3642:"Profile: David A. Huffman: Encoding the "Neatness" of Ones and Zeroes"

3487:

3826:

3486:. They are often used as a "back-end" to other compression methods.

4342:

2737:

2572:

2438:

The remaining node is the root node; the tree has now been generated.

3874:

2354:

Create a leaf node for each symbol and add it to the priority queue.

2277:(frequency of appearance) of the symbol and optionally, a link to a

3667:

5177:

4951:

4782:

4375:

4322:

3967:

3495:

3491:

2694:

greater than 2, not all sets of source words can properly form an

2588:

2350:

where the node with lowest probability is given highest priority:

2058:

465:

29:

2489:, the compression model can be precisely reconstructed with just

4332:

4186:

4171:

4161:

3720:

3715:. Division of Mathematics, Computer & Information Sciences,

3499:

3358:. The Huffman–Shannon–Fano code corresponding to the example is

2457:. Repeat the process at both the left child and the right child.

2449:

If node is not a leaf node, label the edge to the left child as

317:

3976:

4307:

4273:

3901:

The Art of Computer Programming, Vol. 3: Sorting and Searching

3776:"Minimum-redundancy coding for the discrete noiseless channel"

3503:

363:

310:

2837:

Length-limited Huffman coding/minimum variance Huffman coding

2375:

Since efficient priority queue data structures require O(log

2371:

The remaining node is the root node and the tree is complete.

277:) for each possible value of the source symbol. As in other

316:

classmates were given the choice of a term paper or a final

3532:"A Method for the Construction of Minimum-Redundancy Codes"

1215:

of the given set of weights; the result is nearly optimal.

857:{\displaystyle C\left(W\right)=(c_{1},c_{2},\dots ,c_{n})}

2269:. Initially, all nodes are leaf nodes, which contain the

3255:{\displaystyle H\left(A,C\right)=\left\{0,10,11\right\}}

3188:{\displaystyle H\left(A,C\right)=\left\{00,01,1\right\}}

3121:{\displaystyle H\left(A,C\right)=\left\{00,1,01\right\}}

3598:

Ze-Nian Li; Mark S. Drew; Jiangchuan Liu (2014-04-09).

2433:

Enqueue the new node into the rear of the second queue.

269:

The output from Huffman's algorithm can be viewed as a

2740:, meaning a way to order weights and to add them. The

2241:, the size of which depends on the number of symbols,

969:

336:

In doing so, Huffman outdid Fano, who had worked with

3418:

3364:

3281:

3201:

3134:

3067:

3019:

2940:

2911:

2890:

2858:

2751:

2725:, which is more flexible and has better compression.

2666:

2495:

2402:

If the symbols are sorted by probability, there is a

2319:

2299:

2247:

2141:

2069:

2012:

1937:

1717:

1610:

1167:

1093:

1073:

897:

870:

788:

689:

618:

596:

525:

289:

among all compression methods - it is replaced with

5138:

5122:

5040:

4965:

4897:

4888:

4811:

4745:

4736:

4637:

4554:

4545:

4461:

4399:

4390:

4292:

4202:

4132:

4020:

4011:

3958:

Huffman coding in various languages on Rosetta Code

3939:, Second Edition. MIT Press and McGraw-Hill, 2001.

501:codeword length (equivalently, a tree with minimum

394:. Unsourced material may be challenged and removed.

333:and quickly proved this method the most efficient.

3774:

3454:

3400:

3305:

3254:

3187:

3120:

3053:

3008:Optimal alphabetic binary trees (Hu–Tucker coding)

2964:

2926:

2896:

2876:

2825:

2678:

2514:

2331:

2305:

2253:

2171:

2127:

2027:

1988:

1920:

1686:(in bits) is the weighted sum, across all symbols

1669:{\displaystyle h(a_{i})=\log _{2}{1 \over w_{i}}.}

1668:

1184:

1153:

1079:

1059:

947:

883:

864:, which is the tuple of (binary) codewords, where

856:

766:

675:

602:

582:

3905:. See also History and bibliography, pp. 453–454.

2424:While there is more than one node in the queues:

250:. The process of finding or using such a code is

3903:(2nd ed.), Addison–Wesley, pp. 451–453

3274:, the authors of the paper presenting the first

2753:

2583:, Huffman coding is equivalent to simple binary

2357:While there is more than one node in the queue:

1939:

2605:A practical alternative, in widespread use, is

2418:Start with as many leaves as there are symbols.

1989:{\displaystyle \lim _{w\to 0^{+}}w\log _{2}w=0}

3625:J. Duda, K. Tahboub, N. J. Gadil, E. J. Delp,

3988:

2833:, a problem first applied to circuit design.

2656:− 1} alphabet to encode message and build an

2410:)) method to create a Huffman tree using two

1708:, of the information content of each symbol:

948:{\displaystyle a_{i},\,i\in \{1,2,\dots ,n\}}

8:

3449:

3419:

3395:

3365:

2166:

2142:

2122:

2070:

942:

918:

761:

737:

676:{\displaystyle W=(w_{1},w_{2},\dots ,w_{n})}

583:{\displaystyle A=(a_{1},a_{2},\dots ,a_{n})}

483:A set of symbols and their weights (usually

2346:The simplest construction algorithm uses a

2237:of nodes. These can be stored in a regular

2128:{\displaystyle \{a_{1},a_{2},a_{3},a_{4}\}}

297:if a better compression ratio is required.

4894:

4742:

4551:

4396:

4017:

3995:

3981:

3973:

2567:often have better compression capability.

36:

3728:

3666:

3604:. Springer Science & Business Media.

3417:

3363:

3280:

3200:

3133:

3066:

3018:

2939:

2910:

2889:

2857:

2808:

2780:

2771:

2756:

2750:

2665:

2506:

2494:

2318:

2298:

2246:

2140:

2116:

2103:

2090:

2077:

2068:

2011:

1968:

1953:

1942:

1936:

1908:

1903:

1894:

1884:

1866:

1861:

1843:

1834:

1825:

1815:

1797:

1792:

1776:

1760:

1742:

1737:

1716:

1655:

1646:

1637:

1621:

1609:

1166:

1092:

1072:

1046:

1026:

1021:

1015:

1004:

968:

911:

902:

896:

875:

869:

845:

826:

813:

787:

730:

717:

694:

688:

664:

645:

632:

617:

595:

571:

552:

539:

524:

454:Learn how and when to remove this message

3128:, but instead should be assigned either

2993:Huffman coding with unequal letter costs

2977:Huffman coding with unequal letter costs

2446:Start with current node set to the root.

2180:

2047:

1217:

3821:(5) (published 1998-09-01): 1770–1781.

3815:IEEE Transactions on Information Theory

3685:IEEE Transactions on Information Theory

3519:

2561:independent and identically distributed

2559:are unknown. Also, if symbols are not

2550:Arithmetic coding § Huffman coding

2387:−1 nodes, this algorithm operates in O(

2063:A source generates 4 different symbols

590:, which is the symbol alphabet of size

3781:IRE Transactions on Information Theory

3571:"On the construction of Huffman trees"

3054:{\displaystyle A=\left\{a,b,c\right\}}

2379:) time per insertion, and a tree with

3963:Huffman codes (python implementation)

2172:{\displaystyle \{0.4;0.35;0.2;0.05\}}

7:

3455:{\displaystyle \{110,111,00,01,10\}}

3401:{\displaystyle \{000,001,01,10,11\}}

1383:Contribution to weighted path length

1204:over all codes, but we will compute

1067:be the weighted path length of code

392:adding citations to reliable sources

3863:SIAM Journal on Applied Mathematics

3806:Golin, Mordekai J. (January 1998).

2453:and the edge to the right child as

2985:digits will always have a cost of

2848:solves this problem with a simple

2796:

2793:

2790:

2787:

2784:

2781:

2233:The technique works by creating a

503:weighted path length from the root

497:(a set of codewords) with minimum

25:

3968:A visualization of Huffman coding

3947:. Section 16.3, pp. 385–392.

3630:, Picture Coding Symposium, 2015.

5169:

5168:

5159:

5158:

368:

242:is a particular type of optimal

5210:Lossless compression algorithms

2001:Shannon's source coding theorem

1185:{\displaystyle T\left(W\right)}

379:needs additional citations for

286:

3300:

3285:

2959:

2944:

2921:

2915:

2871:

2862:

2826:{\displaystyle \max _{i}\left}

2652:algorithm uses the {0, 1,...,

2366:Add the new node to the queue.

2022:

2016:

1946:

1782:

1769:

1727:

1721:

1627:

1614:

851:

806:

670:

625:

577:

532:

1:

3494:'s algorithm) and multimedia

2842:Length-limited Huffman coding

1601:with non-null probability is

1468:Information content (in bits)

3262:. This is also known as the

2515:{\displaystyle B\cdot 2^{B}}

1551:

1548:

1545:

1542:

1539:

1536:

1502:

1499:

1496:

1493:

1490:

1487:

1462:

1459:

1456:

1453:

1450:

1447:

1423:

1420:

1417:

1414:

1411:

1408:

1377:

1374:

1371:

1368:

1365:

1339:

1334:

1329:

1324:

1319:

1294:

1291:

1288:

1285:

1282:

1279:

1257:

1254:

1251:

1248:

1245:

254:, an algorithm developed by

3506:have a front-end model and

3352:Huffman–Shannon–Fano coding

3061:could not be assigned code

2522:bits of information (where

1569:, meaning that the code is

470:Constructing a Huffman Tree

5233:

5050:Compressed data structures

4372:RLE + BWT + MTF + Huffman

4040:Asymmetric numeral systems

3936:Introduction to Algorithms

3739:10.1109/SEQUEN.1997.666911

3601:Fundamentals of Multimedia

3553:10.1109/JRPROC.1952.273898

3338:

3335:The canonical Huffman code

3306:{\displaystyle O(n\log n)}

2965:{\displaystyle O(n\log n)}

2742:Huffman template algorithm

2729:Huffman template algorithm

2723:adaptive arithmetic coding

2557:probability mass functions

2547:

2399:is the number of symbols.

2289:and an optional link to a

1697:with non-zero probability

1590:, the information content

295:asymmetric numeral systems

246:that is commonly used for

27:Technique to compress data

5154:

4409:Discrete cosine transform

4339:LZ77 + Huffman + context

2686:) codes, except that the

2261:. A node can be either a

1594:(in bits) of each symbol

1435:

1350:Codeword length (in bits)

1344:

1299:

1220:

248:lossless data compression

5114:Smallest grammar problem

3793:10.1109/TIT.1961.1057615

3717:Office of Naval Research

3698:10.1109/TIT.1975.1055357

3346:is sometimes called the

2472:grows to be very large.

5055:Compressed suffix array

4604:Nyquist–Shannon theorem

3484:lack of patent coverage

2846:package-merge algorithm

2717:adaptive Huffman coding

2710:Adaptive Huffman coding

2619:modified Huffman coding

1508:Contribution to entropy

495:prefix-free binary code

3540:Proceedings of the IRE

3456:

3410:canonical Huffman code

3402:

3348:canonical Huffman code

3341:Canonical Huffman code

3317:Garsia–Wachs algorithm

3307:

3256:

3189:

3122:

3055:

2966:

2928:

2898:

2878:

2827:

2680:

2516:

2333:

2307:

2255:

2230:

2173:

2129:

2056:

2029:

1990:

1922:

1670:

1208:and compare it to the

1186:

1155:

1081:

1061:

1020:

949:

885:

858:

768:

677:

604:

584:

510:Formalized description

471:

227:

5084:Kolmogorov complexity

4952:Video characteristics

4329:LZ77 + Huffman + ANS

3640:Huffman, Ken (1991).

3457:

3403:

3308:

3257:

3190:

3123:

3056:

2967:

2929:

2899:

2879:

2877:{\displaystyle O(nL)}

2828:

2681:

2517:

2334:

2308:

2256:

2174:

2130:

2062:

2051:

2030:

1991:

1923:

1671:

1565:For any code that is

1187:

1156:

1082:

1062:

1000:

950:

886:

884:{\displaystyle c_{i}}

859:

769:

678:

605:

585:

469:

287:is not always optimal

33:

5174:Compression software

4768:Compression artifact

4724:Psychoacoustic model

3923:Charles E. Leiserson

3723:. pp. 145–171.

3416:

3362:

3279:

3199:

3132:

3065:

3017:

2938:

2927:{\displaystyle O(n)}

2909:

2888:

2856:

2749:

2664:

2493:

2317:

2297:

2245:

2139:

2067:

2028:{\displaystyle L(C)}

2010:

1999:As a consequence of

1935:

1715:

1608:

1165:

1091:

1071:

967:

895:

891:is the codeword for

868:

786:

687:

616:

594:

523:

475:Informal description

388:improve this article

271:variable-length code

5164:Compression formats

4803:Texture compression

4798:Standard test image

4614:Silence compression

3647:Scientific American

3356:Shannon–Fano coding

3329:binary search trees

2714:A variation called

2679:{\displaystyle n=2}

2641:-ary Huffman coding

2611:Bernoulli processes

2607:run-length encoding

2332:{\displaystyle n-1}

1572:uniquely decodeable

342:Shannon–Fano coding

5072:Information theory

4927:Display resolution

4753:Chroma subsampling

4142:Byte pair encoding

4087:Shannon–Fano–Elias

3787:(1). IEEE: 27–38.

3452:

3398:

3303:

3252:

3185:

3118:

3051:

2962:

2924:

2894:

2874:

2823:

2761:

2738:commutative monoid

2676:

2512:

2487:canonical encoding

2329:

2303:

2251:

2231:

2169:

2125:

2057:

2025:

1986:

1960:

1918:

1879:

1810:

1755:

1666:

1439:Probability budget

1182:

1151:

1077:

1057:

945:

881:

854:

764:

673:

600:

580:

487:to probabilities).

472:

360:Problem definition

314:information theory

236:information theory

228:

5205:1952 in computing

5187:

5186:

5036:

5035:

4986:Deblocking filter

4884:

4883:

4732:

4731:

4541:

4540:

4386:

4385:

3827:10.1109/18.705558

3795:– via IEEE.

3611:978-3-319-05290-8

3471:Arithmetic coding

3325:Michelle L. Wachs

2897:{\displaystyle L}

2752:

2565:arithmetic coding

2306:{\displaystyle n}

2254:{\displaystyle n}

2223:

2222:

2135:with probability

1938:

1857:

1849:

1788:

1733:

1661:

1563:

1562:

1080:{\displaystyle C}

603:{\displaystyle n}

464:

463:

456:

438:

320:. The professor,

291:arithmetic coding

226:

225:

16:(Redirected from

5222:

5200:Data compression

5172:

5171:

5162:

5161:

4991:Lapped transform

4895:

4773:Image resolution

4758:Coding tree unit

4743:

4552:

4397:

4018:

4004:Data compression

3997:

3990:

3983:

3974:

3927:Ronald L. Rivest

3919:Thomas H. Cormen

3906:

3904:

3897:Knuth, Donald E.

3893:

3887:

3886:

3851:

3845:

3844:

3842:

3841:

3812:

3803:

3797:

3796:

3778:

3771:Karp, Richard M.

3767:

3761:

3760:

3732:

3719:(ONR). Salerno:

3708:

3702:

3701:

3679:

3673:

3672:

3670:

3658:

3652:

3651:

3637:

3631:

3622:

3616:

3615:

3595:

3589:

3588:

3586:

3585:

3575:

3567:Van Leeuwen, Jan

3563:

3557:

3556:

3547:(9): 1098–1101.

3536:

3524:

3461:

3459:

3458:

3453:

3412:, the result is

3407:

3405:

3404:

3399:

3312:

3310:

3309:

3304:

3261:

3259:

3258:

3253:

3251:

3247:

3223:

3219:

3194:

3192:

3191:

3186:

3184:

3180:

3156:

3152:

3127:

3125:

3124:

3119:

3117:

3113:

3089:

3085:

3060:

3058:

3057:

3052:

3050:

3046:

2971:

2969:

2968:

2963:

2933:

2931:

2930:

2925:

2903:

2901:

2900:

2895:

2883:

2881:

2880:

2875:

2832:

2830:

2829:

2824:

2822:

2818:

2817:

2813:

2812:

2799:

2776:

2775:

2760:

2685:

2683:

2682:

2677:

2525:

2521:

2519:

2518:

2513:

2511:

2510:

2338:

2336:

2335:

2330:

2312:

2310:

2309:

2304:

2260:

2258:

2257:

2252:

2181:

2178:

2176:

2175:

2170:

2134:

2132:

2131:

2126:

2121:

2120:

2108:

2107:

2095:

2094:

2082:

2081:

2034:

2032:

2031:

2026:

1995:

1993:

1992:

1987:

1973:

1972:

1959:

1958:

1957:

1927:

1925:

1924:

1919:

1914:

1913:

1912:

1899:

1898:

1889:

1888:

1878:

1871:

1870:

1850:

1848:

1847:

1835:

1830:

1829:

1820:

1819:

1809:

1802:

1801:

1781:

1780:

1765:

1764:

1754:

1747:

1746:

1707:

1696:

1675:

1673:

1672:

1667:

1662:

1660:

1659:

1647:

1642:

1641:

1626:

1625:

1533:

1484:

1444:

1405:

1395:

1362:

1342:

1337:

1332:

1327:

1322:

1316:

1276:

1242:

1218:

1191:

1189:

1188:

1183:

1181:

1160:

1158:

1157:

1152:

1150:

1146:

1145:

1120:

1116:

1115:

1086:

1084:

1083:

1078:

1066:

1064:

1063:

1058:

1056:

1055:

1051:

1050:

1031:

1030:

1019:

1014:

996:

992:

991:

954:

952:

951:

946:

907:

906:

890:

888:

887:

882:

880:

879:

863:

861:

860:

855:

850:

849:

831:

830:

818:

817:

802:

773:

771:

770:

765:

726:

722:

721:

699:

698:

682:

680:

679:

674:

669:

668:

650:

649:

637:

636:

609:

607:

606:

601:

589:

587:

586:

581:

576:

575:

557:

556:

544:

543:

459:

452:

448:

445:

439:

437:

403:"Huffman coding"

396:

372:

364:

307:David A. Huffman

279:entropy encoding

256:David A. Huffman

232:computer science

37:

21:

5232:

5231:

5225:

5224:

5223:

5221:

5220:

5219:

5190:

5189:

5188:

5183:

5150:

5134:

5118:

5099:Rate–distortion

5032:

4961:

4880:

4807:

4728:

4633:

4629:Sub-band coding

4537:

4462:Predictive type

4457:

4382:

4349:LZSS + Huffman

4299:LZ77 + Huffman

4288:

4198:

4134:Dictionary type

4128:

4030:Adaptive coding

4007:

4001:

3954:

3915:

3910:

3909:

3895:

3894:

3890:

3875:10.1137/0121057

3853:

3852:

3848:

3839:

3837:

3810:

3805:

3804:

3800:

3769:

3768:

3764:

3749:

3730:10.1.1.589.4726

3710:

3709:

3705:

3681:

3680:

3676:

3660:

3659:

3655:

3639:

3638:

3634:

3623:

3619:

3612:

3597:

3596:

3592:

3583:

3581:

3573:

3565:

3564:

3560:

3534:

3526:

3525:

3521:

3516:

3468:

3414:

3413:

3360:

3359:

3343:

3337:

3277:

3276:

3266:problem, after

3231:

3227:

3209:

3205:

3197:

3196:

3164:

3160:

3142:

3138:

3130:

3129:

3097:

3093:

3075:

3071:

3063:

3062:

3030:

3026:

3015:

3014:

3010:

3002:Richard M. Karp

2979:

2936:

2935:

2907:

2906:

2886:

2885:

2854:

2853:

2839:

2804:

2800:

2767:

2766:

2762:

2747:

2746:

2735:totally ordered

2731:

2712:

2662:

2661:

2643:

2632:polynomial time

2627:

2552:

2546:

2538:frequency table

2533:

2531:Main properties

2523:

2502:

2491:

2490:

2482:

2315:

2314:

2313:leaf nodes and

2295:

2294:

2287:two child nodes

2243:

2242:

2137:

2136:

2112:

2099:

2086:

2073:

2065:

2064:

2053:

2046:

2041:

2039:Basic technique

2008:

2007:

1964:

1949:

1933:

1932:

1904:

1890:

1880:

1862:

1839:

1821:

1811:

1793:

1772:

1756:

1738:

1713:

1712:

1706:

1698:

1695:

1687:

1651:

1633:

1617:

1606:

1605:

1600:

1532:

1524:

1520:

1511:

1509:

1483:

1475:

1471:

1469:

1442:

1440:

1404:

1396:

1394:

1386:

1384:

1361:

1353:

1351:

1340:

1335:

1330:

1325:

1320:

1315:

1307:

1275:

1267:

1241:

1233:

1210:Shannon entropy

1198:

1171:

1163:

1162:

1135:

1131:

1127:

1105:

1101:

1097:

1089:

1088:

1069:

1068:

1042:

1038:

1022:

981:

977:

973:

965:

964:

962:

957:

956:

898:

893:

892:

871:

866:

865:

841:

822:

809:

792:

784:

783:

781:

776:

775:

713:

709:

690:

685:

684:

660:

641:

628:

614:

613:

611:

592:

591:

567:

548:

535:

521:

520:

518:

512:

477:

460:

449:

443:

440:

397:

395:

385:

373:

362:

350:

303:

258:while he was a

28:

23:

22:

15:

12:

11:

5:

5230:

5229:

5226:

5218:

5217:

5212:

5207:

5202:

5192:

5191:

5185:

5184:

5182:

5181:

5166:

5155:

5152:

5151:

5149:

5148:

5142:

5140:

5136:

5135:

5133:

5132:

5126:

5124:

5120:

5119:

5117:

5116:

5111:

5106:

5101:

5096:

5091:

5086:

5081:

5080:

5079:

5069:

5064:

5063:

5062:

5057:

5046:

5044:

5038:

5037:

5034:

5033:

5031:

5030:

5029:

5028:

5023:

5013:

5012:

5011:

5006:

5001:

4993:

4988:

4983:

4978:

4972:

4970:

4963:

4962:

4960:

4959:

4954:

4949:

4944:

4939:

4934:

4929:

4924:

4923:

4922:

4917:

4912:

4901:

4899:

4892:

4886:

4885:

4882:

4881:

4879:

4878:

4877:

4876:

4871:

4866:

4861:

4851:

4846:

4841:

4836:

4831:

4826:

4821:

4815:

4813:

4809:

4808:

4806:

4805:

4800:

4795:

4790:

4785:

4780:

4775:

4770:

4765:

4760:

4755:

4749:

4747:

4740:

4734:

4733:

4730:

4729:

4727:

4726:

4721:

4716:

4715:

4714:

4709:

4704:

4699:

4694:

4684:

4683:

4682:

4672:

4671:

4670:

4665:

4655:

4650:

4644:

4642:

4635:

4634:

4632:

4631:

4626:

4621:

4616:

4611:

4606:

4601:

4596:

4591:

4586:

4581:

4580:

4579:

4574:

4569:

4558:

4556:

4549:

4543:

4542:

4539:

4538:

4536:

4535:

4533:Psychoacoustic

4530:

4529:

4528:

4523:

4518:

4510:

4509:

4508:

4503:

4498:

4493:

4488:

4478:

4477:

4476:

4465:

4463:

4459:

4458:

4456:

4455:

4454:

4453:

4448:

4443:

4433:

4428:

4423:

4422:

4421:

4416:

4405:

4403:

4401:Transform type

4394:

4388:

4387:

4384:

4383:

4381:

4380:

4379:

4378:

4370:

4369:

4368:

4365:

4357:

4356:

4355:

4347:

4346:

4345:

4337:

4336:

4335:

4327:

4326:

4325:

4317:

4316:

4315:

4310:

4305:

4296:

4294:

4290:

4289:

4287:

4286:

4281:

4276:

4271:

4266:

4261:

4260:

4259:

4254:

4244:

4239:

4234:

4233:

4232:

4222:

4217:

4212:

4206:

4204:

4200:

4199:

4197:

4196:

4195:

4194:

4189:

4184:

4179:

4174:

4169:

4164:

4159:

4154:

4144:

4138:

4136:

4130:

4129:

4127:

4126:

4125:

4124:

4119:

4114:

4109:

4099:

4094:

4089:

4084:

4079:

4074:

4069:

4068:

4067:

4062:

4057:

4047:

4042:

4037:

4032:

4026:

4024:

4015:

4009:

4008:

4002:

4000:

3999:

3992:

3985:

3977:

3971:

3970:

3965:

3960:

3953:

3952:External links

3950:

3949:

3948:

3931:Clifford Stein

3914:

3911:

3908:

3907:

3888:

3846:

3798:

3773:(1961-01-31).

3762:

3747:

3703:

3692:(2): 228–230.

3674:

3653:

3632:

3617:

3610:

3590:

3558:

3518:

3517:

3515:

3512:

3467:

3464:

3451:

3448:

3445:

3442:

3439:

3436:

3433:

3430:

3427:

3424:

3421:

3397:

3394:

3391:

3388:

3385:

3382:

3379:

3376:

3373:

3370:

3367:

3339:Main article:

3336:

3333:

3321:Adriano Garsia

3302:

3299:

3296:

3293:

3290:

3287:

3284:

3250:

3246:

3243:

3240:

3237:

3234:

3230:

3226:

3222:

3218:

3215:

3212:

3208:

3204:

3183:

3179:

3176:

3173:

3170:

3167:

3163:

3159:

3155:

3151:

3148:

3145:

3141:

3137:

3116:

3112:

3109:

3106:

3103:

3100:

3096:

3092:

3088:

3084:

3081:

3078:

3074:

3070:

3049:

3045:

3042:

3039:

3036:

3033:

3029:

3025:

3022:

3009:

3006:

2978:

2975:

2961:

2958:

2955:

2952:

2949:

2946:

2943:

2923:

2920:

2917:

2914:

2893:

2873:

2870:

2867:

2864:

2861:

2838:

2835:

2821:

2816:

2811:

2807:

2803:

2798:

2795:

2792:

2789:

2786:

2783:

2779:

2774:

2770:

2765:

2759:

2755:

2730:

2727:

2711:

2708:

2675:

2672:

2669:

2642:

2636:

2626:

2623:

2585:block encoding

2545:

2542:

2532:

2529:

2509:

2505:

2501:

2498:

2481:

2478:

2459:

2458:

2447:

2440:

2439:

2436:

2435:

2434:

2431:

2428:

2422:

2419:

2395:) time, where

2373:

2372:

2369:

2368:

2367:

2364:

2361:

2355:

2348:priority queue

2328:

2325:

2322:

2302:

2250:

2221:

2220:

2217:

2213:

2212:

2209:

2205:

2204:

2201:

2197:

2196:

2193:

2189:

2188:

2185:

2168:

2165:

2162:

2159:

2156:

2153:

2150:

2147:

2144:

2124:

2119:

2115:

2111:

2106:

2102:

2098:

2093:

2089:

2085:

2080:

2076:

2072:

2045:

2042:

2040:

2037:

2024:

2021:

2018:

2015:

1985:

1982:

1979:

1976:

1971:

1967:

1963:

1956:

1952:

1948:

1945:

1941:

1929:

1928:

1917:

1911:

1907:

1902:

1897:

1893:

1887:

1883:

1877:

1874:

1869:

1865:

1860:

1856:

1853:

1846:

1842:

1838:

1833:

1828:

1824:

1818:

1814:

1808:

1805:

1800:

1796:

1791:

1787:

1784:

1779:

1775:

1771:

1768:

1763:

1759:

1753:

1750:

1745:

1741:

1736:

1732:

1729:

1726:

1723:

1720:

1702:

1691:

1677:

1676:

1665:

1658:

1654:

1650:

1645:

1640:

1636:

1632:

1629:

1624:

1620:

1616:

1613:

1598:

1588:Shannon (1948)

1586:As defined by

1561:

1560:

1550:

1547:

1544:

1541:

1538:

1535:

1528:

1522:

1516:

1505:

1504:

1501:

1498:

1495:

1492:

1489:

1486:

1479:

1473:

1465:

1464:

1461:

1458:

1455:

1452:

1449:

1446:

1437:

1433:

1432:

1422:

1419:

1416:

1413:

1410:

1407:

1400:

1390:

1380:

1379:

1376:

1373:

1370:

1367:

1364:

1357:

1347:

1346:

1343:

1338:

1333:

1328:

1323:

1318:

1311:

1304:

1297:

1296:

1293:

1290:

1287:

1284:

1281:

1278:

1271:

1263:

1262:

1259:

1256:

1253:

1250:

1247:

1244:

1237:

1230:

1197:

1194:

1180:

1177:

1174:

1170:

1149:

1144:

1141:

1138:

1134:

1130:

1126:

1123:

1119:

1114:

1111:

1108:

1104:

1100:

1096:

1076:

1054:

1049:

1045:

1041:

1037:

1034:

1029:

1025:

1018:

1013:

1010:

1007:

1003:

999:

995:

990:

987:

984:

980:

976:

972:

944:

941:

938:

935:

932:

929:

926:

923:

920:

917:

914:

910:

905:

901:

878:

874:

853:

848:

844:

840:

837:

834:

829:

825:

821:

816:

812:

808:

805:

801:

798:

795:

791:

763:

760:

757:

754:

751:

748:

745:

742:

739:

736:

733:

729:

725:

720:

716:

712:

708:

705:

702:

697:

693:

672:

667:

663:

659:

656:

653:

648:

644:

640:

635:

631:

627:

624:

621:

599:

579:

574:

570:

566:

563:

560:

555:

551:

547:

542:

538:

534:

531:

528:

511:

508:

507:

506:

491:

488:

481:

476:

473:

462:

461:

376:

374:

367:

361:

358:

349:

346:

338:Claude Shannon

322:Robert M. Fano

302:

299:

252:Huffman coding

224:

223:

220:

217:

213:

212:

209:

206:

202:

201:

198:

195:

191:

190:

187:

184:

180:

179:

176:

173:

169:

168:

165:

162:

158:

157:

154:

151:

147:

146:

143:

140:

136:

135:

132:

129:

125:

124:

121:

118:

114:

113:

110:

107:

103:

102:

99:

96:

92:

91:

88:

85:

81:

80:

77:

74:

70:

69:

66:

63:

59:

58:

55:

52:

48:

47:

44:

41:

26:

24:

14:

13:

10:

9:

6:

4:

3:

2:

5228:

5227:

5216:

5213:

5211:

5208:

5206:

5203:

5201:

5198:

5197:

5195:

5179:

5175:

5167:

5165:

5157:

5156:

5153:

5147:

5144:

5143:

5141:

5137:

5131:

5128:

5127:

5125:

5121:

5115:

5112:

5110:

5107:

5105:

5102:

5100:

5097:

5095:

5092:

5090:

5087:

5085:

5082:

5078:

5075:

5074:

5073:

5070:

5068:

5065:

5061:

5058:

5056:

5053:

5052:

5051:

5048:

5047:

5045:

5043:

5039:

5027:

5024:

5022:

5019:

5018:

5017:

5014:

5010:

5007:

5005:

5002:

5000:

4997:

4996:

4994:

4992:

4989:

4987:

4984:

4982:

4979:

4977:

4974:

4973:

4971:

4968:

4964:

4958:

4957:Video quality

4955:

4953:

4950:

4948:

4945:

4943:

4940:

4938:

4935:

4933:

4930:

4928:

4925:

4921:

4918:

4916:

4913:

4911:

4908:

4907:

4906:

4903:

4902:

4900:

4896:

4893:

4891:

4887:

4875:

4872:

4870:

4867:

4865:

4862:

4860:

4857:

4856:

4855:

4852:

4850:

4847:

4845:

4842:

4840:

4837:

4835:

4832:

4830:

4827:

4825:

4822:

4820:

4817:

4816:

4814:

4810:

4804:

4801:

4799:

4796:

4794:

4791:

4789:

4786:

4784:

4781:

4779:

4776:

4774:

4771:

4769:

4766:

4764:

4761:

4759:

4756:

4754:

4751:

4750:

4748:

4744:

4741:

4739:

4735:

4725:

4722:

4720:

4717:

4713:

4710:

4708:

4705:

4703:

4700:

4698:

4695:

4693:

4690:

4689:

4688:

4685:

4681:

4678:

4677:

4676:

4673:

4669:

4666:

4664:

4661:

4660:

4659:

4656:

4654:

4651:

4649:

4646:

4645:

4643:

4640:

4636:

4630:

4627:

4625:

4624:Speech coding

4622:

4620:

4619:Sound quality

4617:

4615:

4612:

4610:

4607:

4605:

4602:

4600:

4597:

4595:

4594:Dynamic range

4592:

4590:

4587:

4585:

4582:

4578:

4575:

4573:

4570:

4568:

4565:

4564:

4563:

4560:

4559:

4557:

4553:

4550:

4548:

4544:

4534:

4531:

4527:

4524:

4522:

4519:

4517:

4514:

4513:

4511:

4507:

4504:

4502:

4499:

4497:

4494:

4492:

4489:

4487:

4484:

4483:

4482:

4479:

4475:

4472:

4471:

4470:

4467:

4466:

4464:

4460:

4452:

4449:

4447:

4444:

4442:

4439:

4438:

4437:

4434:

4432:

4429:

4427:

4424:

4420:

4417:

4415:

4412:

4411:

4410:

4407:

4406:

4404:

4402:

4398:

4395:

4393:

4389:

4377:

4374:

4373:

4371:

4366:

4364:

4361:

4360:

4359:LZ77 + Range

4358:

4354:

4351:

4350:

4348:

4344:

4341:

4340:

4338:

4334:

4331:

4330:

4328:

4324:

4321:

4320:

4318:

4314:

4311:

4309:

4306:

4304:

4301:

4300:

4298:

4297:

4295:

4291:

4285:

4282:

4280:

4277:

4275:

4272:

4270:

4267:

4265:

4262:

4258:

4255:

4253:

4250:

4249:

4248:

4245:

4243:

4240:

4238:

4235:

4231:

4228:

4227:

4226:

4223:

4221:

4218:

4216:

4213:

4211:

4208:

4207:

4205:

4201:

4193:

4190:

4188:

4185:

4183:

4180:

4178:

4175:

4173:

4170:

4168:

4165:

4163:

4160:

4158:

4155:

4153:

4150:

4149:

4148:

4145:

4143:

4140:

4139:

4137:

4135:

4131:

4123:

4120:

4118:

4115:

4113:

4110:

4108:

4105:

4104:

4103:

4100:

4098:

4095:

4093:

4090:

4088:

4085:

4083:

4080:

4078:

4075:

4073:

4070:

4066:

4063:

4061:

4058:

4056:

4053:

4052:

4051:

4048:

4046:

4043:

4041:

4038:

4036:

4033:

4031:

4028:

4027:

4025:

4023:

4019:

4016:

4014:

4010:

4005:

3998:

3993:

3991:

3986:

3984:

3979:

3978:

3975:

3969:

3966:

3964:

3961:

3959:

3956:

3955:

3951:

3946:

3945:0-262-03293-7

3942:

3938:

3937:

3932:

3928:

3924:

3920:

3917:

3916:

3912:

3902:

3898:

3892:

3889:

3884:

3880:

3876:

3872:

3868:

3864:

3860:

3859:Tucker, A. C.

3856:

3850:

3847:

3836:

3832:

3828:

3824:

3820:

3816:

3809:

3802:

3799:

3794:

3790:

3786:

3782:

3777:

3772:

3766:

3763:

3758:

3754:

3750:

3748:0-8186-8132-2

3744:

3740:

3736:

3731:

3726:

3722:

3718:

3714:

3707:

3704:

3699:

3695:

3691:

3687:

3686:

3678:

3675:

3669:

3664:

3657:

3654:

3649:

3648:

3643:

3636:

3633:

3629:

3628:

3621:

3618:

3613:

3607:

3603:

3602:

3594:

3591:

3579:

3572:

3568:

3562:

3559:

3554:

3550:

3546:

3542:

3541:

3533:

3529:

3523:

3520:

3513:

3511:

3509:

3505:

3501:

3497:

3493:

3489:

3485:

3480:

3477:

3472:

3465:

3463:

3446:

3443:

3440:

3437:

3434:

3431:

3428:

3425:

3422:

3411:

3392:

3389:

3386:

3383:

3380:

3377:

3374:

3371:

3368:

3357:

3353:

3349:

3342:

3334:

3332:

3330:

3326:

3322:

3318:

3314:

3297:

3294:

3291:

3288:

3282:

3273:

3269:

3265:

3248:

3244:

3241:

3238:

3235:

3232:

3228:

3224:

3220:

3216:

3213:

3210:

3206:

3202:

3181:

3177:

3174:

3171:

3168:

3165:

3161:

3157:

3153:

3149:

3146:

3143:

3139:

3135:

3114:

3110:

3107:

3104:

3101:

3098:

3094:

3090:

3086:

3082:

3079:

3076:

3072:

3068:

3047:

3043:

3040:

3037:

3034:

3031:

3027:

3023:

3020:

3007:

3005:

3003:

2999:

2994:

2990:

2988:

2984:

2976:

2974:

2972:

2956:

2953:

2950:

2947:

2941:

2918:

2912:

2891:

2868:

2865:

2859:

2851:

2847:

2843:

2836:

2834:

2819:

2814:

2809:

2805:

2801:

2777:

2772:

2768:

2763:

2757:

2743:

2739:

2736:

2728:

2726:

2724:

2719:

2718:

2709:

2707:

2705:

2701:

2697:

2693:

2689:

2673:

2670:

2667:

2659:

2655:

2651:

2649:

2640:

2637:

2635:

2633:

2624:

2622:

2620:

2616:

2615:Golomb coding

2612:

2608:

2603:

2599:

2597:

2592:

2590:

2586:

2582:

2577:

2574:

2568:

2566:

2562:

2558:

2551:

2543:

2541:

2539:

2530:

2528:

2507:

2503:

2499:

2496:

2488:

2480:Decompression

2479:

2477:

2473:

2471:

2467:

2462:

2456:

2452:

2448:

2445:

2444:

2443:

2437:

2432:

2429:

2426:

2425:

2423:

2420:

2417:

2416:

2415:

2413:

2409:

2405:

2400:

2398:

2394:

2390:

2386:

2382:

2378:

2370:

2365:

2362:

2359:

2358:

2356:

2353:

2352:

2351:

2349:

2344:

2340:

2326:

2323:

2320:

2300:

2292:

2288:

2284:

2280:

2276:

2272:

2268:

2267:internal node

2264:

2248:

2240:

2236:

2227:

2218:

2215:

2214:

2210:

2207:

2206:

2202:

2199:

2198:

2194:

2191:

2190:

2186:

2183:

2182:

2163:

2160:

2157:

2154:

2151:

2148:

2145:

2117:

2113:

2109:

2104:

2100:

2096:

2091:

2087:

2083:

2078:

2074:

2061:

2050:

2043:

2038:

2036:

2019:

2013:

2004:

2002:

1997:

1983:

1980:

1977:

1974:

1969:

1965:

1961:

1954:

1950:

1943:

1915:

1909:

1905:

1900:

1895:

1891:

1885:

1881:

1875:

1872:

1867:

1863:

1858:

1854:

1851:

1844:

1840:

1836:

1831:

1826:

1822:

1816:

1812:

1806:

1803:

1798:

1794:

1789:

1785:

1777:

1773:

1766:

1761:

1757:

1751:

1748:

1743:

1739:

1734:

1730:

1724:

1718:

1711:

1710:

1709:

1705:

1701:

1694:

1690:

1685:

1682:

1663:

1656:

1652:

1648:

1643:

1638:

1634:

1630:

1622:

1618:

1611:

1604:

1603:

1602:

1597:

1593:

1589:

1584:

1582:

1578:

1574:

1573:

1568:

1558:

1554:

1531:

1527:

1519:

1515:

1507:

1506:

1482:

1478:

1467:

1466:

1438:

1434:

1430:

1426:

1403:

1399:

1393:

1389:

1382:

1381:

1360:

1356:

1349:

1348:

1314:

1310:

1305:

1303:

1298:

1274:

1270:

1265:

1264:

1260:

1240:

1236:

1231:

1228:

1224:

1219:

1216:

1214:

1211:

1207:

1203:

1195:

1193:

1178:

1175:

1172:

1168:

1161:for any code

1147:

1142:

1139:

1136:

1132:

1128:

1124:

1121:

1117:

1112:

1109:

1106:

1102:

1098:

1094:

1087:. Condition:

1074:

1052:

1047:

1043:

1039:

1035:

1032:

1027:

1023:

1016:

1011:

1008:

1005:

1001:

997:

993:

988:

985:

982:

978:

974:

970:

960:

939:

936:

933:

930:

927:

924:

921:

915:

912:

908:

903:

899:

876:

872:

846:

842:

838:

835:

832:

827:

823:

819:

814:

810:

803:

799:

796:

793:

789:

779:

758:

755:

752:

749:

746:

743:

740:

734:

731:

727:

723:

718:

714:

710:

706:

703:

700:

695:

691:

665:

661:

657:

654:

651:

646:

642:

638:

633:

629:

622:

619:

597:

572:

568:

564:

561:

558:

553:

549:

545:

540:

536:

529:

526:

516:

509:

504:

500:

496:

492:

489:

486:

482:

479:

478:

474:

468:

458:

455:

447:

444:December 2021

436:

433:

429:

426:

422:

419:

415:

412:

408:

405: –

404:

400:

399:Find sources:

393:

389:

383:

382:

377:This article

375:

371:

366:

365:

359:

357:

355:

347:

345:

343:

339:

334:

332:

327:

324:, assigned a

323:

319:

315:

312:

308:

300:

298:

296:

292:

288:

284:

280:

276:

272:

267:

265:

261:

257:

253:

249:

245:

241:

237:

233:

221: