The concentration parameter of this distribution controls the relative density or sparseness of the resulting transition matrix. A choice of 1 yields a uniform distribution. Values greater than 1 produce a dense matrix, in which the transition probabilities between pairs of states are likely to be nearly equal. Values less than 1 result in a sparse matrix in which, for each given source state, only a small number of destination states have non-negligible transition probabilities. It is also possible to use a two-level prior Dirichlet distribution, in which one Dirichlet distribution (the upper distribution) governs the parameters of another Dirichlet distribution (the lower distribution), which in turn governs the transition probabilities. The upper distribution governs the overall distribution of states, determining how likely each state is to occur; its concentration parameter determines the density or sparseness of states. Such a two-level prior distribution, where both concentration parameters are set to produce sparse distributions, might be useful, for example, in part-of-speech tagging.
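
The effect of the concentration parameter can be checked numerically. The following is a minimal sketch, not taken from the text above: it draws transition-matrix rows from a symmetric Dirichlet distribution using only the Python standard library (normalized gamma draws) and reports the average size of the largest entry in a row. The function names, the number of states (5), the seed, and the sample size are illustrative assumptions.

```python
import random


def sample_symmetric_dirichlet(alpha, k, rng):
    """Draw one probability vector from a symmetric Dirichlet(alpha, ..., alpha)."""
    # Normalizing independent Gamma(alpha, 1) draws yields a Dirichlet sample.
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def mean_largest_entry(alpha, k=5, n_rows=2000, seed=0):
    """Average of the largest probability over many sampled transition rows."""
    rng = random.Random(seed)
    largest = (max(sample_symmetric_dirichlet(alpha, k, rng)) for _ in range(n_rows))
    return sum(largest) / n_rows


# Sparse (alpha < 1), uniform prior (alpha = 1), dense (alpha > 1).
for alpha in (0.1, 1.0, 10.0):
    print(alpha, round(mean_largest_entry(alpha), 3))
```

With a concentration parameter below 1, rows tend to be dominated by a single large entry; above 1, all entries tend to sit close to 1/5.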

Bayesian inference methods such as Markov chain Monte Carlo (MCMC) sampling have been shown to be favorable over finding a single maximum likelihood model, both in terms of accuracy and stability. Since MCMC imposes a significant computational burden, in cases where computational scalability is also of interest, one may alternatively resort to variational approximations to Bayesian inference. Approximate variational inference offers computational efficiency comparable to expectation-maximization, while yielding an accuracy profile only slightly inferior to exact MCMC-type Bayesian inference.

methods such as the Forward-Backward and Viterbi algorithms, which require knowledge of the joint law of the HMM and can be computationally intensive to learn, the Discriminative Forward-Backward and Discriminative Viterbi algorithms circumvent the need for the observation's law. This breakthrough allows the HMM to be applied as a discriminative model, offering a more efficient and versatile approach to leveraging Hidden Markov Models in various applications.

the observed data. This information, encoded in the form of a high-dimensional vector, is used as a conditioning variable of the HMM state transition probabilities. Under such a setup, we eventually obtain a nonstationary HMM the transition probabilities of which evolve over time in a manner that is inferred from the data itself, as opposed to some unrealistic ad-hoc model of temporal evolution.

state and its associated observation; rather, features of nearby observations, of combinations of the associated observation and nearby observations, or in fact of arbitrary observations at any distance from a given hidden state can be included in the process used to determine the value of a hidden state. Furthermore, there is no need for these features to be

unique label y1, y2, y3, ... . The genie chooses an urn in that room and randomly draws a ball from that urn. It then puts the ball onto a conveyor belt, where the observer can observe the sequence of the balls but not the sequence of urns from which they were drawn. The genie has some procedure to choose urns; the choice of the urn for the

We can find the most likely sequence by evaluating the joint probability of both the state sequence and the observations for each case (simply by multiplying the probability values, which here correspond to the opacities of the arrows involved). In general, this type of problem (i.e. finding the most

Finally, a different rationale towards addressing the problem of modeling nonstationary data by means of hidden Markov models was suggested in 2012. It consists in employing a small recurrent neural network (RNN), specifically a reservoir network, to capture the evolution of the temporal dynamics in

model"). The advantage of this type of model is that arbitrary features (i.e. functions) of the observations can be modeled, allowing domain-specific knowledge of the problem at hand to be injected into the model. Models of this sort are not limited to modeling direct dependencies between a hidden

The state transition and output probabilities of an HMM are indicated by the line opacity in the upper part of the diagram. Given that we have observed the output sequence in the lower part of the diagram, we may be interested in the most likely sequence of states that could have produced it. Based

All of the above models can be extended to allow for more distant dependencies among hidden states, e.g. allowing for a given state to be dependent on the previous two or three states rather than a single previous state; i.e. the transition probabilities are extended to encompass sets of three or

The model suitable in the context of longitudinal data is named latent Markov model. The basic version of this model has been extended to include individual covariates, random effects and to model more complex data structures such as multilevel data. A complete overview of the latent Markov

of each other, as would be the case if such features were used in a generative model. Finally, arbitrary features over pairs of adjacent hidden states can be used rather than simple transition probabilities. The disadvantages of such models are: (1) The types of prior distributions that can be

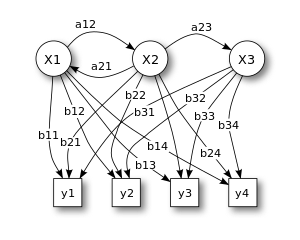

The Markov process itself cannot be observed, only the sequence of labeled balls, thus this arrangement is called a "hidden Markov process". This is illustrated by the lower part of the diagram shown in Figure 1, where one can see that balls y1, y2, y3, y4 can be drawn at each state. Even if the

possible states, there is a set of emission probabilities governing the distribution of the observed variable at a particular time given the state of the hidden variable at that time. The size of this set depends on the nature of the observed variable. For example, if the observed variable is

with replacement (where each item from the urn is returned to the original urn before the next step). Consider this example: in a room that is not visible to an observer there is a genie. The room contains urns X1, X2, X3, ... each of which contains a known mix of balls, with each ball having a

In 2023, two innovative algorithms were introduced for the Hidden Markov Model. These algorithms enable the computation of the posterior distribution of the HMM without the necessity of explicitly modeling the joint distribution, utilizing only the conditional distributions. Unlike traditional

will have an HMM probability (in the case of the forward algorithm) or a maximum state sequence probability (in the case of the Viterbi algorithm) at least as large as that of a particular output sequence? When an HMM is used to evaluate the relevance of a hypothesis for a particular output

Consider two friends, Alice and Bob, who live far apart from each other and who talk together daily over the telephone about what they did that day. Bob is only interested in three activities: walking in the park, shopping, and cleaning his apartment. The choice of what to do is determined

sequence of hidden states that generated a particular sequence of observations (see illustration on the right). This task is generally applicable when HMMs are applied to different sorts of problems from those for which the tasks of filtering and smoothing are applicable. An example is

in place of a Dirichlet distribution. This type of model allows for an unknown and potentially infinite number of states. It is common to use a two-level Dirichlet process, similar to the previously described model with two levels of Dirichlet distributions. Such a model is called a

is small, it may be more practical to restrict the nature of the covariances between individual elements of the observation vector, e.g. by assuming that the elements are independent of each other, or less restrictively, are independent of all but a fixed number of adjacent elements.)

represents Alice's belief about which state the HMM is in when Bob first calls her (all she knows is that it tends to be rainy on average). The particular probability distribution used here is not the equilibrium one, which is (given the transition probabilities) approximately

This task is used when the sequence of latent variables is thought of as the underlying states that a process moves through at a sequence of points in time, with corresponding observations at each point. Then, it is natural to ask about the state of the process at the end.

placed on hidden states are severely limited; (2) It is not possible to predict the probability of seeing an arbitrary observation. This second limitation is often not an issue in practice, since many common usages of HMMs do not require such predictive probabilities.

In part-of-speech tagging, some parts of speech occur much more commonly than others; learning algorithms that assume a uniform prior distribution generally perform poorly on this task. The parameters of models of this sort, with non-uniform prior distributions, can be learned using

prior distribution over the transition probabilities. However, it is also possible to create hidden Markov models with other types of prior distributions. An obvious candidate, given the categorical distribution of the transition probabilities, is the

distribution of the categorical distribution. Typically, a symmetric Dirichlet distribution is chosen, reflecting ignorance about which states are inherently more likely than others. The single parameter of this distribution (termed the

exclusively by the weather on a given day. Alice has no definite information about the weather, but she knows general trends. Based on what Bob tells her he did each day, Alice tries to guess what the weather must have been like.

corresponding to an observed sequence of words. In this case, what is of interest is the entire sequence of parts of speech, rather than simply the part of speech for a single word, as filtering or smoothing would compute.
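
Recovering an entire most likely state sequence, rather than one state at a time, can be sketched with the Viterbi recursion. The example below is a hedged illustration on a toy two-state weather model instead of a part-of-speech tagger; the parameter values and the names `STATES`, `START`, `TRANS`, `EMIT`, and `viterbi` are assumptions chosen for illustration.

```python
# Toy model (assumed values): hidden weather states and observed activities.
STATES = ("Rainy", "Sunny")
START = {"Rainy": 0.6, "Sunny": 0.4}
TRANS = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
EMIT = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def viterbi(obs, states, start, trans, emit):
    """Return (probability, path) of the single most likely state sequence."""
    # best[s] holds the best joint probability of any path ending in state s,
    # together with that path.
    best = {s: (start[s] * emit[s][obs[0]], [s]) for s in states}
    for o in obs[1:]:
        new_best = {}
        for s in states:
            # Pick the predecessor that maximizes the path probability into s.
            prob, path = max(
                ((best[r][0] * trans[r][s], best[r][1]) for r in states),
                key=lambda item: item[0],
            )
            new_best[s] = (prob * emit[s][o], path + [s])
        best = new_best
    return max(best.values(), key=lambda item: item[0])


probability, path = viterbi(("walk", "shop", "clean"), STATES, START, TRANS, EMIT)
print(path, probability)
```

The recursion keeps only one best path per state at each step, so its cost grows linearly with the sequence length instead of exponentially.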

Conversely, there exists a space of subshifts on 6 symbols, projected to subshifts on 2 symbols, such that any Markov measure on the smaller subshift has a preimage measure that is not Markov of any order (Example 2.6).

, in which an auxiliary underlying process is added to model some data specificities. Many variants of this model have been proposed. One should also mention the interesting link that has been established between the

of standard HMMs. This type of model directly models the conditional distribution of the hidden states given the observations, rather than modeling the joint distribution. An example of this model is the so-called

from her. On each day, there is a certain chance that Bob will perform one of the following activities, depending on the weather: "walk", "shop", or "clean". Since Bob tells Alice about his activities, those are the

represents how likely Bob is to perform a certain activity on each day. If it is rainy, there is a 50% chance that he is cleaning his apartment; if it is sunny, there is a 60% chance that he is outside for a walk.
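
The parameters of the Alice and Bob example can be written down as plain Python dictionaries. This is a sketch under stated assumptions: the text fixes only three numbers (a 30% chance of sun after rain, a 50% chance of cleaning when rainy, a 60% chance of walking when sunny); every other value below, and the helper names, are illustrative choices that merely make each row sum to 1.

```python
states = ("Rainy", "Sunny")
observations = ("walk", "shop", "clean")

# Alice's initial belief leans towards rain; the exact numbers are assumed.
start_probability = {"Rainy": 0.6, "Sunny": 0.4}

transition_probability = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},  # 0.3 is stated in the text
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},  # assumed
}

emission_probability = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},  # 0.5 is stated in the text
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},  # 0.6 is stated in the text
}


def rows_sum_to_one(table, tol=1e-9):
    """Check that every conditional distribution in the table is normalized."""
    return all(abs(sum(row.values()) - 1.0) < tol for row in table.values())


def stationary_rainy(transition):
    """Equilibrium probability of "Rainy" for this two-state chain."""
    leave_rainy = transition["Rainy"]["Sunny"]
    leave_sunny = transition["Sunny"]["Rainy"]
    return leave_sunny / (leave_rainy + leave_sunny)


print(rows_sum_to_one(transition_probability), rows_sum_to_one(emission_probability))
print(round(stationary_rainy(transition_probability), 2))
```

Under these assumed values the equilibrium probability of rain differs from the initial belief, which matches the remark that the starting distribution is not the equilibrium one.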

Given a Markov transition matrix and an invariant distribution on the states, we can impose a probability measure on the set of subshifts. For example, consider the Markov chain given on the left on the states

estimate of the parameters of the HMM given the set of output sequences. No tractable algorithm is known for solving this problem exactly, but a local maximum likelihood can be derived efficiently using the

$$\operatorname{\mathbf{P}}\bigl(Y_n \in A \bigm| X_1 = x_1, \ldots, X_n = x_n\bigr) = \operatorname{\mathbf{P}}\bigl(Y_n \in A \bigm| X_n = x_n\bigr),$$

In the standard type of hidden Markov model considered here, the state space of the hidden variables is discrete, while the observations themselves can either be discrete (typically generated from a

and which allows data to be fused in a Markovian context and nonstationary data to be modeled. Alternative multi-stream data fusion strategies have also been proposed in the recent literature.

The parameter learning task in HMMs is to find, given an output sequence or a set of such sequences, the best set of state transition and emission probabilities. The task is usually to derive the

The task is to compute, given the model's parameters and a sequence of observations, the distribution over hidden states of the last latent variable at the end of the sequence, i.e. to compute

HMMs can be applied in many fields where the goal is to recover a data sequence that is not immediately observable (but other data that depend on the sequence are). Applications include:

In the hidden Markov models considered above, the state space of the hidden variables is discrete, while the observations themselves can either be discrete (typically generated from a

, at which state) the genie has drawn the third ball from. However, the observer can work out other information, such as the likelihood that the third ball came from each of the urns.

The task is to compute, as efficiently as possible and given the parameters of the model, the probability of a particular output sequence. This requires summation over all possible state sequences.
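
The sum over all state sequences does not have to be enumerated explicitly. As a hedged sketch, the forward recursion below computes the same probability in time linear in the sequence length, and a brute-force enumeration is included only to confirm that the two agree. The toy parameters and all names (`STATES`, `START`, `TRANS`, `EMIT`, `forward_likelihood`, `brute_force_likelihood`) are assumptions for illustration.

```python
from itertools import product

# Toy model (assumed values).
STATES = ("Rainy", "Sunny")
START = {"Rainy": 0.6, "Sunny": 0.4}
TRANS = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
EMIT = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def forward_likelihood(obs, states, start, trans, emit):
    """Probability of the observation sequence via the forward recursion."""
    # alpha[s] = P(observations so far, current state = s)
    alpha = {s: start[s] * emit[s][obs[0]] for s in states}
    for o in obs[1:]:
        alpha = {
            s: emit[s][o] * sum(alpha[r] * trans[r][s] for r in states)
            for s in states
        }
    return sum(alpha.values())


def brute_force_likelihood(obs, states, start, trans, emit):
    """Same quantity by explicit summation over every hidden state sequence."""
    total = 0.0
    for path in product(states, repeat=len(obs)):
        p = start[path[0]] * emit[path[0]][obs[0]]
        for prev, cur, o in zip(path, path[1:], obs[1:]):
            p *= trans[prev][cur] * emit[cur][o]
        total += p
    return total


obs = ("walk", "shop", "clean")
print(forward_likelihood(obs, STATES, START, TRANS, EMIT))
print(brute_force_likelihood(obs, STATES, START, TRANS, EMIT))
```

The brute-force version visits every one of the exponentially many paths, which is why the recursion is preferred for anything but very short sequences.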

The diagram below shows the general architecture of an instantiated HMM. Each oval shape represents a random variable that can adopt any of a number of values. The random variable

Alice knows the general weather trends in the area, and what Bob likes to do on average. In other words, the parameters of the HMM are known. They can be represented as follows in

$$\operatorname{\mathbf{P}}(Y_{t_0} \in A \mid \{X_t \in B_t\}_{t \leq t_0}) = \operatorname{\mathbf{P}}(Y_{t_0} \in A \mid X_{t_0} \in B_{t_0})$$

Hidden Markov models can also be generalized to allow continuous state spaces. Examples of such models are those where the Markov process over hidden variables is a

represents the change of the weather in the underlying Markov chain. In this example, there is only a 30% chance that tomorrow will be sunny if today is rainy. The

) rather than the directed graphical models of MEMMs and similar models. The advantage of this type of model is that it does not suffer from the so-called

A number of related tasks ask about the probability of one or more of the latent variables, given the model's parameters and a sequence of observations

possible values, modelled as a categorical distribution. (See the section below on extensions for other possibilities.) This means that for each of the

for short. It was originally described under the name "Infinite Hidden Markov Model" and was further formalized in "Hierarchical Dirichlet Processes".

(n − 1)-th ball. The choice of urn does not directly depend on the urns chosen before this single previous urn; therefore, this is called a

); however, in general, exact inference in HMMs with continuous latent variables is infeasible, and approximate methods must be used, such as the

This is similar to filtering but asks about the distribution of a latent variable somewhere in the middle of a sequence, i.e. to compute

From the perspective described above, this can be thought of as the probability distribution over hidden states for a point in time

transition probabilities. Note that the set of transition probabilities for transitions from any given state must sum to 1. Thus, the

problem of MEMMs, and thus may make more accurate predictions. The disadvantage is that training can be slower than for MEMMs.

In simple cases, such as the linear dynamical system just mentioned, exact inference is tractable (in this case, using the

adjacent states). The disadvantage of such models is that dynamic-programming algorithms for training them have an

In the second half of the 1980s, HMMs began to be applied to the analysis of biological sequences, in particular

, and this projection also projects the probability measure down to a probability measure on the subshifts on

This task requires finding a maximum over all possible state sequences, and can be solved efficiently by the Viterbi algorithm.

, which allows for a single observation to be conditioned on the corresponding hidden variables of a set of

, with a linear relationship among related variables and where all hidden and observed variables follow a

states for each chain), and therefore, learning in such a model is difficult: for a sequence of length

Because any transition probability can be determined once the others are known, there are a total of

, meaning that the observable part of the system can be affected by something infinitely in the past.

independent Markov chains, rather than a single Markov chain. It is equivalent to a single HMM, with

If the HMMs are used for time series prediction, more sophisticated Bayesian inference methods, like

There are two states, "Rainy" and "Sunny", but she cannot observe them directly, that is, they are

models, with special attention to the model assumptions and to their practical use is provided in

complexity. In practice, approximate techniques, such as variational approaches, could be used.

By definition of being a Markov model, an HMM has an additional requirement that the outcome of

emission parameters over all hidden states. On the other hand, if the observed variable is an

and other authors in the second half of the 1960s. One of the first applications of HMMs was

$\operatorname{\mathbf{P}}\bigl(Y_t \in A \mid X_t \in B_t\bigr)$

, not even multiple orders. Intuitively, this is because if one observes a long sequence of

on the arrows that are present in the diagram, the following state sequences are candidates:

observer knows the composition of the urns and has just observed a sequence of three balls,

To find an exact solution, a junction tree algorithm could be used, but it results in an

In its discrete form, a hidden Markov process can be visualized as a generalization of the

$\operatorname{\mathbf{P}}\bigl(Y_n \in A \mid X_n = x_n\bigr)$

An example is when the algorithm is applied to a Hidden Markov Network to determine

likely explanation for an observation sequence) can be solved efficiently using the Viterbi algorithm.

The hidden part of a hidden Markov model, whose observable states are non-Markovian.

The forward-backward algorithm is a good method for computing the smoothed values for all hidden state variables.
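
Smoothing can be illustrated with a short forward-backward sketch that returns, for every time step, the posterior distribution over hidden states given the whole observation sequence. The toy parameters and names (`STATES`, `START`, `TRANS`, `EMIT`, `forward_backward`) are assumptions for illustration, and the recursion is left unscaled, which is only adequate for short sequences.

```python
# Toy model (assumed values).
STATES = ("Rainy", "Sunny")
START = {"Rainy": 0.6, "Sunny": 0.4}
TRANS = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
EMIT = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def forward_backward(obs, states, start, trans, emit):
    """Smoothed posteriors P(state at t | all observations) for each t."""
    n = len(obs)
    # Forward pass: fwd[t][s] = P(obs[0..t], state at t = s).
    fwd = [{s: start[s] * emit[s][obs[0]] for s in states}]
    for t in range(1, n):
        fwd.append({
            s: emit[s][obs[t]] * sum(fwd[t - 1][r] * trans[r][s] for r in states)
            for s in states
        })
    # Backward pass: bwd[t][s] = P(obs[t+1..] | state at t = s).
    bwd = [None] * n
    bwd[n - 1] = {s: 1.0 for s in states}
    for t in range(n - 2, -1, -1):
        bwd[t] = {
            s: sum(trans[s][r] * emit[r][obs[t + 1]] * bwd[t + 1][r] for r in states)
            for s in states
        }
    evidence = sum(fwd[n - 1].values())  # probability of the whole sequence
    return [
        {s: fwd[t][s] * bwd[t][s] / evidence for s in states}
        for t in range(n)
    ]


for t, posterior in enumerate(forward_backward(("walk", "shop", "clean"), STATES, START, TRANS, EMIT)):
    print(t, posterior)
```

The posterior at the final time step coincides with the filtering distribution, since no later observations remain to be taken into account.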

$$N\left(M+\frac{M(M+1)}{2}\right)=\frac{NM(M+3)}{2}=O(NM^{2})$$
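
The count above can be verified with a few lines of arithmetic. The sketch below assumes, as the formula suggests, that each of the N hidden states carries M mean parameters plus M(M+1)/2 free entries of a symmetric covariance matrix; the function names are hypothetical.

```python
def gaussian_emission_parameter_count(n_states, dim):
    """Count emission parameters term by term: means plus covariance entries."""
    means = dim
    covariance_entries = dim * (dim + 1) // 2  # upper triangle incl. diagonal
    return n_states * (means + covariance_entries)


def closed_form_count(n_states, dim):
    """Closed form N * M * (M + 3) / 2; M * (M + 3) is always even."""
    return n_states * dim * (dim + 3) // 2


for n_states, dim in [(2, 3), (5, 10), (10, 40)]:
    print(n_states, dim,
          gaussian_emission_parameter_count(n_states, dim),
          closed_form_count(n_states, dim))
```

The quadratic growth in the observation dimension is the reason restricted covariance structures, such as diagonal matrices, are often preferred when training data are limited.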

A variant of the previously described discriminative model is the linear-chain

n-th ball depends only upon a random number and the choice of the urn for the

can be in, there is a transition probability from this state to each of the

associated with failing to reject the hypothesis for the output sequence.

The transition probabilities control the way the hidden state at time

Hidden Markov models were described in a series of statistical papers by

is a Markov process whose behavior is not directly observable ("hidden");

For some of the above problems, it may also be interesting to ask about

The curious thing is that the probability measure on the subshifts on

(MEMM), which models the conditional distribution of the states using

of the model and the learnability limits are still under exploration.

problems are associated with hidden Markov models, as outlined below.

A profile HMM modelling a multiple sequence alignment of proteins in

Figure 1. Probabilistic parameters of a hidden Markov model (example)

An extension of the previously described hidden Markov models with

6649:

6613:

4680:, a free hidden Markov model program for protein sequence analysis

4677:

3508:

1721:

44:

in which the observations are dependent on a latent (or "hidden")

6696:

Fitting HMM's with expectation-maximization – complete derivation

1775:

y1, y2 and y3 on the conveyor belt, the observer still cannot be

128:

cannot be observed directly, the goal is to learn about state of

6220:"Fundamental Limits for Learning Hidden Markov Model Parameters"

5461:

Wong, K. -C.; Chan, T. -M.; Peng, C.; Li, Y.; Zhang, Z. (2013).

3548:

3514:

2562:

2258: − 2 and before have no influence. This is called the

6758:

5426:

Wong, W.; Stamp, M. (2006). "Hunting for metamorphic engines".

3658:

2293:). The parameters of a hidden Markov model are of two types,

459:

Estimation of the parameters in an HMM can be performed using

5973:

IEEE Transactions on Acoustics, Speech, and Signal Processing

3733:

of observations and hidden states, or equivalently both the

3709:

Nowadays, inference in hidden Markov models is performed in

2741:

2103:

1808:. The entire system is that of a hidden Markov model (HMM).

6605:

Hidden Markov processes in the context of symbolic dynamics

4772:

Modeling Form for On-line Following of Musical Performances

3460:. What is the probability that a sequence drawn from some

3277:$\mathrm{P}\big(h_{t} \mid v_{1:t}\big)$

2962:

where the sum runs over all possible hidden-node sequences

7294:

Autoregressive conditional heteroskedasticity (ARCH) model

6363:"Multisensor triplet Markov chains and theory of evidence"

5613:"ChromHMM: Chromatin state discovery and characterization"

4797:

IEEE Transactions on Dielectrics and Electrical Insulation

3661:. Since then, they have become ubiquitous in the field of

3053:, this problem, too, can be handled efficiently using the

2734:

emission parameters. (In such a case, unless the value of

2553:-dimensional vector distributed according to an arbitrary

3749:), is modeled. The above algorithms implicitly assume a

470:

Hidden Markov models are known for their applications to

6822:

Independent and identically distributed random variables

5039:

Chatzis, Sotirios P.; Kosmopoulos, Dimitrios I. (2011).

4761:. Master's Thesis, MIT, Feb 1995, Program in Media Arts

4674:

free server and software for protein sequence searching

2338:

The hidden state space is assumed to consist of one of

1795:

Alice believes that the weather operates as a discrete

68:). An HMM requires that there be an observable process

7299:

Autoregressive integrated moving average (ARIMA) model

4170:

Markov chain). This extension has been widely used in


3953:, a straightforward Viterbi algorithm has complexity


sequence, the statistical significance indicates the


1767:. It can be described by the upper part of Figure 1.


whose behavior is not directly observable ("hidden");


Hidden Markov Models: Fundamentals and Applications

2215: }). The arrows in the diagram (often called a


6559:Bartolucci, F.; Farcomeni, A.; Pennoni, F. (2013).

5856:"Growth transformations for functions on manifolds"

4562:, then one would become increasingly sure that the

3436:, where the hidden states represent the underlying

2270:) only depends on the value of the hidden variable

224:must be "influenced" exclusively by the outcome of

6179:Gassiat, E.; Cleynen, A.; Robin, S. (2016-01-01).


3796:hierarchical Dirichlet process hidden Markov model

3721:Bayesian modeling of the transition probabilities

3713:settings, where the dependency structure enables

3423:The task, unlike the previous two, asks about the

3392:

3366:

3276:

3210:This problem can be handled efficiently using the


1077:be continuous-time stochastic processes. The pair


7181:Stochastic chains with memory of variable length

6690:A Revealing Introduction to Hidden Markov Models

5463:"DNA motif elucidation using belief propagation"

3846:. This uses an undirected graphical model (aka

2262:. Similarly, the value of the observed variable

2952:$P(Y)=\sum_{X}P(Y\mid X)\,P(X),$
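The sum over all hidden-state sequences in P(Y) grows exponentially with the sequence length, but the forward algorithm evaluates it in time linear in the length. The following is a minimal sketch, not a reference implementation. The state names, observation names and dictionary identifiers follow the article's weather example. The numeric probabilities are illustrative assumptions, chosen to be consistent with the stationary distribution {'Rainy': 0.57, 'Sunny': 0.43} quoted in the article. The function names `forward_likelihood` and `brute_force_likelihood` are hypothetical.

```python
from itertools import product

# Model parameters. The numeric values are assumptions for illustration only.
states = ("Rainy", "Sunny")
start_probability = {"Rainy": 0.6, "Sunny": 0.4}
transition_probability = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
emission_probability = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def forward_likelihood(observations):
    """Return P(Y) for a non-empty observation sequence via the forward recursion."""
    # alpha[s] = P(y(0), ..., y(t), x(t) = s), initialised at t = 0.
    alpha = {
        s: start_probability[s] * emission_probability[s][observations[0]]
        for s in states
    }
    for y in observations[1:]:
        # Propagate one step through the chain, then weight by the emission.
        alpha = {
            s: emission_probability[s][y]
            * sum(alpha[r] * transition_probability[r][s] for r in states)
            for s in states
        }
    # Marginalise over the final hidden state.
    return sum(alpha.values())


def brute_force_likelihood(observations):
    """Return P(Y) by summing P(Y | X) P(X) over every hidden sequence X."""
    total = 0.0
    for path in product(states, repeat=len(observations)):
        p = start_probability[path[0]] * emission_probability[path[0]][observations[0]]
        for t in range(1, len(observations)):
            p *= transition_probability[path[t - 1]][path[t]]
            p *= emission_probability[path[t]][observations[t]]
        total += p
    return total
```

Both functions compute the same quantity. The brute-force version enumerates every hidden sequence and is only practical for very short observation sequences, whereas the forward recursion costs on the order of N² operations per observation for N hidden states.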

2354:possible states of the hidden variable at time

2346:possible states that a hidden variable at time

2113:A similar example is further elaborated in the

6367:International Journal of Approximate Reasoning

6770:

5818:Bulletin of the American Mathematical Society

4615:$\Pr(A \mid B^{n}) \to \frac{2}{3}$


6436:Sotirios P. Chatzis, Dimitrios Kosmopoulos,

5890:; Petrie, T.; Soules, G.; Weiss, N. (1970).

5344:." Pattern recognition 22.3 (1989): 283-297.

4859:Ernst, Jason; Kellis, Manolis (March 2012).

3367:$P(x(k) \mid y(1),\dots,y(t))$

3200:$P(x(t) \mid y(1),\dots,y(t))$

3039:$X=x(0),x(1),\dots,x(L-1).$

1428:

1414:

1232:

1205:

6032:Hidden Markov Models for Speech Recognition

5340:Kundu, Amlan, Yang He, and Paramvir Bahl. "

2866:$Y=y(0),y(1),\dots,y(L-1)$

2746:Temporal evolution of a hidden Markov model

7309:Autoregressive–moving-average (ARMA) model

6777:

6763:

6755:

6744:Lecture on a Spreadsheet by Jason Eisner,

6603:Boyle, Mike; Petersen, Karl (2010-01-13),

6562:Latent Markov models for longitudinal data

2238:, given the values of the hidden variable

6692:by Mark Stamp, San Jose State University.


5971:(1975). "The DRAGON system—An overview".


4342:. If we "forget" the distinction between


3563:Document separation in scanning solutions


2309:is chosen given the hidden state at time

2108:Graphical representation of the given HMM


88:whose outcomes depend on the outcomes of

73:

53:

4382:, we project this space of subshifts on

4218:

2792:The probability of observing a sequence

2754:

2433:matrix of transition probabilities is a

2138:(with the model from the above diagram,

1456:{\displaystyle \{B_{t}\}_{t\leq t_{0}}.}

467:can be used to estimate the parameters.

6719:(an exposition using basic mathematics)

6224:IEEE Transactions on Information Theory

6001:IEEE Transactions on Information Theory

4736:

2222:From the diagram, it is clear that the

7615:Doob's martingale convergence theorems

7367:Constant elasticity of variance (CEV)

7357:Chan–Karolyi–Longstaff–Sanders (CKLS)

6598:

6596:

6147:(1st ed.), Cambridge, New York:

6058:; Alex Acero; Hsiao-Wuen Hon (2001).

5897:The Annals of Mathematical Statistics

5784:The Annals of Mathematical Statistics

3810:A different type of extension uses a

3486:or the Baldi–Chauvin algorithm. The

350:must be conditionally independent of

7:

6565:. Boca Raton: Chapman and Hall/CRC.

4810:Li, N; Stephens, M (December 2003).

4790:Satish L, Gururaj BI (April 2003). "

4509:is not created by a Markov chain on

4050:four adjacent states (or in general

2514:separate parameters, for a total of

2254: − 1); the values at time

2246:on the value of the hidden variable

2224:conditional probability distribution

989:{\displaystyle x_{1},\ldots ,x_{n},}

4428:into another space of subshifts on

3061:Probability of the latent variables

2785:Probability of an observed sequence

2602:{\displaystyle {\frac {M(M+1)}{2}}}

2219:) denote conditional dependencies.

7854:Skorokhod's representation theorem

7635:Law of large numbers (weak/strong)

5811:Baum, L. E.; Eagon, J. A. (1967).

4335:{\displaystyle \pi =(2/7,4/7,1/7)}

4150:total observations (i.e. a length-

3780:expectation-maximization algorithm

3745:of observations given states (the

3682:) or continuous (typically from a

3492:expectation-maximization algorithm

3222:

2555:multivariate Gaussian distribution

2289:) or continuous (typically from a

25:

7824:Martingale representation theorem

5854:Baum, L. E.; Sell, G. R. (1968).

3111:{\displaystyle y(1),\dots ,y(t).}

7869:Stochastic differential equation

7759:Doob's optional stopping theorem

7754:Doob–Meyer decomposition theorem

6294:"Factorial Hidden Markov Models"

6115:

5778:Baum, L. E.; Petrie, T. (1966).

5142:Expert Systems with Applications

5008:

4694:Hierarchical hidden Markov model

4181:Another recent extension is the

4039:{\displaystyle O(N^{K+1}\,K\,T)}

3530:Single-molecule kinetic analysis

1741:— state transition probabilities

1624:

1541:

1262:

1168:

828:

717:

7739:Convergence of random variables

7625:Fisher–Tippett–Gnedenko theorem

6701:A step-by-step tutorial on HMMs

5832:10.1090/S0002-9904-1967-11751-8

5261:10.1016/j.pneurobio.2021.102116

5169:Biophysical Reviews and Letters

4709:Stochastic context-free grammar

2484:possible values, governed by a

1407:and every family of Borel sets

7337:Binomial options pricing model

6667:10.1088/0953-8984/22/41/414105

6034:. Edinburgh University Press.

5860:Pacific Journal of Mathematics


4274:, with invariant distribution


3404:in the past, relative to time


2167: }). The random variable

2134:) is the hidden state at time

2090:{'Rainy': 0.57, 'Sunny': 0.43}

1718:Drawing balls from hidden urns


7804:Kolmogorov continuity theorem

7640:Law of the iterated logarithm

6361:Pieczynski, Wojciech (2007).

6348:10.1016/S1631-073X(02)02462-7

6327:Pieczynski, Wojciech (2002).

5757:10.1016/j.solener.2018.07.056

5714:10.1016/j.solener.2018.05.055

5679:10.1016/S0038-092X(98)00004-8

4757:Thad Starner, Alex Pentland.

4421:{\displaystyle A,B_{1},B_{2}}

4267:{\displaystyle A,B_{1},B_{2}}

3864:factorial hidden Markov model

3654:, starting in the mid-1970s.

2475:In addition, for each of the

2175:) is the observation at time

1116:{\displaystyle (X_{t},Y_{t})}

661:{\displaystyle (X_{n},Y_{n})}

463:. For linear chain HMMs, the

7809:Kolmogorov extension theorem

7488:Generalized queueing network

6996:Interacting particle systems

6472:10.1016/j.patcog.2012.04.018

6329:"Chaı̂nes de Markov Triplet"

6141:; Mitchison, Graeme (1998),

6099:10.1016/0022-2836(86)90289-5

6086:Journal of Molecular Biology

6030:; M. Jack; Y. Ariki (1990).

5575:10.1021/acs.nanolett.9b00590

5428:Journal in Computer Virology

5078:10.1016/j.patcog.2010.09.001

4770:B. Pardo and W. Birmingham.

3989:{\displaystyle O(N^{2K}\,T)}

3822:maximum entropy Markov model

3778:or extended versions of the

3617:discovery (DNA and proteins)

6941:Continuous-time random walk

6336:Comptes Rendus Mathématique

4828:10.1093/genetics/165.4.2213

4781:. AAAI-05 Proc., July 2005.

4720:Variable-order Markov model

4704:Sequential dynamical system

4699:Layered hidden Markov model

4375:{\displaystyle B_{1},B_{2}}

4103:{\displaystyle O(N^{K}\,T)}

3913:states (assuming there are

3862:Yet another variant is the

3610:Metamorphic virus detection

2609:parameters controlling the

2561:parameters controlling the

522:, musical score following,

8074:

7949:Extreme value theory (EVT)

7749:Doob decomposition theorem

7041:Ornstein–Uhlenbeck process

6812:Chinese restaurant process

6380:10.1016/j.ijar.2006.05.001

6149:Cambridge University Press

6060:Spoken Language Processing

5985:10.1109/TASSP.1975.1162650

5154:10.1016/j.eswa.2016.01.015

4212:

3630:Transportation forecasting

3620:DNA hybridization kinetics

3587:Alignment of bio-sequences

3413:forward-backward algorithm

3049:Applying the principle of

1471:The states of the process

343:{\displaystyle t<t_{0}}

8017:

7829:Optional stopping theorem

7630:Large deviation principle

7382:Heath–Jarrow–Morton (HJM)

7319:Moving-average (MA) model

7304:Autoregressive (AR) model

7129:Hidden Markov model (HMM)

7063:Schramm–Loewner evolution

6637:J. Phys.: Condens. Matter

6197:10.1007/s11222-014-9523-8

5524:10.1021/acssynbio.9b00010

5440:10.1007/s11416-006-0028-7

5181:10.1142/S1793048013300053

5119:10.1007/s10614-016-9579-y

3836:statistically independent

3725:Hidden Markov models are

3490:is a special case of the

2426:{\displaystyle N\times N}

277:and that the outcomes of

7744:Doléans-Dade exponential

7574:Progressively measurable

7372:Cox–Ingersoll–Ross (CIR)

6246:10.1109/TIT.2022.3213429

6185:Statistics and Computing

6014:10.1109/TIT.1975.1055384

5308:Domingos, Pedro (2015).

5249:Progress in Neurobiology

4984:10.1186/1471-2105-10-212

4689:Hidden semi-Markov model

4662:Conditional random field

3844:conditional random field

3743:conditional distribution

3739:transition probabilities

3680:categorical distribution

3499:Markov chain Monte Carlo

3458:statistical significance

3452:Statistical significance

2486:categorical distribution

2295:transition probabilities

2287:categorical distribution

1817:

941:{\displaystyle n\geq 1,}

452:{\displaystyle t=t_{0}.}

27:Statistical Markov model

7964:Mathematical statistics

7954:Large deviations theory

7784:Infinitesimal generator

7645:Maximal ergodic theorem

7564:Piecewise-deterministic

7166:Random dynamical system

7031:Markov additive process

6750:interactive spreadsheet

6544:Wiggins, L. M. (1973).

6313:10.1023/A:1007425814087

6112:(subscription required)

5911:10.1214/aoms/1177697196

5873:10.2140/pjm.1968.27.211

5797:10.1214/aoms/1177699147

5378:10.1126/science.1207598

5316:. Basic Books. p.

5107:Computational Economics

4920:Proceedings of the IEEE

4215:Subshift of finite type

3806:Discriminative approach

3765:concentration parameter

3688:linear dynamical system

3606:Sequence classification

3582:Handwriting recognition

3419:Most likely explanation

2472:transition parameters.

2226:of the hidden variable

2122:Structural architecture

2083:In this piece of code,

1736:— possible observations

615:{\displaystyle n\geq 1}

396:{\displaystyle t=t_{0}}

270:{\displaystyle t=t_{0}}

217:{\displaystyle t=t_{0}}

7799:Karhunen–Loève theorem

7734:Cameron–Martin formula

7698:Burkholder–Davis–Gundy

7093:Variance gamma process

6727:(by Narada Warakagoda)

6548:. Amsterdam: Elsevier.

5467:Nucleic Acids Research

4684:Hidden Bernoulli model


3772:part-of-speech tagging

3756:Dirichlet distribution

3747:emission probabilities

3737:of hidden states (the

3700:extended Kalman filter

3559:Part-of-speech tagging

3517:

3434:part-of-speech tagging

3394:

3393:{\displaystyle k<t}


2542:{\displaystyle N(M-1)}

2508:

2466:

2465:{\displaystyle N(N-1)}

2427:

2401:

2374:

2329:

2299:emission probabilities

2242:at all times, depends

2109:

2094:transition_probability

1901:transition_probability

1747:

1746:— output probabilities

1695:

1609:

1522:

1521:{\displaystyle X_{t})}

1492:

1457:

1401:

1378:

1377:{\displaystyle t_{0},}


520:part-of-speech tagging


108:in a known way. Since

102:

82:

62:

7929:Actuarial mathematics

7891:Uniform integrability

7886:Stratonovich integral

7814:Lévy–Prokhorov metric

7718:Marcinkiewicz–Zygmund

7605:Central limit theorem

7207:Gaussian random field

7036:McKean–Vlasov process

6956:Dyson Brownian motion

6817:Galton–Watson process

6711:(University of Leeds)

5512:ACS Synthetic Biology

4617:

4557:

4555:{\displaystyle B^{n}}

4530:

4504:

4475:

4449:

4423:

4377:

4337:

4269:

4222:

4191:triplet Markov models

4174:, in the modeling of

4165:

4145:

4125:

4105:

4065:

4041:

3991:

3948:

3928:

3908:

3906:{\displaystyle N^{K}}

3881:

3692:Gaussian distribution

3684:Gaussian distribution

3525:Computational finance


2400:{\displaystyle N^{2}}

2375:

2330:

2291:Gaussian distribution

2187:) ∈ {

2146:) ∈ {

2107:

1787:Weather guessing game

1725:

1696:

1610:

1523:

1493:

1491:{\displaystyle X_{n}}

1458:

1402:

1379:

1344:

1152:

1150:{\displaystyle X_{t}}

1118:

1072:

1070:{\displaystyle Y_{t}}

1045:

1043:{\displaystyle X_{t}}

1014:

991:

943:

910:

697:

695:{\displaystyle X_{n}}

663:

617:

587:

585:{\displaystyle Y_{n}}

560:

558:{\displaystyle X_{n}}

476:statistical mechanics


8048:Hidden Markov models

8004:Time series analysis

7959:Mathematical finance

7844:Reflection principle

7171:Regenerative process

6971:Fleming–Viot process

6786:Stochastic processes

6724:Hidden Markov Models

6716:Hidden Markov Models

4965:Newberg, L. (2009).

4652:Bayesian programming

4642:Baum–Welch algorithm

4566:

4539:

4513:

4487:

4458:

4432:

4386:

4346:

4278:

4232:

4183:triplet Markov model

4154:

4134:

4130:adjacent states and

4114:

4074:

4054:

4000:

3957:

3937:

3917:

3890:

3870:

3812:discriminative model

3674:General state spaces

3597:Activity recognition

3592:Time series analysis

3488:Baum–Welch algorithm

3484:Baum–Welch algorithm


2303:output probabilities

2098:emission_probability

1979:emission_probability

1703:emission probability


594:stochastic processes

569:

542:

465:Baum–Welch algorithm


18:Hidden Markov models

7999:Stochastic analysis

7839:Quadratic variation

7834:Prokhorov's theorem

7769:Feynman–Kac formula

7239:Markov random field

6887:Birth–death process

6659:2010JPCM...22O4105T

6464:2012PatRe..45.3985C

6452:Pattern Recognition

5749:2018SoEn..173..487M

5706:2018SoEn..170..174M

5671:1998SoEn...62..101M

5567:2019NanoL..19.2668S

5370:2011Sci...334..512S

5200:Human Brain Mapping

5060:2011PatRe..44..295C

5048:Pattern Recognition

4909:Lawrence R. Rabiner

4528:{\displaystyle A,B}

4502:{\displaystyle A,B}

4473:{\displaystyle A,B}

4447:{\displaystyle A,B}

3848:Markov random field

3827:logistic regression

3567:Machine translation

3467:false positive rate

3051:dynamic programming

2507:{\displaystyle M-1}

2373:{\displaystyle t+1}

2328:{\displaystyle t-1}

1125:hidden Markov model

670:hidden Markov model

516:gesture recognition

504:pattern recognition

34:hidden Markov model

7969:Probability theory

7849:Skorokhod integral

7819:Malliavin calculus

7402:Korn-Kreer-Lenssen

7286:Time series models

7249:Pitman–Yor process

6706:2017-08-13 at the

6399:2014-03-11 at the

6290:Jordan, Michael I.

6286:Ghahramani, Zoubin

6131:Durbin, Richard M.

5479:10.1093/nar/gkt574

5022:Sipos, I. Róbert.

4971:BMC Bioinformatics

4877:10.1038/nmeth.1906

4777:2012-02-06 at the

4657:Richard James Boys

4647:Bayesian inference


4187:theory of evidence

4160:

4140:

4120:

4110:running time, for

4100:

4060:

4036:

3986:

3943:

3923:

3903:

3876:

3829:(also known as a "

3735:prior distribution

3731:joint distribution

3652:speech recognition

3545:Speech recognition

3518:

3479:maximum likelihood


1707:output probability

1691:

1605:

1518:

1488:

1453:

1400:{\displaystyle A,}


524:partial discharges

500:information theory

461:maximum likelihood

449:

413:

393:

360:

340:

307:

287:

267:

234:

214:

181:

164:{\displaystyle Y.}

161:

138:

118:

98:

78:

58:

8035:

8034:

7989:Signal processing

7708:Doob's upcrossing

7703:Doob's martingale

7667:Engelbert–Schmidt

7610:Donsker's theorem

7544:Feller-continuous

7412:Rendleman–Bartter

7202:Dirichlet process

7119:Branching process

7088:Telegraph process

6981:Geometric process

6961:Empirical process

6951:Diffusion process

6807:Branching process

6802:Bernoulli process

6740:(by V. Petrushin)

6572:978-14-3981-708-7

6458:(11): 3985–3996.

6413:Lanchantin et al.

6069:978-0-13-022616-7

6062:. Prentice Hall.

6041:978-0-7486-0162-2

5364:(6055): 512–516.

5212:10.1002/hbm.25835

5206:(10): 3062–3085.

5175:(3n04): 191–211.

4911:(February 1989).

4725:Viterbi algorithm

4672:HHpred / HHsearch

4667:Estimation theory

4610:

4163:{\displaystyle T}

4143:{\displaystyle T}

4123:{\displaystyle K}

4063:{\displaystyle K}

3946:{\displaystyle T}

3926:{\displaystyle N}

3879:{\displaystyle K}

3791:Dirichlet process

3727:generative models

3572:Partial discharge

3462:null distribution

3446:Viterbi algorithm

3425:joint probability

3327:

3319:

3245:

3212:forward algorithm

3160:

3152:

3055:forward algorithm

2905:

2771:Viterbi algorithm

2697:

2659:

2613:, for a total of

2611:covariance matrix

2597:

2380:, for a total of

2115:Viterbi algorithm

2085:start_probability

2066:"clean"

2033:"Sunny"

2021:"clean"

1988:"Rainy"

1964:"Sunny"

1952:"Rainy"

1943:"Sunny"

1931:"Sunny"

1919:"Rainy"

1910:"Rainy"

1889:"Sunny"

1877:"Rainy"

1868:start_probability

1862:"clean"

1835:"Sunny"

1829:"Rainy"

1012:{\displaystyle A}

871:

861:

760:

750:

592:be discrete-time

496:signal processing

416:{\displaystyle X}

363:{\displaystyle Y}

310:{\displaystyle Y}

290:{\displaystyle X}

237:{\displaystyle X}

184:{\displaystyle Y}

141:{\displaystyle X}

121:{\displaystyle X}

101:{\displaystyle X}

81:{\displaystyle Y}

61:{\displaystyle X}

16:(Redirected from

8065:

8009:Machine learning

7896:Usual hypotheses

7779:Girsanov theorem

7764:Dynkin's formula

7529:Continuous paths

7437:Actuarial models

7377:Garman–Kohlhagen

7347:Black–Karasinski

7342:Black–Derman–Toy

7329:Financial models

7195:Fields and other

7124:Gaussian process

7073:Sigma-martingale

6877:Additive process


6306:(2/3): 245–273.

6299:Machine Learning

6282:

6276:

6273:

6267:

6264:

6258:

6257:

6239:

6230:(3): 1777–1794.


5790:(6): 1554–1563.


5561:(4): 2668–2673.

5550:

5544:

5543:

5518:(5): 1100–1111.


5080:. Archived from


4745:"Google Scholar"


3858:Other extensions

3816:generative model

3814:in place of the

3635:Solar irradiance

3554:Speech synthesis


2557:, there will be

2552:

2548:

2546:

2545:

2540:

2513:

2511:

2510:

2505:

2488:, there will be


2278:) (both at time

2241:

2237:

2178:

2137:

2099:

2095:

2091:

2086:

2079:

2076:

2073:

2070:

2067:

2064:

2061:

2058:

2055:

2054:"shop"

2052:

2049:

2046:

2043:

2042:"walk"

2040:

2037:

2034:

2031:

2028:

2025:

2022:

2019:

2016:

2013:

2010:

2009:"shop"

2007:

2004:

2001:

1998:

1997:"walk"


1856:"shop"

1854:

1851:

1850:"walk"


1384:every Borel set
