
{\displaystyle p_{a}(j|i,l_{e},l_{f})\leftarrow {\frac {1}{\lambda _{i,l_{e},l_{f}}}}\sum _{k}{\frac {t(e_{i}^{(k)}|f_{j}^{(k)})p_{a}(j|i,l_{e},l_{f})\delta (l_{e},l_{e}^{(k)})\delta (l_{f},l_{f}^{(k)})}{\sum _{j'}t(e_{i}^{(k)}|f_{j'}^{(k)})p_{a}(j'|i,l_{e},l_{f})}}}


Alignment models that use first-order dependencies, such as the HMM or IBM Models 4 and 5, produce better results than the other alignment methods. The main idea of the HMM is to predict the distance between subsequent source language positions. IBM Model 4, on the other hand, tries to predict the distance


In IBM Model 4, each word depends on the previously aligned word and on the word classes of the surrounding words. Some words tend to be reordered during translation more than others (e.g. adjective–noun inversion when translating Polish to English). Adjectives often get moved before the noun


IBM Model 5 reformulates IBM Model 4 by enhancing the alignment model with more training parameters in order to overcome the model deficiency. During translation in Model 3 and Model 4, there are no heuristics that prohibit placing an output word in a position that is already taken. In


Both Model 3 and Model 4 ignore whether an input position has already been chosen and whether probability mass is reserved for input positions outside the sentence boundaries. This is why the probabilities of all correct alignments do not sum to one in these two models, which are therefore called deficient models.


to train a translation model and an alignment model, starting with lexical translation probabilities and moving to reordering and word duplication. They underpinned the majority of statistical machine translation systems for almost twenty years starting in the early 1990s, until


{\displaystyle t(e_{x}|f_{y})\leftarrow {\frac {1}{\lambda _{y}}}\sum _{k,i,j}{\frac {t(e_{i}^{(k)}|f_{j}^{(k)})p_{a}(j|i,l_{e}^{(k)},l_{f}^{(k)})\delta (e_{x},e_{i}^{(k)})\delta (f_{y},f_{j}^{(k)})}{\sum _{j'}t(e_{i}^{(k)}|f_{j'}^{(k)})p_{a}(j'|i,l_{e}^{(k)},l_{f}^{(k)})}}}
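The fraction inside the Model 2 updates is the posterior probability that an English word is aligned to each foreign position. A minimal Python sketch of that quantity is given here, assuming dictionaries `t` and `p_a` keyed as shown in the comments. The function name `model2_posterior` and the `<NULL>` placeholder for position 0 are illustrative choices, not part of the original formulation.

```python
import math

NULL = "<NULL>"  # hypothetical placeholder for foreign position 0


def model2_posterior(e_word, i, f_words, l_e, t, p_a):
    """Posterior over j = 0..l_f that English word e_i aligns to foreign position j.

    t   maps (english_word, foreign_word) -> t(e|f)
    p_a maps (j, i, l_e, l_f)             -> p_a(j | i, l_e, l_f)
    """
    padded = [NULL] + list(f_words)
    l_f = len(f_words)
    scores = [
        t.get((e_word, f_word), 0.0) * p_a.get((j, i, l_e, l_f), 0.0)
        for j, f_word in enumerate(padded)
    ]
    z = sum(scores)  # the denominator: sum over j' of t * p_a
    if z == 0.0:
        raise ValueError("no alignment has positive probability")
    return [s / z for s in scores]


# Toy numbers, chosen only for illustration.
toy_t = {("rain", NULL): 0.2, ("rain", "明日"): 0.2, ("rain", "雨"): 0.6}
toy_p_a = {(j, 1, 1, 2): 1.0 / 3.0 for j in range(3)}
posterior = model2_posterior("rain", 1, ["明日", "雨"], 1, toy_t, toy_p_a)
```

Summing these posteriors over the corpus, then normalizing, gives the expected counts used by both updates.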


Model 5 it is important to place words only in free positions. This is done by tracking the number of free positions and allowing placement only in such positions. The distortion model is similar to that of IBM Model 4, but it is based on free positions. If


that precedes them. The word classes introduced in Model 4 solve this problem by conditioning the probability distributions on these classes. The result is a lexicalized model. Such a distribution can be defined as follows:


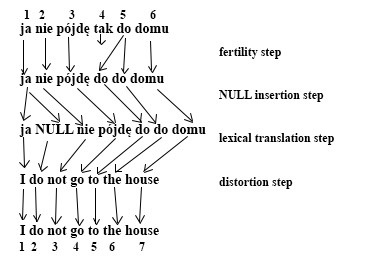

The last step is called distortion instead of alignment because it is possible to produce the same translation with the same alignment in different ways. For instance, in the example above there is another way to get the same alignment:


between subsequent target language positions. Since it was expected to achieve better alignment quality when using both types of such dependencies, HMM and Model 4 were combined in a log-linear manner in Model 6 as follows:


The number of inserted words depends on sentence length. This is why NULL token insertion is modeled as an additional step: the fertility step. This brings the IBM Model 3 translation process to four steps:

{\displaystyle P(S\mid E,A)=\prod _{i=1}^{I}\Phi _{i}!\,n(\Phi _{i}\mid e_{i})\cdot \prod _{j=1}^{J}t(f_{j}\mid e_{a_{j}})\cdot \prod _{j:a(j)\neq 0}^{J}d(j\mid a_{j},I,J)\,{\binom {J-\Phi _{0}}{\Phi _{0}}}p_{0}^{\Phi _{0}}p_{1}^{J}}


{\displaystyle t(e_{x}|f_{y})\leftarrow {\frac {t(e_{x}|f_{y})}{\lambda _{y}}}\sum _{k,i,j}{\frac {\delta (e_{x},e_{i}^{(k)})\delta (f_{y},f_{j}^{(k)})}{\sum _{j'}t(e_{i}^{(k)}|f_{j'}^{(k)})}}}
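As a minimal sketch of the Model 1 update, the following Python function runs a few EM iterations over a toy corpus. The function name `train_model1`, the `<NULL>` placeholder for foreign position 0, and the toy German-English sentence pairs are illustrative assumptions, not part of the original models.

```python
from collections import defaultdict

NULL = "<NULL>"  # hypothetical placeholder for foreign position 0


def train_model1(corpus, iterations=10):
    """Estimate t(e|f) with EM; corpus is a list of (english, foreign) token lists."""
    pairs = [(e_sent, [NULL] + f_sent) for e_sent, f_sent in corpus]
    e_vocab = {w for e_sent, _ in pairs for w in e_sent}
    # Uniform start: every entry is positive and sums to one over English words.
    uniform = 1.0 / len(e_vocab)
    t = defaultdict(lambda: uniform)
    for _ in range(iterations):
        count = defaultdict(float)  # expected number of (e_x, f_y) links
        total = defaultdict(float)  # lambda_y, the normalizer for each foreign word
        for e_sent, f_sent in pairs:
            for e_word in e_sent:
                z = sum(t[(e_word, f_word)] for f_word in f_sent)
                for f_word in f_sent:
                    c = t[(e_word, f_word)] / z
                    count[(e_word, f_word)] += c
                    total[f_word] += c
        t = defaultdict(
            float, {(e, f): c / total[f] for (e, f), c in count.items()}
        )
    return t


toy_corpus = [
    (["the", "house"], ["das", "Haus"]),
    (["the", "book"], ["das", "Buch"]),
    (["a", "book"], ["ein", "Buch"]),
]
t_table = train_model1(toy_corpus)
```

Each pass accumulates the summand of the update as an expected count and then divides by the per-foreign-word total, which plays the role of the normalization constant.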

{\displaystyle {\begin{cases}\max _{t'}\sum _{k}\sum _{i}\sum _{a^{(k)}}t(a^{(k)}|e^{(k)},f^{(k)})\ln t'(e_{i}^{(k)}|f_{a^{(k)}(i)}^{(k)})\\\sum _{x}t'(e_{x}|f_{y})=1\quad \forall y\end{cases}}}

464:

This generally happens because languages differ in grammar and conventions of speech. English sentences require a subject, and when no subject is available, English uses a


Does not explicitly model fertility: some foreign words tend to produce a fixed number of English words. For example, for German-to-English translation,

236:

The meaning of an alignment grows increasingly complicated as the model number increases. See Model 1 for the simplest and most understandable version.

{\displaystyle p_{6}(f,a\mid e)={\frac {\prod _{k=1}^{K}p_{k}(f,a\mid e)^{\alpha _{k}}}{\sum _{a',f'}\prod _{k=1}^{K}p_{k}(f',a'\mid e)^{\alpha _{k}}}}}

{\displaystyle p_{6}(f,a\mid e)={\frac {p_{4}(f,a\mid e)^{\alpha }\cdot p_{HMM}(f,a\mid e)}{\sum _{a',f'}p_{4}(f',a'\mid e)^{\alpha }\cdot p_{HMM}(f',a'\mid e)}}}
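A log-linear combination of this kind can be sketched in Python as follows. The sketch assumes the normalizing sum runs over a small, explicitly enumerated candidate set and that every model assigns a strictly positive probability to every candidate. Both are simplifications made for illustration, and the function name `log_linear_combine` is hypothetical.

```python
import math


def log_linear_combine(model_probs, weights):
    """Combine per-model probabilities as prod_k p_k^alpha_k, then renormalize.

    model_probs: one dict per model, mapping candidate -> probability (> 0)
    weights:     one exponent alpha_k per model
    """
    candidates = list(model_probs[0])
    log_scores = {
        c: sum(w * math.log(p[c]) for p, w in zip(model_probs, weights))
        for c in candidates
    }
    # Subtract the maximum before exponentiating to avoid underflow.
    top = max(log_scores.values())
    unnormalized = {c: math.exp(s - top) for c, s in log_scores.items()}
    z = sum(unnormalized.values())
    return {c: v / z for c, v in unnormalized.items()}


# Toy candidates "a1" and "a2" stand in for (f, a) pairs.
p4 = {"a1": 0.5, "a2": 0.5}
p_hmm = {"a1": 0.9, "a2": 0.1}
p6 = log_linear_combine([p4, p_hmm], [2.0, 1.0])
```

Working in log space keeps the products stable even when the component models differ by many orders of magnitude.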

1693:. Due to the simplistic assumptions, the algorithm has a closed-form, efficiently computable solution, which is the solution to the following equations:

471:. Japanese verbs do not have different forms for future and present tense, and the future tense is implied by the noun 明日 (tomorrow). Conversely, the

6749:

Wołk, K. (2015). "Noisy-Parallel and

Comparable Corpora Filtering Methodology for the Extraction of Bi-Lingual Equivalent Data at Sentence Level".

6688:. Proceedings of the Fourteenth Conference on Computational Natural Language Learning. Association for Computational Linguistics. pp. 98–106.

1690:

1404:

IBM Model 1 makes very simple assumptions about the statistical model so that the following algorithm has a closed-form solution.

6607:

3034:

6671:

FERNÁNDEZ, Pablo Malvar. Improving Word-to-word

Alignments Using Morphological Information. 2008. PhD Thesis. San Diego State University.

6557:

5732:

4714:

5624:

475:は and the grammar word だ (roughly "to be") do not correspond to any word in the English sentence. So, we can write the alignment as

23:

966:

489:

284:

5226:

6808:

6623:

5345:

4544:

The fertility problem is addressed in IBM Model 3. The fertility is modeled using probability distribution defined as:

70:

The IBM alignment models translation as a conditional probability model. For each source-language ("foreign") sentence

28:

5618:

denotes the number of free positions in the output, the IBM Model 5 distortion probabilities would be defined as:

2614:

2163:

2012:

1415:

6698:

KNIGHT, Kevin. A statistical MT tutorial workbook. Manuscript prepared for the 1999 JHU Summer

Workshop, 1999.

4665:

1075:

38:

proposed five models, and a model 6 was proposed later. The sequence of the six models can be summarized as:

31:

began to dominate. These models offer principled probabilistic formulation and (mostly) tractable inference.

3182:

3179:

Model 2 allows alignment to be conditional on sentence lengths. That is, we have a probability distribution

6813:

3102:

2140:

This could either be uniform or random. It is only required that every entry is positive, and for each

1171:

6472:

6147:

5551:

are distortion probability distributions of the words. The cept is formed by aligning each input word

6768:

6195:

2759:

1642:

1593:

1490:

1412:

If a dictionary is not provided at the start, but we have a corpus of English-foreign language pairs

6708:

Brown, Peter F. (1993). "The mathematics of statistical machine translation: Parameter estimation".

4550:

2921:

2686:

2234:

2088:

1705:

1539:

715:

610:

6141:

1999:

58:

6651:. Proceedings of the 11th International Workshop on Spoken Language Translation, Lake Tahoe, USA.

2587:

6784:

6758:

6652:

5077:

2762:-- it equals 1 if the two entries are equal, and 0 otherwise. The index notation is as follows:

6603:

6564:

6517:

values are typically different in terms of their orders of magnitude for HMM and IBM Model 4.

4519:

{\displaystyle p(e,a|f)={\frac {1/N}{(1+l_{f})^{l_{e}}}}\prod _{i=1}^{l_{e}}t(e_{i}|f_{a(i)})}
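The Model 1 probability can be evaluated directly for a given alignment. The Python sketch below does so, assuming the dictionary `t` is keyed by (English word, foreign word) and that position 0 is represented by a hypothetical `<NULL>` placeholder. The function name and the toy numbers are illustrative only.

```python
import math

NULL = "<NULL>"  # hypothetical placeholder for foreign position 0


def model1_joint_prob(e_words, f_words, alignment, t, max_len):
    """Return p(e, a | f) under Model 1.

    alignment[i - 1] is a(i), a position in 0..l_f (0 means no aligned word);
    max_len is N, the number of equally likely English sentence lengths.
    """
    l_e, l_f = len(e_words), len(f_words)
    padded = [NULL] + list(f_words)
    prob = (1.0 / max_len) / (1 + l_f) ** l_e
    for e_word, j in zip(e_words, alignment):
        prob *= t.get((e_word, padded[j]), 0.0)
    return prob


toy_t = {("rain", "雨"): 0.5, ("tomorrow", "明日"): 0.25}
p = model1_joint_prob(["rain", "tomorrow"], ["明日", "雨"], [2, 1], toy_t, 10)
```

The length term and the uniform alignment term do not depend on the words themselves, so only the product of dictionary entries distinguishes one alignment from another.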

603:

Given the above definition of alignment, we can define the statistical model used by Model 1:

133:

6123:

4628:

2741:

845:

6776:

4659:

token that can also have its fertility modeled using a conditional distribution defined as:

{\displaystyle p(e,a|f)={\frac {1}{N}}\prod _{i=1}^{l_{e}}t(e_{i}|f_{a(i)})p_{a}(a(i)|i,l_{e},l_{f})}

6724:

5594:

5554:

5488:

5104:

4608:

3319:

3292:

2889:

1144:

936:

818:

791:

688:

661:

252:

5515:

5459:

5430:

410:

4536:

are still normalization factors. See section 4.4.1 of for a derivation and an algorithm.

6772:

3006:

963:

Then, we generate an alignment uniformly in the set of all possible alignment functions

5191:

5171:

5151:

5131:

4588:

3272:

3252:

2986:

2856:

2834:

2812:

2790:

2768:

2143:

916:

771:

390:

216:

196:

176:

113:

93:

73:

595:

Future models will allow one English word to be aligned with multiple foreign words.

6802:

1483:(without alignment information), then the model can be cast into the following form:

465:

6788:

4625:

it usually translates. This model deals with dropping input words because it allows

3538:

The EM algorithm can still be solved in closed-form, giving the following algorithm:

2002:, then simplified. For a detailed derivation of the algorithm, see chapter 4 and.

472:

444:

We can align some English words to corresponding Japanese words, but not every one:

6597:

5208:

refer to the absolute lengths of the target and source sentences, respectively.

6649:

Polish-English Speech

Statistical Machine Translation Systems for the IWSLT 2014

6780:

6534:

4651:. But there is still an issue when adding words. For example, the English word

3031:

No length preference: The probability of each length of translation is equal:

6469:

The log-linear combination is used instead of linear combination because the

1141:, generate each one independently of every other English word. For the word

4713:

486:

Thus, we see that the alignment function is in general a function of type

3092:{\displaystyle \sum _{e{\text{ has length }}l}p(e|f)={\frac {1}{N}}}

6763:

6657:

{\displaystyle d_{1}(v_{j}-v_{\pi _{i,k-1}}\mid B(e_{j}),v_{max'})}

173:, the probability that we would generate English language sentence

6140:

is used to weight Model 4 relative to the

2851:

ranges over the entire vocabulary of

English words in the corpus;

2873:

ranges over the entire vocabulary of foreign words in the corpus.

6624:"CS288, Spring 2020, Lectur 05: Statistical Machine Translation"

4655:

is often inserted when negating. This issue generates a special

6144:. A log-linear combination of several models can be defined as

{\displaystyle d_{1}(v_{j}\mid B(e_{j}),v_{\odot _{i-1}},v_{max})}

35:

6558:"A Systematic Bayesian Treatment of the IBM Alignment Models"

2918:, any permutation of the English sentence is equally likely:

6686:

Computing optimal alignments for the IBM-3 translation model

4712:

1689:

In this form, this is exactly the kind of problem solved by

1984:

281:, an alignment for the sentence pair is a function of type

130:. The problem then is to find a good statistical model for

90:, we generate both a target-language ("English") sentence

5211:

See section 4.4.2 of for a derivation and an algorithm.

{\displaystyle \{1,\dots ,l_{e}\}\to \{0,1,\dots ,l_{f}\}}

{\displaystyle \{1,\dots ,l_{e}\}\to \{0,1,\dots ,l_{f}\}}

483:

where 0 means that there is no corresponding alignment.

436:. For example, consider the following pair of sentences

{\displaystyle \{1,\dots ,l_{e}\}\to \{0,1,\dots ,l_{f}\}}

34:

The original work on statistical machine translation at

{\displaystyle d_{1}(j-\odot _{[i-1]}\mid A(f_{[i-1]}),B(e_{j}))}

4605:, such distribution indicates to how many output words

658:, which can be interpreted as saying "the foreign word

387:. That is, we assume that the English word at location

2785:

ranges over English-foreign sentence pairs in corpus;

22:

are a sequence of increasingly complex models used in


The rest of Model 1 is unchanged. With that, we have


Sequence of models in statistical machine translation

1536:

learnable parameters: the entries of the dictionary

479:

1 -> 0; 2 -> 0; 3 -> 3; 4 -> 4; 5 -> 1
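The alignment for the sentence pair "It will surely rain tomorrow" and "明日 は きっと 雨 だ" can be represented as a plain mapping from English positions to foreign positions. The Python sketch below assumes 1-based positions with 0 meaning "no corresponding word"; the helper name `aligned_pairs` is hypothetical.

```python
english = ["It", "will", "surely", "rain", "tomorrow"]
japanese = ["明日", "は", "きっと", "雨", "だ"]

# a(i) for i = 1..5; position 0 means the English word has no counterpart.
alignment = {1: 0, 2: 0, 3: 3, 4: 4, 5: 1}


def aligned_pairs(e_words, f_words, a):
    """List (english_word, foreign_word or None) pairs for an alignment function a."""
    pairs = []
    for i, e_word in enumerate(e_words, start=1):
        j = a[i]
        pairs.append((e_word, f_words[j - 1] if j > 0 else None))
    return pairs


pairs = aligned_pairs(english, japanese, alignment)
```

Because the mapping runs from English positions to foreign positions, the foreign words は and だ simply never appear as values.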

607:Start with a "dictionary". Its entries are of form


{\displaystyle d_{1}(j-\pi _{i,k-1}\mid B(e_{j}))}


2882:There are several limitations to the IBM model 1.


2009:INPUT. a corpus of English-foreign sentence pairs


2829:ranges over words in foreign language sentences;

2611:is a normalization constant that makes sure each

2085:INITIALIZE. matrix of translations probabilities

4743:IBM Model 3 can be mathematically expressed as:

1709:

6596:Koehn, Philipp (2010). "4. Word-Based Models".

5485:functions map words to their word classes, and

815:, we first generate an English sentence length

407:is "explained" by the foreign word at location

6679:

6677:

6537:. SMT Research Survey Wiki. 11 September 2015

3249:, meaning "the probability that English word

8:

3142:

3112:

2983:for any permutation of the English sentence

2061:

2016:

1666:

1646:

1617:

1597:

1590:observable variables: the English sentences

1514:

1494:

1464:

1419:

1056:

1013:

1007:

970:

579:

536:

530:

493:

440:It will surely rain tomorrow -- 明日 は きっと 雨 だ

374:

331:

325:

288:

45:Model 2: additional absolute alignment model

6725:"Term Alignment. State of the Art Overview"

2005:In short, the EM algorithm goes as follows:

6762:

6656:

6563:. University of Cambridge. Archived from


3289:, when the English sentence is of length


2671:{\displaystyle \sum _{x}t(e_{x}|f_{y})=1}


2220:{\displaystyle \sum _{x}t(e_{x}|f_{y})=1}


2073:{\displaystyle \{(e^{(k)},f^{(k)})\}_{k}}


1476:{\displaystyle \{(e^{(k)},f^{(k)})\}_{k}}


6556:Yarin Gal; Phil Blunsom (12 June 2013).

{\displaystyle n(\varnothing \mid NULL)}

3316:, and the foreign sentence is of length

2807:ranges over words in English sentences;

1487:fixed parameters: the foreign sentences

1134:{\displaystyle e_{1},e_{2},...e_{l_{e}}}

249:Given any foreign-English sentence pair

6526:

4675:

913:. In particular, it does not depend on

51:Model 4: added relative alignment model

3242:{\displaystyle p_{a}(j|i,l_{e},l_{f})}

5148:is assigned a fertility distribution

768:After being given a foreign sentence

7:

6591:

6589:

6587:

6585:

2886:No fluency: Given any sentence pair

5621:For the initial word in the cept:

61:alignment model in a log linear way

6120:where the interpolation parameter

5223:For the initial word in the cept:

5082:

5035:

5007:

4996:

4987:

4820:

4802:

3148:{\displaystyle l\in \{1,2,...,N\}}

1975:

1691:expectation–maximization algorithm

685:is translated to the English word

54:Model 5: fixed deficiency problem.

14:

6636:from the original on 24 Oct 2020.

1639:latent variables: the alignments

1232:Together, we have the probability

1221:{\displaystyle t(e_{i}|f_{a(i)})}

57:Model 6: Model 4 combined with a

6730:. Katholieke Universiteit Leuven

6510:{\displaystyle P_{r}(f,a\mid e)}

6185:{\displaystyle p_{k}(f,a\mid e)}

3166:is usually translated to one of

6599:Statistical Machine Translation

6229:{\displaystyle k=1,2,\dotsc ,K}

2160:, the probability sums to one:

1974:

1678:{\displaystyle \{a^{(k)}\}_{k}}

1629:{\displaystyle \{e^{(k)}\}_{k}}

1526:{\displaystyle \{f^{(k)}\}_{k}}

1072:Finally, for each English word

24:statistical machine translation

6602:. Cambridge University Press.


{\displaystyle n(\phi \mid f)}


2976:{\displaystyle p(e|f)=p(e'|f)}
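This permutation invariance can be checked numerically. Summing the Model 1 probability over all alignments factorizes into a product over English words of a sum over foreign positions, so word order cannot matter. The Python sketch below assumes a toy dictionary `toy_t` and a hypothetical `<NULL>` placeholder for position 0; the function name is illustrative.

```python
import math
from itertools import permutations

NULL = "<NULL>"  # hypothetical placeholder for foreign position 0


def model1_sentence_prob(e_words, f_words, t, max_len):
    """Return p(e | f) under Model 1, marginalized over all alignments."""
    padded = [NULL] + list(f_words)
    prob = (1.0 / max_len) / (1 + len(f_words)) ** len(e_words)
    for e_word in e_words:
        # Sum over every foreign position, including position 0.
        prob *= sum(t.get((e_word, f_word), 0.0) for f_word in padded)
    return prob


toy_t = {
    ("the", "das"): 0.7,
    ("the", "Haus"): 0.1,
    ("house", "das"): 0.2,
    ("house", "Haus"): 0.8,
}
probs = [
    model1_sentence_prob(list(order), ["das", "Haus"], toy_t, 10)
    for order in permutations(["the", "house"])
]
```

Every ordering of the English words yields the same value up to floating-point rounding, which is why Model 1 alone cannot prefer a fluent word order.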


2727:{\displaystyle t(e_{x}|f_{y})}


2275:{\displaystyle t(e_{x}|f_{y})}


2129:{\displaystyle t(e_{x}|f_{y})}


1580:{\displaystyle t(e_{i}|f_{j})}


756:{\displaystyle t(e_{i}|f_{j})}

750:

736:

722:

651:{\displaystyle t(e_{i}|f_{j})}


48:Model 3: extra fertility model

1:

5578:to at least one output word.

6684:Schoenemann, Thomas (2010).

6647:Wołk K., Marasek K. (2014).

5101:represents the fertility of

3168:to the, for the, to a, for a

2604:{\displaystyle \lambda _{y}}

42:Model 1: lexical translation

3269:is aligned to foreign word

1168:, generate it according to

6830:

6781:10.7494/csci.2015.16.2.169

29:neural machine translation

6710:Computational Linguistics

5094:{\displaystyle \Phi _{i}}

213:given a foreign sentence

4739:I do not go to the house

4529:{\displaystyle \lambda }

3162:is usually omitted, and

166:{\displaystyle p(e,a|f)}

6133:{\displaystyle \alpha }

5729:For additional words:

4644:{\displaystyle \phi =0}

2751:{\displaystyle \delta }

906:{\displaystyle Uniform}


5342:For additional words:


4725:ja NULL nie pôjde tak

4717:

4700:

4645:

4619:

4599:

4585:For each foreign word


3048: has length


2738:In the above formula,


2000:Lagrangian multipliers

1998:This can be solved by

1992:

1679:

1630:

1581:

1527:

1477:

1408:Learning from a corpus


5611:{\displaystyle v_{j}}

5573:

5571:{\displaystyle f_{i}}

5546:

5507:

5505:{\displaystyle e_{j}}

5480:

5451:

5420:

5335:

5203:

5183:

5163:

5143:

5123:

5121:{\displaystyle e_{i}}

5096:

5066:

4905:

4842:

4780:

4716:

4701:

4646:

4620:

4618:{\displaystyle \phi }

4600:

4577:

4531:

4511:

3963:

3533:

3395:

3338:

3336:{\displaystyle l_{f}}

3311:

3309:{\displaystyle l_{e}}

3284:

3264:

3244:

3150:

3094:

3023:

2998:

2978:

2913:

2911:{\displaystyle (e,f)}


1161:{\displaystyle e_{i}}

1136:

1064:

955:

953:{\displaystyle l_{f}}

928:

908:

842:uniformly in a range

837:

835:{\displaystyle l_{e}}

810:

808:{\displaystyle l_{f}}

783:

758:

707:

705:{\displaystyle e_{i}}

680:

678:{\displaystyle f_{j}}

653:

587:

477:

446:

438:

431:

402:

382:

276:

274:{\displaystyle (e,f)}


5544:{\displaystyle f_{}}

5516:

5489:

5478:{\displaystyle B(e)}

5460:

5449:{\displaystyle A(f)}


Kronecker delta function


429:{\displaystyle a(i)}


20:IBM alignment models

6809:Machine translation

6773:2015arXiv151004500W

6142:hidden Markov model

5128:, each source word


3021:{\displaystyle e'}


451:will -> ?


66:Mathematical setup

6723:Vulić I. (2010).

6609:978-0-521-87415-1

6454:

6351:

6105:

5980:

5201:{\displaystyle J}

5181:{\displaystyle I}

5161:{\displaystyle n}

5141:{\displaystyle s}

5016:

4598:{\displaystyle j}

4504:

4369:

4073:

4071:

3956:

3821:

3599:

3597:

3282:{\displaystyle j}

3262:{\displaystyle i}

3087:

3049:

3038:

2996:{\displaystyle e}

2866:{\displaystyle y}

2844:{\displaystyle x}

2822:{\displaystyle j}

2800:{\displaystyle i}

2778:{\displaystyle k}

2618:

2569:

2492:

2379:

2377:

2167:

2153:{\displaystyle y}

1919:

1743:

1733:

1723:

1708:

1321:

926:{\displaystyle f}

781:{\displaystyle f}

712:with probability

599:Statistical model

460:tomorrow -> 明日

454:surely -> きっと

400:{\displaystyle i}

226:{\displaystyle f}

206:{\displaystyle a}

193:and an alignment

186:{\displaystyle e}

123:{\displaystyle a}

110:and an alignment

103:{\displaystyle e}

83:{\displaystyle f}

6821:

6793:

6792:

6766:

6751:Computer Science


448:it -> ?

6757:(2): 169–184.

6712:(19): 263–311.

245:Word alignment

6570:on 4 Mar 2016
