2267:

the plane, but a person who arrives after cannot, a discontinuity and asymmetry that makes arriving slightly late much more costly than arriving slightly early. In drug dosing, the cost of too little drug may be lack of efficacy, while the cost of too much may be tolerable toxicity, another example of asymmetry. Traffic, pipes, beams, ecologies, climates, etc. may tolerate increased load or stress with little noticeable change up to a point, then become backed up or break catastrophically. These situations, Deming and Taleb argue, are common in real-life problems, perhaps more common than the classical smooth, continuous, symmetric, differentiable cases.

374:

- it minimises the average loss over all possible states of nature θ, over all possible (probability-weighted) data outcomes. One advantage of the Bayesian approach is that one need only choose the optimal action under the actual observed data to obtain a uniformly optimal one, whereas choosing the actual frequentist optimal decision rule as a function of all possible observations is a much more difficult problem. Of equal importance, though, the Bayes Rule reflects consideration of loss outcomes under different states of nature, θ.


$$\rho(\pi^{*},a)=\int_{\Theta}\int_{\mathbf{X}}L(\theta,a(\mathbf{x}))\,\mathrm{d}P(\mathbf{x}\vert\theta)\,\mathrm{d}\pi^{*}(\theta)=\int_{\mathbf{X}}\int_{\Theta}L(\theta,a(\mathbf{x}))\,\mathrm{d}\pi^{*}(\theta\vert\mathbf{x})\,\mathrm{d}M(\mathbf{x})$$

1778:

2266:

argue that empirical reality, not nice mathematical properties, should be the sole basis for selecting loss functions, and real losses often are not mathematically nice and are not differentiable, continuous, symmetric, etc. For example, a person who arrives before a plane gate closure can still make

1962:

Sound statistical practice requires selecting an estimator consistent with the actual acceptable variation experienced in the context of a particular applied problem. Thus, in the applied use of loss functions, selecting which statistical method to use to model an applied problem depends on knowing

553:

data that were elicited through computer-assisted interviews with decision makers. Among other things, he constructed objective functions to optimally distribute budgets for 16 Westphalian universities and the

European subsidies for equalizing unemployment rates among 271 German regions.

2251:

The choice of a loss function is not arbitrary. It is very restrictive and sometimes the loss function may be characterized by its desirable properties. Among the choice principles are, for example, the requirement of completeness of the class of symmetric statistics in the case of

531:

In many applications, objective functions, including loss functions as a particular case, are determined by the problem formulation. In other situations, the decision maker’s preference must be elicited and represented by a scalar-valued function (also called

1759:

2417:

Constructing Scalar-Valued

Objective Functions. Proceedings of the Third International Conference on Econometric Decision Models: Constructing Scalar-Valued Objective Functions, University of Hagen, held in Katholische Akademie Schwerte September 5–8,

216:, i.e., the loss associated with a decision should be the difference between the consequences of the best decision that could have been made had the underlying circumstances been known and the decision that was in fact taken before they were known.
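The regret idea described here can be sketched numerically. The following is a minimal illustration, assuming a small payoff table with made-up numbers; the action and state names are hypothetical and not taken from the article.

```python
def regret_table(payoffs):
    """Return regret[action][state]: best payoff achievable in that state
    minus the payoff of the action actually taken."""
    states = next(iter(payoffs.values())).keys()
    best = {s: max(row[s] for row in payoffs.values()) for s in states}
    return {a: {s: best[s] - row[s] for s in states} for a, row in payoffs.items()}


# Hypothetical payoffs, for illustration only.
payoffs = {
    "carry umbrella": {"rain": 5, "sun": 2},
    "leave umbrella": {"rain": -10, "sun": 4},
}
regret = regret_table(payoffs)
for action, row in regret.items():
    print(action, row)
```

Each entry is zero exactly when the action would have been the best choice had the state been known in advance.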

1421:

639:

2451:

Constructing and

Applying Objective Functions. Proceedings of the Fourth International Conference on Econometric Decision Models Constructing and Applying Objective Functions, University of Hagen, held in Haus Nordhelle, August, 28 — 31,

$$\underset{\delta}{\operatorname{arg\,min}}\ \operatorname{E}_{\theta\in\Theta}[R(\theta,\delta)]=\underset{\delta}{\operatorname{arg\,min}}\ \int_{\theta\in\Theta}R(\theta,\delta)\,p(\theta)\,d\theta.$$
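As a rough sketch of the average-risk criterion above, the integral can be replaced by a weighted sum when the parameter takes finitely many values. The risk table, the prior weights, and the names `rule_a` and `rule_b` are hypothetical, chosen only for illustration.

```python
def average_risk(risk_row, prior):
    """Prior-weighted average of R(theta, delta) over a finite parameter set."""
    return sum(prior[theta] * r for theta, r in risk_row.items())


def bayes_choice(risk, prior):
    """Pick the decision rule with the lowest prior-weighted average risk."""
    return min(risk, key=lambda rule: average_risk(risk[rule], prior))


# Hypothetical risk values R(theta, delta) and prior p(theta).
risk = {
    "rule_a": {"theta1": 1.0, "theta2": 4.0},
    "rule_b": {"theta1": 2.0, "theta2": 2.5},
}
prior = {"theta1": 0.8, "theta2": 0.2}

best_rule = bayes_choice(risk, prior)
print(best_rule, average_risk(risk[best_rule], prior))
```

With this prior most of the weight sits on `theta1`, so the rule that does well there is selected even though its risk under `theta2` is larger.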

1323:

1637:

1651:

of the uncertain variable of interest, such as end-of-period wealth. Since the value of this variable is uncertain, so is the value of the utility function; it is the expected value of utility that is maximized.

544:

showed that the most usable objective functions — quadratic and additive — are determined by a few indifference points. He used this property in the models for constructing these objective functions from either

1546:

1676:

6083:

1332:

1982:

is the estimator that minimizes expected loss experienced under the absolute-difference loss function. Still different estimators would be optimal under other, less common circumstances.
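The link between the loss function and the preferred location estimate can be checked on a small sample. This is a minimal sketch on made-up data with one large outlier; it compares the sample mean and the sample median under average squared and average absolute loss.

```python
import statistics


def average_loss(estimate, data, loss):
    """Average loss of a single location estimate over the sample."""
    return sum(loss(x - estimate) for x in data) / len(data)


def squared(e):
    return e * e


# Made-up sample with one large outlier.
data = [1.0, 2.0, 3.0, 4.0, 100.0]
mean = statistics.mean(data)
median = statistics.median(data)

print("squared loss: ", average_loss(mean, data, squared), average_loss(median, data, squared))
print("absolute loss:", average_loss(mean, data, abs), average_loss(median, data, abs))
```

On this sample the mean has the lower average squared loss and the median has the lower average absolute loss, which matches the statement above.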

838:

299:

540:

has highlighted in his Nobel Prize lecture. The existing methods for constructing objective functions are collected in the proceedings of two dedicated conferences. In particular,

473:

, the expected value of the quadratic form is used. Because errors are squared, the quadratic loss gives outliers far more weight than typical data points, so alternatives like the

2238:

1234:

515:

5925:

$$R(\theta,\delta)=\operatorname{E}_{\theta}L\big(\theta,\delta(X)\big)=\int_{X}L\big(\theta,\delta(x)\big)\,\mathrm{d}P_{\theta}(x).$$
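The risk integral above can be approximated by simulation. The sketch below is an illustration under stated assumptions, not a general method: it assumes normally distributed observations with unit variance, uses the sample mean as the decision rule and squared error as the loss, and the function names are hypothetical.

```python
import random


def monte_carlo_risk(theta, decision_rule, loss, n_obs=10, n_trials=20000, seed=0):
    """Estimate R(theta, delta) by averaging the loss over simulated data sets.

    Assumes X consists of n_obs independent Normal(theta, 1) draws.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_trials):
        x = [rng.gauss(theta, 1.0) for _ in range(n_obs)]
        total += loss(theta, decision_rule(x))
    return total / n_trials


def sample_mean(x):
    return sum(x) / len(x)


def squared_loss(theta, estimate):
    return (theta - estimate) ** 2


estimated_risk = monte_carlo_risk(2.0, sample_mean, squared_loss)
print(estimated_risk)  # should be close to the theoretical value 1 / n_obs = 0.1
```

For this setting the exact risk is the variance of the sample mean, 1/n, so the simulated value gives a quick sanity check.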

5331:

2082:

2131:

350:. In these problems, even in the absence of uncertainty, it may not be possible to achieve the desired values of all target variables. Often loss is expressed as a


124:, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as

1989:, the objective function is simply expressed as the expected value of a monetary quantity, such as profit, income, or end-of-period wealth. For

236:, as well as being symmetric: an error above the target causes the same loss as the same magnitude of error below the target. If the target is


308:; the value of the constant makes no difference to a decision, and can be ignored by setting it equal to 1. This is also known as the


In some contexts, the value of the loss function itself is a random quantity because it depends on the outcome of a random variable


We first define the expected loss in the frequentist context. It is obtained by taking the expected value with respect to the


Tangian, Andranik (2002). "Constructing a quasi-concave quadratic objective function from interviewing a decision maker".

1549:

117:, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy.


1170:(θ | x) is the posterior distribution, and the order of integration has been changed. One then should choose the action


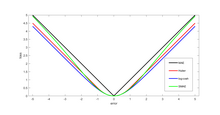


: Choose the decision rule with the lowest worst loss, that is, minimize the worst-case (maximum possible) loss:


$$\underset{\delta}{\operatorname{arg\,min}}\ \max_{\theta\in\Theta}\ R(\theta,\delta).$$
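A minimal sketch of the minimax criterion above, assuming a finite set of parameter values and decision rules. The risk table and the rule names are hypothetical.

```python
def worst_case_risk(risk_row):
    """Largest risk a rule can incur over all parameter values."""
    return max(risk_row.values())


def minimax_choice(risk):
    """Pick the decision rule whose worst-case risk is smallest."""
    return min(risk, key=lambda rule: worst_case_risk(risk[rule]))


# Hypothetical risk values R(theta, delta).
risk_table = {
    "rule_a": {"theta1": 1.0, "theta2": 4.0},
    "rule_b": {"theta1": 2.0, "theta2": 2.5},
}

minimax_rule = minimax_choice(risk_table)
print(minimax_rule, worst_case_risk(risk_table[minimax_rule]))
```

Here `rule_b` is chosen because its worst case (2.5) is smaller than that of `rule_a` (4.0), even though `rule_a` does better under `theta1`.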


Detailed information on mathematical principles of the loss function choice is given in

Chapter 2 of the book

246:

232:

techniques. It is often more mathematically tractable than other loss functions because of the properties of


or average is the statistic for estimating location that minimizes the expected loss experienced under the

403:

152:, it is used in an insurance context to model benefits paid over premiums, particularly since the works of


Waud, Roger N. (1976). "Asymmetric

Policymaker Utility Functions and Optimal Policy under Uncertainty".


$$R(\theta,\hat{\theta})=\operatorname{E}_{\theta}(\theta-\hat{\theta})^{2}.$$


the losses that will be experienced from being wrong under the problem's particular circumstances.


Frisch, Ragnar (1969). "From utopian theory to practical applications: the case of econometrics".


Tangian, Andranik (2004). "Redistribution of university budgets with respect to the status quo".


2582:"Multi-criteria optimization of regional employment policy: A simulation analysis for Germany"


Tangian, Andranik (2004). "A model for ordinally constructing additive objective functions".

592:

of the loss function; however, this quantity is defined differently under the two paradigms.


in the deviations of the variables of interest from their desired values; this approach is


2454:. Lecture Notes in Economics and Mathematical Systems. Vol. 510. Berlin: Springer.

2420:. Lecture Notes in Economics and Mathematical Systems. Vol. 453. Berlin: Springer.


2159:. The squared loss has the disadvantage that it has the tendency to be dominated by


. However, the absolute loss has the disadvantage that it is not differentiable at

1986:

526:

129:

101:

is either a loss function or its opposite (in specific domains, variously called a


2001:, and the objective function to be optimized is the expected value of utility.

1664:

makes a choice using an optimality criterion. Some commonly used criteria are:


In economics, decision-making under uncertainty is often modelled using the

1424:

320:

133:

5309:

$$L(\theta,\hat{\theta})=(\theta-\hat{\theta})^{2},$$

370:, Log-Cosh and SMAE losses are used when the data has many large outliers.
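To make the contrast between quadratic and outlier-robust losses concrete, here is a minimal sketch of four losses as functions of the error a. The Huber threshold `delta=1.0` is an arbitrary illustrative choice, and the log-cosh form is rewritten only for numerical stability; SMAE is not covered here.

```python
import math


def squared_loss(a):
    return a * a


def absolute_loss(a):
    return abs(a)


def huber_loss(a, delta=1.0):
    """Quadratic for small errors, linear beyond the threshold delta."""
    if abs(a) <= delta:
        return 0.5 * a * a
    return delta * (abs(a) - 0.5 * delta)


def log_cosh_loss(a):
    """log(cosh(a)), written as |a| + log(1 + exp(-2|a|)) - log(2) to avoid overflow."""
    a = abs(a)
    return a + math.log1p(math.exp(-2.0 * a)) - math.log(2.0)


for error in (0.5, 3.0, 10.0):
    print(error, squared_loss(error), absolute_loss(error),
          huber_loss(error), log_cosh_loss(error))
```

For large errors the Huber and log-cosh values grow roughly linearly while the squared loss grows quadratically, which is why a few large outliers dominate a squared-loss total.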


2831:"Asymmetric Loss Functions and the Rationality of Expected Stock Returns"

1771:

Choose the decision rule with the lowest average loss (i.e. minimize the

$$R(f,\hat{f})=\operatorname{E}\|f-\hat{f}\|^{2}.$$


observations, the principle of complete information, and some others.

148:, it is the penalty for an incorrect classification of an example. In


1768:: Choose the decision rule which satisfies an invariance requirement.

377:

Effect of using different loss functions, when the data has outliers.

324:

160:, the loss is the penalty for failing to achieve a desired value. In

85:(sometimes also called an error function) is a function that maps an

2968:

2829:

Aretz, Kevin; Bartram, Söhnke M.; Pope, Peter F. (April–June 2011).

2240:), the final sum tends to be the result of a few particularly large

1178:. In the latter equation, the integrand inside dx is known as the

2721:. Springer Texts in Statistics (2nd ed.). New York: Springer.

93:

intuitively representing some "cost" associated with the event. An


function) in a form suitable for optimization — the problem that

372:

166:

5879:

3074:

1971:

881:

In a

Bayesian approach, the expectation is calculated using the


Klebanov, B.; Rachev, Svetlozat T.; Fabozzi, Frank J. (2009).

1186:

also minimizes the overall Bayes Risk. This optimal decision,

26:

2983:

2932:

Horowitz, Ann R. (1987). "Loss functions and public policy".

66:

Mathematical relation assigning a probability event to a cost

1174:

which minimises this expected loss, which is referred to as

2027:, it is desirable to have a loss function that is globally

$$L(f,\hat{f})=\|f-\hat{f}\|_{2}^{2}\,,$$

588:

statistical theory involve making a decision based on the

809:

is a vector of observations stochastically drawn from a

343:

theory, which is based on the quadratic loss function.

2189:

1446:

itself. The loss function is typically chosen to be a

132:

in the middle of the 20th century. In the context of

120:

In statistics, typically a loss function is used for


629:. Here the decision rule depends on the outcome of

210:, the loss function should be based on the idea of

2244:-values, rather than an expression of the average


2870:Statistical decision theory and Bayesian Analysis

2641:Statistical decision theory and Bayesian Analysis

2004:Other measures of cost are possible, for example

840:is the expectation over all population values of

805:is a fixed but possibly unknown state of nature,

1712:

865:) and the integral is evaluated over the entire

228:loss function is common, for example when using

4444:Multivariate adaptive regression splines (MARS)

206:argued that using non-Bayesian methods such as

2907:"Making monetary policy: Objectives and rules"

2038:Two very commonly used loss functions are the

1997:agents, loss is measured as the negative of a

1970:". Under typical statistical assumptions, the

5325:

2999:

1182:, and minimising it with respect to decision

755:

730:

707:

682:

164:, the function is mapped to a monetary loss.

8:

2788:. New York: Nova Scientific Publishers, Inc.

1616:

1594:

1520:

1498:

1114:

1002:

$\operatorname{E}_{\theta}$

346:The quadratic loss function is also used in

2872:(2nd ed.). New York: Springer-Verlag.

2644:(2nd ed.). New York: Springer-Verlag.


2786:Robust and Non-Robust Models in Statistics

290:

89:or values of one or more variables onto a

2449:Tangian, Andranik; Gruber, Josef (2002).

2415:Tangian, Andranik; Gruber, Josef (1997).


521:Constructing loss and objective functions

490:

489:

487:

443:

442:

414:

413:

405:

394:, a frequently used loss function is the

348:linear-quadratic optimal control problems

284:

248:

183:, and Log-Cosh Loss) used for regression

2586:Review of Urban and Regional Development

2555:European Journal of Operational Research

2520:European Journal of Operational Research

2485:European Journal of Operational Research

1649:von Neumann–Morgenstern utility function

$\lambda(x)=C(t-x)^{2}$

2313:

4970:Kaplan–Meier estimator (product limit)

1966:A common example involves estimating "

482:notation, i.e. it evaluates to 1 when

97:seeks to minimize a loss function. An

1166:wherein θ has been "integrated out,"

$L(\hat{y},y)=\left[\hat{y}\neq y\right]$

171:Comparison of common loss functions (

7:

6170:Generative adversarial network (GAN)

5280:

4980:Accelerated failure time (AFT) model

2838:International Journal of Forecasting

2335:The Elements of Statistical Learning

$\sum_{i=1}^{n}L(a_{i})$

240:, then a quadratic loss function is

128:, was reintroduced in statistics by

5292:

4575:Analysis of variance (ANOVA, anova)

1207:, a decision function whose output

4670:Cochran–Mantel–Haenszel statistics

3296:Pearson product-moment correlation

2378:On the mathematical theory of risk


617:. This is also referred to as the

25:

2613:"Risk of a statistical procedure"

2282:Loss functions for classification

1643:Economic choice under uncertainty

1240:, and a quadratic loss function (

633:. The risk function is given by:

6208:

6207:

6187:

5291:

5279:

5267:

5254:

5253:

2911:Oxford Review of Economic Policy

2850:10.1016/j.ijforecast.2009.10.008

2598:10.1111/j.1467-940X.2008.00144.x

$\hat{\theta}$

1141:

1119:

1081:

1045:

997:

972:

946:

$\hat{y}\neq y$

31:

4929:Least-squares spectral analysis

1985:In economics, when an agent is

136:, for example, this is usually

6120:Recurrent neural network (RNN)

6110:Differentiable neural computer

3910:Mean-unbiased minimum-variance

2362:Statistical Decision Functions


1548:the risk function becomes the

1513:

1492:

1486:

1471:

1401:

1394:

1379:

1360:

1354:

1339:

1325:the risk function becomes the


6165:Variational autoencoder (VAE)

6125:Long short-term memory (LSTM)

5392:Computational learning theory

5223:Geographic information system

4439:Simultaneous equations models

2762:. Berlin: Walter de Gruyter.

2760:Parametric Statistical Theory

2717:Robert, Christian P. (2007).

2686:Optimal Statistical Decisions

2567:10.1016/S0377-2217(03)00271-6

2532:10.1016/S0377-2217(03)00413-2

2497:10.1016/S0377-2217(01)00185-0

2394:The Nobel Prize–Prize Lecture

1550:mean integrated squared error

358:because it results in linear

6145:Convolutional neural network

4406:Coefficient of determination

4017:Uniformly most powerful test

2946:10.1016/0164-0704(87)90016-4

2163:—when summing over a set of

6140:Multilayer perceptron (MLP)

4975:Proportional hazards models

4919:Spectral density estimation

4901:Vector autoregression (VAR)

4335:Maximum posterior estimator

3567:Randomized controlled trial

2800:Deming, W. Edwards (2000).

2618:Encyclopedia of Mathematics

1442:, the unknown parameter is

1162:where m(x) is known as the

564:Empirical risk minimization

6265:

6216:Artificial neural networks

6130:Gated recurrent unit (GRU)

5356:Differentiable programming

4735:Multivariate distributions

3155:Average absolute deviation

2688:. Wiley Classics Library.

2580:Tangian, Andranik (2008).

$L(a)=a^{2}$


2934:Journal of Macroeconomics

2460:10.1007/978-3-642-56038-5

2426:10.1007/978-3-642-48773-6

1978:loss function, while the

1958:Selecting a loss function

596:Frequentist expected loss

162:financial risk management

71:mathematical optimization



2338:. Springer. p. 18.

$L(a)=|a|$

1427:found by minimizing the

857:over the event space of

613:, of the observed data,

602:probability distribution

199:Regret (decision theory)

6150:Residual neural network

5566:Artificial Intelligence

4878:Cross-correlation (XCF)

4486:Non-standard predictors

3920:Lehmann–Scheffé theorem

3593:Adaptive clinical trial

2790:(and references there).

2611:Nikulin, M.S. (2001) ,

2287:Discounted maximum loss

2025:optimization algorithms

1775:of the loss function):

1236:is an estimate of

1203:For a scalar parameter

220:Quadratic loss function

5274:Mathematics portal

5095:Engineering statistics

5003:Nelson–Aalen estimator

4580:Analysis of covariance

4467:Ordinary least squares

4391:Pearson product-moment

3795:Statistical functional

3706:Empirical distribution

3539:Controlled experiments

3268:Frequency distribution

3046:Descriptive statistics

2905:Cecchetti, S. (2000).

2234:

2210:

2177:

2153:

2127:

2078:

1948:

1755:

1633:

1542:

1433:Posterior distribution

1417:

1319:

1230:

1198:Examples in statistics

1153:

888:of the parameter

861:(parametrized by

834:

792:

511:

469:

378:

360:first-order conditions

339:methods applied using

295:

184:


2923:10.1093/oxrep/16.4.43

2758:Pfanzagl, J. (1994).

2727:10.1007/0-387-71599-1

2264:Nassim Nicholas Taleb

2235:

2190:

2178:

2154:

2128:

2079:

1949:

1756:

1634:

1543:

1418:

1320:

1231:

1192:Bayes (decision) Rule

1164:predictive likelihood

1154:

835:

793:

621:of the decision rule

512:

470:

376:

335:, and much else, use

333:design of experiments

296:

170:


2380:. Centraltryckeriet.

2187:

2167:

2137:

2092:

2046:

1779:

1677:

1555:

1465:

1333:

1248:

1211:

899:

817:

640:

486:

404:

362:. In the context of

247:

122:parameter estimation

95:optimization problem


2878:1985sdtb.book.....B

2719:The Bayesian Choice

2650:1985sdtb.book.....B

2376:Cramér, H. (1930).

2330:Friedman, Jerome H.

$a=0$

1533:

1454:. For example, for

1444:probability density

855:probability measure

517:, and 0 otherwise.


2326:Tibshirani, Robert

2230:

2173:

2149:

2123:

2074:

2018:safety engineering

1944:

1882:

1808:

1751:

1726:

1706:

1629:

1538:

1519:

1450:in an appropriate

1440:density estimation

1429:Mean squared error

1413:

1327:mean squared error

1315:

1242:squared error loss

1226:

1149:

883:prior distribution

830:

788:

625:and the parameter

507:

465:

379:

364:stochastic control

310:squared error loss

304:for some constant

291:

185:

99:objective function



2887:978-0-387-96098-2

2804:. The MIT Press.

2802:Out of the Crisis

2769:978-3-11-013863-4

2736:978-0-387-95231-4

2695:978-0-471-68029-1

2659:978-0-387-96098-2

2469:978-3-540-42669-1

2435:978-3-540-63061-6

2359:Wald, A. (1950).

2260:W. Edwards Deming

$a$

1886:

1857:

1783:

1729:

1711:

1710:

1681:

1612:

1579:

1516:

1489:

1397:

1357:

1329:of the estimate,

1299:

1272:

1223:

498:

451:

422:

396:0-1 loss function

382:0-1 loss function

341:linear regression

204:Leonard J. Savage

156:in the 1920s. In

150:actuarial science



2866:Berger, James O.

2861:

2835:

2816:

2815:

2797:

2791:

2789:

2780:

2774:

2773:

2755:

2749:

2748:

2714:

2708:

2707:

2678:

2672:

2671:

2636:Berger, James O.


2324:Hastie, Trevor;

2321:

2302:Statistical risk

2239:

2237:

2236:

2231:

2226:

2225:

2209:

2204:

2182:

2180:

2179:

2174:

2158:

2156:

2155:

2150:

2132:

2130:

2129:

2124:

2122:

2114:

2083:

2081:

2080:

2075:

2073:

2072:

2012:in the field of

1999:utility function

1953:

1951:

1950:

1945:

1902:

1901:

1884:

1883:

1878:

1825:

1824:

1809:

1804:

1760:

1758:

1757:

1752:

1727:

1725:

1708:

1707:

1702:

1638:

1636:

1635:

1630:

1624:

1623:

1614:

1613:

1605:

1581:

1580:

1572:

1547:

1545:

1544:

1539:

1532:

1527:

1518:

1517:

1509:

1491:

1490:

1482:

1422:

1420:

1419:

1414:

1409:

1408:

1399:

1398:

1390:

1375:

1374:

1359:

1358:

1350:

1324:

1322:

1321:

1316:

1311:

1310:

1301:

1300:

1292:

1274:

1273:

1265:

1235:

1233:

1232:

1227:

1225:

1224:

1216:

1190:is known as the

1169:

1158:

1156:

1155:

1150:

1145:

1144:

1132:

1123:

1122:

1107:

1106:

1097:

1085:

1084:

1060:

1059:

1050:

1049:

1048:

1026:

1025:

1016:

1001:

1000:

988:

976:

975:

951:

950:

949:

939:

938:

917:

916:

887:

839:

837:

836:

831:

829:

828:

797:

795:

794:

789:

775:

774:

765:

759:

758:

734:

733:

724:

723:

711:

710:

686:

685:

673:

672:

542:Andranik Tangian

516:

514:

513:

508:

500:

499:

491:

474:

472:

471:

466:

464:

460:

453:

452:

444:

424:

423:

415:

300:

298:

297:

292:

289:

288:

115:fitness function

111:utility function

55:

35:

34:

27:

21:
