
For each voxel, a centroid is chosen as the representative of all its points. There are two approaches: selecting the center of the voxel itself, or selecting the centroid (average) of the points lying within the voxel. Averaging the internal points has a higher computational cost but offers better results. Either way, the output is a subset of the input space that roughly represents the underlying surface. The Voxel Grid method shares the problems of other filtering techniques: the final number of points representing the surface cannot be fixed in advance, geometric information is lost when the points inside a voxel are reduced, and the method is sensitive to noisy input spaces.
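The second approach (averaging the points that fall inside each voxel) can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `voxel_grid_filter` and the `voxel_size` parameter are assumptions made for this example, and points are plain `(x, y, z)` tuples.

```python
from collections import defaultdict


def voxel_grid_filter(points, voxel_size):
    """Downsample 3D points: keep one averaged point per occupied voxel.

    `voxel_grid_filter` and `voxel_size` are hypothetical names used
    only for this sketch.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    # Group the points by the integer index of the voxel that contains them.
    buckets = defaultdict(list)
    for x, y, z in points:
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        buckets[key].append((x, y, z))

    # Replace the contents of each voxel by the mean of its member points.
    reduced = []
    for key in sorted(buckets):
        members = buckets[key]
        count = len(members)
        reduced.append(tuple(sum(axis) / count for axis in zip(*members)))
    return reduced
```

The number of output points equals the number of occupied voxels, so it depends on the data and on `voxel_size` and cannot be chosen directly, which is the limitation noted above.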

, while others use a polyharmonic radial basis function to fit the initial point set. Functions such as Moving Least Squares, basis functions with local support, and functions based on the Poisson equation have also been used. Loss of geometric precision in areas of extreme curvature, such as corners and edges, is one of the main issues encountered. Furthermore, pre-processing the data with a filtering technique also degrades the definition of corners by softening them. Several studies address post-processing techniques used in the reconstruction for the


of a two-dimensional polygon and a three-dimensional polyhedron) which is neither convex nor necessarily connected. For a large value of alpha, the alpha-shape is identical to the convex hull of S. The algorithm proposed by

Edelsbrunner and Mücke eliminates all tetrahedra whose circumscribing sphere is larger than α, which is consistent with a large α yielding the convex hull. The surface is then obtained from the external triangles of the remaining tetrahedra.
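The filtering step can be illustrated with a two-dimensional analogue, in which triangles play the role of tetrahedra and circumscribing circles the role of spheres. The sketch below assumes the usual convention that simplices whose circumradius exceeds α are discarded, and it assumes a Delaunay triangulation has already been computed elsewhere. The names `circumradius` and `alpha_filter` are hypothetical and do not come from the cited work.

```python
import math
from collections import Counter


def circumradius(p, q, r):
    """Radius of the circle through three 2D points (inf if collinear)."""
    a = math.dist(q, r)
    b = math.dist(p, r)
    c = math.dist(p, q)
    area = abs((q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])) / 2.0
    if area == 0.0:
        return math.inf
    return a * b * c / (4.0 * area)


def alpha_filter(points, triangles, alpha):
    """Keep triangles with circumradius <= alpha and return their boundary.

    `triangles` is a list of index triples into `points`, assumed to come
    from a precomputed Delaunay triangulation (an assumption of this sketch).
    """
    kept = [t for t in triangles
            if circumradius(*(points[i] for i in t)) <= alpha]

    # An edge lies on the boundary if exactly one kept triangle uses it.
    edge_count = Counter()
    for i, j, k in kept:
        for edge in ((i, j), (j, k), (i, k)):
            edge_count[tuple(sorted(edge))] += 1
    boundary = sorted(e for e, n in edge_count.items() if n == 1)
    return kept, boundary
```

In three dimensions the same idea applies with tetrahedra and their circumscribing spheres, and the boundary consists of the triangles that belong to exactly one remaining tetrahedron.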


plane. This technique gives a comprehensive view of the entire compact structure of the object. Because it requires an enormous amount of computation, and therefore powerful computers, it is appropriate for low-contrast data. Two main methods of projecting rays can be considered:

1750:

Both methods have recently been extended to reconstruct noisy point clouds. In these methods the quality of the points determines feasibility. Because precise triangulation uses the whole point cloud, surface points whose error is above the threshold will

1742:

The Delaunay method involves extracting tetrahedron surfaces from the initial point cloud. The idea of the ‘shape’ of a set of points in space is given by the concept of alpha-shapes. Given a finite point set S and a real parameter alpha, the alpha-shape of S is a polytope (the generalization to any dimension

1465:

The aim of feature extraction is to obtain the characteristics of the images on which stereo correspondence operates. As a result, the characteristics of the images are closely linked to the choice of matching method. There is no universally applicable theory of feature extraction, leading

1262:

2D digital image acquisition is the information source for 3D reconstruction. 3D reconstruction is commonly based on two or more images, although it may employ only one image in some cases. There are various methods of image acquisition, depending on the occasion and purpose of the

120:

3D reconstruction has long been a difficult research goal. Using 3D reconstruction, one can determine any object's 3D profile as well as the 3D coordinates of any point on that profile. The 3D reconstruction of objects is a general scientific problem and a core technology in a wide

1600:

Given precise correspondence and the camera location parameters, 3D geometric information can be recovered without difficulty. Because the accuracy of 3D reconstruction depends on the precision of the correspondence, the error in the camera location parameters and so on, the previous

1803:

The transparency of the entire object volume is visualized using the VR technique. Images are produced by projecting rays through the volume data. Along each ray, opacity and color are calculated at every voxel. The information calculated along each ray is then aggregated into a pixel on the image

333:

Machine learning enables learning the correspondence between subtle features in the input and their respective 3D equivalents. Deep neural networks have been shown to be highly effective for 3D reconstruction from a single color image. This works even for non-photorealistic input images such as

406:

to observe the same object, acquiring two images from different points of view. Using trigonometric relations, depth information can be calculated from disparity. The binocular stereo vision method is well developed and contributes reliably to good 3D reconstruction, leading to a better

1811:

Image-order or ray-casting method: rays are projected through the volume from front to back (from the image plane to the volume). Other methods exist for compositing the image, and the appropriate one depends on the user's purposes. Some common methods in medical imaging are

257:

methods use one or more images from a single viewpoint (camera) to carry out 3D reconstruction. They make use of 2D characteristics (e.g. silhouettes, shading and texture) to measure 3D shape, which is why this is also called Shape-From-X, where X can be

. A contour algorithm is used to extract a zero-set, from which a polygonal representation of the object is obtained. Thus, the problem of reconstructing a surface from a disorganized point cloud is reduced to the definition of the appropriate function

, or by a single camera at different times and from different viewing angles, are used to restore its 3D geometric information and reconstruct its 3D profile and location. This is more direct than monocular methods such as shape-from-shading.

471:

are in the same direction as the x-axis and y-axis of the camera's coordinate system, respectively. The origin of the image's coordinate system is located at the intersection of the imaging plane and the optical axis. Suppose a world point

312:

This approach is more sophisticated than the shape-from-shading method. Images taken under different lighting conditions are used to solve for the depth information. Note that this approach requires more than one image.
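As a hedged illustration of how several lighting conditions constrain the surface, the sketch below recovers the normal and albedo at a single pixel. It assumes a Lambertian surface and exactly three known, non-coplanar unit light directions, so each measured intensity satisfies I = albedo · (light · normal). The function names `det3`, `solve3` and `photometric_stereo_pixel` are invented for this example.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def solve3(m, rhs):
    """Solve the 3x3 linear system m @ x = rhs using Cramer's rule."""
    det = det3(m)
    if abs(det) < 1e-12:
        raise ValueError("light directions must not be coplanar")
    solution = []
    for col in range(3):
        replaced = [list(row) for row in m]
        for row in range(3):
            replaced[row][col] = rhs[row]
        solution.append(det3(replaced) / det)
    return solution


def photometric_stereo_pixel(lights, intensities):
    """Return (unit normal, albedo) for one pixel of a Lambertian surface.

    `lights` holds three unit light-direction vectors (one per image) and
    `intensities` the three brightness values observed at the pixel.
    """
    scaled_normal = solve3(lights, intensities)  # equals albedo * normal
    albedo = math.sqrt(sum(c * c for c in scaled_normal))
    if albedo == 0.0:
        raise ValueError("pixel is black in every image; normal is undefined")
    normal = tuple(c / albedo for c in scaled_normal)
    return normal, albedo
```

Once a normal is available at every pixel, depth can be obtained by integrating the normal field. That step is outside the scope of this sketch.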

1609:

Clinical routines such as diagnosis, patient follow-up, computer-assisted surgery and surgical planning are facilitated by accurate 3D models of the relevant part of the human anatomy. The main motivations behind 3D reconstruction include

107:

is the process of capturing the shape and appearance of real objects. This process can be accomplished either by active or passive methods. If the model is allowed to change its shape in time, this is referred to as

229:

Passive methods of 3D reconstruction do not interfere with the reconstructed object; they only use a sensor to measure the radiance reflected or emitted by the object's surface to infer its 3D structure through

334:

sketches. Thanks to the high level of accuracy of the reconstructed 3D features, deep-learning-based methods have been employed in biomedical engineering applications to reconstruct CT imagery from X-rays.

1716:

Most algorithms available for 3D reconstruction are extremely slow and cannot be used in real time. Although the algorithms presented are still in their infancy, they have the potential for fast computation.

197:, laser range finder and other active sensing techniques. A simple example of a mechanical method would use a depth gauge to measure a distance to a rotating object put on a turntable. More applicable

1263:

specific application. Not only must the requirements of the application be met, but the visual disparity, illumination, camera performance and characteristics of the scene should also be considered.


153:, etc. For instance, the lesion information of the patients can be presented in 3D on the computer, which offers a new and accurate approach in diagnosis and thus has vital clinical value.


, texture etc. 3D reconstruction through monocular cues is simple and quick: only one suitable digital image is needed, so a single camera is adequate. Technically, it avoids

2104:

438:

of the camera's lens. However, to simplify the calculation, images are drawn in front of the optical center of the lens by f. The u-axis and v-axis of the image's coordinate system


423:. The following picture provides a simple schematic diagram of horizontally sighted Binocular Stereo Vision, where b is the baseline between projective centers of two cameras.


Helps in a number of clinical areas, such as radiotherapy planning and treatment verification, spinal surgery, hip replacement, neurointerventions and aortic stenting.

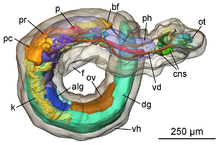

407:

performance when compared to other 3D reconstruction methods. Unfortunately, it is computationally intensive, and it performs rather poorly when the baseline distance is large.

189:

approach and build the object in the scene based on the model. These methods actively interfere with the reconstructed object, either mechanically or radiometrically, using


The origin of the camera's coordinate system is at the optical center of the camera's lens as shown in the figure. Actually, the camera's image plane is behind the

1982:

Liping Zheng; Guangyao Li; Jing Sha (2007). "The survey of medical image 3D reconstruction". In Luo, Qingming; Wang, Lihong V.; Tuchin, Valery V.; Gu, Min (eds.).


Another algorithm, called Tight Cocone, labels the initial tetrahedra as interior or exterior. The triangles separating interior from exterior tetrahedra form the resulting surface.


Tracing a ray through a voxel grid. The voxels which are traversed in addition to those selected using a standard 8-connected algorithm are shown hatched.

2404:

Corona-Figueroa, Abril; Bond-Taylor, Sam; Bhowmik, Neelanjan; Gaus, Yona Falinie A.; Breckon, Toby P.; Shum, Hubert P. H.; Willcocks, Chris G. (2023).

3184:

3252:

2654:

Wang, Jun; Gu, Dongxiao; Yu, Zeyun; Tan, Changbai; Zhou, Laishui (December 2012). "A framework for 3D model reconstruction in reverse engineering".

2575:

169:

1099:

Therefore, once the coordinates of the image points are known, along with the parameters of the two cameras, the 3D coordinates of the point can be determined.
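The relations for the parallel binocular setup can be checked numerically. The sketch below assumes the article's geometry: focal length f, baseline b along the x-axis, and a point expressed in the left camera's coordinate system, with disparity d = u1 − u2 = f·b/z_p. The function names `project_stereo` and `triangulate` are chosen for this example only.

```python
def project_stereo(point, f, b):
    """Project a 3D point (left-camera coordinates) into both image planes."""
    x, y, z = point
    u1 = f * x / z          # left image, horizontal coordinate
    u2 = f * (x - b) / z    # right image, shifted by the baseline b
    v = f * y / z           # identical in both images (cameras are coplanar)
    return (u1, v), (u2, v)


def triangulate(u1, v1, u2, f, b):
    """Recover (x_p, y_p, z_p) from matched image points via disparity."""
    d = u1 - u2  # visual disparity
    if d <= 0:
        # Assumption of this sketch: b > 0 and the point lies in front of
        # the cameras, so a valid match always has positive disparity.
        raise ValueError("disparity must be positive")
    z = b * f / d
    x = b * u1 / d
    y = b * v1 / d
    return x, y, z
```

Projecting a point with `project_stereo` and passing the result to `triangulate` returns the original coordinates up to rounding. Because depth is inversely proportional to disparity, a small matching error has a large effect on distant points.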

1592:

from two images. Certain interference factors in the scene should be taken into account, e.g. illumination, noise and the physical characteristics of the surface.

2970:

3269:

2238:

1868:

1767:

Reconstruction of the surface is performed using a distance function which assigns to each point in the space a signed distance to the surface

3289:

2548:

2347:

2181:

2130:

. Distortion and perspective measured in 2D images provide cues for inversely solving for the depth and normal information of the object surface.

3199:

3169:

3159:

3139:

3109:

2895:

Hoppe, Hugues; DeRose, Tony; Duchamp, Tom; McDonald, John; Stuetzle, Werner (July 1992). "Surface reconstruction from unorganized points".

2320:

Feng, Qi; Shum, Hubert P. H.; Morishima, Shigeo (2022). "360 Depth Estimation in the Wild - The Depth360 Dataset and the SegFuse Network".

2694:

2484:

Mahmoudzadeh, Ahmadreza; Yeganeh, Sayna Firoozi; Golroo, Amir (2019-07-09). "3D pavement surface reconstruction using an RGB-D sensor".

2101:

1003:

of the camera. Visual disparity is defined as the difference in image point location of a certain world point acquired by two cameras,


Camera calibration in Binocular Stereo Vision refers to the determination of the mapping relationship between the image points

387:

Stereo vision obtains the 3-dimensional geometric information of an object from multiple images based on the research of human

2026:

2681:

109:

2858:

Lorensen, William E.; Cline, Harvey E. (July 1987). "Marching cubes: A high resolution 3D surface construction algorithm".

3525:

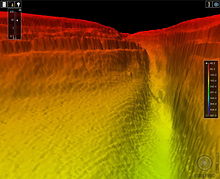

3453:

3384:

3235:

2471:

1878:

2723:

Angelopoulou, A.; Psarrou, A.; Garcia-Rodriguez, J.; Orts-Escolano, S.; Azorin-Lopez, J.; Revett, K. (20 February 2015).

831:

205:

towards the object and then measure its reflected part. Examples range from moving light sources, colored visible light,

2614:

1821:

1813:

1272:

319:

Suppose an object with a smooth surface is covered by replicated texture units; its projection from 3D to 2D causes

1451:

in the 3D scenario. Camera calibration is a basic and essential part of 3D reconstruction via Binocular Stereo Vision.

1008:

3204:

1845:

In this filtering technique, the input space is sampled using a grid of 3D voxels to reduce the number of points. For each

194:

765:

2724:

1779:

established the use of such methods. There are different variants of the algorithm: some use a discrete function

3349:

1659:

707:

2016:." International archives of photogrammetry remote sensing and spatial information sciences 34.3/W4 (2001): 37-44.

3401:

3314:

2707:

2154:

Saxena, Ashutosh; Sun, Min; Ng, Andrew Y. (2007). "3-D Reconstruction from Sparse Views using Monocular Vision".

1645:

604:

respectively on the left and right image planes. Assuming the two cameras are in the same plane, the y-coordinates of


Carr, J.C.; Beatson, R.K.; Cherrie, J.B.; Mitchell, T.J.; Fright, W.R.; McCallum, B.C.; Evans, T.R. (2001).

1480:

Stereo correspondence is to establish the correspondence between primitive factors in images, i.e. to match


2610:"From 3D reconstruction to virtual reality: A complete methodology for digital archaeological exhibition"

2572:

2207:"Synthesizing 3D Shapes via Modeling Multi-View Depth Maps and Silhouettes With Deep Generative Networks"


2044:"Estimating Pavement Roughness by Fusing Color and Depth Data Obtained from an Inexpensive RGB-D Sensor"


Synthesizing 3D Shapes via Modeling Multi-View Depth Maps and Silhouettes with Deep Generative Networks

2595:

2042:

Mahmoudzadeh, Ahmadreza; Golroo, Amir; Jahanshahi, Mohammad R.; Firoozi Yeganeh, Sayna (January 2019).

1808:

Object-order method: rays are projected through the volume from back to front (from the volume to the image plane).
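A back-to-front traversal is commonly combined with the "over" compositing rule. The sketch below is a minimal illustration under that assumption: it aggregates scalar color and opacity samples taken along one ray into a single pixel value. The name `composite_back_to_front` and the list-of-pairs input format are chosen for this example and are not taken from the sources cited here.

```python
def composite_back_to_front(samples):
    """Aggregate (color, opacity) samples along one ray into a pixel value.

    `samples` must be ordered from the farthest voxel to the nearest one.
    Colors and opacities are scalars in the range [0, 1].
    """
    pixel = 0.0  # background contribution (black)
    for color, opacity in samples:
        if not 0.0 <= opacity <= 1.0:
            raise ValueError("opacity must lie in [0, 1]")
        # The nearer sample covers what has been accumulated behind it.
        pixel = opacity * color + (1.0 - opacity) * pixel
    return pixel
```

A fully opaque sample near the viewer hides everything behind it, while semi-transparent samples blend with the accumulated value.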

1775:

with a value of zero for the sampled points and a non-zero value for the rest. An algorithm called

1725:

242:(one, two or more) or video. In this case we talk about image-based reconstruction and the output is a

1620:

Can be used to plan, simulate, guide, or otherwise assist a surgeon in performing a medical procedure.


2725:"3D reconstruction of medical images from slices automatically landmarked with growing neural models"

2644:." Proceedings of the 24th annual ACM symposium on User interface software and technology. ACM, 2011.

2253:

2055:

3074:

2269:

1794:

Solid geometry with volume rendering Image courtesy of

Patrick Chris Fragile Ph.D., UC Santa Barbara


McCoun, Jacques, and Lucien Reeves. Binocular vision: development, depth perception and disorders.


Unaligned 2D to 3D Translation with Conditional Vector-Quantized Code Diffusion using Transformers

246:. By comparison to active methods, passive methods can be applied to a wider range of situations.

238:

is an image sensor in a camera sensitive to visible light and the input to the method is a set of


2011 International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission


Shape from shading: A method for obtaining the shape of a smooth opaque object from one view


Generating and reconstructing 3D shapes from single or multi-view depth maps or silhouettes


Nozawa, Naoki; Shum, Hubert P. H.; Feng, Qi; Ho, Edmond S. L.; Morishima, Shigeo (2022).


3064:- Generate and reconstruct 3D shapes via modeling multi-view depth maps or silhouettes.

2829:; Goswami, Samrat (August 2006). "Probable surface reconstruction from noisy samples".

2708:

Real time hand tracking and 3d gesture recognition for interactive interfaces using hmm

2697:." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2014.

2078:

2043:

2027:

Investigating landslides with space-borne Synthetic Aperture Radar (SAR) interferometry


3D reconstruction of the general anatomy of the right side view of a small marine slug

2994:

Wang, C.L. (June 2006). "Incremental reconstruction of sharp edges on mesh surfaces".

2770:

Edelsbrunner, Herbert; Mücke, Ernst (January 1994). "Three-dimensional alpha shapes".

81:

3519:

3458:

2642:

KinectFusion: real-time 3D reconstruction and interaction using a moving depth camera


28th Annual Conference on Computer Graphics and Interactive Techniques SIGGRAPH 2001

2457:

2191:

1999:

1601:

procedures must be done carefully to achieve relatively accurate 3D reconstruction.

3396:

2809:

2558:

1466:

to a great diversity of stereo correspondence in Binocular Stereo Vision research.

1000:

699:

403:

391:. The results are presented in form of depth maps. Images of an object acquired by

2573:

Real-time 3D computed tomographic reconstruction using commodity graphics hardware

2956:"Implicit Surface Modelling with a Globally Regularised Basis of Compact Support"

2933:

2844:

2743:

2627:

3304:

2531:

Poullis, Charalambos; You, Suya (May 2011). "3D Reconstruction of Urban Areas".

2111:." Fibers' 91, Boston, MA. International Society for Optics and Photonics, 1991.

1903:

1858:

1832:

1623:

The precise position and orientation of the patient's anatomy can be determined.

286:

259:

218:

198:

190:

3030:

3021:

Connolly, C. (1984). "Cumulative generation of octree models from range data".

2381:

2365:"3D Car Shape Reconstruction from a Contour Sketch using GAN and Lazy Learning"

2364:

2339:

1984:

Fifth International Conference on Photonics and Imaging in Biology and Medicine

3007:

2667:

2173:

1931:." Foundations and Trends in Computer Graphics and Vision 4.4 (2010): 287-404.

1873:

320:

281:

210:

2934:"Reconstruction and representation of 3d objects with radial basis functions"

2390:

2239:"Photometric method for determining surface orientation from multiple images"

2211:

Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition

2140:

2826:

2540:

2521:." Exploring artificial intelligence in the new millennium 1.1-35 (2002): 1.

1888:

402:

The binocular stereo vision method requires two identical cameras with parallel

182:

2087:

1754:

3085:

http://research.microsoft.com/apps/search/default.aspx?q=3d+reconstruction

3023:

Proceedings. 1984 IEEE International Conference on Robotics and Automation

2918:

2801:

2205:

Soltani, A.A.; Huang, H.; Wu, J.; Kulkarni, T.D.; Tenenbaum, J.B. (2017).

1816:(maximum intensity projection), MinIP (minimum intensity projection), AC (

17:

243:

202:

2881:

2125:. Gool, Luc van., Vergauwen, Maarten. Hanover, MA: Now Publishers, Inc.

2752:

2449:

2265:

419:

to acquire an object's 3D geometric information is based on visual

263:

157:

can be reconstructed using methods such as airborne laser altimetry or

2068:

1991:

2784:

2695:

Analysis by synthesis: 3d object recognition by object reconstruction

1893:

235:

296:

Through analysis of the shading information in the image, using

2490:

2414:

2330:

2322:

2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)

2014:

3D building model reconstruction from point clouds and ground plans


3D reconstruction has applications in many fields. They include:


http://www.nature.com/subjects/3d-reconstruction#news-and-comment

1986:. Proceedings of SPIE. Vol. 6534. pp. 65342K–65342K–6.

3080:

http://6.869.csail.mit.edu/fa13/lectures/lecture11shapefromX.pdf

2684:." Computer vision and image understanding 81.3 (2001): 231-268.

122:

3098:

347:

304:

information of the object surface is restored to reconstruct.

44:

3090:

https://research.google.com/search.html#q=3D%20reconstruction

27:

Process of capturing the shape and appearance of real objects

2429:

Kass, Michael; Witkin, Andrew; Terzopoulos, Demetri (1988).

1787:

but these methods increase the complexity of the solution.

121:

variety of fields, such as Computer Aided Geometric Design (

2508:." ACM Transactions on Graphics. Vol. 22. No. 3. ACM, 2003.

2156:

2007 IEEE 11th International Conference on Computer Vision

2123:

3D reconstruction from multiple images. Part 1, Principles

1254:

The 3D reconstruction consists of the following sections:

1929:

3D reconstruction from multiple images part 1: Principles

2582:." Physics in Medicine & Biology 52.12 (2007): 3405.

1942:

Real-time non-rigid reconstruction using an RGB-D camera

2288:"Recovering surface shape and orientation from texture"

2682:

A survey of computer vision-based human motion capture

2598:." Journal of Cultural Heritage 15.3 (2014): 318-325.

1751:

be explicitly represented on reconstructed geometry.


Moons, Theo, Luc Van Gool, and Maarten Vergauwen. "

$v_1 = v_2 = f\frac{y_p}{z_p}$

181:Active methods, i.e. range data methods, given the


1944:." ACM Transactions on Graphics 33.4 (2014): 156.

$d = u_1 - u_2 = f\frac{b}{z_p}$

2954:Walder, C.; Schölkopf, B.; Chapelle, O. (2006).

2608:Bruno, Fabio; et al. (January–March 2010).

1614:Improved accuracy due to multi view aggregation.

$u_2 = f\frac{x_p - b}{z_p}$

2029:." Engineering geology 88.3-4 (2006): 173-199.

38:. For 3D reconstruction of sound sources, see

3110:

2100:Buelthoff, Heinrich H., and Alan L. Yuille. "

1864:3D data acquisition and object reconstruction

$u_1 = f\frac{x_p}{z_p}$

8:

2706:Keskin, Cem, Ayse Erkan, and Lale Akarun. "

2596:Learning cultural heritage by serious games

2102:Shape-from-X: Psychophysics and computation



193:, in order to acquire the depth map, e.g.


$y_p = \frac{b v_1}{d}$


$x_p = \frac{b u_1}{d}$


2438:International Journal of Computer Vision

2213:. pp. 1511–1519 – via GitHub.

2025:Colesanti, Carlo, and Janusz Wasowski. "

2012:Vosselman, George, and Sander Dijkman. "

979:in the left camera's coordinate system,

289:" reconstructed from multiple viewpoints

2680:Moeslund, Thomas B., and Erik Granum. "

1977:

1975:

1920:

3270:3D reconstruction from multiple images

3036:

2656:Computers & Industrial Engineering

1869:3D reconstruction from multiple images

3290:Simultaneous localization and mapping

2821:

2819:

2765:

2763:

2718:

2716:

$z_p = \frac{b f}{d}$

492:whose corresponding image points are


7:

2710:." ICANN/ICONIPP 2003 (2003): 26-29.

2693:Hejrati, Mohsen, and Deva Ramanan. "

2506:Free-viewpoint video of human actors

$P(x_p, y_p, z_p)$

1785:detection and refinement of corners

1605:3D Reconstruction of medical images

$(x_p, y_p, z_p)$

3355:Automatic number-plate recognition

$P_2(u_2, v_2)$

$P_1(u_1, v_1)$

$P_2(u_2, v_2)$

$P_1(u_1, v_1)$

1076:based on which the coordinates of

$P_2(u_2, v_2)$

$P_1(u_1, v_1)$

25:

430:Geometry of a stereoscopic system

3360:Automated species identification

415:The approach of using Binocular

352:

331:Machine Learning Based Solutions

185:, reconstruct the 3D profile by

49:

3345:Audio-visual speech recognition

3025:. Vol. 1. pp. 25–32.

2431:"Snakes: Active contour models"

3190:Recognition and categorization

2897:ACM SIGGRAPH Computer Graphics

2860:ACM SIGGRAPH Computer Graphics

2571:Xu, Fang, and Klaus Mueller. "


3454:Optical character recognition

3385:Content-based image retrieval

3043:: CS1 maint: date and year (

2472:Nova Science Publishers, Inc.

1879:3D SEM surface reconstruction

2845:10.1016/j.comgeo.2005.10.006

2744:10.1016/j.neucom.2014.03.078

2628:10.1016/j.culher.2009.02.006

2615:Journal of Cultural Heritage

2307:10.1016/0004-3702(81)90019-9

1940:Zollhöfer, Michael, et al. "

1273:Geometric camera calibration

411:Problem statement and basics

110:non-rigid or spatio-temporal

2237:Woodham, Robert J. (1980).

1955:"The Future of 3D Modeling"

1617:Detailed surface estimates.

$v_1 = v_2$

270:, which is fairly complex.

177:map of an underwater canyon

116:Motivation and applications

3552:

3350:Automatic image annotation

3185:Noise reduction techniques

3031:10.1109/ROBOT.1984.1087212

2594:Mortara, Michela, et al. "

2382:10.1007/s00371-020-02024-y

2375:(4). Springer: 1317–1330.

2340:10.1109/VR51125.2022.00087

2324:. IEEE. pp. 664–673.

2286:Witkin, Andrew P. (1981).

1660:Tomographic reconstruction

1473:

1458:

1270:

393:two cameras simultaneously

341:

29:

3502:

3315:Free viewpoint television

3008:10.1016/j.cad.2006.02.009

2668:10.1016/j.cie.2012.07.009

2630:– via ResearchGate.

2519:Robotic mapping: A survey

2174:10.1109/ICCV.2007.4409219

1737:Delaunay and alpha-shapes


30:For 3D reconstruction in

3380:Computer-aided diagnosis

2640:Izadi, Shahram, et al. "

2504:Carranza, Joel, et al. "

159:synthetic aperture radar

155:Digital elevation models

36:Iterative reconstruction

3442:Moving object detection

3432:Medical image computing

3195:Research infrastructure

3165:Image sensor technology

2541:10.1109/3dimpvt.2011.14

2295:Artificial Intelligence

1389:, and space coordinate

$O_1 uv$

187:numerical approximation

40:3D sound reconstruction

3479:Video content analysis

3447:Small object detection

3226:Computer stereo vision

2943:. ACM. pp. 67–76.

2832:Computational Geometry


1730:Delaunay triangulation


368:Computer stereo vision

344:Computer stereo vision

298:Lambertian reflectance

290:

278:

250:Monocular cues methods

178:

92:

3484:Video motion analysis

3295:Structure from motion

3241:3D object recognition

2996:Computer-Aided Design

2919:10.1145/142920.134011

2802:10.1145/174462.156635

1909:Structure from motion


1698:3D object recognition

1587:

1531:

1470:Stereo correspondence


$P_2$

626:

$P_1$


268:stereo correspondence

172:

143:computational science

84:

3526:3D computer graphics

3407:Foreground detection

3390:Reverse image search

3370:Bioimage informatics

3340:Activity recognition

2223:Horn, Berthold KP. "

2121:Moons, Theo (2010).

1824:(non-photorealistic

1721:Existing Approaches:

1668:Virtual environments

1648:video reconstruction

1639:Pavement engineering


658:are identical, i.e.,


3474:Autonomous vehicles

3412:Gesture recognition

3275:2D to 3D conversion

2969:(3). Archived from

2882:10.1145/37402.37422

2794:1994math.....10208E

2517:Thrun, Sebastian. "

2258:1980OptEn..19..139W

2246:Optical Engineering

2060:2019Senso..19.1655M

1702:gesture recognition

1689:Reverse engineering

1096:can be worked out.

959:are coordinates of

232:image understanding

88:Pseudunela viatoris

3489:Video surveillance

3427:Landmark detection

3335:3D pose estimation

3320:Volumetric capture

3280:Gaussian splatting

3236:Object recognition

3150:Commercial systems

2578:2016-03-19 at the

2535:. pp. 33–40.

2450:10.1007/BF00133570

2266:10.1117/12.7972479

2107:2011-01-07 at the

1838:

1796:

1760:

1734:

1712:Problem Statement:

1582:

1526:

1476:Image registration

1461:Feature extraction

1455:Feature extraction

1441:

1379:

1323:

1267:Camera calibration


317:Shape-from-texture

309:Photometric Stereo

294:Shape-from-shading

291:

279:

221:for more details.

179:

131:computer animation

93:

3513:

3512:

3422:Image restoration

3365:Augmented reality

3330:

3329:

3310:4D reconstruction

3262:3D reconstruction

3155:Feature detection

2738:(Part A): 16–25.

2550:978-1-61284-429-9

2349:978-1-6654-9617-9

2183:978-1-4244-1630-1

2132:978-1-60198-285-8

2069:10.3390/s19071655

1992:10.1117/12.741321

1884:4D reconstruction

1818:alpha compositing

1685:Augmented reality

1677:Earth observation

1258:Image acquisition

1240:

1194:

1141:

$P$

$f$

$P$

886:

815:

749:

$P$

385:

384:

380:

234:. Typically, the

127:computer graphics

105:3D reconstruction

101:computer graphics

79:

78:


3543:

3437:Object detection

3402:Face recognition

3285:Shape from focus

3258:

3145:Digital geometry


2839:(1–2): 124–141.

2823:

2814:

2813:

2787:

2772:ACM Trans. Graph

2662:(4): 1189–1200.

2268:. Archived from

2158:. pp. 1–8.

1826:volume rendering

1763:Zero set Methods

195:structured light

112:reconstruction.

3536:Computer vision

3516:

3515:

3514:

3509:

3498:

3469:Robotic mapping

3417:Image denoising

3326:

3247:

3214:

3180:Motion analysis

3128:

3126:Computer vision

2580:Wayback Machine

2369:Visual Computer

2109:Wayback Machine

1672:virtual tourism

1652:Robotic mapping

698:. According to

300:, the depth of

252:

227:

225:Passive methods

167:

147:virtual reality

139:medical imaging

135:computer vision

118:

97:computer vision

75:

69:

66:

63:

54:

50:

43:

32:medical imaging

3494:Video tracking

3491:

3486:

3481:

3476:

3471:

3466:

3464:Remote sensing

3375:Blob detection

3300:View synthesis

3231:Motion capture

3069:External links

3067:

3066:

3065:

3057:

3056:External links

3054:

3051:

3050:

3013:

3002:(6): 689–702.

2986:

2946:

2924:

2887:

2873:10.1.1.545.613

2866:(4): 163–169.

2850:

2815:

2759:

2732:Neurocomputing

2444:(4): 321–331.

2421:

2396:

2355:

2348:

2312:

2301:(1–3): 17–45.

2278:

2275:on 2014-03-27.

2252:(1): 138–141.

2229:

2216:

2197:

2182:

2165:10.1.1.78.5303

1899:Photogrammetry

1777:marching cubes

1758:Marching Cubes

1748:

1747:

1744:

1709:

1708:

1695:

1693:Motion capture

1690:

1687:

1682:

1679:

1674:

1665:

1662:

1657:

1654:

1649:

1646:Free-viewpoint

1474:Main article:

1471:

1468:

1459:Main article:

1271:Main article:

436:optical center

412:

409:

397:viewing angles

383:

382:

378:(October 2021)

360:

358:

351:

342:Main article:

339:

336:

255:Monocular cues

251:

248:

240:digital images

226:

223:

207:time-of-flight

166:

165:Active methods

163:

3506:Main category

3459:Pose tracking

2976:on 2017-09-22

2972:

2968:

2964:

2957:

2950:

2947:

2942:

2935:

2928:

2925:

2920:

2916:

2911:

2910:10.1.1.5.3672

2906:

2902:

2898:

2891:

2888:

2883:

2879:

2874:

2869:

2865:

2861:

2854:

2851:

2846:

2842:

2838:

2834:

2833:

2828:

2827:Dey, Tamal K.

1706:hand tracking

1703:

1699:

1696:

1694:

1691:

1688:

1686:

1683:

1680:

1678:

1675:

1673:

1669:

1666:

1663:

1661:

1658:

1656:City planning

1655:

1653:

1650:

1647:

1644:

1641:

1638:

1637:

1636:

1633:

1632:

1631:Applications:

417:stereo vision

410:

408:

405:

400:

398:

395:in different

394:

390:

389:visual system

379:

374:

370:

369:

364:

359:

350:

349:

345:

338:Stereo vision

215:3D ultrasound

212:

208:

204:

201:methods emit

200:

196:

192:

188:

184:

176:

175:echo sounding

171:

164:

162:

160:

156:

152:

151:digital media

3397:Eye tracking

3261:

3253:Applications

3219:Technologies

3205:Segmentation

3022:

3016:

2999:

2995:

2989:

2978:. Retrieved

2971:the original

2966:

2963:Eurographics

2962:

2949:

2940:

2927:

2903:(2): 71–78.

2900:

2896:

2890:

2863:

2859:

2853:

2836:

2830:

2785:math/9410208

2778:(1): 43–72.

2775:

2771:

2735:

2731:

2702:

2689:

2676:

2659:

2655:

2649:

2636:

2622:(1): 42–49.

2619:

2613:

2603:

2567:

2532:

2526:

2513:

2500:

2479:

2466:

2441:

2437:

2424:

2408:. IEEE/CVF.

2405:

2399:

2372:

2368:

2358:

2321:

2315:

2298:

2294:

2281:

2270:the original

2249:

2245:

2232:

2219:

2210:

2200:

2155:

2149:

2122:

2116:

2096:

2051:

2047:

2021:

2008:

1983:

1963:. Retrieved

1961:. 2017-05-27

1958:

1949:

1936:

1923:

1844:

1840:

1839:

1802:

1799:VR Technique

1798:

1797:

1780:

1772:

1768:

1766:

1762:

1761:

1749:

1736:

1735:

1720:

1719:

1715:

1711:

1710:

1634:

1630:

1629:

1608:

1599:

1479:

1464:

1276:

1261:

1253:

1207:

1154:

1101:

1098:

1075:

1005:

1001:focal length

899:

828:

762:

704:

700:trigonometry

433:

414:

404:optical axis

401:

386:

377:

366:

330:

329:

316:

315:

307:

306:

293:

292:

253:

228:

191:rangefinders

180:

119:

104:

94:

86:

70:October 2019

67:

59:

3305:Visual hull

3200:Researchers

2753:10045/42544

2054:(7): 1655.

1904:Stereoscopy

1859:3D modeling

1732:(25 Points)

1681:Archaeology

1596:Restoration

702:relations,

325:perspective

287:visual hull

260:silhouettes

219:3D scanning

209:lasers to

199:radiometric

3531:3D imaging

3520:Categories

3175:Morphology

3133:Categories

2980:2018-10-09

2491:1907.04124

2415:2308.14152

2331:2202.08010

2227:." (1970).

1965:2017-05-27

1959:GarageFarm

1915:References

1874:3D scanner

1841:Voxel Grid

321:distortion

211:microwaves

18:3D mapping

3039:cite book

2905:CiteSeerX

2868:CiteSeerX

2391:1432-2315

2160:CiteSeerX

2141:607557354

1889:Depth map

1029:−

799:−

421:disparity

183:depth map

3210:Software

3170:Learning

3160:Geometry

3140:Datasets

2576:Archived

2458:12849354

2192:17571812

2105:Archived

2088:30959936

2000:62548928

1853:See also

1642:Medicine

244:3D model

203:radiance

2810:1600979

2790:Bibcode

2559:1189988

2474:, 2010.

2254:Bibcode

2079:6479490

2056:Bibcode

2048:Sensors

373:Discuss

264:shading

60:updated

1894:Kinect

1820:) and

1664:Gaming

900:where

302:normal

236:sensor

217:. See

34:, see

2974:(PDF)

2959:(PDF)

2937:(PDF)

2806:S2CID

2780:arXiv

2728:(PDF)

2555:S2CID

2486:arXiv

2454:S2CID

2434:(PDF)

2410:arXiv

2326:arXiv

2291:(PDF)

2273:(PDF)

2242:(PDF)

2188:S2CID

1996:S2CID

1847:voxel

363:split

3045:link

2545:ISBN

2387:ISSN

2344:ISBN

2178:ISBN

2137:OCLC

2127:ISBN

2084:PMID

1822:NPVR

1704:and

1670:and

1536:and

1333:and

631:and

548:and

323:and

123:CAGD

99:and

3027:doi

3004:doi

2915:doi

2878:doi

2841:doi

2798:doi

2748:hdl

2740:doi

2736:150

2664:doi

2624:doi

2537:doi

2446:doi

2377:doi

2336:doi

2303:doi

2262:doi

2170:doi

2074:PMC

2064:doi

1988:doi

1814:MIP

999:is

371:. (

285:A "

213:or

173:3D

125:),

95:In

3522::

3041:}}

3037:{{

3000:38

2998:.

2967:25

2965:.

2961:.

2939:.

2913:.

2901:26

2899:.

2876:.

2864:21

2862:.

2837:35

2835:.

2818:^

2804:.

2796:.

2788:.

2776:13

2774:.

2762:^

2746:.

2734:.

2730:.

2715:^

2660:63

2658:.

2620:11

2618:.

2612:.

2587:^

2553:.

2543:.

2452:.

2440:.

2436:.

2385:.

2373:38

2371:.

2367:.

2342:.

2334:.

2299:17

2297:.

2293:.

2260:.

2250:19

2248:.

2244:.

2209:.

2186:.

2176:.

2168:.

2135:.

2082:.

2072:.

2062:.

2052:19

2050:.

2046:.

2034:^

1994:.

1974:^

1957:.

1828:).

1700:,

375:)

262:,

161:.

149:,

145:,

141:,

137:,

133:,

129:,

103:,

3118:e

3111:t

3104:v

3047:)

3033:.

3029::

3010:.

3006::

2983:.

2921:.

2917::

2884:.

2880::

2847:.

2843::

2812:.

2800::

2792::

2782::

2756:.

2750::

2742::

2670:.

2666::

2626::

2561:.

2539::

2494:.

2488::

2460:.

2448::

2442:1

2418:.

2412::

2393:.

2379::

2352:.

2338::

2328::

2309:.

2305::

2264::

2256::

2194:.

2172::

2143:.

2090:.

2066::

2058::

2002:.

1990::

1968:.

1781:f

1773:f

1769:S

In the image coordinate system $O_1uv$, the point $P(x_p, y_p, z_p)$ has image points $P_1(u_1, v_1)$ and $P_2(u_2, v_2)$, with $v_1 = v_2$:

$$u_1 = f\,\frac{x_p}{z_p}$$

$$u_2 = f\,\frac{x_p - b}{z_p}$$

$$v_1 = v_2 = f\,\frac{y_p}{z_p}$$

where $(x_p, y_p, z_p)$ are coordinates of $P$ and $f$ is focal length. The disparity is

$$d = u_1 - u_2 = f\,\frac{b}{z_p}$$

so the coordinates of $P$ can be worked out:

$$x_p = \frac{b\,u_1}{d}$$

$$y_p = \frac{b\,v_1}{d}$$

$$z_p = \frac{b\,f}{d}$$
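The stereo relations $d = u_1 - u_2$, $x_p = b u_1 / d$, $y_p = b v_1 / d$ and $z_p = b f / d$ can be checked numerically with a small round trip. The following is only a minimal sketch: the function names `project` and `triangulate` are hypothetical, $b$ is assumed to be the horizontal offset between the two cameras, and the cameras are assumed to be ideal and rectified so that $v_1 = v_2$.

```python
def project(point, f, b):
    """Project P = (x_p, y_p, z_p) into both cameras.

    Assumes ideal rectified cameras: the second camera is shifted by b
    along the x axis, and f is the shared focal length.
    Returns (u1, v1, u2, v2).
    """
    x_p, y_p, z_p = point
    if z_p <= 0:
        raise ValueError("point must lie in front of the cameras (z_p > 0)")
    u1 = f * x_p / z_p
    u2 = f * (x_p - b) / z_p
    v = f * y_p / z_p  # v1 == v2 for rectified cameras
    return u1, v, u2, v


def triangulate(u1, v1, u2, f, b):
    """Recover (x_p, y_p, z_p) from matched image points via the disparity."""
    d = u1 - u2  # disparity
    if d <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    x_p = b * u1 / d
    y_p = b * v1 / d
    z_p = b * f / d
    return x_p, y_p, z_p


if __name__ == "__main__":
    f, b = 500.0, 0.1          # illustrative values, not taken from the article
    P = (0.2, 0.1, 2.0)
    u1, v1, u2, v2 = project(P, f, b)
    print(triangulate(u1, v1, u2, f, b))
```

Depth is inversely proportional to disparity, so a small matching error in $u_1 - u_2$ produces a large depth error for distant points. This is one reason practical systems treat far-range stereo depth with caution.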

91:.

72:)

68:(

62:.

42:.

20:)
